Tachyon: Reliable, Memory Speed Storage for Cluster Computing Frameworks

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Tachyon: Reliable, Memory Speed Storage for Cluster Computing Frameworks

The MIT Faculty has made this article openly available. Please share how this access benefits you. Your story matters.

Citation: Haoyuan Li, Ali Ghodsi, Matei Zaharia, Scott Shenker, and Ion Stoica. 2014. Tachyon: Reliable, Memory Speed Storage for Cluster Computing Frameworks. In Proceedings of the ACM Symposium on Cloud Computing (SOCC '14). ACM, New York, NY, USA, Article 6, 15 pages.
As Published: http://dx.doi.org/10.1145/2670979.2670985
Publisher: Association for Computing Machinery (ACM)
Version: Author's final manuscript
Citable link: http://hdl.handle.net/1721.1/101090
Terms of Use: Creative Commons Attribution-Noncommercial-Share Alike
Detailed Terms: http://creativecommons.org/licenses/by-nc-sa/4.0/

Haoyuan Li, Ali Ghodsi (University of California, Berkeley); Matei Zaharia (MIT, Databricks); Scott Shenker, Ion Stoica (University of California, Berkeley)

Abstract

Tachyon is a distributed file system enabling reliable data sharing at memory speed across cluster computing frameworks. While caching today improves read workloads, writes are either network or disk bound, as replication is used for fault-tolerance. Tachyon eliminates this bottleneck by pushing lineage, a well-known technique, into the storage layer.

Even replicating the data in memory can lead to a significant drop in the write performance, as both the latency and throughput of the network are typically much worse than that of local memory.

Slow writes can significantly hurt the performance of job pipelines, where one job consumes the output of another. These pipelines are regularly produced by workflow managers such as Oozie [4] and Luigi [7], e.g., to perform data
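The lineage idea in the abstract can be illustrated with a small sketch: instead of replicating every write, a store remembers which function and inputs produced each dataset and recomputes lost data on demand. This is a toy, single-process illustration and not Tachyon's implementation; the class `LineageStore` and its methods `put_source`, `derive`, `evict`, and `get` are hypothetical names chosen for this example.

```python
from typing import Callable, Dict, List, Tuple


class LineageStore:
    """Toy in-memory store that records how each dataset was derived.

    Hypothetical illustration only; not Tachyon's API.
    """

    def __init__(self) -> None:
        self._data: Dict[str, list] = {}
        # dataset name -> (function, names of input datasets)
        self._lineage: Dict[str, Tuple[Callable[..., list], List[str]]] = {}

    def put_source(self, name: str, records: list) -> None:
        """Store an input dataset (assumed to be durable somewhere else)."""
        self._data[name] = list(records)

    def derive(self, name: str, fn: Callable[..., list], inputs: List[str]) -> None:
        """Compute a dataset and record its lineage instead of replicating it."""
        self._lineage[name] = (fn, list(inputs))
        self._data[name] = fn(*(self.get(i) for i in inputs))

    def evict(self, name: str) -> None:
        """Simulate losing the in-memory copy, e.g. after a node failure."""
        self._data.pop(name, None)

    def get(self, name: str) -> list:
        """Return a dataset, recomputing it from lineage if it was lost."""
        if name not in self._data:
            if name not in self._lineage:
                raise KeyError(f"{name} is lost and has no recorded lineage")
            fn, inputs = self._lineage[name]
            self._data[name] = fn(*(self.get(i) for i in inputs))
        return self._data[name]


store = LineageStore()
store.put_source("logs", ["ok", "error: disk", "ok", "error: net"])
store.derive("errors", lambda logs: [l for l in logs if l.startswith("error")], ["logs"])
store.derive("count", lambda errs: [len(errs)], ["errors"])

# Lose both derived datasets, then recover them by re-running the lineage.
store.evict("errors")
store.evict("count")
recovered = store.get("count")
```

The sketch assumes the derivation functions are deterministic, which is what makes recomputation a safe substitute for a replica.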

FreeBSD File Formats Manual: libarchive-formats (5)

libarchive-formats (5) FreeBSD File Formats Manual

NAME
libarchive-formats - archive formats supported by the libarchive library

DESCRIPTION
The libarchive(3) library reads and writes a variety of streaming archive formats. Generally speaking, all of these archive formats consist of a series of "entries". Each entry stores a single file system object, such as a file, directory, or symbolic link.

The following provides a brief description of each format supported by libarchive, with some information about recognized extensions or limitations of the current library support. Note that just because a format is supported by libarchive does not imply that a program that uses libarchive will support that format. Applications that use libarchive specify which formats they wish to support, though many programs do use libarchive convenience functions to enable all supported formats.

Tar Formats
The libarchive(3) library can read most tar archives. However, it only writes POSIX-standard "ustar" and "pax interchange" formats.

All tar formats store each entry in one or more 512-byte records. The first record is used for file metadata, including filename, timestamp, and mode information, and the file data is stored in subsequent records. Later variants have extended this by either appropriating undefined areas of the header record, extending the header to multiple records, or by storing special entries that modify the interpretation of subsequent entries.

gnutar
The libarchive(3) library can read GNU-format tar archives. It currently supports the most popular GNU extensions, including modern long filename and linkname support, as well as atime and ctime data. The libarchive library does not support multi-volume archives, nor the old GNU long filename format.
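The 512-byte record layout described above can be inspected directly. The sketch below uses Python's standard `tarfile` module rather than libarchive (an assumption made to keep the example self-contained) to write a one-entry "ustar" archive and read the filename, mode, and magic fields out of the first record. The helper name `build_ustar_archive` is hypothetical.

```python
import io
import tarfile


def build_ustar_archive(name: str, payload: bytes) -> bytes:
    """Write a single-file POSIX ustar archive into memory and return its bytes."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w", format=tarfile.USTAR_FORMAT) as tar:
        info = tarfile.TarInfo(name=name)
        info.size = len(payload)
        info.mode = 0o644
        info.mtime = 0
        tar.addfile(info, io.BytesIO(payload))
    return buf.getvalue()


payload = b"hello, tar\n"
archive = build_ustar_archive("hello.txt", payload)

# The first 512-byte record is the header holding the entry's metadata.
header = archive[:512]
entry_name = header[0:100].rstrip(b"\0").decode("ascii")  # name field
entry_mode = int(header[100:107], 8)                      # mode field, octal text
magic = header[257:262]                                   # "ustar" marker

# File data starts in the record that follows the header.
data = archive[512:512 + len(payload)]
```

Because every entry is padded to a whole number of records, the total archive length is always a multiple of 512 bytes.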

Discretized Streams: Fault-Tolerant Streaming Computation at Scale

Slide 1/30: Discretized Streams: Fault-Tolerant Streaming Computation at Scale
Matei Zaharia, Tathagata Das, Haoyuan Li, Timothy Hunter, Scott Shenker, Ion Stoica
University of California, Berkeley
Published in SOSP '13

Slide 2/30: Motivation
• Faults and stragglers are inevitable in large clusters running "big data" applications.
• Streaming applications must recover from these quickly.
• Current distributed streaming systems, including Storm, TimeStream, and MapReduce Online, provide fault recovery in an expensive manner.
  – Involves hot replication, which requires 2x hardware, or upstream backup, which has a long recovery time.

Slide 3/30: Previous Methods
• Hot replication
  – two copies of each node, 2x hardware.
  – a straggler will slow down both replicas.
• Upstream backup
  – nodes buffer sent messages and replay them to a new node.
  – stragglers are treated as failures, resulting in a long recovery step.
• Conclusion: need for a system which overcomes these challenges

Slide 4/30
• Voila! D-Streams

Slide 5/30: Computation Model
• Streaming computations are treated as a series of deterministic batch computations on small time intervals.
• Data received in each interval is stored reliably across the cluster to form input datasets.
• At the end of each interval, the dataset is subjected to deterministic parallel operations, and two things can happen:
  – a new dataset representing program output, which is pushed out to stable storage
  – intermediate state stored as resilient distributed datasets (RDDs)

Slide 6/30: D-Stream processing model

Slide 7/30: What are D-Streams?
• sequence of immutable, partitioned datasets (RDDs) that can be acted on by deterministic transformations
• transformations yield new D-Streams, and may create intermediate state in the form of RDDs
• Example:
  – pageViews = readStream("http://...", "1s")
  – ones = pageViews.map(event => (event.url, 1))
  – counts = ones.runningReduce((a, b) => a + b)

Slide 8/30: High-level overview of Spark Streaming system

Recovery
• D-Streams & RDDs track their lineage, that is, the graph of deterministic operations used to build them.
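The computation model on these slides (cut the input into small time intervals, then run a deterministic batch step per interval while carrying state forward) can be sketched in plain Python. This is not the Spark Streaming API; `discretize` and `running_counts` are hypothetical helpers that mimic the `map` and `runningReduce` steps of the page-view example on a single machine.

```python
from collections import Counter
from typing import Dict, List, Tuple

Event = Tuple[float, str]  # (timestamp in seconds, url)


def discretize(events: List[Event], interval: float) -> List[List[str]]:
    """Group events into consecutive batches, one per time interval."""
    batches: Dict[int, List[str]] = {}
    for ts, url in events:
        batches.setdefault(int(ts // interval), []).append(url)
    if not batches:
        return []
    # Emit empty batches for intervals in which no data arrived.
    return [batches.get(i, []) for i in range(min(batches), max(batches) + 1)]


def running_counts(batches: List[List[str]]) -> List[Dict[str, int]]:
    """Apply a deterministic batch step per interval, carrying state forward."""
    state: Counter = Counter()
    snapshots: List[Dict[str, int]] = []
    for batch in batches:
        ones = Counter(batch)   # plays the role of map(event => (url, 1))
        state = state + ones    # plays the role of runningReduce(a + b)
        snapshots.append(dict(state))
    return snapshots


events = [(0.2, "/a"), (0.7, "/b"), (1.1, "/a"), (3.5, "/a")]
batches = discretize(events, interval=1.0)
counts = running_counts(batches)
```

Each interval produces a new state value rather than mutating the previous one, which mirrors the immutable-dataset property the slides attribute to D-Streams.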

The Design and Implementation of Declarative Networks

by Boon Thau Loo
B.S. (University of California, Berkeley) 1999
M.S. (Stanford University) 2000

A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the GRADUATE DIVISION of the UNIVERSITY OF CALIFORNIA, BERKELEY

Committee in charge:
Professor Joseph M. Hellerstein, Chair
Professor Ion Stoica
Professor John Chuang

Fall 2006

The dissertation of Boon Thau Loo is approved: Professor Joseph M. Hellerstein, Chair; Professor Ion Stoica; Professor John Chuang. University of California, Berkeley, Fall 2006.

Copyright © 2006 by Boon Thau Loo

Abstract

In this dissertation, we present the design and implementation of declarative networks. Declarative networking proposes the use of a declarative query language for specifying and implementing network protocols, and employs a dataflow framework at runtime for communication and maintenance of network state. The primary goal of declarative networking is to greatly simplify the process of specifying, implementing, deploying and evolving a network design. In addition, declarative networking serves as an important step towards an extensible, evolvable network architecture that can support flexible, secure and efficient deployment of new network protocols.

Our main contributions are as follows. First, we formally define the Network Datalog (NDlog) language based on extensions to the Datalog recursive query language, and propose NDlog as a Domain Specific Language for programming network protocols.
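To give a feel for what a recursive Datalog-style query computes, the sketch below evaluates the textbook reachability program (`path(S, D) :- link(S, D).` and `path(S, D) :- link(S, Z), path(Z, D).`) by repeating the rules until no new facts appear. The rule text, the function name `reachable`, and the naive fixpoint loop are assumptions for illustration; they are not NDlog syntax or the dissertation's dataflow runtime.

```python
from typing import Set, Tuple

Edge = Tuple[str, str]


def reachable(links: Set[Edge]) -> Set[Edge]:
    """Naive fixpoint evaluation of the two reachability rules.

    path(S, D) :- link(S, D).
    path(S, D) :- link(S, Z), path(Z, D).
    """
    paths: Set[Edge] = set(links)  # base rule: every link is a path
    while True:
        # Recursive rule: join link(S, Z) with path(Z, D) on Z.
        derived = {(s, d) for (s, z) in links for (z2, d) in paths if z == z2}
        if derived <= paths:       # no new facts: fixpoint reached
            return paths
        paths |= derived


links = {("a", "b"), ("b", "c"), ("c", "d")}
paths = reachable(links)
```

The loop terminates because the set of possible `(source, destination)` pairs is finite and facts are only ever added.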

Apache Spark: a Unified Engine for Big Data Processing

contributed articles
DOI:10.1145/2934664

This open source computing framework unifies streaming, batch, and interactive big data workloads to unlock new applications.

BY MATEI ZAHARIA, REYNOLD S. XIN, PATRICK WENDELL, TATHAGATA DAS, MICHAEL ARMBRUST, ANKUR DAVE, XIANGRUI MENG, JOSH ROSEN, SHIVARAM VENKATARAMAN, MICHAEL J. FRANKLIN, ALI GHODSI, JOSEPH GONZALEZ, SCOTT SHENKER, AND ION STOICA

for interactive SQL queries and Pregel [11] for iterative graph algorithms. In the open source Apache Hadoop stack, systems like Storm [1] and Impala [9] are also specialized. Even in the relational database world, the trend has been to move away from "one-size-fits-all" systems [18]. Unfortunately, most big data applications need to combine many different processing types. The very nature of "big data" is that it is diverse and messy; a typical pipeline will need MapReduce-like code for data loading, SQL-like queries, and iterative machine learning. Specialized engines can thus create both complexity and inefficiency; users must stitch together disparate systems, and some applications simply cannot be expressed efficiently in any engine.

In 2009, our group at the University of California, Berkeley, started the Apache Spark project to design a unified engine for distributed data processing. Spark has a programming model similar to MapReduce but extends it with a data-sharing abstraction called "Resilient Distributed Datasets," or RDDs [25]. Using this simple extension, Spark can capture a wide range of processing workloads that previously needed separate engines, including SQL, streaming, machine learning, and graph processing [2, 26, 6] (see Figure 1).

Aditya Akella

ADITYA AKELLA

Computer Science Department
Carnegie Mellon University
5000 Forbes Avenue
Pittsburgh, PA 15232
http://www.cs.cmu.edu/~aditya
Phone: 412-818-3779
Fax: 412-268-5576 (Attn: Aditya Akella)

Education
PhD in Computer Science, May 2005 (expected)
Carnegie Mellon University, Pittsburgh, PA
Dissertation: "An Integrated Approach to Optimizing Internet Performance"
Advisor: Prof. Srinivasan Seshan

Bachelor of Technology in Computer Science and Engineering, May 2000
Indian Institute of Technology (IIT), Madras, India

Honors and Awards
IBM PhD Fellowship, 2003-04 & 2004-05
Graduated third among all IIT Madras undergraduates, 2000
Institute Merit Prize, IIT Madras, 1996-97
19th rank, All India Joint Entrance Examination for the IITs, 1996
Gold medals, Regional Mathematics Olympiad, India, 1993-94 & 1994-95
National Talent Search Examination (NTSE) Scholarship, India, 1993-94

Research Interests
Computer Systems and Internetworking

PhD Dissertation
An Integrated Approach to Optimizing Internet Performance

In my thesis research, I adopted a systematic approach to understand how to optimize the performance of well-connected Internet end-points, such as universities, large enterprises and data centers. I showed that constrained bottlenecks inside and between carrier networks in the Internet could limit the performance of such end-points. I observed that a clever end point-based strategy, called Multihoming Route Control, can help end-points route around these bottlenecks, and thereby improve performance. Furthermore, I showed that the Internet's topology, routing and trends in its growth may essentially worsen the wide-area congestion in the future. To this end, I proposed changes to the Internet's topology in order to guarantee good future performance.

CDE: Run Any Linux Application On-Demand Without Installation

Philip J. Guo
Stanford University

Abstract

There is a huge ecosystem of free software for Linux, but since each Linux distribution (distro) contains a different set of pre-installed shared libraries, filesystem layout conventions, and other environmental state, it is difficult to create and distribute software that works without hassle across all distros. Online forums and mailing lists are filled with discussions of users' troubles with compiling, installing, and configuring Linux software and their myriad of dependencies. To address this ubiquitous problem, we have created an open-source tool called CDE that automatically packages up the Code, Data, and Environment required to run a set of x86-Linux programs on other x86-Linux machines. Creating a CDE package is as simple as running the target application under CDE's monitoring, and executing a CDE package requires no installation, configuration, or root permissions.

with compiling, installing, and configuring software and their myriad of dependencies. For example, the official Google Chrome help forum for "install/uninstall issues" has over 5800 threads.

In addition, a study of US labor statistics predicts that by 2012, 13 million American workers will do programming in their jobs, but amongst those, only 3 million will be professional software developers [24]. Thus, there are potentially millions of people who still need to get their software to run on other machines but who are unlikely to invest the effort to create one-click installers or wrestle with package managers, since their primary job is not to release production-quality software. For example:

• System administrators often hack together ad-hoc utilities comprised of shell scripts and custom-compiled versions of open-source software, in or-

IDOL Keyview PDF Export SDK 12.6 C Programming Guide

KeyView
Software Version 12.6
PDF Export SDK C Programming Guide
Document Release Date: June 2020
Software Release Date: June 2020

Legal notices

Copyright notice
© Copyright 1997-2020 Micro Focus or one of its affiliates.
The only warranties for products and services of Micro Focus and its affiliates and licensors ("Micro Focus") are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Micro Focus shall not be liable for technical or editorial errors or omissions contained herein. The information contained herein is subject to change without notice.

Documentation updates
The title page of this document contains the following identifying information:
• Software Version number, which indicates the software version.
• Document Release Date, which changes each time the document is updated.
• Software Release Date, which indicates the release date of this version of the software.
To check for updated documentation, visit https://www.microfocus.com/support-and-services/documentation/.

Support
Visit the MySupport portal to access contact information and details about the products, services, and support that Micro Focus offers. This portal also provides customer self-solve capabilities. It gives you a fast and efficient way to access interactive technical support tools needed to manage your business. As a valued support customer, you can benefit by using the MySupport portal to:
• Search for knowledge documents of interest
• Access product documentation
• View software vulnerability alerts
• Enter into discussions with other software customers
• Download software patches
• Manage software licenses, downloads, and support contracts
• Submit and track service requests
• Contact customer support
• View information about all services that Support offers
Many areas of the portal require you to sign in.

Forcepoint DLP Supported File Formats and Size Limits

Supported File Formats and Size Limits | Forcepoint DLP | v8.8.1

This article provides a list of the file formats that can be analyzed by Forcepoint DLP, file formats from which content and metadata can be extracted, and the file size limits for network, endpoint, and discovery functions. See:
● Supported File Formats
● File Size Limits

© 2021 Forcepoint LLC

Supported File Formats

The following tables list the file formats supported by Forcepoint DLP. File formats are in alphabetical order by format group.
● Archive Formats
● Backup Formats
● Business Intelligence (BI) and Analysis Formats
● Computer-Aided Design Formats
● Cryptography Formats
● Database Formats
● Desktop publishing formats
● eBook/Audio book formats
● Executable formats
● Font formats
● Graphics formats - general
● Graphics formats - vector graphics
● Library formats
● Log formats
● Mail formats
● Multimedia formats
● Object formats
● Presentation formats
● Project management formats
● Spreadsheet formats
● Text and markup formats
● Word processing formats
● Miscellaneous formats

Supported file formats are added and updated frequently.

Key to support tables
Symbol  Description
Y       The format is supported
N       The format is not supported
P       Partial metadata

Comparison of Spark Resource Managers and Distributed File Systems

2016 IEEE International Conferences on Big Data and Cloud Computing (BDCloud), Social Computing and Networking (SocialCom), Sustainable Computing and Communications (SustainCom)

Comparison of Spark Resource Managers and Distributed File Systems

Solmaz Salehian, Yonghong Yan
Department of Computer Science & Engineering, Oakland University, Rochester, MI 48309-4401, United States

Abstract: The rapid growth in volume, velocity, and variety of data produced by applications of scientific computing, commercial workloads and cloud has led to Big Data. Traditional solutions of data storage, management and processing cannot meet the demands of this distributed data, so new execution models, data models and software systems have been developed to address the challenges of storing data in heterogeneous form, e.g. HDFS, NoSQL database, and for processing data in parallel and distributed fashion, e.g. MapReduce, Hadoop and Spark frameworks. This work comparatively studies the Apache Spark distributed data processing framework. Our study first discusses the resource management subsystems of Spark, and then reviews several of the distributed data storage options available to Spark.

Keywords: Big Data, Distributed File System, Resource

Section 4 provides information on the different resource management subsystems of Spark. In Section 5, four Distributed File Systems (DFSs) are reviewed. The conclusion is given in Section 6.

II. CHALLENGES IN BIG DATA

Although Big Data provides users with a lot of opportunities, creating an efficient software framework for Big Data has many challenges in terms of networking, storage, management, analytics, and ethics [5, 6].

Previous studies [6-11] have been carried out to review open challenges in Big Data.

Alluxio: A Virtual Distributed File System

by Haoyuan Li

A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley

Committee in charge:
Professor Ion Stoica, Co-chair
Professor Scott Shenker, Co-chair
Professor John Chuang

Spring 2018

Copyright 2018 by Haoyuan Li

Abstract

The world is entering the data revolution era. Along with the latest advancements of the Internet, Artificial Intelligence (AI), mobile devices, autonomous driving, and Internet of Things (IoT), the amount of data we are generating, collecting, storing, managing, and analyzing is growing exponentially. Storing and processing these data has exposed tremendous challenges and opportunities.

Over the past two decades, we have seen significant innovation in the data stack. For example, in the computation layer, the ecosystem started from the MapReduce framework, and grew to many different general and specialized systems such as Apache Spark for general data processing; Apache Storm and Apache Samza for stream processing; Apache Mahout for machine learning; TensorFlow and Caffe for deep learning; and Presto and Apache Drill for SQL workloads. There are more than a hundred popular frameworks for various workloads and the number is growing. Similarly, the storage layer of the ecosystem grew from the Apache Hadoop Distributed File System (HDFS) to a variety of choices as well, such as file systems, object stores, blob stores, key-value systems, and NoSQL databases to realize different tradeoffs in cost, speed and semantics.

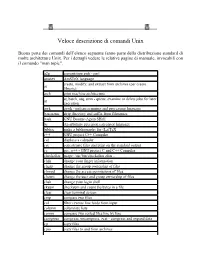

Unix Command

Quick description of Unix commands

Most of the commands in the following list are part of the standard distribution of many Unix architectures. For details, see the corresponding manual pages, which can be invoked with the command "man topic".

a2p         awk to perl converter
amstex      AmSTeX language
ar          create, modify, and extract from archives (for creating libraries)
arch        print machine architecture
at          at, batch, atq, atrm - queue, examine or delete jobs for later execution
awk         gawk - pattern scanning and processing language
basename    strip directory and suffix from filenames
bash        GNU Bourne-Again SHell
bc          An arbitrary precision calculator language
bibtex      make a bibliography for (La)TeX
c++         GNU project C++ Compiler
cal         displays a calendar
cat         concatenate files and print on the standard output
cc          gcc, g++ - GNU project C and C++ Compiler
checkalias  usage: /usr/bin/checkalias alias ..
chfn        change your finger information
chgrp       change the group ownership of files
chmod       change the access permissions of files
chown       change the user and group ownership of files
chsh        change your login shell
cksum       checksum and count the bytes in a file
clear       clear terminal screen
cmp         compare two files
col         filter reverse line feeds from input
column      columnate lists
comm        compare two sorted files line by line
compress    compress, uncompress, zcat - compress and expand data
cp          copy files
cpio        copy files to and from archives
csh         tcsh - C shell with file name completion and command line editing
csplit      split a file into sections determined by context lines
cut         remove sections from each