Memory and Cgroup 35 3.1 Storing Data in Main Memory



Recommended publications


Virtual Memory

Chapter 4 Virtual Memory

Linux processes execute in a virtual environment that makes it appear as if each process had the entire address space of the CPU available to itself. This virtual address space extends from address 0 all the way to the maximum address. On a 32-bit platform, such as IA-32, the maximum address is 2^32 − 1 or 0xffffffff. On a 64-bit platform, such as IA-64, this is 2^64 − 1 or 0xffffffffffffffff. While it is obviously convenient for a process to be able to access such a huge address space, there are really three distinct, but equally important, reasons for using virtual memory.

1. Resource virtualization. On a system with virtual memory, a process does not have to concern itself with the details of how much physical memory is available or which physical memory locations are already in use by some other process. In other words, virtual memory takes a limited physical resource (physical memory) and turns it into an infinite, or at least an abundant, resource (virtual memory).
2. Information isolation. Because each process runs in its own address space, it is not possible for one process to read data that belongs to another process. This improves security because it reduces the risk of one process being able to spy on another process and, e.g., steal a password.
3. Fault isolation. Processes with their own virtual address spaces cannot overwrite each other’s memory. This greatly reduces the risk of a failure in one process triggering a failure in another process. That is, when a process crashes, the problem is generally limited to that process alone and does not cause the entire machine to go down.
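The maximum addresses quoted above follow directly from the address width. As a minimal sketch (the helper name `max_virtual_address` is made up for illustration and does not come from the excerpt), the arithmetic can be checked like this:

```python
def max_virtual_address(bits: int) -> int:
    """Return the highest address of a flat address space that is `bits` wide."""
    # An n-bit address can take 2**n distinct values, numbered 0 .. 2**n - 1.
    return (1 << bits) - 1


if __name__ == "__main__":
    for bits in (32, 64):
        # Print each limit in hexadecimal, the notation used in the excerpt.
        print(f"{bits}-bit: {max_virtual_address(bits):#x}")
```

This only illustrates the size of the nominal address space; how much of that range a process can actually use depends on the architecture and kernel configuration.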

NUMA-Aware Thread Migration for High Performance NVMM File Systems

NUMA-Aware Thread Migration for High Performance NVMM File Systems Ying Wang, Dejun Jiang and Jin Xiong SKL Computer Architecture, ICT, CAS; University of Chinese Academy of Sciences fwangying01, jiangdejun, [email protected]

Abstract: Emerging Non-Volatile Main Memories (NVMMs) provide persistent storage and can be directly attached to the memory bus, which allows building file systems on non-volatile main memory (NVMM file systems). Since file systems are built on memory, NUMA architecture has a large impact on their performance due to the presence of remote memory access and imbalanced resource usage. Existing works migrate thread and thread data on DRAM to solve these problems. Unlike DRAM, NVMM introduces extra latency and lifetime limitations. This results in expensive data migration for NVMM file systems on NUMA architecture. In this paper, we argue that NUMA-aware thread migration without migrating data is desirable for NVMM file systems. We propose NThread, a NUMA-aware thread migration module for NVMM file system.

out considering the NVMM usage on NUMA nodes. Besides, application threads accessing file system rely on the default operating system thread scheduler, which migrates thread only considering CPU utilization. These bring remote memory access and resource contentions to application threads when reading and writing files, and thus reduce the performance of NVMM file systems. We observe that when performing file reads/writes from 4 KB to 256 KB on a NVMM file system (NOVA [47] on NVMM), the average latency of accessing remote node increases by 65.5 % compared to accessing local node. The average bandwidth is reduced by

Security Assurance Requirements for Linux Application Container Deployments

NISTIR 8176 Security Assurance Requirements for Linux Application Container Deployments Ramaswamy Chandramouli Computer Security Division Information Technology Laboratory This publication is available free of charge from: https://doi.org/10.6028/NIST.IR.8176 October 2017 U.S. Department of Commerce Wilbur L. Ross, Jr., Secretary National Institute of Standards and Technology Walter Copan, NIST Director and Under Secretary of Commerce for Standards and Technology National Institute of Standards and Technology Internal Report 8176 37 pages (October 2017)

Certain commercial entities, equipment, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. Such identification is not intended to imply recommendation or endorsement by NIST, nor is it intended to imply that the entities, materials, or equipment are necessarily the best available for the purpose.

There may be references in this publication to other publications currently under development by NIST in accordance with its assigned statutory responsibilities. The information in this publication, including concepts and methodologies, may be used by federal agencies even before the completion of such companion publications. Thus, until each publication is completed, current requirements, guidelines, and procedures, where they exist, remain operative. For planning and transition purposes, federal agencies may wish to closely follow the development of these new publications by NIST.

Linux Physical Memory Analysis

Linux Physical Memory Analysis Paul Movall International Business Machines Corporation 3605 Highway 52N, Rochester, MN Ward Nelson International Business Machines Corporation 3605 Highway 52N, Rochester, MN Shaun Wetzstein International Business Machines Corporation 3605 Highway 52N, Rochester, MN

Abstract We present a tool suite for analysis of physical memory usage within the Linux kernel environment. This tool suite can be used to collect and analyze how the physical memory within a Linux environment is being used.

Embedded subsystems are common in today's computer systems. These embedded subsystems range from the very simple to the very complex. In such embedded systems, memory is scarce and swap is non-existent. When adapting Linux for use in this environment, we needed to keep a close eye on physical memory usage.

A significant amount of this information can also be found in the /proc filesystem provided by the kernel. These /proc entries can be used to obtain many statistics including several related to memory usage. For example, /proc/<pid>/maps can be used to display a process' virtual memory map. Likewise, the contents of /proc/<pid>/status can be used to retrieve statistics about virtual memory usage as well as the Resident Set Size (RSS). In most cases, this process level detail is sufficient to analyze memory usage. In systems that do not have backing store, more details are often needed to analyze memory usage. For example, it's useful to know which pages in a process' address map are resident, not just how many. This information can be used to get some clues on the usage of a shared library.
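To make the /proc/<pid>/status remark concrete, here is a minimal sketch of pulling the Resident Set Size out of that file. The function names `parse_status` and `resident_set_kib` are illustrative and not part of the tool suite described in the paper; the sketch assumes the usual `Key:<whitespace>value` layout of the status file and a `VmRSS` value reported in kB.

```python
from pathlib import Path
from typing import Dict, Optional


def parse_status(text: str) -> Dict[str, str]:
    """Split the 'Key: value' lines of a /proc/<pid>/status dump into a dict."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:  # ignore lines that carry no 'Key:' prefix
            fields[key.strip()] = value.strip()
    return fields


def resident_set_kib(status_text: str) -> Optional[int]:
    """Return the VmRSS value in kB, or None if the field is absent."""
    value = parse_status(status_text).get("VmRSS")
    if value is None:  # e.g. kernel threads have no VmRSS line
        return None
    return int(value.split()[0])  # the value looks like '1234 kB'


if __name__ == "__main__":
    status_path = Path("/proc/self/status")  # only present on Linux
    if status_path.exists():
        print("RSS of this process (kB):", resident_set_kib(status_path.read_text()))
```

Keeping the parser separate from the file access means it can be exercised on captured text from another machine, which is often how embedded targets are analyzed.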

Multicore Operating-System Support for Mixed Criticality∗

Multicore Operating-System Support for Mixed Criticality∗ James H. Anderson, Sanjoy K. Baruah, and Björn B. Brandenburg The University of North Carolina at Chapel Hill

Abstract Ongoing research is discussed on the development of operating-system support for enabling mixed-criticality workloads to be supported on multicore platforms. This work is motivated by avionics systems in which such workloads occur. In the mixed-criticality workload model that is considered, task execution costs may be determined using more-stringent methods at high criticality levels, and less-stringent methods at low criticality levels. The main focus of this research effort is devising mechanisms for providing “temporal isolation” across criticality levels: lower levels should not adversely “interfere” with higher levels.

1 Introduction The evolution of computational frameworks for avionics

Different criticality levels provide different levels of assurance against failure. In current avionics designs, highly-critical software is kept physically separate from less-critical software. Moreover, no currently deployed aircraft uses multicore processors to host highly-critical tasks (more precisely, if multicore processors are used, and if such a processor hosts highly-critical applications, then all but one of its cores are turned off). These design decisions are largely driven by certification issues. For example, certifying highly-critical components becomes easier if potentially adverse interactions among components executing on different cores through shared hardware such as caches are simply “defined” not to occur. Unfortunately, hosting an overall workload as described here clearly wastes processing resources. In this paper, we propose operating-system infrastructure that allows applications of different criticalities to be co-hosted on a multicore platform.

Container and Kernel-Based Virtual Machine (KVM) Virtualization for Network Function Virtualization (NFV)

Container and Kernel-Based Virtual Machine (KVM) Virtualization for Network Function Virtualization (NFV) White Paper August 2015 Order Number: 332860-001US

Legal Lines and Disclaimers You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Copies of documents which have an order number and are referenced in this document may be obtained by calling 1-800-548-4725 or by visiting: http://www.intel.com/design/literature.htm. Intel technologies’ features and benefits depend on system configuration and may require enabled hardware, software or service activation. Learn more at http://www.intel.com/ or from the OEM or retailer. Results have been estimated or simulated using internal Intel analysis or architecture simulation or modeling, and provided to you for informational purposes. Any differences in your system hardware, software or configuration may affect your actual performance. For more complete information about performance and benchmark results, visit www.intel.com/benchmarks. Tests document performance of components on a particular test, in specific systems.

Containerization Introduction to Containers, Docker and Kubernetes

Containerization Introduction to Containers, Docker and Kubernetes EECS 768 Apoorv Ingle [email protected] Containers • Containers – lightweight VM or chroot on steroids • Feels like a virtual machine • Get a shell • Install packages • Run applications • Run services • But not really • Uses host kernel • Cannot boot OS • Does not need PID 1 • Processes visible to host machine Containers • VM vs Containers Containers • Container Anatomy • cgroup: limit the use of resources • namespace: limit what processes can see (hence use) Containers • cgroup • Resource metering and limiting • CPU • IO • Network • etc. • $ ls /sys/fs/cgroup Containers • Separate hierarchies for each resource subsystem (CPU, IO, etc.) • Each process belongs to exactly 1 node • Node is a group of processes • Share resource Containers • CPU cgroup • Keeps track • user/system CPU • Usage per CPU • Can set weights • CPUset cgroup • Reserve CPUs for specific applications • Avoids context switch overheads • Useful for non-uniform memory access (NUMA) Containers • Memory cgroup • Tracks pages used by each group • Pages can be shared across groups • Pages “charged” to a group • Shared pages “split the cost” • Set limits on usage Containers • Namespaces • Provides a view of the system to process • Controls what a process can see • Multiple namespaces • pid • net • mnt • uts • ipc • user Containers • PID namespace • Processes within a PID namespace see only processes in the same namespace • Each PID namespace has its own numbering starting from 1 • Namespace is killed when PID 1 goes away
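The slides state that each process belongs to exactly one node in each hierarchy. On Linux this membership is visible in /proc/<pid>/cgroup, where every line has the form `hierarchy-ID:controller-list:cgroup-path`. The following is a minimal sketch of reading it; the `CgroupEntry` type and `parse_proc_cgroup` function are illustrative names, not something taken from the slides.

```python
from pathlib import Path
from typing import List, NamedTuple


class CgroupEntry(NamedTuple):
    hierarchy_id: int       # 0 denotes the cgroups v2 unified hierarchy
    controllers: List[str]  # empty for the v2 line
    path: str               # cgroup path relative to the hierarchy's mount point


def parse_proc_cgroup(text: str) -> List[CgroupEntry]:
    """Parse the contents of /proc/<pid>/cgroup into one entry per hierarchy."""
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # Split at most twice so that a ':' inside the path is preserved.
        hierarchy_id, controllers, path = line.split(":", 2)
        names = [name for name in controllers.split(",") if name]
        entries.append(CgroupEntry(int(hierarchy_id), names, path))
    return entries


if __name__ == "__main__":
    cgroup_file = Path("/proc/self/cgroup")  # only present on Linux
    if cgroup_file.exists():
        for entry in parse_proc_cgroup(cgroup_file.read_text()):
            print(entry)
```

On a v1 system the output typically shows one line per mounted hierarchy, which mirrors the "separate hierarchies for each resource subsystem" point; on a pure v2 system a single `0::` line is expected.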

Resource Access Control in Real-Time Systems

Resource Access Control in Real-time Systems Advanced Operating Systems (M) Lecture 8 Lecture Outline • Definitions of resources • Resource access control for static systems • Basic priority inheritance protocol • Basic priority ceiling protocol • Enhanced priority ceiling protocols • Resource access control for dynamic systems • Effects on scheduling • Implementing resource access control Resources • A system has ρ types of resource R_1, R_2, …, R_ρ • Each resource comprises n_k indistinguishable units; plentiful resources have no effect on scheduling and so are ignored • Each unit of resource is used in a non-preemptive and mutually exclusive manner; resources are serially reusable • If a resource can be used by more than one job at a time, we model that resource as having many units, each used mutually exclusively • Access to resources is controlled using locks • Jobs attempt to lock a resource before starting to use it, and unlock the resource afterwards; the time the resource is locked is the critical section • If a lock request fails, the requesting job is blocked; a job holding a lock cannot be preempted by a higher priority job needing that lock • Critical sections may nest if a job needs multiple simultaneous resources Contention for Resources • Jobs contend for a resource if they try to lock it at once [Figure: EDF schedule of J1, J2 and J3 sharing a resource protected by locks, over time 0 to 18, with preemption, blocking and priority inversion marked; blue shading indicates critical sections.]

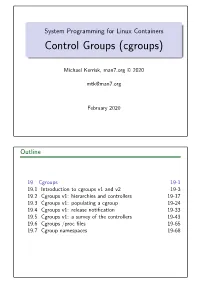

Control Groups (Cgroups)

System Programming for Linux Containers Control Groups (cgroups) Michael Kerrisk, man7.org © 2020 [email protected] February 2020

Outline 19 Cgroups: 19.1 Introduction to cgroups v1 and v2; 19.2 Cgroups v1: hierarchies and controllers; 19.3 Cgroups v1: populating a cgroup; 19.4 Cgroups v1: release notification; 19.5 Cgroups v1: a survey of the controllers; 19.6 Cgroups /proc files; 19.7 Cgroup namespaces

Goals Cgroups is a big topic: many controllers, V1 versus V2 interfaces. Our goal: understand fundamental semantics of cgroup filesystem and interfaces. Useful from a programming perspective: How do I build container frameworks? What else can I build with cgroups? And useful from a system engineering perspective: What’s going on underneath my container’s hood?

Focus We’ll focus on: general principles of operation and goals of cgroups; the cgroup filesystem; interacting with the cgroup filesystem using shell commands; problems with cgroups v1 and motivations for cgroups v2; differences between cgroups v1 and v2. We’ll look briefly at some of the controllers.

A Hybrid Swapping Scheme Based on Per-Process Reclaim for Performance Improvement of Android Smartphones (August 2018)

Received August 19, 2018, accepted September 14, 2018, date of publication October 1, 2018, date of current version October 25, 2018. Digital Object Identifier 10.1109/ACCESS.2018.2872794 A Hybrid Swapping Scheme Based On Per-Process Reclaim for Performance Improvement of Android Smartphones (August 2018) JUNYEONG HAN1, SUNGEUN KIM1, SUNGYOUNG LEE1, JAEHWAN LEE2, AND SUNG JO KIM2 1LG Electronics, Seoul 07336, South Korea 2School of Software, Chung-Ang University, Seoul 06974, South Korea Corresponding author: Sung Jo Kim ([email protected]) This work was supported in part by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education under Grant 2016R1D1A1B03931004 and in part by the Chung-Ang University Research Scholarship Grants in 2015.

ABSTRACT As a way to increase the actual main memory capacity of Android smartphones, most of them make use of zRAM swapping, but zRAM is limited in how much capacity it can add since it utilizes main memory itself. Unfortunately, they cannot use secondary storage as a swap space due to the long response time and wear-out problem. In this paper, we propose a hybrid swapping scheme based on per-process reclaim that supports both secondary-storage swapping and zRAM swapping. It attempts to swap out all the pages in the working set of a process to a zRAM swap space rather than killing the process selected by a low-memory killer, and to swap out the least recently used pages into a secondary storage swap space. The rationale is that frequently swapped-in/out pages use the zRAM swap space while less frequently swapped-in/out pages use the secondary storage swap space, in order to reduce the page operation cost.

An Evolutionary Study of Linux Memory Management for Fun and Profit Jian Huang, Moinuddin K

An Evolutionary Study of Linux Memory Management for Fun and Profit Jian Huang, Moinuddin K. Qureshi, and Karsten Schwan, Georgia Institute of Technology https://www.usenix.org/conference/atc16/technical-sessions/presentation/huang This paper is included in the Proceedings of the 2016 USENIX Annual Technical Conference (USENIX ATC ’16). June 22–24, 2016 • Denver, CO, USA 978-1-931971-30-0 Open access to the Proceedings of the 2016 USENIX Annual Technical Conference (USENIX ATC ’16) is sponsored by USENIX.

An Evolutionary Study of Linux Memory Management for Fun and Profit Jian Huang, Moinuddin K. Qureshi, Karsten Schwan Georgia Institute of Technology

Abstract We present a comprehensive and quantitative study on the development of the Linux memory manager. The study examines 4587 committed patches over the last five years (2009-2015) since Linux version 2.6.32. Insights derived from this study concern the development process of the virtual memory system, including its patch distribution and patterns, and techniques for memory optimizations and semantics. Specifically, we find that

the patches committed over the last five years from 2009 to 2015. The study covers 4587 patches across Linux versions from 2.6.32.1 to 4.0-rc4. We manually label each patch after carefully checking the patch, its descriptions, and follow-up discussions posted by developers. To further understand patch distribution over memory semantics, we build a tool called MChecker to identify the changes to the key functions in mm. MChecker matches the patches with the source code to track the hot functions that have been updated intensively.

A Container-Based Approach to OS Specialization for Exascale Computing

A Container-Based Approach to OS Specialization for Exascale Computing Judicael A. Zounmevo∗, Swann Perarnau∗, Kamil Iskra∗, Kazutomo Yoshii∗, Roberto Gioiosa†, Brian C. Van Essen‡, Maya B. Gokhale‡, Edgar A. Leon‡ ∗Argonne National Laboratory fjzounmevo@, perarnau@mcs., iskra@mcs., [email protected] †Pacific Northwest National Laboratory. [email protected] ‡Lawrence Livermore National Laboratory. fvanessen1, maya, [email protected]

Abstract: Future exascale systems will impose several conflicting challenges on the operating system (OS) running on the compute nodes of such machines. On the one hand, the targeted extreme scale requires the kind of high resource usage efficiency that is best provided by lightweight OSes. At the same time, substantial changes in hardware are expected for exascale systems. Compute nodes are expected to host a mix of general-purpose and special-purpose processors or accelerators tailored for serial, parallel, compute-intensive, or I/O-intensive workloads. Similarly, the deeper and more complex memory hierarchy will expose multiple coherence domains and NUMA nodes in addition to incorporating nonvolatile RAM. That expected workload and hardware heterogeneity and complexity is not compatible with the simplicity that characterizes high performance lightweight kernels.

view is essential for scalability, enabling compute nodes to manage and optimize massive intranode thread and task parallelism and adapt to new memory technologies. In addition, Argo introduces the idea of “enclaves,” a set of resources dedicated to a particular service and capable of introspection and autonomic response. Enclaves will be able to change the system configuration of nodes and the allocation of power to different nodes or to migrate data or computations from one node to another. They will be used to demonstrate the support of different levels of fault tolerance (a key concern of exascale systems), with some enclaves handling node failures by means of global restart and other enclaves