Deep Learning Method for Denoising Monte Carlo Renders for VR Applications

Total Pages: 16

File Type: PDF, Size: 1020Kb


Recommended publications

-

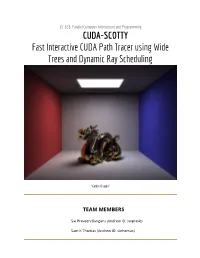

CUDA-SCOTTY Fast Interactive CUDA Path Tracer Using Wide Trees and Dynamic Ray Scheduling

15-618: Parallel Computer Architecture and Programming. CUDA-SCOTTY: Fast Interactive CUDA Path Tracer using Wide Trees and Dynamic Ray Scheduling. “Golden Dragon”. Team members: Sai Praveen Bangaru (Andrew ID: saipravb), Sam K Thomas (Andrew ID: skthomas). Introduction: Path tracing has long been the method of choice in the graphics community for rendering photo-realistic images. It is used widely across several industries and plays a major role in animation and filmmaking, with most special effects rendered using some form of Monte Carlo light transport. It comes as no surprise, then, that optimizing path tracing algorithms is a widely studied field, so much so that it has its own top-tier conference (HPG; High Performance Graphics). There is a whole spectrum of methods for increasing the efficiency of path tracing. Some methods aim to create better sampling methods (Metropolis Light Transport), while others try to reduce noise in the final image by filtering the output (4D Sheared transform). In the spirit of the parallel programming course 15-618, however, we focus on a third category: system-level optimizations and algorithms that better utilize the hardware (Wide Trees, Packet tracing, Dynamic Ray Scheduling). Most of these methods, understandably, focus on the ray-scene intersection part of the path tracing pipeline, since that is the main bottleneck. In the following project, we describe the implementation of a hybrid non-packet method which uses Wide Trees and Dynamic Ray Scheduling to provide an 80x improvement over an 8-threaded CPU implementation. Summary: Over the course of roughly 3 weeks, we studied two non-packet BVH traversal optimizations: Wide Trees, which use non-binary BVHs for shallower hierarchies and better warp/SIMD utilization, and Dynamic Ray Scheduling, which changes the perspective to process rays on a per-node basis rather than nodes on a per-ray basis.

Practical Path Guiding for Efficient Light-Transport Simulation

Eurographics Symposium on Rendering 2017. Volume 36 (2017), Number 4. P. Sander and M. Zwicker (Guest Editors). Practical Path Guiding for Efficient Light-Transport Simulation. Thomas Müller (ETH Zürich, Disney Research), Markus Gross (ETH Zürich, Disney Research), Jan Novák (Disney Research). Figure 1 panels: Vorba et al. (MSE: 0.017; training: 5.1 min; rendering: 4.2 min, 8932 spp), Reference, Ours (equal time) (MSE: 0.018; training: 0.73 min; rendering: 4.2 min, 11568 spp). Figure 1: Our method allows efficient guiding of path-tracing algorithms as demonstrated in the TORUS scene. We compare equal-time (4.2 min) renderings of our method (right) to the current state-of-the-art [VKŠ*14, VK16] (left). Our algorithm automatically estimates how much training time is optimal, displays a rendering preview during training, and requires no parameter tuning. Despite being fully unidirectional, our method achieves similar MSE values compared to Vorba et al.’s method, which trains bidirectionally. Abstract: We present a robust, unbiased technique for intelligent light-path construction in path-tracing algorithms. Inspired by existing path-guiding algorithms, our method learns an approximate representation of the scene’s spatio-directional radiance field in an unbiased and iterative manner. To that end, we propose an adaptive spatio-directional hybrid data structure, referred to as SD-tree, for storing and sampling incident radiance. The SD-tree consists of an upper part (a binary tree that partitions the 3D spatial domain of the light field) and a lower part (a quadtree that partitions the 2D directional domain). We further present a principled way to automatically budget training and rendering computations to minimize the variance of the final image.

UPC Platform Publisher Title Price Available 730865001347

| UPC | Platform | Publisher | Title | Price | Available |
|---|---|---|---|---|---|
| 730865001347 | PlayStation 3 | Atlus | 3D Dot Game Heroes PS3 | $16.00 | 52 |
| 722674110402 | PlayStation 3 | Namco Bandai | Ace Combat: Assault Horizon PS3 | $21.00 | 2 |
| 853490002678 | PlayStation 3 | Other Publishers | Air Conflicts: Secret Wars PS3 | $14.00 | 37 |
| 014633098587 | PlayStation 3 | Electronic Arts | Alice: Madness Returns PS3 | $16.50 | 60 |
| 010086690682 | PlayStation 3 | Sega | Aliens Colonial Marines (Portuguese) PS3 | $47.50 | 100+ |
| 010086690675 | PlayStation 3 | Sega | Aliens Colonial Marines (Spanish) PS3 | $47.50 | 100+ |
| 010086690637 | PlayStation 3 | Sega | Aliens Colonial Marines Collector's Edition PS3 | $76.00 | 9 |
| 010086690170 | PlayStation 3 | Sega | Aliens Colonial Marines PS3 | $50.00 | 92 |
| 010086690194 | PlayStation 3 | Sega | Alpha Protocol PS3 | $14.00 | 14 |
| 047875843479 | PlayStation 3 | Activision | Amazing Spider-Man PS3 | $39.00 | 100+ |
| 010086690545 | PlayStation 3 | Sega | Anarchy Reigns PS3 | $24.00 | 100+ |
| 722674110525 | PlayStation 3 | Namco Bandai | Armored Core V PS3 | $23.00 | 100+ |
| 014633157147 | PlayStation 3 | Electronic Arts | Army of Two: The 40th Day PS3 | $16.00 | 61 |
| 008888345343 | PlayStation 3 | Ubisoft | Assassin's Creed II PS3 | $15.00 | 100+ |
| 008888397717 | PlayStation 3 | Ubisoft | Assassin's Creed III Limited Edition PS3 | $116.00 | 4 |
| 008888347231 | PlayStation 3 | Ubisoft | Assassin's Creed III PS3 | $47.50 | 100+ |
| 008888343394 | PlayStation 3 | Ubisoft | Assassin's Creed PS3 | $14.00 | 100+ |
| 008888346258 | PlayStation 3 | Ubisoft | Assassin's Creed: Brotherhood PS3 | $16.00 | 100+ |
| 008888356844 | PlayStation 3 | Ubisoft | Assassin's Creed: Revelations PS3 | $22.50 | 100+ |
| 013388340446 | PlayStation 3 | Capcom | Asura's Wrath PS3 | $16.00 | 55 |

008888345435

Killing the Competition

The Press, Christchurch, March 3, 2009, page 7. Gun happy: Steven ter Heide and Eric Boltjes, of Killzone 2. If you die and restart, the artificial intelligence is different.

Killing the competition. Gerard Campbell caught up with Steven ter Heide and Eric Boltjes of top game Killzone 2 to talk about self-imposed pressure, …

Gerard Campbell: So, the game’s in the bag. How are you feeling now the game is finished?

Steven ter Heide (senior producer at Guerrilla Games): We’ve been working on this for a good long time now and to finally have a boxed copy in our hands is a good feeling.

GC: Who put more pressure on you to succeed with Killzone 2: yourselves or Sony executives?

… test it in game’’. The whole immersion and being in first person really started to work for people. It sounds really simple but a lot of other games are branching out to other genres, but we just wanted to go back to the basics and polish it until we could see our faces in it and give an experience that was fun.

GC: The original Killzone seemed an ambitious title for the ageing …

ter Heide: It also comes down to replayability. If you die and restart and the AI is predictable and moves in the same way, that’s a problem. We wanted the AI to appear natural. We wanted every play through to be different.

GC: The first Killzone was hyped up as a Halo killer (Halo being the successful first-person shooter on Microsoft’s Xbox platform).

ter Heide: At certain times it is maxing out the PS3, but I think in five or six years’ time people will be doing things on PS3 that people (can) not imagine now. It is still early days.

GC: Who is harder on game developers: the media or gamers themselves?

Boltjes: Gamers can be brutally …

Megakernels Considered Harmful: Wavefront Path Tracing on GPUs

Megakernels Considered Harmful: Wavefront Path Tracing on GPUs. Samuli Laine, Tero Karras, Timo Aila (NVIDIA). Abstract: When programming for GPUs, simply porting a large CPU program into an equally large GPU kernel is generally not a good approach. Due to SIMT execution model on GPUs, divergence in control flow carries substantial performance penalties, as does high register usage that lessens the latency-hiding capability that is essential for the high-latency, high-bandwidth memory system of a GPU. In this paper, we implement a path tracer on a GPU using a wavefront formulation, avoiding these pitfalls that can be especially prominent when using materials that are expensive to evaluate. We compare our performance against the traditional megakernel approach, and demonstrate that the wavefront formulation is much better suited for real-world use cases where multiple complex materials are present in the scene.

… order to handle irregular control flow, some threads are masked out when executing a branch they should not participate in. This incurs a performance loss, as masked-out threads are not performing useful work. The second factor is the high-bandwidth, high-latency memory system. The impressive memory bandwidth in modern GPUs comes at the expense of a relatively long delay between making a memory request and getting the result. To hide this latency, GPUs are designed to accommodate many more threads than can be executed in any given clock cycle, so that whenever a group of threads is waiting for a memory request to be served, other threads may be executed. The effectiveness of this mechanism, i.e., the latency-hiding capability, is determined by the threads’ resource usage, the most …

An Advanced Path Tracing Architecture for Movie Rendering

RenderMan: An Advanced Path Tracing Architecture for Movie Rendering. PER CHRISTENSEN, JULIAN FONG, JONATHAN SHADE, WAYNE WOOTEN, BRENDEN SCHUBERT, ANDREW KENSLER, STEPHEN FRIEDMAN, CHARLIE KILPATRICK, CLIFF RAMSHAW, MARC BANNISTER, BRENTON RAYNER, JONATHAN BROUILLAT, and MAX LIANI, Pixar Animation Studios. Fig. 1. Path-traced images rendered with RenderMan: Dory and Hank from Finding Dory (© 2016 Disney•Pixar). McQueen’s crash in Cars 3 (© 2017 Disney•Pixar). Shere Khan from Disney’s The Jungle Book (© 2016 Disney). A destroyer and the Death Star from Lucasfilm’s Rogue One: A Star Wars Story (© & ™ 2016 Lucasfilm Ltd. All rights reserved. Used under authorization.)

Pixar’s RenderMan renderer is used to render all of Pixar’s films, and by many film studios to render visual effects for live-action movies. RenderMan started as a scanline renderer based on the Reyes algorithm, and was extended over the years with ray tracing and several global illumination algorithms. This paper describes the modern version of RenderMan, a new architecture for an extensible and programmable path tracer with many features that are essential to handle the fiercely complex scenes in movie production. Users can write their own materials using a bxdf interface, and their own light transport algorithms using an integrator interface – or they can use the materials and light transport algorithms provided with RenderMan.

1 INTRODUCTION. Pixar’s movies and short films are all rendered with RenderMan. The first computer-generated (CG) animated feature film, Toy Story, was rendered with an early version of RenderMan in 1995. The most recent Pixar movies – Finding Dory, Cars 3, and Coco – were rendered using RenderMan’s modern path tracing architecture. The two left images in Figure 1 show high-quality rendering of two challenging CG movie scenes with many bounces of specular reflections and …

Getting Started (Pdf)

GETTING STARTED: PHOTO REALISTIC RENDERS OF YOUR 3D MODELS. Available for Ver. 1.01. © Kerkythea 2008 Echo. Date: April 24th, 2008. Written by: The KT Team. Preface: Kerkythea is a standalone render engine, using physically accurate materials and lights, aiming for the best quality rendering in the most efficient timeframe. The target of Kerkythea is to simplify the task of quality rendering by providing the necessary tools to automate scene setup, such as staging using the GL real-time viewer, material editor, general/render settings, editors, etc., under a common interface. Now reaching its 4th year of development and gaining popularity, I want to believe that KT can be considered among the top freeware/open source render engines and can be used for both academic and commercial purposes. At the beginning of 2008, we have a strong and rapidly growing community and a website that is more "alive" than ever! KT2008 Echo is a very powerful release with a lot of improvements. Kerkythea has grown constantly over the last year, becoming a standard rendering application among architectural studios and extensively used within educational institutes. Of course there are a lot of things that can be added and improved. But we are really proud of reaching a high quality and stable application that is more than usable for commercial purposes at an amazing zero cost! Ioannis Pantazopoulos, January 2008. As the heading says, this is a Getting Started “step-by-step guide” and it’s designed to get you started using Kerkythea 2008 Echo.

LUNCH BREAK Looks And Feels Good

9:30 KILLZONE: SHADOW FALL. OUT WITH THE OLD AND IN WITH THE OWL: BUILDING A BRIGHT NEW FUTURE.

In “Out With The Old And In With The Owl: Building A Bright New Future”, Arjan Bak and Misja Baas will talk about the new direction of Killzone Shadow Fall. Their presentation provides insight into the challenges of developing a new direction for a franchise while remaining true to its roots, using the implementation of new key features like the OWL and the construction of a believable futuristic …

Arjan Bak • Guerrilla Games. Principal Game Designer and Environment Art Director, Killzone Shadow Fall. An industry veteran at age 29, Arjan Bak worked in design and art roles for Valve and Ubisoft before joining Guerrilla in 2007. Since then he has played a pivotal role in the graphical realization of the Killzone series, rising from Senior Artist on Killzone 2 to Lead Environment Artist on Killzone 3 and Environment Art Director on Killzone Shadow Fall. In addition, Arjan was one of the Principal Game Designers responsible for Killzone Shadow Fall’s new …

Misja Baas • Guerrilla Games. Art Director, Killzone Shadow Fall. An alumnus of the Willem de Kooning Academy of Arts in Rotterdam, Misja Baas first entered the games industry in 2001 as an Artist for Frontier Developments. After rising to the position of Senior Artist at Elixir Studios, he joined Guerrilla in 2005 to initially work on the environments, and later on the vehicles and weapons, of Killzone 2. In 2009 Misja became the Assistant Art Director on Killzone 3, assuming responsibility for all outsourcing and internal asset development.

Cutscenes, Agency and Innovation Ben Browning a Thesis In

Should I Skip This?: Cutscenes, Agency and Innovation. Ben Browning. A Thesis in The Mel Hoppenheim School of Cinema, Presented in Partial Fulfillment of the Requirements for the Degree of Master of Arts (Film Studies) at Concordia University, Montreal, Quebec, Canada. April 2016. © Ben Browning. CONCORDIA UNIVERSITY, School of Graduate Studies. This is to certify that the thesis prepared By: Ben Browning, Entitled: Should I Skip This?: Cutscenes, Agency and Innovation, and submitted in partial fulfillment of the requirements for the degree of Master of Arts (Film Studies) complies with the regulations of the University and meets the accepted standards with respect to originality and quality. Signed by the final examining committee: Chair Darren Wershler External Examiner Peter Rist Examiner Marc Steinberg Supervisor. Approved by Haidee Wasson Graduate Program Director Catherine Wild Dean of the Faculty of Fine Arts. ABSTRACT: The cutscene is a frequently overlooked and understudied device in video game scholarship, despite its prominence in a vast number of games. Most gaming literature and criticism concludes that cutscenes are predetermined narrative devices and nothing more. Interrogating this general critical dismissal of the cutscene, this thesis argues that it is a significant device that can be used to re-examine a number of important topics and debates in video game studies. Through an analysis of cutscenes deriving from the Metal Gear Solid (Konami, 1998) and Resident Evil (Capcom, 1996) franchises, I demonstrate the cutscene’s importance within (1) studies of video game agency and (2) video game promotion.

Chris Riddell

Chris Riddell, 3D Artist. 84 Greville Road, Cambridge, Cambridgeshire, CB1 3QL. Mobile: +44 (0)7395 484351. E-Mail: [email protected]. Portfolio: www.axisphere.art. Profile: As a highly motivated and self-taught CG artist, I have always pushed the industry's current tools to produce real-time artwork of the utmost quality. Being interested in game engines, I have always enjoyed working with cutting-edge technology to push the envelope of real-time graphics. I continue to provide creative input into producing some of the industry's best tools and rendering technology to produce work of excellent merit. As a keen learner and avid tech fanatic, I always seek and pursue innovation. Experience: Virtual Arts Limited, 2017 - Present, Principal Artist/Tech Art. I joined a new startup in Cambridge for a fresh challenge in Mobile VR/AR. My role at Virtual Arts Limited covers creating shader networks, Unity tech art, content creation, contributing to our own engine/tool pipeline, and scheduling artwork. Sony Interactive Entertainment, 2004-2017, Principal Artist. I joined SCEE/SIE as a standard level artist and progressed to principal level. My 13-year period saw a lot of projects with some very challenging situations creating games for brand new platforms/technology, the toughest challenge being to create a Virtual Reality title (RIGS) which would be fast paced without creating nausea and achieve a solid 60 FPS throughout the experience. In my career at Sony I contributed to developing our internal engines and tools, created 2D/3D graphics for our products, produced basic concept art to focus prototypes for future projects, wrote technical documentation and tutorials to educate the art team, worked closely with marketing to provide resources to advertise and create an online social media presence, entertained VIP visitors, and educated myself in legal issues regarding design and copyright.

Horizon Zero Dawn: The Frozen Wilds: Release Date Revealed

Horizon Zero Dawn: The Frozen Wilds: release date revealed. The release date has been announced for Horizon Zero Dawn: The Frozen Wilds, the first DLC for the new IP, a PlayStation 4 exclusive produced by Guerrilla Games. The expansion will be released on 7 November 2017, but since E3 in Los Angeles few details have been provided, other than that this DLC will expand the game world by adding a new area, and that there will be new threats to face and new mysteries. To learn more, we will most likely have to wait for Gamescom in Cologne.

Microsoft's new exclusive could be in the style of Horizon: Zero Dawn. Today the main job postings go through LinkedIn, and the video game world is no exception; when it comes to qualified technical staff, job postings in the industry often herald a title that is a work in progress. The posting by Microsoft's Head of Recruitment, Sandor Roberts, seems to leave little doubt: he writes that Redmond is looking for a Lead Environment Artist for a next-gen triple-A title fully in the style of Horizon: Zero Dawn. Guerrilla Games' latest title seems to have already left its mark on the video game market and, considering also the statements made to the Telegraph by Shawn Layden, according to whom the Horizon: Zero Dawn brand will be with us for a long time, we can now say with some confidence that Aloy's story has already rightfully entered the Olympus of the most important video games of all time.

The Horizon: Zero Dawn saga will have a sequel. During an interview with the Telegraph, the President and CEO of Sony Interactive Entertainment America, Shawn Layden, gave a personal account of E3 and talked about Death Stranding, saying he had already tried it in preview, and about much else concerning the future of console gaming and PlayStation.
