Draft CEN Workshop Agreement: Global Ebusiness Interoperability

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Semantics Developer's Guide

MarkLogic Server Semantic Graph Developer’s Guide. MarkLogic 10, May 2019. Last Revised: 10.0-8, October 2021. Copyright © 2021 MarkLogic Corporation. All rights reserved.

Table of Contents
1.0 Introduction to Semantic Graphs in MarkLogic
1.1 Terminology
1.2 Linked Open Data
1.3 RDF Implementation in MarkLogic
1.3.1 Using RDF in MarkLogic
1.3.1.1 Storing RDF Triples in MarkLogic
1.3.1.2 Querying Triples
1.3.2 RDF Data Model
1.3.3 Blank Node Identifiers
1.3.4 RDF Datatypes
1.3.5 IRIs and Prefixes
1.3.5.1 IRIs

PDF Formats; the XHTML Version Has Active Links That You Can Follow

Semantic Web @ W3C: Activities, Recommendations and State of Adoption. Athens, GA, USA, 2006-11-09. Ivan Herman, W3C.

RDF(S), tools: We have had a solid specification since 2004: well-defined (formal) semantics and a clear RDF/XML syntax. Lots of tools are available and are listed on W3C’s wiki: RDF programming environments for 14+ languages, including C, C++, Python, Java, JavaScript, Ruby, PHP, … (no Cobol or Ada yet); 13+ triple stores, i.e., database systems to store datasets; 16+ general development tools (specialized editors, application builders, …); etc.

RDF(S), tools (cont.): Note the large number of large corporations among the tool developers: Adobe, IBM, Software AG, Oracle, HP, Northrop Grumman, … but small companies and independent developers also play a major role! Some of the tools are open source, some are not; some are very mature, some are not: it is the usual picture of software tools, nothing special any more! Anybody can start developing RDF-based applications today.

RDF(S), tools (cont.): There are lots of tutorials, overviews, and books around: the wiki page on books lists 20+ (English) textbooks, and there are 19+ proceedings for 2005 & 2006 alone. Again, some of them are good and some are bad, just as in any other area. There are active developer communities.

Large datasets are accumulating: IngentaConnect bibliographic metadata storage: over 200 million triples; UniProt Protein Database: 262 million triples; RDF version of Wikipedia: more than 47 million triples.

RDFS/OWL Representation

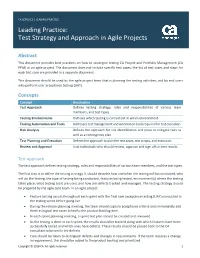

Leading Practice: Test Strategy and Approach in Agile Projects

CA SERVICES | LEADING PRACTICE

Abstract
This document provides best practices for defining a test strategy for CA Project and Portfolio Management (CA PPM) in an agile project. It does not include specific test cases; the list of test cases and the steps for each test case are provided in a separate document. This document should be used by the agile project team that is planning the testing activities, and by the end users who perform user acceptance testing (UAT).

Concepts
Test Approach: Defines the testing strategy, the roles and responsibilities of the various team members, and the test types.
Testing Environments: Outlines which testing is carried out in which environment.
Testing Automation and Tools: Addresses the test management and automation tools required for test execution.
Risk Analysis: Defines the approach for identifying risks, the plans to mitigate them, and a contingency plan.
Test Planning and Execution: Defines the approach for planning the test cases, the test scripts, and their execution.
Review and Approval: Lists the individuals who should review, approve, and sign off on the test results.

Test Approach
The test approach defines the testing strategy, the roles and responsibilities of the various team members, and the test types. The first step is to define the testing strategy. It should describe how and when the testing will be conducted, who will do the testing, the types of testing being conducted, the features being tested, the environment(s) where the testing takes place, which testing tools are used, and how defects are tracked and managed. The testing strategy should be prepared by the agile core team.

Enhancing JSON to RDF Data Conversion with Entity Type Recognition

Fellipe Freire, Crishane Freire and Damires Souza
Academic Unit of Informatics, Federal Institute of Education, Science and Technology of Paraiba, João Pessoa, Brazil

Keywords: RDF Data Conversion, Entity Type Recognition, Semi-Structured Data, JSON Documents, Semantics Usage.

Abstract: Nowadays, many Web data sources and APIs make their data available on the Web in semi-structured formats such as JSON. However, JSON data cannot be directly used in the Web of data, where principles such as URIs and semantically named links are essential. Thus, it is necessary to convert JSON data into RDF data. To this end, we have to consider semantics in order to reference data according to domain vocabularies. To help matters, we present an approach which identifies JSON metadata, aligns them with domain vocabulary terms and converts the data into RDF. In addition, along with the data conversion process, we identify the semantically most appropriate entity types for the JSON objects. We present the definitions underlying our approach and the results obtained in the evaluation.

1 INTRODUCTION
The Web has evolved into an interactive information network, allowing users and applications to share data on a massive scale. To help matters, the Linked Data principles define a set of practices for publishing structured data on the Web aiming to …

… principles of this work is using semantics provided by the knowledge domain of the data to enhance their conversion to RDF data. To this end, we use recommended open vocabularies, which are composed of a set of terms, i.e., classes and properties useful to describe specific types of things (LOV Documentation, 2016).
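The three steps named in the abstract (identify JSON keys, align them with vocabulary terms, convert to RDF, plus guessing an entity type) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' method: the key-to-property table, the type rules, and the example IRI are hypothetical, schema.org is chosen only as a familiar vocabulary, and every value is emitted as a plain N-Triples string literal.

```python
import json

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"

# Hypothetical alignment of JSON keys to vocabulary terms (schema.org used for illustration).
KEY_TO_PROPERTY = {
    "name": "http://schema.org/name",
    "birthDate": "http://schema.org/birthDate",
    "foundingDate": "http://schema.org/foundingDate",
}

# Hypothetical entity-type rules: if an object has all these keys, suggest this class.
TYPE_HINTS = [
    ({"birthDate"}, "http://schema.org/Person"),
    ({"foundingDate"}, "http://schema.org/Organization"),
]


def nt_literal(value) -> str:
    """Serialize a value as an N-Triples plain string literal, escaping special characters."""
    text = str(value).replace("\\", "\\\\").replace('"', '\\"')
    text = text.replace("\n", "\\n").replace("\r", "\\r")
    return f'"{text}"'


def guess_type(obj: dict):
    """Return the first class whose required keys all appear in the JSON object, else None."""
    for required, cls in TYPE_HINTS:
        if required <= set(obj):
            return cls
    return None


def json_to_ntriples(obj: dict, subject_iri: str) -> list[str]:
    """Convert one flat JSON object into N-Triples lines about subject_iri."""
    subject = f"<{subject_iri}>"
    lines = []
    cls = guess_type(obj)
    if cls is not None:
        lines.append(f"{subject} <{RDF_TYPE}> <{cls}> .")
    for key, value in obj.items():
        prop = KEY_TO_PROPERTY.get(key)
        if prop is None:
            continue  # keys with no aligned vocabulary term are skipped in this sketch
        lines.append(f"{subject} <{prop}> {nt_literal(value)} .")
    return lines


doc = json.loads('{"name": "Ada Lovelace", "birthDate": "1815-12-10", "id": 7}')
print("\n".join(json_to_ntriples(doc, "http://example.org/person/7")))
```

The unaligned `id` key is dropped, and the presence of `birthDate` is what selects `schema:Person`. A fuller converter would use typed literals (for example `xsd:date`) and mint IRIs for nested objects.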

Linked Data Schemata: Fixing Unsound Foundations

Kevin Feeney, Gavin Mendel Gleason, Rob Brennan
Knowledge and Data Engineering Group & ADAPT Centre, School of Computer Science & Statistics, Trinity College Dublin, Ireland

Abstract. This paper describes an analysis of the practical and logical implications of unifying common linked data vocabularies into a single logical model, together with the tools and methods used to produce it. In order to support any type of reasoning, or even just simple type-checking, the vocabularies that are referenced by linked data statements need to be unified into a complete model wherever they reference or reuse terms that have been defined in other linked data vocabularies. Strong interdependencies between vocabularies are common, and a large number of logical and practical problems make this unification inconsistent and messy. However, the situation is far from hopeless. We identify a minimal set of necessary fixes that can be carried out to make a large number of widely deployed vocabularies mutually compatible, and a set of wider-ranging recommendations for linked data ontology design best practice to help alleviate the problem in future. Finally, we make some suggestions for improving OWL’s support for distributed authoring and ontology reuse in the wild.

Keywords: Linked Data, Reasoning, Data Quality

1. Introduction
One of the central tenets of the Linked Data movement is the reuse of terms from existing well-known vocabularies [Bizer09] when developing new schemata or datasets. The semantic web infrastructure, and the RDF, RDFS and OWL languages, support this with their inherently distributed and modular nature. In practice, through vocabulary reuse, linked data schemata adopt knowledge models that are based on multiple, independently devised ontologies that often exhibit varying definitional semantics [Hogan12].

Building Linked Data for Both Humans and Machines∗

Wolfgang Halb, Institute of Information Systems & Information Management, Graz, Austria
Yves Raimond, Centre for Digital Music, London, UK
Michael Hausenblas, Institute of Information Systems & Information Management, Graz, Austria

ABSTRACT
In this paper we describe our experience with building the riese dataset, an interlinked, RDF-based version of the Eurostat data, containing statistical data about the European Union. The riese dataset (http://riese.joanneum.at) aims to serve roughly 3 billion RDF triples, along with millions of high-quality interlinks. Our contribution is twofold. Firstly, we suggest using RDFa as the main deployment mechanism, hence serving both humans and machines to effectively and efficiently explore and use the dataset. Secondly, we introduce a new way of enriching the dataset with high-quality links: User Contributed Interlinking, a Wiki-style way of adding semantic links to data pages.

Existing linked datasets such as [3] are slanted towards machines as the consumer. Although there are exceptions to this machine-first approach (cf. [13]), we strongly believe that satisfying both humans and machines from a single source is a necessary path to follow.

We subscribe to the view that every LOD dataset can be understood as a Semantic Web application. Every Semantic Web application in turn is a Web application in the sense that it should support a certain task for a human user. Without a state-of-the-art Web user interface, potential end users are scared away. Hence a Semantic Web application needs a nice outfit as well.
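Using RDFa as the deployment mechanism means one HTML page serves both audiences: the browser renders the text, and an RDFa parser reads the `about`, `property` and `prefix` attributes as triples. A minimal sketch of generating such a page fragment follows; it is not the riese implementation, and the `ex:` vocabulary, the resource IRI, and the value shown are hypothetical placeholders.

```python
from html import escape

# Prefix declarations for the RDFa `prefix` attribute; the ex: namespace is hypothetical.
PREFIXES = "rdfs: http://www.w3.org/2000/01/rdf-schema# ex: http://example.org/stat#"


def rdfa_fragment(subject: str, label: str, facts: dict[str, tuple[str, str]]) -> str:
    """Render one resource as HTML for people, annotated with RDFa for machines.

    facts maps a property CURIE to (human-readable caption, value).
    """
    rows = "\n".join(
        f'    <tr><th>{escape(caption)}</th>'
        f'<td property="{escape(prop)}">{escape(value)}</td></tr>'
        for prop, (caption, value) in facts.items()
    )
    return (
        f'<div prefix="{PREFIXES}" about="{escape(subject)}">\n'
        f'  <h1 property="rdfs:label">{escape(label)}</h1>\n'
        f"  <table>\n{rows}\n  </table>\n"
        f"</div>"
    )


print(rdfa_fragment("http://example.org/geo/AT", "Austria",
                    {"ex:value": ("Value", "42")}))
```

A human sees a heading and a one-row table; an RDFa 1.1 parser extracts an `rdfs:label` triple and an `ex:value` triple about `http://example.org/geo/AT` from the same markup.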

Linked Data Schemata: Fixing Unsound Foundations

Editor(s): Amrapali Zaveri, Stanford University, USA; Dimitris Kontokostas, Universität Leipzig, Germany; Sebastian Hellmann, Universität Leipzig, Germany; Jürgen Umbrich, Wirtschaftsuniversität Wien, Austria
Solicited review(s): Mathieu d’Aquin, The Open University, UK; Peter F. Patel-Schneider, Nuance Communications, USA; John McCrae, Insight Centre for Data Analytics, Ireland; one anonymous reviewer

Kevin Chekov Feeney, Gavin Mendel Gleason, and Rob Brennan
Knowledge and Data Engineering Group & ADAPT Centre, School of Computer Science & Statistics, Trinity College Dublin, Ireland

Abstract. This paper describes our tools and method for evaluating the practical and logical implications of combining common linked data vocabularies into a single local logical model for the purpose of reasoning or performing quality evaluations. These vocabularies need to be unified to form a combined model because they reference or reuse terms from other linked data vocabularies, and thus the definitions of those terms must be imported. We found that strong interdependencies between vocabularies are common and that a significant number of logical and practical problems make this model unification inconsistent. In addition to identifying problems, this paper suggests a set of recommendations for linked data ontology design best practice. Finally, we make some suggestions for improving OWL’s support for distributed authoring and ontology reuse.

Keywords: Linked Data, Reasoning, Data Quality

1. Introduction
One of the central tenets of the linked data movement is the reuse of terms from existing well-known …

In order to validate any dataset which uses the adms:Asset term, we must combine the adms ontology and the dcat ontology in order to ensure that dcat:Dataset is a valid class.
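The adms/dcat example can be made concrete with a toy check: a class referenced as a superclass is only known to be valid once the vocabulary that declares it has been merged into the local model. This is a minimal sketch, not the authors' tooling. It assumes, as the example implies, that ADMS declares adms:Asset a subclass of dcat:Dataset, and it reduces both vocabularies to hypothetical stub triples written as prefixed-name strings rather than parsing the real ontologies.

```python
Triple = tuple[str, str, str]

# Hypothetical stub vocabularies as (subject, predicate, object) prefixed names.
# Assumption: ADMS declares adms:Asset a subclass of dcat:Dataset, as the example implies.
VOCABS: dict[str, set[Triple]] = {
    "adms": {
        ("adms:Asset", "rdf:type", "owl:Class"),
        ("adms:Asset", "rdfs:subClassOf", "dcat:Dataset"),
    },
    "dcat": {
        ("dcat:Dataset", "rdf:type", "owl:Class"),
    },
}


def unify(*names: str) -> set[Triple]:
    """Merge the named vocabularies into one local model (a plain set union)."""
    return set().union(*(VOCABS[n] for n in names))


def undefined_classes(triples: set[Triple]) -> set[str]:
    """Return classes used as a superclass but never declared in the given model."""
    declared = {s for s, p, o in triples if p == "rdf:type" and o == "owl:Class"}
    referenced = {o for _, p, o in triples if p == "rdfs:subClassOf"}
    return referenced - declared


print(undefined_classes(unify("adms")))          # {'dcat:Dataset'}
print(undefined_classes(unify("adms", "dcat")))  # set()
```

Real unification also has to follow `owl:imports` transitively and reconcile conflicting definitions; the set union here covers only the simplest case of a missing declaration.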

Efficient Black-Box JTAG Discovery

Ca’ Foscari University of Venice
Department of Environmental Sciences, Informatics and Statistics
Master’s Degree programme in Computer Science, Software Dependability and Cyber Security, Second Cycle (D.M. 270/2004)

Master Thesis: Efficient Black-box JTAG Discovery
Supervisor: Prof. Riccardo Focardi
Assistant supervisor: Dott. Francesco Palmarini
Graduand: Riccardo Francescato, Matriculation Number 857609
Academic Year 2016-2017

Riccardo Francescato, Efficient Black-box JTAG Discovery, Master Thesis, © February 2018.

Abstract
Embedded devices represent the most widespread form of computing device in the world. Almost every consumer product manufactured in the last decades contains an embedded system, e.g., refrigerators, smart bulbs, activity trackers, smart watches and washing machines. These computing devices are also used in safety- and security-critical systems, e.g., autonomous cars, cryptographic tokens, avionics and alarm systems. Manufacturers often give little consideration to the attack surface offered by low-level interfaces such as JTAG. In the last decade, the JTAG port has been used by the research community as an entry point for a number of attacks and reverse engineering techniques. Therefore, finding and identifying the JTAG port of a device or of a de-soldered integrated circuit (IC) can be the first step required to perform a successful attack. In this work, we analyse the design of the JTAG port and develop methods and algorithms aimed at searching for the JTAG port. More specifically, we provide an introduction to the problem and to the related attacks already documented in the literature. Moreover, we provide the reader with the basics necessary to understand this work (background on JTAG and basic electronic terminology).
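The abstract does not spell out the search algorithm. As a hedged illustration of why efficiency matters, the sketch below shows the naive brute-force baseline that such work typically improves on, not the thesis's own method. It tries every ordered assignment of candidate pins to TCK, TMS and TDO. After a TAP reset, a device that implements IDCODE shifts that register out on TDO, and IEEE 1149.1 fixes its bit 0 to 1. The heuristic that rejects all-zero or all-one words is an assumption of this sketch, and the pin driving and sampling, which is hardware-specific, is omitted.

```python
from itertools import permutations
from math import perm
from typing import NamedTuple


class IdCode(NamedTuple):
    version: int       # bits 31..28
    part_number: int   # bits 27..12
    manufacturer: int  # bits 11..1 (JEDEC manufacturer identity)


def candidate_assignments(pins: list[int]):
    """Yield every ordered choice of distinct pins for (TCK, TMS, TDO)."""
    return permutations(pins, 3)


def plausible_idcode(word: int) -> bool:
    """Heuristic filter for a 32-bit word shifted out of a candidate TDO pin."""
    word &= 0xFFFFFFFF
    if word in (0x00000000, 0xFFFFFFFF):  # assumed stuck-low or floating-high line
        return False
    return (word & 1) == 1  # IEEE 1149.1 fixes bit 0 of an IDCODE to 1


def decode_idcode(word: int) -> IdCode:
    """Split a 32-bit IDCODE into its version, part number and manufacturer fields."""
    return IdCode(
        version=(word >> 28) & 0xF,
        part_number=(word >> 12) & 0xFFFF,
        manufacturer=(word >> 1) & 0x7FF,
    )


print(perm(8, 3), perm(8, 4))  # 336 1680: assignments on 8 pins without / with TDI
print(plausible_idcode(0x4BA00477), decode_idcode(0x4BA00477))
```

The count grows as n·(n-1)·(n-2) for three signals, or n·(n-1)·(n-2)·(n-3) once TDI is included. That growth is the cost a more efficient discovery method has to cut.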

Slideshare.Net/Scorlosquet/Drupal-As-A-Semantic-Web-Platform

Linked Data Publishing with Drupal
Joachim Neubert, ZBW – German National Library of Economics, Leibniz Information Centre for Economics
SWIB13 Workshop, Hamburg, Germany, 25.11.2013
ZBW is a member of the Leibniz Association.

My background
• Scientific software developer at ZBW – German National Library of Economics, mainly concerned with Linked Open Data and knowledge organization systems and services
• Published the 20th Century Press Archives in 2010, with some 100,000 digitized newspaper articles in dossiers (http://zbw.eu/beta/p20, custom application written in Perl)
• Recently published a repository of ZBW Labs projects, basically project descriptions and a blog (http://zbw.eu/labs, Drupal-based)

Workshop Agenda – Part 1
1) Drupal 7 as a Content Management System: Linked Data by Default
   Hands-on: Get familiar with Drupal and its default RDF mappings
2) Using Drupal 7 as a Framework for Content Management
   Hands-on: Create a custom content type and map it to RDF

Workshop Agenda – Part 2
• Produce other RDF serialization formats: RDF/XML, Turtle, N-Triples, JSON-LD
• Create a SPARQL endpoint from your Drupal site
• Cool URIs
• Create out-links with Web Taxonomy
• Current limitations of RDF/LD support in Drupal 7
• Outlook on Drupal 8

Drupal as a CMS (Content Management System) ready for RDF and Linked Data

Why linked-data-enhanced publishing at all?
• Differentiate the subjects of your web pages and their attributes
• Thus, foster data reuse in third-party services and applications
  – Mashups
  – Search engines
• Create meaningful
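The agenda items on mapping a content type to RDF and producing other serializations boil down to a table from content fields to predicates plus a serializer. The sketch below illustrates that idea in Python. It is not Drupal's API: the mapping is hypothetical and only loosely modelled on Drupal 7's default article mapping (sioc:Item and foaf:Document types, dc:title, dc:date), so check the rdf module for the actual defaults. It renders one node as Turtle, and unmapped fields are left out.

```python
# Hypothetical field-to-predicate mapping, loosely modelled on Drupal 7's default article mapping.
PREFIXES = {
    "dc": "http://purl.org/dc/terms/",
    "sioc": "http://rdfs.org/sioc/ns#",
    "foaf": "http://xmlns.com/foaf/0.1/",
}
RDF_TYPES = ["sioc:Item", "foaf:Document"]
FIELD_PREDICATES = {"title": "dc:title", "created": "dc:date"}


def turtle_string(value: str) -> str:
    """Quote a value as a Turtle string literal, escaping backslashes, quotes and newlines."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return f'"{escaped}"'


def node_to_turtle(uri: str, fields: dict[str, str]) -> str:
    """Serialize one content item as Turtle; fields without a mapping are left out."""
    header = [f"@prefix {p}: <{ns}> ." for p, ns in PREFIXES.items()]
    statements = [f"<{uri}> a {', '.join(RDF_TYPES)}"]
    for field, value in fields.items():
        predicate = FIELD_PREDICATES.get(field)
        if predicate is not None:
            statements.append(f"    {predicate} {turtle_string(value)}")
    return "\n".join(header) + "\n\n" + " ;\n".join(statements) + " ."


print(node_to_turtle("http://example.org/node/1",
                     {"title": "Hello Linked Data", "created": "2013-11-25", "body": "..."}))
```

Swapping `node_to_turtle` for an N-Triples or JSON-LD writer over the same mapping is what "other serialization formats" amounts to. The mapping table is the part a site builder edits when creating a custom content type.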

A Confused Tester in Agile World … QA a Liability or an Asset

A CONFUSED TESTER IN AGILE WORLD … QA A LIABILITY OR AN ASSET
THIS IS A WORK OF FACTS & FINDINGS BASED ON TRUE STORIES OF ONE & MANY TESTERS!!
Presented By Ashish Kumar

WHAT’S AHEAD
• A STORY OF TESTING.
• FROM THE MIND OF A CONFUSED TESTER.
• FEW CASE STUDIES.
• CHALLENGES IDENTIFIED.
• SURVEY STUDIES.
• GLOBAL RESPONSES.
• SOLUTION APPROACH.
• PRINCIPLES AND PRACTICES.
• CONCLUSION & RECAP.
• Q & A.

A STORY OF TESTING IN AGILE… HAVE YOU HEARD ANY OF THESE??
• YOU DON’T NEED A DEDICATED SOFTWARE TESTING TEAM ON YOUR AGILE TEAMS
• IF WE HAVE BDD, ATDD, TDD, UI AUTOMATION, UNIT TESTS >> WHAT IS THE NEED FOR MANUAL TESTING??
• WE WANT 100% AUTOMATION IN THIS PROJECT
• TESTING IS BECOMING A BOTTLENECK AND THE REASON FOR SPRINT FAILURE
• REPEATING REGRESSION IS A BIG TASK AND AN OVERHEAD
• MICROSOFT HAS NO TESTERS, NOT EVEN GOOGLE, FACEBOOK AND CISCO

• 15K+ DEVELOPERS / 4K+ PROJECTS UNDER ACTIVE DEVELOPMENT / 50% CODE CHANGES PER MONTH.
• 5500+ SUBMISSIONS PER DAY ON AVERAGE
• 20+ SUSTAINED CODE CHANGES/MIN WITH 60+ PEAKS
• 75+ MILLION TEST CASES RUN PER DAY.
• DEVELOPERS OWN TESTING AND DEVELOPERS OWN QUALITY.
• GOOGLE HAVE PEOPLE WHO COULD CODE AND WANTED TO APPLY THAT SKILL TO THE DEVELOPMENT OF TOOLS, INFRASTRUCTURE, AND TEST AUTOMATION.

• IN A “MOBILE-FIRST AND CLOUD-FIRST WORLD.”
• THE EFFORT, KNOWN AS AGILE SOFTWARE DEVELOPMENT, IS DESIGNED TO LOWER COSTS AND HONE OPERATIONS AS THE COMPANY FOCUSES ON BUILDING CLOUD AND MOBILE SOFTWARE, SAY ANALYSTS
• MR. NADELLA TOLD BLOOMBERG THAT IT MAKES MORE SENSE TO HAVE DEVELOPERS TEST & FIX BUGS INSTEAD OF A SEPARATE TEAM OF TESTERS TO BUILD CLOUD SOFTWARE.
• SUCH AN APPROACH, A DEPARTURE FROM THE COMPANY’S TRADITIONAL PRACTICE OF DIVIDING

A User-Friendly Interface to Browse and Find DOAP Projects with Doap:Store

Alexandre Passant
Université Paris IV Sorbonne, Laboratoire LaLICC, Paris, France

1 Motivation
The DOAP [2] vocabulary is now widely used by people and organizations to describe their projects using Semantic Web standards. Yet, since DOAP files are spread around the Web, there is no easy way to find a project by its metadata. Recently, Ping The Semantic Web (PTSW) and the Semantic Radar plugin for Firefox introduced a new way to discover Semantic Web documents [1]: by browsing the Web, users ping the PTSW service so that it can maintain a continuously updated list of Semantic Web document URIs.

Thus, the idea of doap:store (http://doapstore.org) is to provide a user-friendly interface, easily accessible to non-RDF-aware users, to find and browse DOAP projects, using PTSW as a provider of data sources. This way, users do not have to register to promote a project, as in freshmeat or related services, but just need to publish some DOAP files on their websites to benefit from this distributed architecture. doap:store is the first implemented service using PTSW data sources to provide such browsing and querying features.

2 Architecture
doap:store involves three main components:
– A crawler: running hourly, a tiny script parses the list of the latest DOAP pings received by PTSW and then puts each related RDF file into a triple store;
– A triple store: the core of the system, storing the RDF files retrieved by the crawler and providing SPARQL capabilities to the user interface that is plugged into the endpoint;
– A user interface: a simple interface offering a list of the latest retrieved projects, a case-insensitive tag cloud of programming languages, and a search engine to find DOAP projects by various criteria in an easy way.
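The case-insensitive tag cloud in the user interface can be pictured as a SPARQL query against the triple store followed by a case-folded count. This is a minimal sketch under assumptions: the query is one plausible way to fetch the values (the endpoint call itself is not shown), and the input list stands in for its results; it is not doap:store's actual code.

```python
from collections import Counter

# A query a UI might send to the triple store's SPARQL endpoint (endpoint call not shown).
LANGUAGES_QUERY = """
PREFIX doap: <http://usefulinc.com/ns/doap#>
SELECT ?lang WHERE {
  ?project a doap:Project ;
           doap:programming-language ?lang .
}
"""


def language_tag_cloud(languages: list[str]) -> dict[str, int]:
    """Count programming-language values case-insensitively, most frequent first."""
    counts = Counter(lang.strip().casefold() for lang in languages if lang.strip())
    return dict(counts.most_common())


print(language_tag_cloud(["Python", "python ", "PHP", "Java", "php", "PYTHON"]))
# {'python': 3, 'php': 2, 'java': 1}
```

Folding case before counting is what keeps "PHP" and "php" from appearing as two separate tags. The counts can then drive the font size of each tag.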

Acceptance Testing Based on Relationships Among Use Cases Susumu Kariyuki, Atsuto Kubo Mikio Suzuki Hironori Washizaki, Aoyama Media Laboratory TIS Inc

5th World Congress for Software Quality – Shanghai, China – November 2011

Acceptance Testing based on Relationships among Use Cases
Susumu Kariyuki, Hironori Washizaki, Yoshiaki Fukazawa – Waseda University, Tokyo, Japan
Atsuto Kubo – Aoyama Media Laboratory, Tokyo, Japan
Mikio Suzuki – TIS Inc., Tokyo, Japan

Abstract
In software development using use cases, such as use-case-driven object-oriented development, test scenarios for the acceptance test can be built from use cases. However, manually listing the execution flows of complex use cases sometimes results in incomplete coverage of the possible execution flows, particularly if the relationships between use cases are complicated. Moreover, the lack of a widely accepted coverage definition for the acceptance test makes the judgment of acceptance test completion ambiguous. We propose a definition of acceptance test coverage using use cases, and an automated procedure that identifies the execution flows of use cases and generates test scenarios and skeleton code satisfying a specified coverage condition. Our technique can reduce incomplete coverage of the execution flows of use cases and create objective standards for judging acceptance test completion. Moreover, we expect the automated generation of test scenarios and skeleton code to improve the efficiency of acceptance tests.

1. Introduction
An acceptance test is carried out by users who take the initiative in confirming whether the developed system precisely meets their requirements [2]. Use cases have been used as a method of representing functional requirements [3]. Use cases are descriptions of the external functions of a system from the viewpoint of the users and external systems (both are called actors).
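The paper's own coverage definition is not given in this excerpt. As a hedged illustration of the underlying idea, the sketch below models a use case's steps as a directed graph and enumerates every loop-free path from start to end, treating each path as one candidate test scenario (a simple all-paths criterion). The "Withdraw cash" use case and its step names are hypothetical. An included use case could, under this model, be handled by splicing its steps into the graph before enumeration.

```python
# A use case's steps as a directed graph: step -> possible next steps.
Flow = dict[str, list[str]]

# Hypothetical "Withdraw cash" use case with one basic flow and two alternative flows.
WITHDRAW: Flow = {
    "insert card": ["enter PIN"],
    "enter PIN": ["select amount", "PIN rejected"],
    "select amount": ["dispense cash", "insufficient funds"],
    "dispense cash": ["end"],
    "insufficient funds": ["end"],
    "PIN rejected": ["end"],
}


def execution_flows(flow: Flow, start: str, end: str) -> list[list[str]]:
    """Enumerate every simple path from start to end; each path is one test scenario."""
    paths: list[list[str]] = []

    def walk(step: str, path: list[str]) -> None:
        if step == end:
            paths.append(path)
            return
        for nxt in flow.get(step, []):
            if nxt not in path:  # ignore loops so the enumeration terminates
                walk(nxt, path + [nxt])

    walk(start, [start])
    return paths


for scenario in execution_flows(WITHDRAW, "insert card", "end"):
    print(" -> ".join(scenario))
```

The three printed scenarios are the basic flow and the two alternative flows. Comparing the scenarios actually tested against this list gives an objective completion measure of the kind the abstract argues for.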