ISCR Subcontract Research Summaries

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

2017 HPC Annual Report Team Would Like to Acknowledge the Invaluable Assistance Provided by John Noe

sandia national laboratories 2017 HIGH PERformance computing The 2017 High Performance Computing Annual Report is dedicated to John Noe and Dino Pavlakos. Building a foundational framework Editor in high performance computing Yasmin Dennig Contributing Writers Megan Davidson Sandia National Laboratories has a long history of significant contributions to the high performance computing Mattie Hensley community and industry. Our innovative computer architectures allowed the United States to become the first to break the teraflop barrier—propelling us to the international spotlight. Our advanced simulation and modeling capabilities have been integral in high consequence US operations such as Operation Burnt Frost. Strong partnerships with industry leaders, such as Cray, Inc. and Goodyear, have enabled them to leverage our high performance computing capabilities to gain a tremendous competitive edge in the marketplace. Contributing Editor Laura Sowko As part of our continuing commitment to provide modern computing infrastructure and systems in support of Sandia’s missions, we made a major investment in expanding Building 725 to serve as the new home of high performance computer (HPC) systems at Sandia. Work is expected to be completed in 2018 and will result in a modern facility of approximately 15,000 square feet of computer center space. The facility will be ready to house the newest National Nuclear Security Administration/Advanced Simulation and Computing (NNSA/ASC) prototype Design platform being acquired by Sandia, with delivery in late 2019 or early 2020. This new system will enable continuing Stacey Long advances by Sandia science and engineering staff in the areas of operating system R&D, operation cost effectiveness (power and innovative cooling technologies), user environment, and application code performance. -

NNSA — Weapons Activities

Corporate Context for National Nuclear Security Administration (NS) Programs This section on Corporate Context that is included for the first time in the Department’s budget is provided to facilitate the integration of the FY 2003 budget and performance measures. The Department’s Strategic Plan published in September 2000 is no longer relevant since it does not reflect the priorities laid out in President Bush’s Management Agenda, the 2001 National Energy Policy, OMB’s R&D project investment criteria or the new policies that will be developed to address an ever evolving and challenging terrorism threat. The Department has initiated the development of a new Strategic Plan due for publication in September 2002, however, that process is just beginning. To maintain continuity of our approach that links program strategic performance goals and annual targets to higher level Departmental goals and Strategic Objectives, the Department has developed a revised set of Strategic Objectives in the structure of the September 2000 Strategic Plan. For more than 50 years, America’s national security has relied on the deterrent provided by nuclear weapons. Designed, built, and tested by the Department of Energy (DOE) and its predecessor agencies, these weapons helped win the Cold War, and they remain a key component of the Nation’s security posture. The Department’s National Nuclear Security Administration (NNSA) now faces a new and complex set of challenges to its national nuclear security missions in countering the threats of the 21st century. One of the most critical challenges is being met by the Stockpile Stewardship program, which is maintaining the effectiveness of our nuclear deterrent in the absence of underground nuclear testing. -

Kull: Llnl's Asci Inertial Confinement Fusion Simulation Code

KULL: LLNL'S ASCI INERTIAL CONFINEMENT FUSION SIMULATION CODE James A. Rathkopf, Douglas S. Miller, John M. Owen, Linda M. Stuart, Michael R. Zika, Peter G. Eltgroth, Niel K. Madsen, Kathleen P. McCandless, Paul F. Nowak, Michael K. Nemanic, Nicholas A. Gentile, and Noel D. Keen Lawrence Livermore National Laboratory P.O. Box 808, L-18 Livermore, California 94551-0808 [email protected]; [email protected]; [email protected]; [email protected]; [email protected]; [email protected]; [email protected]; [email protected]; [email protected]; [email protected]; [email protected]; [email protected] Todd S. Palmer Department of Nuclear Engineering Oregon State University Corvallis, Oregon 97331 [email protected] ABSTRACT KULL is a three dimensional, time dependent radiation hydrodynamics simulation code under development at Lawrence Livermore National Laboratory. A part of the U.S. Department of Energy’s Accelerated Strategic Computing Initiative (ASCI), KULL’s purpose is to simulate the physical processes in Inertial Confinement Fusion (ICF) targets. The National Ignition Facility, where ICF experiments will be conducted, and ASCI are part of the experimental and computa- tional components of DOE’s Stockpile Stewardship Program. This paper provides an overview of ASCI and describes KULL, its hydrodynamic simulation capability and its three methods of simulating radiative transfer. Particular emphasis is given to the parallelization techniques essen- tial to obtain the performance required of the Stockpile Stewardship Program and to exploit the massively parallel processor machines that ASCI is procuring. 1. INTRODUCTION With the end of underground nuclear testing, the United States must rely solely on non-nuclear experiments and numerical simulations, together with archival underground nuclear test data, to certify the continued safety, performance, and reliability of the nation’s nuclear stockpile. -

Delivering Insight: the History of the Accelerated Strategic Computing

Lawrence Livermore National Laboratory Computation Directorate Dona L. Crawford Computation Associate Director Lawrence Livermore National Laboratory 7000 East Avenue, L-559 Livermore, CA 94550 September 14, 2009 Dear Colleague: Several years ago, I commissioned Alex R. Larzelere II to research and write a history of the U.S. Department of Energy’s Accelerated Strategic Computing Initiative (ASCI) and its evolution into the Advanced Simulation and Computing (ASC) Program. The goal was to document the first 10 years of ASCI: how this integrated and sustained collaborative effort reached its goals, became a base program, and changed the face of high-performance computing in a way that independent, individually funded R&D projects for applications, facilities, infrastructure, and software development never could have achieved. Mr. Larzelere has combined the documented record with first-hand recollections of prominent leaders into a highly readable, 200-page account of the history of ASCI. The manuscript is a testament to thousands of hours of research and writing and the contributions of dozens of people. It represents, most fittingly, a collaborative effort many years in the making. I’m pleased to announce that Delivering Insight: The History of the Accelerated Strategic Computing Initiative (ASCI) has been approved for unlimited distribution and is available online at https://asc.llnl.gov/asc_history/. Sincerely, Dona L. Crawford Computation Associate Director Lawrence Livermore National Laboratory An Equal Opportunity Employer • University of California • P.O. Box 808 Livermore, California94550 • Telephone (925) 422-2449 • Fax (925) 423-1466 Delivering Insight The History of the Accelerated Strategic Computing Initiative (ASCI) Prepared by: Alex R. -

High Speed Computing LANL • LLNL

LA-13474-C Conference Proceedings from the Conference on High Speed Computing LANL • LLNL The Art of High Speed Computing April 20–23, 1998 Architecture Algorithms Language Salishan Lodge Gleneden Beach, Oregon Los Alamos NATIONAL LABORATORY Los Alamos National Laboratory is operated by the University of California for the United States Department of Energy under contract W-7405-ENG-36. Photocomposition by Wendy Burditt, Group CIC-1 Special thanks to Orlinie Velasquez and Verna VanAken for coordinating this effort. An Affirmative Action/Equal Opportunity Employer This report was prepared as an account of work sponsored by an agency of the United States Government. Neither The Regents of the University of California, the United States Government nor any agency thereof, nor any of their employees, makes any warranty, express or implied, or assumes any legal liability or responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product, or process disclosed, or represents that its use would not infringe privately owned rights. Reference herein to any specific commercial product, process, or service by trade name, trademark, manufacturer, or otherwise, does not necessarily constitute or imply its endorsement, recommendation, or favoring by The Regents of the University of California, the United States Government, or any agency thereof. The views and opinions of authors expressed herein do not necessarily state or reflect those of The Regents of the University of California, the United States Government, or any agency thereof. Los Alamos National Laboratory strongly supports academic freedom and a researcher's right to publish; as an institution, however, the Laboratory does not endorse the viewpoint of a publication or guarantee its technical correctness. -

MICROCOMP Output File

S. HRG. 107–355, PT. 7 DEPARTMENT OF DEFENSE AUTHORIZATION FOR APPROPRIATIONS FOR FISCAL YEAR 2002 HEARINGS BEFORE THE COMMITTEE ON ARMED SERVICES UNITED STATES SENATE ONE HUNDRED SEVENTH CONGRESS FIRST SESSION ON S. 1416 AUTHORIZING APPROPRIATIONS FOR FISCAL YEAR 2002 FOR MILITARY ACTIVITIES OF THE DEPARTMENT OF DEFENSE, FOR MILITARY CON- STRUCTION, AND FOR DEFENSE ACTIVITIES OF THE DEPARTMENT OF ENERGY, TO PRESCRIBE PERSONNEL STRENGTHS FOR SUCH FISCAL YEAR FOR THE ARMED FORCES, AND FOR OTHER PURPOSES PART 7 STRATEGIC APRIL 25; JUNE 26; JULY 11, 25, 2001 ( Printed for the use of the Committee on Armed Services VerDate 11-SEP-98 17:29 Sep 06, 2002 Jkt 000000 PO 00000 Frm 00001 Fmt 6011 Sfmt 6011 75352.CON SARMSER2 PsN: SARMSER2 DEPARTMENT OF DEFENSE AUTHORIZATION FOR APPROPRIATIONS FOR FISCAL YEAR 2002—Part 7 STRATEGIC VerDate 11-SEP-98 17:29 Sep 06, 2002 Jkt 000000 PO 00000 Frm 00002 Fmt 6019 Sfmt 6019 75352.CON SARMSER2 PsN: SARMSER2 S. HRG. 107–355, PT. 7 DEPARTMENT OF DEFENSE AUTHORIZATION FOR APPROPRIATIONS FOR FISCAL YEAR 2002 HEARINGS BEFORE THE COMMITTEE ON ARMED SERVICES UNITED STATES SENATE ONE HUNDRED SEVENTH CONGRESS FIRST SESSION ON S. 1416 AUTHORIZING APPROPRIATIONS FOR FISCAL YEAR 2002 FOR MILITARY ACTIVITIES OF THE DEPARTMENT OF DEFENSE, FOR MILITARY CON- STRUCTION, AND FOR DEFENSE ACTIVITIES OF THE DEPARTMENT OF ENERGY, TO PRESCRIBE PERSONNEL STRENGTHS FOR SUCH FISCAL YEAR FOR THE ARMED FORCES, AND FOR OTHER PURPOSES PART 7 STRATEGIC APRIL 25; JUNE 26; JULY 11, 25, 2001 ( Printed for the use of the Committee on Armed Services U.S. -

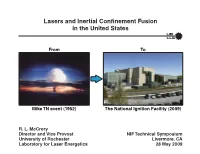

Lasers and Inertial Confinement Fusion in the United States

Lasers and Inertial Confinement Fusion in the United States From To Mike TN event (1952) The National Ignition Facility (2009) R. L. McCrory Director and Vice Provost NIF Technical Symposium University of Rochester Livermore, CA Laboratory for Laser Energetics 28 May 2009 Summary The understanding of inertial confinement fusion( ICF) has grown as successively larger lasers have been built • The ICF era began with a successful thermonuclear test in 1952. • The demonstration of the laser in 1960 began the quest to develop ICF and, eventually, create thermonuclear fusion ignition in the laboratory. • ICF lasers have continued to become larger and more technically sophisticated. • The NIF represents the next great leap in laser development – Congratulations to Ed Moses and the entire team! • The improved understanding of ICF physics provides confidence for achieving ignition on the NIF – Nova technical contract – OMEGA cryogenic target implosions • New laser and target physics concepts continue to advance the ICF program in the United States The LLNL/LLE collaboration in the development of ICF lasers and target physics has brought the U.S. to the next step—poised for ignition on the NIF. I1859 The successful test of the 10-MT Mike thermonuclear device began the Inertial Confinement Fusion Era • Stanislaw Ulam and Edward Teller developed the principle of a radiation implosion in January 1951. • On 31 October 1952, Mike exploded with a yield of 10.4 megatons on the Enewetak Atoll. • The island of Elugelap disappeared. • This successful test ushered in the era of multimegaton nuclear weapons. • It was the first step in developing ICF. I1860 From http://www.lanl.gov/history/postwar/ The demonstration of the laser spurred the development of ICF in the laboratory • T. -

House Report 105-851

U.S. NATIONAL SECURITY AND MILITARY/COMMERCIAL CONCERNS WITH THE PEOPLE’S REPUBLIC OF CHINA VOLUME I SELECT COMMITTEE UNITED STATES HOUSE OF REPRESENTATIVES U.S. NATIONAL SECURITY AND MILITARY/COMMERCIAL CONCERNS WITH THE PEOPLE’S REPUBLIC OF CHINA VOLUME I SELECT COMMITTEE UNITED STATES HOUSE OF REPRESENTATIVES 105TH CONGRESS REPORT HOUSE OF REPRESENTATIVES 2d Session } { 105-851 REPORT OF THE SELECT COMMITTEE ON U.S. NATIONAL SECURITY AND MILITARY/COMMERCIAL CONCERNS WITH THE PEOPLE’S REPUBLIC OF CHINA SUBMITTED BY MR. COX OF CALIFORNIA, CHAIRMAN January 3, 1999 — Committed to the Committee of the Whole House on the State of the Union and ordered to be printed (subject to declassification review) May 25, 1999 — Declassified, in part, pursuant to House Resolution 5, as amended, 106th Congress, 1st Session –––––––––– U.S. GOVERNMENT PRINTING OFFICE WASHINGTON : 1999 09-006 A NOTE ON REDACTION The Final Report of the Select Committee on U.S. National Security and Military/Commercial Concerns with the Peoples Republic of China was unanimously approved by the five Republicans and four Democrats who served on the Select Committee. This three-volume Report is a declassified, redacted version of the Final Report. The Final Report was classified Top Secret when issued on January 3, 1999, and remains so today. Certain source materials included in the Final Report were submitted to the Executive branch during the period August–December 1998 for declassification review in order to facilitate the production of a declassified report. The Select Committee sought declassification review of the entire report on January 3, 1999. The House of Representatives extended the life of the Select Committee for 90 days for the purpose of continuing to work with the Executive Branch to declassify the Final Report. -

WORKSHOP REPORT December 2008

Risk Management Techniques and Practice Workshop WORKSHOP REPORT December 2008 Compiled by Terri Quinn and Mary Zosel Lawrence Livermore National Laboratory LLNL-TR-409240 Workshop Steering Committee Arthur Bland, ORNL; Vince Dattoria, SC/ASCR/DOE HQ; Bill Kramer, LBNL; Sander Lee, NNSA/ASC/DOE HQ; Terri Quinn, LLNL (workshop chair); Randal Rheinheimer, LANL; Mark Seager, LLNL; Yukiko Sekine, SC/ASCR/DOE HQ; Jeffery Sims, ANL; Jon Stearly, SNL; and Mary Zosel, LLNL (host organizer) Workshop Group Chairs Ann Baker, ORNL; Robert Ballance, SNL; Kathlyn Boudwin, ORNL; Susan Coghlan, ANL; James Craw, LBNL; Candace Culhane, DoD; Kimberly Cupps, LLNL; Brent Draney, LBNL; Ira Goldberg, ANL; Patricia Kovatch, UTenn/NICS; Robert Pennington, NCSA; Kevin Regimbal, PNNL; Randal Rheinheimer, LANL; Gary Skouson, PNNL; Jon Stearly, SNL; and Manuel Vigil, LANL December 2008 This work performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under Contract DE-AC52-07NA27344. This document was prepared as an account of work sponsored by an agency of the United States government. Neither the United States government nor Lawrence Livermore National Security, LLC, nor any of their employees makes any warranty, expressed or implied, or assumes any legal liability or responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product, or process disclosed, or represents that its use would not infringe privately owned rights. Reference herein to any specific commercial product, process, or service by trade name, trademark, manufacturer, or otherwise does not necessarily constitute or imply its endorsement, recommendation, or favoring by the United States government or Lawrence Livermore National Security, LLC. -

Petawattpetawatt Thresholdthreshold

Livermore, California 94551 California Livermore, P.O. 808,L-664 Box LivermoreNationalLaboratoryLawrence Review Technology Science & December 1996 Lawrence Livermore National Laboratory CrossingCrossing thethe PetawattPetawatt ThresholdThreshold Also in this issue: Permit No.Permit 154 Livermore, CA Livermore, Nonprofit Org. Nonprofit U. S. Postage PAID • Stockpile Surveillance • Earth Core Studies Printed on recycled paper. • 3-D Computer Motion Modeling About the Cover December 1996 S&TR Staff December 1996 Lawrence Livermore Adjusting a diagnostic lens in Lawrence National Lawrence Livermore’s new ultrashort-pulse laser, called Laboratory SCIENTIFIC EDITOR Ravi Upadhye Livermore the Petawatt, is laser physicist Deanna National Pennington. World-record peak power of 1.25 Crossing the UBLICATION DITOR Laboratory petawatts (1.25 quadrillion watts) was reached Petawatt Threshold P E May 23, 1996. In this issue beginning on p. 4, Sue Stull we discuss the challenges and development of this extraordinary laser. WRITERS Bart Hacker, Arnie Heller, Dale Sprouse, Katie Walter, and Gloria Wilt 2 The Laboratory in the News ART DIRECTOR George Kitrinos 3 Commentary by E. Michael Campbell Opportunities for Science from the Petawatt Laser DESIGNERS Also in this issue: • Stockpile Surveillance Paul Harding, George Kitrinos, and • Earth Core Studies Features • 3-D Computer Motion Ray Marazzi Modeling 4 Crossing the Petawatt Threshold Cover photo: Bryan L. Quintard GRAPHIC ARTIST The new Petawatt laser may make it possible to achieve fusion using much Treva Carey less energy than currently envisioned. INTERNET DESIGNER 12 High Explosives in Stockpile Surveillance Indicate Constancy Kathryn Tinsley A well-established portion of the Stockpile Evaluation Program performs extensive tests on the high-explosive components and materials in COMPOSITOR Louisa Cardoza Livermore-designed nuclear weapons. -

Advanced Simulation and Computing FY11–12 Implementation Plan

Rev. 0 NA-ASC-120R-10-Vol.2-Rev.0-IP LLNL-TR-429026 Advanced Simulation and Computing FY11–12 Implementation Plan Volume 2, Rev. 0 May 5, 2010 FY11–FY12 ASC Implementation Plan, Vol. 2 Page i Rev. 0 This work performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under Contract DE-AC52-07NA27344. This document was prepared as an account of work sponsored by an agency of the United States government. Neither the United States government nor Lawrence Livermore National Security, LLC, nor any of their employees makes any warranty, expressed or implied, or assumes any legal liability or responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product, or process disclosed, or represents that its use would not infringe privately owned rights. Reference herein to any specific commercial product, process, or service by trade name, trademark, manufacturer, or otherwise does not necessarily constitute or imply its endorsement, recommendation, or favoring by the United States government or Lawrence Livermore National Security, LLC. The views and opinions of authors expressed herein do not necessarily state or reflect those of the United States government or Lawrence Livermore National Security, LLC, and shall not be used for advertising or product endorsement purposes. FY11–FY12 ASC Implementation Plan, Vol. 2 Page ii Rev. 0 Advanced Simulation and Computing FY11–12 IMPLEMENTATION PLAN Volume 2, Rev. 0 May 5, 2010 Approved by: Robert Meisner, NNSA ASC Program Director (acting) May 5, 2010 Signature Date Julia Phillips, SNL ASC Executive Signature Date Michel McCoy, LLNL ASC Executive Signature Date John Hopson, LANL ASC Executive Signature Date ASC Focal Point IP Focal Point Robert Meisner Atinuke Arowojolu NA 121.2 NA 121.2 Tele.: 202-586-0908 Tele.: 202-586-0787 FAX: 202-586-0405 FAX: 202-586-7754 [email protected] [email protected] FY11–FY12 ASC Implementation Plan, Vol. -

A Look Back on 30 Years of the Gordon Bell Prize

Research Paper The International Journal of High Performance Computing Applications 2017, Vol. 31(6) 469–484 A look back on 30 years of the Gordon ª The Author(s) 2017 Reprints and permissions: Bell Prize sagepub.co.uk/journalsPermissions.nav DOI: 10.1177/1094342017738610 journals.sagepub.com/home/hpc Gordon Bell1, David H Bailey2, Jack Dongarra4,5, Alan H Karp6 and Kevin Walsh3 Abstract The Gordon Bell Prize is awarded each year by the Association for Computing Machinery to recognize outstanding achievement in high-performance computing (HPC). The purpose of the award is to track the progress of parallel computing with particular emphasis on rewarding innovation in applying HPC to applications in science, engineering, and large-scale data analytics. Prizes may be awarded for peak performance or special achievements in scalability and time-to- solution on important science and engineering problems. Financial support for the US$10,000 award is provided through an endowment by Gordon Bell, a pioneer in high-performance and parallel computing. This article examines the evolution of the Gordon Bell Prize and the impact it has had on the field. Keywords High Performance Computing, HPC Cost-Performance, HPC Progress, HPC Recognition, HPPC Award. HPC Prize, Gordon Bell Prize, Computational Science, Technical Computing, Benchmarks, HPC special hardware 1. Introduction of authors associated with the prize-winning papers in recent years. The prize has also had a positive effect on the The Association for Computing Machinery’s (ACM) Gor- careers of many of the participants. don Bell Prize (ACM Gordon Bell Prize Recognizes Top Accomplishments in Running Science Apps on HPC, 2016; BELL Award Winners, n.d.).