Natural Language Generation from Structured Data

Recommended publications

-

Our Handbook Is a Continuation of Thoughtful, Candid, and Encouraging Engagement of That Deliberative Process

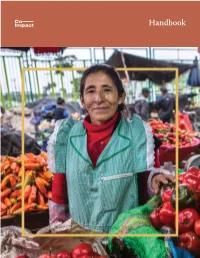

Handbook Front cover www.co-impact.org Luzmila Elba Rojas Morales sells fresh produce in the local municipality [email protected] of La Victoria. She is the president of the Asociación 20 de junio, which comprises 150 perishable produce vendors. The Association assists For the for the latest Co-Impact updates their members by engaging with the authorities to obtain permits to and announcements, please visit our website sell. If they don´t pay, they face the risk of being evicted. Luzmila’s www.co-impact.org, sign up for our email mailing list, organization is part of the National Network of Women and Men and be sure to follow us on Twitter and LinkedIn. Self-employed Workers (Red Nacional de Trabajadoras y Trabajadores Autoempleados, RENATTA). This Revised Edition of the Co-Impact Handbook published June 2021. Courtesy of Juan Arredondo/Getty Images/Images of Empowerment This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License. You are free to Share — copy and redistribute the material in any medium or format or Adapt — remix, transform, and build upon the material, under the following terms: a) Attribution — You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use and b) NonCommercial — You may not use the material for commercial purposes. Table of Contents Foreword and Acknowledgements 4 Purpose of this Handbook 5 Glossary 6 SECTION ONE Who -

ACCOUNTING and AUDITING in ROMAN SOCIETY Lance Elliot

ACCOUNTING AND AUDITING IN ROMAN SOCIETY Lance Elliot LaGroue A dissertation thesis submitted to the faculty at the University of North Carolina at Chapel Hill in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Department of History. Chapel Hill 2014 Approved by: Richard Talbert Fred Naiden Howard Aldrich Terrence McIntosh © 2014 Lance Elliot LaGroue ALL RIGHTS RESERVED II ABSTRACT Lance LaGroue: Accounting and Auditing in Roman Society (Under the direction of Richard Talbert) This dissertation approaches its topic from the pathbreaking dual perspective of a historian and of an accountant. It contributes to our understanding of Roman accounting in several notable ways. The style and approach of Roman documents are now categorized to reflect differing levels of complexity and sophistication. With the aid of this delineation, and by comparison with the practices of various other premodern societies, we can now more readily appreciate the distinct attributes present at each level in Roman accounting practices. Additionally, due to the greater accessibility of Roman accounting documents in recent years – in particular, through John Matthews’ work on the Journey of Theophanes, Dominic Rathbone’s study of the Heroninos archive, and the reading of the Vindolanda tablets -- it becomes easier to appreciate such differences among the few larger caches of accounting documents. Moreover, the dissertation seeks to distinguish varying grades of accountant. Above all, it emphasizes the need to separate the functions of accounting and auditing, and to gauge the essential characteristics and roles of both. In both regards, it is claimed, the Roman method showed competency. The dissertation further shows how economic and accounting theory has influenced perceptions about Roman accounting practices. -

Beaweblogic Portal™®

BEAWebLogic Portal™® White Paper: Content Personalization Version 8.1 Document Revised: May 2004 By: Greg Smith Copyright Copyright © 2004 BEA Systems, Inc. All Rights Reserved. Restricted Rights Legend This software and documentation is subject to and made available only pursuant to the terms of the BEA Systems License Agreement and may be used or copied only in accordance with the terms of that agreement. It is against the law to copy the software except as specifically allowed in the agreement. This document may not, in whole or in part, be copied, photocopied, reproduced, translated, or reduced to any electronic medium or machine readable form without prior consent, in writing, from BEA Systems, Inc. Use, duplication or disclosure by the U.S. Government is subject to restrictions set forth in the BEA Systems License Agreement and in subparagraph (c)(1) of the Commercial Computer Software-Restricted Rights Clause at FAR 52.227-19; subparagraph (c)(1)(ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013, subparagraph (d) of the Commercial Computer Software--Licensing clause at NASA FAR supplement 16-52.227-86; or their equivalent. Information in this document is subject to change without notice and does not represent a commitment on the part of BEA Systems. THE SOFTWARE AND DOCUMENTATION ARE PROVIDED “AS IS” WITHOUT WARRANTY OF ANY KIND INCLUDING WITHOUT LIMITATION, ANY WARRANTY OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. FURTHER, BEA Systems DOES NOT WARRANT, GUARANTEE, OR MAKE ANY REPRESENTATIONS REGARDING THE USE, OR THE RESULTS OF THE USE, OF THE SOFTWARE OR WRITTEN MATERIAL IN TERMS OF CORRECTNESS, ACCURACY, RELIABILITY, OR OTHERWISE. -

UI Customization and Translation

LISTSERV Maestro Admin Tech Doc 17 UI Customization and Translation January 24, 2020 | © L-Soft Sweden AB lsoft.com This document is a LISTSERV Maestro Admin Tech Doc. Each admin tech doc documents a certain facet of the LISTERV Maestro administration on a technical level. This document is number 17 of the collection of admin tech docs and explains the topic “UI Customization and Translation”. Last updated for LISTSERV Maestro 9.0-1 on January 24, 2020. The information in this document also applies to later LISTSERV Maestro versions, unless a newer version of the document supersedes it. Information in this document is subject to change without notice. Companies, names, and data used in examples herein are fictitious unless otherwise noted. L-Soft Sweden AB does not endorse or approve the use of any of the product names or trademarks appearing in this document. Permission is granted to copy this document, at no charge and in its entirety, provided that the copies are not used for commercial advantage, that the source is cited, and that the present copyright notice is included in all copies so that the recipients of such copies are equally bound to abide by the present conditions. Prior written permission is required for any commercial use of this document, in whole or in part, and for any partial reproduction of the contents of this document exceeding 50 lines of up to 80 characters, or equivalent. The title page, table of contents and index, if any, are not considered part of the document for the purposes of this copyright notice, and can be freely removed if present. -

A Long Overdue Oppy News Email, Oppy Facts Sir Hubert

Oppy News Email #3 OPPY NEW YEAR! 28/01/18 Dear Fellow Queenslanders: a long overdue Oppy News Email, Oppy Facts Sir Hubert Ferdinand Opperman was Australia's most famous endurance cyclist. He was the Patron of Audax Australia until his death at 91 in 1996, while happily riding his exercise bike. The Fleche Opperman All Day Trial (The "Oppy") is named after him. (Casually Deliberate: Thy name is Oppy) Opperman impressed at the 1928 Tour de France, but it was his performance one month later at the Bol d'Or (Golden Bowl) — a non-stop race over 24 hours — that galvanised his reputation. During the event, his rivals sabotaged his bicycles, filing the chains so thin that they snapped under pressure. Forced to ride a heavy touring bike while his racing machines were repaired, Oppy lost valuable distance to his opponents. Enraged at the foul play, he cycled on, refusing to leave the track in order to relieve himself. After hours of non-stop riding, spectators saw a golden stream flowing from Oppy’s wheel onto the track and into the path of his rivals. They roared in support and chanted "Oppy" all the way to his eventual victory. Oppy had completed just over 950 km in 24 hours, but when he tried to stop at the end of the race Bruce Small (Oppy's coach & later the Mayor of the Gold Coast) convinced him to continue riding to break the 1000 km record. The crowd chanted into the night, "Allez Oppy, Allez!" In 1928 he was voted Europe's most popular sportsman. -

Batch Control V.8.20

zenon manual Batch Control v.8.20 © 2020 Ing. Punzenberger COPA-DATA GmbH All rights reserved. Distribution and/or reproduction of this document or parts thereof in any form are permitted solely with the written permission of the company COPA-DATA. Technical data is only used for product description and are not guaranteed properties in the legal sense. Subject to change, technical or otherwise. Contents 1 Welcome to COPA-DATA help ............................................................................................................... 7 2 Batch Control .............................................................................................................................................. 7 3 Introduction ................................................................................................................................................. 9 4 Terminology ............................................................................................................................................... 11 5 Configure and apply batch recipes ...................................................................................................... 14 6 Engineering in the Editor ........................................................................................................................ 15 6.1 Unit placeholders: Concept and how it works .................................................................................... 16 6.1.1 Units - unit classes - unit instances .............................................................................................................. -

Funding Headed for Revote

WWW.KEYSNET.COM SATURDAY,SEPTEMBER 14, 2013 VOLUME 60, NO. 74 ● 25 CENTS MARATHON Funding headed for revote By RYAN McCARTHY decreased Original Marathon TDC confirms reconsideration board’s desire to reconsider ter to the Monroe County [email protected] Seafood Festival funding 40 its decision. Commission, urging it to percent, from $50,000 to of festival money coming Oct. 9 Many have criticized the overturn the DAC’s deci- It appears the Monroe $30,000, while tripling the DAC 3 board in the wake of sion. The commission has County Tourist Development Middle Keys group’s alloca- makes one stop in Marathon Oct. 9 at Hawks Cay Resort its Aug. 14 vote, and final say on DAC funding Council’s Middle Keys adviso- tion to Fantasy Fest in Key at the Island Fish Co. on Duck Key. Marathon City Councilman recommendations. ry committee has had a change West from $10,000 to $30,000. Several sources have told Greater Marathon Chamber Dick Ramsay put a discus- It was clear during of heart about cutting funding On a split vote at last the Keynoter that at least of Commerce Chief Executive sion item on Tuesday’s regu- Tuesday’s discussion that for its marquee events. month’s meeting, the DAC 3 two members of the nine- Officer Daniel Samess told the lar meeting agenda to dis- many believe the Aug. 14 According to the TDC, the committee also committed member DAC board wish to Keynoter that TDC Director cuss it further. vote will be overturned. DAC 3 board has decided to $20,000 to the annual Key take up a new vote at its next Harold Wheeler sent him an e- Ramsay wanted the coun- revisit its decision that West Poker Run, which regular meeting, scheduled mail last week confirming the cil to approve sending a let- See TDC, 3A HOME PESTS SANCTUARY Millipedes invade Keys homes — again Invasives first arrived in Key West about 2001 By KEVIN WADLOW Senior Staff Writer [email protected] They brazenly wander inside and crawl underfoot across tile floors. -

FEMA/EMI Courseware Template Instructions Version 2.0 March 2010

FEMA/EMI Courseware Template Instructions Version 2.0 March 2010 FEMA Table of Contents Table of Contents .........................................................................................................................1 Creating a Course: The Basic Structure ......................................................................................3 Installing and Using the Template Files ....................................................................................5 index.htm ..................................................................................................................................7 indexMenu.htm..........................................................................................................................8 mainCode.js ..............................................................................................................................9 glossary.htm ............................................................................................................................10 Using the Templates ...................................................................................................................12 MainContent ............................................................................................................................13 MainContentFluffRight ............................................................................................................14 MainContentBigVideo .............................................................................................................15 -

Pseudoanonymous Influence Operations and Networked Social

Journal of Design and Science • Issue 6: Unreal On the Internet, Nobody Knows You’re a Bot: Pseudoanonymous Inuence Operations and Networked Social Movements Brian Friedberg, Joan Donovan Published on: Aug 10, 2019 DOI: 10.21428/7808da6b.45957184 License: Creative Commons Attribution 4.0 International License (CC-BY 4.0) On the Internet, Nobody Knows You’re a Bot: Pseudoanonymous Inuence Operations and Journal of Design and Science • Issue 6: Unreal Networked Social Movements Brian Friedberg is an investigative ethnographer whose work focuses on the impacts that alternative media, anonymous communities and popular cultures have on political communication and organization. Brian works with Dr. Joan Donovan, who heads one of the world’s leading teams focused on understanding and combating online disinformation and extremism, based at Harvard’s Shorenstein Center on Media, Politics and Public Policy. In this essay, Brian and Joan explore a challenge the Unreal has presented for study of activism online, the question of whether an online actor is real or synthetic. In this essay, they explore what happens when politically motivated humans impersonate vulnerable people or populations online to exploit their voices, positionality and power. — Ethan Zuckerman, Editor It is not true that in order to live one has to believe in one's own existence. There is no necessity to that. No matter what, our consciousness is never the echo of our own reality, of an existence set in "real time." But rather it is its echo in "delayed time," the screen of the dispersion of the subject and of its identity — only in our sleep, our unconscious, and our death are we identical to ourselves. -

Instructions for Completing the Oregon Report of Live Birth on Paper

Center for Health Statistics Center for Public Health Practice Public Health Division Oregon Health Authority Instructions for completing the Oregon Report of Live Birth on paper These instructions are intended for births occurring outside of a licensed facility reported on paper reports of live birth (Certificate of Live Birth Form 45-1). Mailing Address Oregon Vital Records P.O. Box 14050 Portland, OR 97293-0050 Registration Manager: Marsha Trump 971-673-1191 [email protected] Document Change Activity The following is a record of the changes that have occurred on this document from the time of its original approval Rev. date Change Description Author Date May 13, 2011 Revised instructions regarding attendant certifier for Hampton 8/3/11 out of facility birth to comply with ORS 432.206(3) October 1, Removed items as reportable to match national Hampton 8/15/14 2014 standards; recognition of marriage between persons of same sex November 24, Revised instructions to match most recent Certificate Jackson 11/30/15 2015 of Live Birth Form 45-1. March 3, 2018 Revised instructions on new sex designation Zapata 03/03/18 May 11, 2020 Revised contact information Ruden 5/11/20 GENERAL INFORMATION – OREGON REPORTS OF LIVE BIRTH Births that occur outside a licensed facility create specific legal obligations and practical limitations. The attending physician, midwife, or other person as identified by statute (below) also referred to as the certifier of the birth, is required to report births that occur outside of a facility to the Center for Health Statistics within five days. This is different from births occurring in a licensed facility where the facility has the legal obligation to report the birth. -

Start Smart. Finish Strong

START SMART. FINISH STRONG. Quality Enhancement Plan 2020–2025 Well into our second century of achievement, SMU looks to the future and is more committed than ever in these unprecedented times to the pursuit of academic quality. We are building upon and reaffirming our founding principles and values as we rally behind our commitment to the continued improvement of our programs and the creation of unparalleled opportunities for our students – tomorrow’s innovators, leaders and informed citizens – to successfully achieve their educational objectives. It is in support of these goals that we share “SMU in Four” – SMU’s Quality Enhancement Plan. Refining the Student Experience at SMU Quality Enhancement Plan 2020–2025 Prepared for <Placeholder: Name of Accreditation Agency here> <Placeholder Month XX, 2021> On-site review: <Placeholder Month XX–Month XX, 2021> SMU’s Office of Student Academic Engagement and Success (SAES) is pleased to submit to <Placeholder Name of Accreditation Agency here> the Quality Enhancement Plan (QEP) for the University. This proposal addresses SMU’s efforts to better monitor and support our students’ academic progress and improve our first-year retention and four-year graduation rates. Table of contents TAB 1: EXECUTIVE SUMMARY TAB 2: PROCESS USED TO DEVELOP THE QUALITY ENHANCEMENT PLAN (QEP) 4 2015: Launch of the SMU Strategic Plan 8 2017: Campus-wide Dialogue on Academic Quality and Academic Success 10 2018: Supporting Student Academic Engagement and Success 12 2019: Developing the QEP – SMU in Four 20 2020: Anticipated -

Intralinks Designer User Guide for Intralinks Platform

Intralinks Designer User Guide For Intralinks and Intralinks Classic Exchanges Intralinks 24x7x365 support US: + (1) 212 543 7800 UK: +44 (0)20 7623 8500. See Intralinks login page for other national numbers Copyright © 2018 Intralinks, Inc. Version 5.5.2 June 2018 Table of Contents Chapter 1: Welcome.......................................................................................4 Chapter 2: Getting started .............................................................................6 Before you use Intralinks Designer.....................................................................................6 Starting Intralinks Designer ................................................................................................6 Ending your current Intralinks Designer session ................................................................9 Checking for updates to Intralinks Designer.......................................................................9 Getting to know the Intralinks Designer interface .............................................................10 The Intralinks Designer screen ..................................................................................10 The toolbar .................................................................................................................12 The menu bar.............................................................................................................12 Right-click menus.......................................................................................................12