Predicting Outcome of Cricket Matches Using Classification Learners

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Beach Wrestlers Go for Gold in Greece As World Series Hits Katerini

From: Beach Wrestling World Series Media. Subject: Beach Wrestlers go for gold in Greece as World Series hits Katerini. Date: September 8, 2021 at 10:09 AM. Beach Wrestling World Series Stop 3 - Greece | Katerini. Ancient Greece is where the sport of wrestling was invented and legends were created. Over the years it has taken many styles and forms, and the latest makes its way to the Olympic cradle as the 3rd leg of the 2021 Beach Wrestling World Series arrives at Paralia Beach on the Aegean Sea this Friday and Saturday. Hot on the heels of last weekend’s 2nd leg on Lido di Ostia in Rome, 63 competitors from 12 countries will take to the sand over eight weight categories in the penultimate leg of this year’s season, looking to earn crucial ranking points to take to Mamaia Beach in Constanta, Romania for the final at the end of the month. Given Greece’s history with wrestling, it is no surprise they come with a strong team of 12 men and 6 women looking to press home their home advantage.

All-Time Team Records

Lindsey Wilson College Volleyball All-Time Team History. Updated: 12/21/19

All-Time

| Category | Record | Date |
| --- | --- | --- |
| National Championships | 1 | 2017 |
| Regional Championships | 0 | |
| Mid-South Conference Championships | 8 | 2011, 2013, 2014, 2015, 2016, 2017, 2018, 2019 |
| MSC Tournament Championships | 7 | 2011, 2012, 2015, 2016, 2017, 2018, 2019 |
| KIAC Championships | 1 | 1999 |
| KIAC Tournament Championships | 0 | |
| NAIA All-Americans | 14 | 1994-2019 |
| NAIA All-Region | 7 | 1994-2007 |
| All Mid-South Conference | 79 | 2000-2019 |
| All KIAC | 6 | 1997-99 |
| Record | 561-372 | 1994-2019 |
| Home Record | 206-82 | 1994-2019 |
| Road Record | 167-144 | 1994-2019 |
| Neutral Record | 188-146 | 1994-2019 |
| Most Consecutive Wins | 42 | Aug. 18, 2017 - Aug. 31, 2018 |
| Most Consecutive Losses | 17 | Sept. 10 – Oct. 20, 2005 |
| Most Consecutive Home Wins | 74 | Oct. 11, 2013 – Aug. 20, 2019 |
| Most Consecutive Home Losses | 7 | Sept. 12 – Oct. 20, 2004 |
| Most Consecutive Road Wins | 19 | Oct. 10, 2012 – Aug. 27, 2014 |
| Most Consecutive Road Losses | 9 | Sept. 5 – Oct. 20, 2003; Sept. 12 – Nov. 1, 2005 |
| Most Consecutive Neutral Wins | 16 | Oct. 6, 2017 - Aug. 31, 2018 |
| Most Consecutive Neutral Losses | 11 | Nov. 11, 2008 - Oct. 9, 2009 |

Season

| Category | Record | Date |
| --- | --- | --- |
| Most Matches Played | 45 | 1999, 2003 |
| Most Games Played | 164 | 1999 |
| Most Wins | 37 | 2015 |
| Most Losses | 27 | 2003, 2005 |
| Most Home Wins | 16 | 2016 |
| Most Home Losses | 9 | 1998 |
| Most Road Wins | 13 | 2011, 2013 |
| Most Road Losses | 14 | 1996 |
| Most Neutral Wins | 14 | 1996, 2018, 2019 |
| Most Neutral Losses | 18 | 1999 |
| Longest Winning Streak | 35 | Aug. 18 - Dec. 2, 2017 |
| Longest Losing Streak | 17 | Sept. |

Kodaira, Krol and Mantia Hold the Aces As Japan's Team Pursuit

November 23, 2019 Tomaszów Mazowiecki, Poland Kodaira, Krol and Mantia hold the aces as Japan’s Team Pursuit ladies crash and burn Nao Kodaira (JPN) returned to her winning ways in the 500m, but her compatriots in the ladies' Team Pursuit suffered a first loss after three seasons of invincibility at the ISU World Cup Speed Skating in Tomaszów Mazowiecki, Poland, on Saturday. The Russian ladies took advantage of a weakened Japanese line-up, edging out the Netherlands by 0.12 seconds to win gold. Joey Mantia (USA) crossed the line first to win the men's Mass Start after a hard day's work. In the 1500m he had finished 16th, with Thomas Krol (NED) taking gold in a track record, just 0.19 seconds ahead of team-mate and last week's winner Kjeld Nuis (NED). Kodaira calm and composed Winning the 500m in a track record time of 37.77, Kodaira made amends for the bronze medal that broke a streak of 23 World Cup golds in the 500m last week in Minsk. "That was a bit unlucky," the victorious Japanese said about her race in Belarus. "I was too busy in my head. A lot of things happened. I had to start three times. This week it was better, I only focused on my own skating." Kodaira was referring to her race in Minsk versus Vanessa Herzog (AUT). The Austrian was disqualified after two false starts and was relegated to the B Division. Herzog also bounced back from disappointment confidently winning the B Division race in Poland. -

CCC Match Report V Wiltshire Queries 10.06.18

CCC v Wiltshire Queries – Sunday 10th June, 2018. Chilmark’s winning streak came to an inglorious end on Sunday as an over-strength Wiltshire Queries cricketed far better than the home team. The writing was on the wall early when pictures filtered through from lakeside in France, where CCC chairman Carl Jacobs had bagged himself a carp the size of a submarine. With the world’s supply of luck being channelled by Jacobs, there wasn’t much to go around Cleeves Farm. This showed on the first ball of the day, when Pete Corbin managed to tempt the batsman into a hook shot that resulted in a top edge. Wicketkeeper Jason Stearman lost sight of it and first slip Brand King was still picking his breakfast from his teeth. The ball fell safely and the batsman went on to score 26 before Paul Butler took a sharp caught and bowled chance. It was that kind of day. Butler’s success was followed by an over that saw four sixes hit from it. Seeing this carnage, captain Patrick Craig-McFeely decided Reg ‘Golden Arm’ Allen should be injected into the attack. Four more sixes promptly followed. By this time the opener responsible for the onslaught had reached his century (racing from 54 to 102 in 10 balls) and mercifully retired. It was a good time to be tossed the ball. Brand King and Jake Taylor benefitted and started bringing some respectability back to proceedings. When King jagged one between bat and pad, Chilmark had their second wicket at the princely sum of 155 runs.

Statistical Previews Day 12 – September 13, 2014

STATISTICAL PREVIEWS DAY 12 – SEPTEMBER 13, 2014 Pool E: Serbia - France (September 13) Head-to-Head · France have won both matches against Serbia at the World Championships, in 1956 and in the bronze medal match in 2002, when France won their only medal at the World Championships. · Serbia have won seven of their last nine encounters with France in major tournaments, including the last time they met, in the quarterfinals of the 2011 European Championship, when Serbia went on to win gold. Serbia · Serbia have lost their first and their latest match at these championships, winning five matches in between. · They will reach 200 set wins at the World Championships if they win this match. This includes Serbia, Serbia and Montenegro and Yugoslavia. · They have not lost two matches in a row since the 2010 first round. France · France can win six matches in a row for the first time. They also had a run of five wins in 1970. · With six wins so far in Poland, France need two more to equal 2006 when they won a total of eight matches at a single World Championship. They had seven wins in 1970 and 2002. · Antonin Rouzier is the 2014 top scorer having scored 139 points. FIVB Men’s World Championship Poland 2014 Page 1 Pool E: Argentina - Italy (September 13) Head-to-Head · Both teams can no longer progress to the next round. · Italy have won two of their three World Championship matches against Argentina, in 1990 and in 2002 (5/6th place). They lost a group stage match against them, also in 2002. -

Understanding Baseball Team Standings and Streaks

Eur. Phys. J. B 67, 473–481 (2009) DOI: 10.1140/epjb/e2008-00405-5. THE EUROPEAN PHYSICAL JOURNAL B, Regular Article. Understanding baseball team standings and streaks. C. Sire (1) and S. Redner (2). (1) Laboratoire de Physique Théorique - IRSAMC, CNRS, Université Paul Sabatier, 31062 Toulouse, France. (2) Center for Polymer Studies and Department of Physics, Boston University, Boston, Massachusetts 02215, USA. Received 29 July 2008 / Received in final form 8 October 2008. Published online 5 November 2008 – © EDP Sciences, Società Italiana di Fisica, Springer-Verlag 2008. Abstract. Can one understand the statistics of wins and losses of baseball teams? Are their consecutive-game winning and losing streaks self-reinforcing or can they be described statistically? We apply the Bradley-Terry model, which incorporates the heterogeneity of team strengths in a minimalist way, to answer these questions. Excellent agreement is found between the predictions of the Bradley-Terry model and the rank dependence of the average number of team wins and losses in major-league baseball over the past century when the distribution of team strengths is taken to be uniformly distributed over a finite range. Using this uniform strength distribution, we also find very good agreement between model predictions and the observed distribution of consecutive-game team winning and losing streaks over the last half-century; however, the agreement is less good for the previous half-century. The behavior of the last half-century supports the hypothesis that long streaks are primarily statistical in origin with little self-reinforcing component.
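
The abstract above names the Bradley-Terry model without writing it out. As a rough illustration only, and not the authors' code, the sketch below uses the standard Bradley-Terry form, in which a team of strength x_i beats a team of strength x_j with probability x_i / (x_i + x_j), and draws strengths uniformly from a finite range. The range endpoints (0.5 to 1.5), the league size, the number of games per pairing, and the function names `win_probability`, `simulate_season` and `longest_streak` are all assumptions made for this example.

```python
import random


def win_probability(x_i: float, x_j: float) -> float:
    """Bradley-Terry probability that strength x_i beats strength x_j."""
    return x_i / (x_i + x_j)


def simulate_season(strengths, games_per_pair, rng):
    """Play every pair of teams games_per_pair times; return win counts."""
    wins = [0] * len(strengths)
    for i in range(len(strengths)):
        for j in range(i + 1, len(strengths)):
            p = win_probability(strengths[i], strengths[j])
            for _ in range(games_per_pair):
                if rng.random() < p:
                    wins[i] += 1
                else:
                    wins[j] += 1
    return wins


def longest_streak(outcomes):
    """Length of the longest run of consecutive wins (True values)."""
    best = current = 0
    for won in outcomes:
        current = current + 1 if won else 0
        best = max(best, current)
    return best


if __name__ == "__main__":
    rng = random.Random(0)
    # Assumed setup: 8 teams, strengths uniform on [0.5, 1.5].
    strengths = [rng.uniform(0.5, 1.5) for _ in range(8)]
    wins = simulate_season(strengths, 10, rng)
    for strength, count in sorted(zip(strengths, wins), reverse=True):
        print(f"strength {strength:.2f}: {count} wins")
```

Under these assumptions, stronger teams tend to collect more wins, and any streaks that appear arise purely from chance, which is the kind of baseline the abstract compares against observed streaks.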

Lin Dan Vs Lee Chong Wei: Lin 22 Wins with the Longest Winning Streak Four Times in a Row

Lin Dan vs Lee Chong Wei: Lin 22 wins with the longest winning streak four times in a row. In the early morning of March 12, Beijing time, the men's singles final of the 2012 All England Open Badminton Championships began, and once again it was a showdown between Lin and Lee. Lin won the opening game with a close score of 21-19. Unfortunately, in the second game, with Lin leading 6-2, Lee Chong Wei had to retire. Lin thus claimed this year's championship without further resistance. This is the fifth All England men's singles title won by Lin Dan. He has also made history by winning the most All England men's singles gold medals in the last 36 years. Lin and Lee are undoubtedly the most successful and spectacular players in today’s world badminton men’s singles. The fight between them is also known as the "Lin-Lee Wars"; this not only indicates the intensity of their fights, it is also a good description of the smell of gunpowder produced by their countless encounters. So far, they have met a total of 31 times; Lin Dan has a record of 22 wins and 9 losses against Lee Chong Wei, an obvious advantage in the win-loss ratio. The first meeting was at the Thomas Cup in 2004, when Lin Dan won 2-1. They last played against each other at the 2012 South Korea Super Series, where Lin Dan lost 1-2.

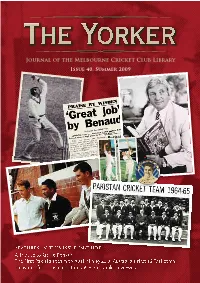

Issue 40: Summer 2009/10

Journal of the Melbourne Cricket Club Library, Issue 40, Summer 2009. This Issue: In our Summer 2009/10 edition Ken Williams looks at the first Pakistan tour of Australia, 45 years ago. We also pay tribute to Richie Benaud's role in cricket, as he undertakes his last Test series of ball-by-ball commentary, and wish him luck in his future endeavours in the cricket media. Ross Perry presents an analysis of Australia's first 16-Test winning streak from October 1999 to March 2001. A future issue of The Yorker will cover their second run of 16 Test victories. We note that part two of Trevor Ruddell's article detailing the development of the rules of Australian football has been delayed until our next issue, which is due around Easter 2010. THE EDITORS

Treasures from the Collections: The day Don Bradman met his match in Frank Thorn. On Saturday, February 25, 1939 a large crowd gathered at the Adelaide Oval for the second day’s play in the final Sheffield Shield match of the season, between South Australia and Victoria. The fans came more in anticipation of witnessing the setting of a world record than in support of the home side, which began the game one point ahead of its opponent on the Shield table.

... in the Melbourne District competition throughout the 1930s, during which time he captured 266 wickets at 20.20. Despite his impressive club record, he played only seven games for Victoria, in which he captured 24 wickets at an average of 26.83. Remarkably, the two matches in which he dismissed Bradman were his only Shield appearances, ...

Curling Action Continues

Curling action continues – Friday 26 August There was plenty of action at the Maniototo Curling International ice rink today as the curling teams fought for a place in the semi-finals. The Men’s Fours were played yesterday afternoon. Australia played the New Zealand Juniors and led from the start. On their last delivery of the sixth the Australian team set up a guard to protect their shot stone and two other stones in the house. Sam Miller delivered a beautiful hit and roll to get shot to take 1 point. In the seventh the Juniors had shot stone with four Australian stones also in the house and delivered their last stone to sit front of house. The Australians last stone was tight and hit the guard leaving the Juniors with shot stone and giving them another point. On the last delivery of the eighth the Juniors stone was too heavy and finished at the back of the house. The Australians picked up 3 points and an 11-3 win. The New Zealand Seniors played Japan. At the end of the sixth Japan was 5 points ahead 7-2. The Seniors had shot and another in the house and set up a guard on their last delivery. Japan took out the Seniors’ second stone leaving them with shot and 1 point. Japan answered with 4 points over the next two ends giving them the game at 11-3. David Greer from the New Zealand team put it down to opportunity. “We had our chances – they gave us a lot and we took one or two but missed quite a few too. -

2006-07 HOFSTRA UNIVERSITY WRESTLING SCHEDULE As of March 2, 2007 ± 18-4-2, 7-0

2007 CAA Wrestling Championships Schedule. Friday, March 2: 1 p.m. – First round; 4 p.m. – Quarterfinals; 8 p.m. – First round consolations. Saturday, March 3: 10 a.m. – Second round consolations; Noon – Semifinals; 2 p.m. – Third round consolations; 4 p.m. – Consolation finals; 6 p.m. – Finals; after finals, true second place matches.

2006-07 HOFSTRA UNIVERSITY WRESTLING. #9 Hofstra Pride (18-4-2) at Colonial Athletic Association Championships, Linn Gymnasium - George Mason University, Friday-Saturday, March 2-3, 2007 - Fairfax, VA.

HOFSTRA THIS YEAR: The Pride will be going after their sixth consecutive Colonial Athletic Association Wrestling title and their seventh straight conference title (2001 in the ECWA) this weekend at the 2007 CAA Wrestling Championships at George Mason University. Hofstra, which is coming off a 21-13 loss at #15 Oklahoma on February 17 in Norman, OK, was 18-4-2 in dual matches this year. The Pride concluded another perfect conference regular season with a 29-9 victory over Colonial Athletic Association preseason favorite Rider on February 13. Prior to the Rider match, the Pride dropped a 22-18 decision to Cornell, snapping a seven-match winning streak. Hofstra recorded a 31-3 victory over #14 Penn and a 30-6 victory over #24 Lehigh. Hofstra recorded a 5-0 mark on January 19-20 at the Colonial Athletic Association Duals at

2006-07 HOFSTRA WRESTLING SCHEDULE/RESULTS: Nov. 14 at Wagner * 56-0 W; Nov. 15 ARMY 41-0 W; Nov. 18 at East Stroudsburg Open, All Day; Nov. 25 at Journeymen/Brute Northeast Duals vs.

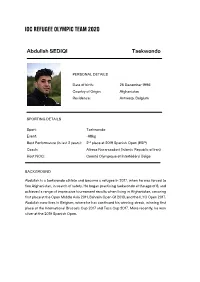

IOC Refugee Olympic Team 2020

IOC REFUGEE OLYMPIC TEAM 2020: Abdullah SEDIQI, Taekwondo. PERSONAL DETAILS: Date of birth: 25 December 1996. Country of origin: Afghanistan. Residence: Antwerp, Belgium. SPORTING DETAILS: Sport: Taekwondo. Event: -68kg. Best performance (in last 2 years): 2nd place at 2019 Spanish Open (ESP). Coach: Alireza Nassrazadani (Islamic Republic of Iran). Host NOC: Comité Olympique et Interfédéral Belge. BACKGROUND: Abdullah is a taekwondo athlete and became a refugee in 2017, when he was forced to flee Afghanistan in search of safety. He began practising taekwondo at the age of 8, and achieved a range of impressive tournament results when living in Afghanistan, securing first place at the Open Middle Asia 2011, Bahrein Open G1 2013, and the ILYO Open 2017. Abdullah now lives in Belgium, where he has continued his winning streak, winning first place at the International Brussels Cup 2017 and Tess Cup 2017. More recently, he won silver at the 2019 Spanish Open.

IOC REFUGEE OLYMPIC TEAM 2020: Ahmad ALIKAJ, Judo. PERSONAL DETAILS: Date of birth: 5 June 1991. Country of origin: Syria. Residence: Hamburg, Germany. SPORTING DETAILS: Sport: Judo. Discipline: Mixed team. Best performance (in last 2 years): N/A. Coach: Fadi Darwish. Host: IJF – Germany. BACKGROUND: Ahmad Alikaj was born in Syria, where he grew up before the war broke out. The 29-year-old judoka is now living and training in Germany and competes in the -73kg category. He was included in the IJF Refugee Team at the 2019 Budapest Grand Prix and participated in the 2019 World Championships in Tokyo, as well as the Paris Grand Slam and Düsseldorf Grand Slam in 2020.

In This Month's Issue

Issue 56 | April 2018 | www.scottishcurling.org. Your Curler: keeping you connected with Scottish Curling. In this month’s issue... COMPETITIONS: A full round up of the action in Oestrusund, Sweden. FEATURES: Team Mouat Win Bronze in Vegas. CLUBS & RINKS: Greenacres Young Curlers - Where Are You Now?

A WORD FROM OUR CEO: This has been a great season for Scottish Curling, with lots of new people coming to the sport through CurlFest, TryCurling and existing curlers introducing friends to the sport we love. Our disability programmes are leading the way in getting new people active in sport, and ice rinks around the country have been using innovative ways to attract new people to get involved in curling. All of this will help to sustain participation at Scotland’s curling facilities, which need new players to come to the sport to replace those who retire from being active in curling. Over the coming summer months we will be doing a number of consultations with members to help inform our thinking on key topics of competitions, membership and the priorities for our next strategic plan for 2019-2023. We hope that you will respond to the surveys that we will use and perhaps come to our AGM on Saturday 16th June in Kelso or to open meetings later in the summer. BRUCE CRAWFORD, CHIEF EXECUTIVE OFFICER

TEAM MOUAT WIN BRONZE AT THE WORLD MEN’S CURLING CHAMPIONSHIP, IN LAS VEGAS: Scotland had a stunning run at the recent World Men’s Curling Championship in Las Vegas, coming away with a Bronze Medal for their sheer determination and huge talent.