On the Importance of Ecologically Valid Usable Security Research for End Users and IT Workers

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Confronting the Challenges of Participatory Culture: Media Education for the 21st Century

An occasional paper on digital media and learning. Confronting the Challenges of Participatory Culture: Media Education for the 21st Century. Henry Jenkins, Director of the Comparative Media Studies Program at the Massachusetts Institute of Technology, with Katie Clinton, Ravi Purushotma, Alice J. Robison, and Margaret Weigel. Building the new field of digital media and learning: The MacArthur Foundation launched its five-year, $50 million digital media and learning initiative in 2006 to help determine how digital technologies are changing the way young people learn, play, socialize, and participate in civic life. Answers are critical to developing educational and other social institutions that can meet the needs of this and future generations. The initiative is both marshaling what is already known about the field and seeding innovation for continued growth. For more information, visit www.digitallearning.macfound.org. To engage in conversations about these projects and the field of digital learning, visit the Spotlight blog at spotlight.macfound.org. About the MacArthur Foundation: The John D. and Catherine T. MacArthur Foundation is a private, independent grantmaking institution dedicated to helping groups and individuals foster lasting improvement in the human condition. With assets of $5.5 billion, the Foundation makes grants totaling approximately $200 million annually. For more information or to sign up for MacArthur’s monthly electronic newsletter, visit www.macfound.org. The MacArthur Foundation, 140 South Dearborn Street, Suite 1200, Chicago, Illinois 60603. Tel. (312) 726-8000. www.digitallearning.macfound.org

M&A @ Facebook: Strategy, Themes and Drivers

A Work Project, presented as part of the requirements for the Award of a Master Degree in Finance from NOVA – School of Business and Economics. M&A @ FACEBOOK: STRATEGY, THEMES AND DRIVERS. Tomás Branco Gonçalves, Student Number 3200. A Project carried out on the Masters in Finance Program, under the supervision of Professor Pedro Carvalho. January 2018. Abstract: Most deals are motivated by the recognition of a strategic threat or opportunity in the firm’s competitive arena. These deals seek to improve the firm’s competitive position, or to obtain resources and new capabilities that are vital to future prosperity, and to improve the firm’s agility. The purpose of this work project is to analyze Facebook’s acquisition strategy by going through the key acquisitions in the company’s history. Beyond understanding the economics of its most relevant acquisitions, the main research aims to understand the strategic view and key drivers behind them, and to establish a pattern through hypothesis testing, always bearing in mind the following question: Why does Facebook acquire emerging companies instead of replicating their key success factors? Keywords: Facebook; Acquisitions; Strategy; M&A Drivers. “The biggest risk is not taking any risk... In a world that is changing really quickly, the only strategy that is guaranteed to fail is not taking risks.” Mark Zuckerberg, founder and CEO of Facebook. Literature Review: M&A activity has had peaks throughout the course of history, and different key industry-related drivers triggered that activity (Sudarsanam, 2003). Historically, the appearance of the first mergers and acquisitions coincides with the existence of the first companies and, since then, in the US market, there have been five major waves of M&A activity (as summarized by T.J.A.

Privacy and You: The Facts and the Myths

Privacy and You: The facts and the myths
Bill Bowman and Katrina Prohaszka, Clarkston Independence District Library

Overview
● What is privacy?
● Why should you care?
● Privacy laws, regulations, and protections
● Privacy and libraries
● How to protect your privacy → need-to-know settings

What is Privacy?
“[Privacy is] the right to be let alone” - Warren & Brandeis, 1890

What is Privacy cont’d
- Alan Westin (1967) on privacy:
  - “[privacy is] the right of individuals to control, edit, manage, and delete information about themselves, and to decide when, how, and to what extent information is communicated to others.”
- Privacy provides a space for discussion, growth, and learning
- Privacy is the ability to control your information and maintain boundaries

DEMO → Ghostory

What Privacy Is NOT - common myths
Myth: Privacy and secrecy are the same
- Privacy is about being unobserved
- Secrecy is about intentionally hiding something
Myth: Privacy and security are the same
- Privacy is about safeguarding a user’s identity
- Security is about protecting a user’s information & data

Evolving Concerns
- Persistence of cameras and microphones
  - Think 1984 by George Orwell
  - “big brother” is always watching, and “it’s okay”
- Social media culture
  - “Tagging” people without knowledge
  - Sharing photos without asking
- Data as currency
  - 23andMe, Ancestry.com, Google, etc.

Why should you care?
“Arguing that you don’t care about privacy because you have nothing to hide is no different than saying you don’t care about free speech because
Why

KeePass Password Safe Help

KeePass Password Safe KeePass: Copyright © 2003-2011 Dominik Reichl. The program is OSI Certified Open Source Software. OSI Certified is a certification mark of the Open Source Initiative. For more information see the License page. Introduction Today you need to remember many passwords. You need a password for the Windows network logon, your e-mail account, your website's FTP password, online passwords (like website member account), etc. etc. etc. The list is endless. Also, you should use different passwords for each account. Because if you use only one password everywhere and someone gets this password you have a problem... A serious problem. He would have access to your e-mail account, website, etc. Unimaginable. But who can remember all those passwords? Nobody, but KeePass can. KeePass is a free, open source, light-weight and easy-to-use password manager for Windows. The program stores your passwords in a highly encrypted database. This database consists of only one file, so it can be easily transferred from one computer to another. KeePass supports password groups, you can sort your passwords (for example into Windows, Internet, My Website, etc.). You can drag&drop passwords into other windows. The powerful auto-type feature will type user names and passwords for you into other windows. The program can export the database to various formats. It can also import data from various other formats (more than 20 different formats of other password managers, a generic CSV importer, ...). Of course, you can also print the password list or current view. Using the context menu of the password list you can quickly copy password or user name to the Windows clipboard. -

Keeper Security G2 Competitive Comparison Report

Keeper Security G2 Competitive Comparison Report. Keeper is the leading cybersecurity platform for preventing password-related data breaches and cyberthreats. This report is based on ratings and reviews from real G2 users. Keeper vs. Top Competitors: User Satisfaction Ratings. See how Keeper wins in customer satisfaction based on the ratings in the below G2 categories. [Bar chart; legend order: Keeper, LastPass, Dashlane, 1Password. Values as listed: Ease of Use 93%, 85%, 92%, 91%; Mobile App Usability 92%, 82%, 82%, 88%; Ease of Setup 93%, 83%, 89%, 88%; Meets Requirements 95%, 92%, 94%, 94%; Quality of Support 91%, 82%, 89%, 90%.] See the full reports: Keeper vs. LastPass, Keeper vs. Dashlane, Keeper vs. 1Password. G2 Grid: Keeper Listed as a Leader. G2 scores products and vendors based on reviews gathered from the user community, as well as data aggregated from online sources and social networks. Together, these scores are mapped on the G2 Grid, which you can use to compare products. As seen on the grid, Keeper is currently rated as a “Leader,” scoring highly in both market presence and satisfaction. [G2 Grid figure; quadrants: Contenders, Leaders, Niche, High Performers; axes: Market Presence, Satisfaction.] Keeper User Reviews & Testimonials: See what G2 users have to say about their experience with Keeper. Best password manager on the market: “Keeper was the first password manager I could find that supported the U2F hardware keys that we use and this was a non-negotiable requirement at the time and still is. The support is really excellent and above expectations - On all my questions and concerns, I have received a reply within an hour and I am situated in Southern Africa.

KeePass Instructions

Introduction to KeePass What is KeePass? KeePass is a safe place for all your usernames, passwords, software licenses, confirmations from vendors and even credit card information. Why Use a Password Safe? • It makes and remembers excellent passwords for every site you visit. These passwords will be random and long. • It is very dangerous to either try and remember your passwords or re-use the same password on multiple sites. Using KeePass eliminates these problems. • It helps you log into websites • It stores license codes and other critical information from software vendors • It protects all your licenses and passwords with state of the art encryption making it unbreakable as long as you have a good passphrase. I made a 5 minute introductory video screencast . Go ahead and watch it. http://www.screencast.com/t/RgJjbdYF0p Copyright(c) 2011 by Steven Shank Why switch from my OCS Passwords safe to Keepass? Keepass is much better than my program. It is much more secure. My OCS Passwords is not using state of the art encryption. My program is crackable. In addition to being safer, it is even easier to use than my program and has some great extra features. In short, while OCS passwords was a good program in its time, its time has passed. Among the many advanced features, KeePass lets you add fields, copy username and passwords into websites and programs more easily, group your passwords and launch websites directly from KeePass. How Do You Switch from OCS Password to KeePass? What I've done • I worked with a programmer to write a program to convert current password databases into a text file I could import into KeePass. -

April, 2021 Spring

Volume 171, April, 2021

Goodbye LastPass, Hello BitWarden
Analog Video Archive Project
Short Topix: New Linux Malware Making The Rounds
Inkscape Tutorial: Chrome Text
FTP With Double Commander: How To
Game Zone: Streets Of Rage 4: Finally On PCLinuxOS!
PCLinuxOS Recipe Corner: Chicken Parmesan Skillet Casserole
Beware! A New Tracker You Might Not Be Aware Of
And More Inside...

In This Issue...
From The Chief Editor's Desk...
Screenshot Showcase
Goodbye LastPass, Hello BitWarden!
PCLinuxOS Recipe Corner: Chicken Parmesan Skillet Casserole
Inkscape Tutorial: Chrome Text
Screenshot Showcase
Analog Video Archive Project
Screenshot Showcase
FTP With Double Commander: How-To
Screenshot Showcase
Short Topix: New Linux Malware Making The Rounds
Screenshot Showcase
Repo Review: MiniTube
Good Words, Good Deeds, Good News
Game Zone: Streets Of Rage 4: Finally On PCLinuxOS!
Screenshot Showcase
Beware! A New Tracker You Might Not Be Aware Of
PCLinuxOS Recipe Corner Bonus:

The PCLinuxOS name, logo and colors are the trademark of Texstar. The PCLinuxOS Magazine is a monthly online publication containing PCLinuxOS-related materials. It is published primarily for members of the PCLinuxOS community. The magazine staff is comprised of volunteers from the PCLinuxOS community. Visit us online at http://www.pclosmag.com

This release was made possible by the following volunteers:
Chief Editor: Paul Arnote (parnote)
Assistant Editor: Meemaw
Artwork: ms_meme, Meemaw
Magazine Layout: Paul Arnote, Meemaw, ms_meme
HTML Layout: YouCanToo
Staff: ms_meme, Cg_Boy, Meemaw, YouCanToo, Pete Kelly, Daniel Meiß-Wilhelm, Alessandro Ebersol
Contributors: David Pardue

The PCLinuxOS Magazine is released under the Creative Commons Attribution-NonCommercial-Share-Alike 3.0 Unported license.

Privacy Handout by Bill Bowman & Katrina Prohaszka

Privacy Handout
By Bill Bowman & Katrina Prohaszka

Outline: RECOMMENDED PROGRAM SETTINGS; WEB BROWSER SETTINGS; WINDOWS 10; SMARTPHONES & TABLETS; EMAIL; SOCIAL MEDIA SETTINGS (Instagram, TikTok, Twitter, Snapchat, Venmo, Facebook); RECOMMENDED PRIVACY TOOLS; WEB BROWSERS; SEARCH ENGINES; VIRTUAL PRIVATE NETWORKS (VPNS); ANTI-VIRUS/ANTI-MALWARE; PASSWORD MANAGERS; TWO-FACTOR AUTHENTICATION; ADDITIONAL PRIVACY RESOURCES

RECOMMENDED PRIVACY TOOLS

WEB BROWSERS
● Tor browser -- https://www.torproject.org/download/ (advanced users)
● Brave browser -- https://brave.com/
● Firefox -- https://www.mozilla.org/en-US/exp/firefox/
● Chrome & Microsoft Edge (Chrome-based) -- Not recommended unless additional settings are changed

SEARCH ENGINES
● DuckDuckGo -- https://duckduckgo.com/
● Qwant -- https://www.qwant.com/?l=en
● Swisscows -- https://swisscows.com/
● Google -- Not private, uses algorithm based on your information

VIRTUAL PRIVATE NETWORKS (VPNS)
● NordVPN -- https://nordvpn.com/
● ExpressVPN -- https://www.expressvpn.com/
● 1.1.1.1 -- https://1.1.1.1/
● Firefox VPN -- https://vpn.mozilla.org/
● OpenVPN -- https://openvpn.net/
● Sophos VPN -- https://www.sophos.com/en-us/products/free-tools/sophos-utm-home-edition.aspx

ANTI-VIRUS/ANTI-MALWARE
● Malwarebytes -- https://www.malwarebytes.com/
● Symantec -- https://securitycloud.symantec.com/cc/#/landing
● CCleaner -- https://www.ccleaner.com/
● ESET -- https://www.eset.com/us/
● Sophos -- https://home.sophos.com/en-us.aspx
● Windows Defender -- https://www.microsoft.com/en-us/windows/comprehensive-security (built into Windows 10)

PASSWORD MANAGERS
● LastPass -- https://www.lastpass.com/
● KeePass -- https://keepass.info/
● KeeWeb -- https://keeweb.info/
● Dashlane -- https://www.dashlane.com/

TWO-FACTOR AUTHENTICATION
● Authy -- https://authy.com/
● Built-in two-factor authentication (some emails like Google mail, various social media, etc.

Online Security and Privacy

Security & Privacy Guide Security and Privacy Guide When thinking about security and privacy settings you should consider: What do you want to protect? Who do you want to protect it from? Do you need to protect it? How bad are the consequences if you fail to protect it? How much trouble are you prepared to go to? These questions should be asked whilst considering what information you are accessing (which websites), how you are accessing the information, (what device you are using) and where you are accessing the information (at home, work, public place). Security & Privacy When looking at your Digital Security you are protecting your information against malicious attacks and malware. (Malware is software intentionally designed to cause damage to a computer). Digital Privacy is different as you are deciding what information you are prepared to share with a website or App (or its third party partners) that you are already using. Permission to share this information can be implicit once you start using a website or App. Some websites or Apps will allow you to control how they use your information. Security Physical access: How secure is the device you are using? Is it kept in a locked building, at home, or do you use it when you are out and about? Does anyone else have access to the device? Do you require a passcode or password to unlock your device? Virtual access: Have you updated your IOS software (on an iPad) or installed the latest anti-virus software on your device? Most devices will prompt you when an update is available. -

Take Control of 1Password (5.0) SAMPLE

EBOOK EXTRAS: v5.0 Downloads, Updates, Feedback
TAKE CONTROL OF 1PASSWORD by JOE KISSELL, 5th EDITION, $14.99
Click here to buy the full 180-page “Take Control of 1Password” for only $14.99!

Table of Contents
Read Me First
Updates and More
Basics
What’s New in the Fifth Edition
Introduction
1Password Quick Start
Meet 1Password
Understand 1Password Versions
License 1Password
Learn About 1Password Accounts
Configure 1Password
Explore the 1Password Components
Learn How Logins Work
Find Your Usage Pattern
Set Up Syncing
Check for Updates
Learn What 1Password Isn’t Good For
Understand Password Security

Password Managers: An Overview

Peter Albin, Lexington Computer and Technology Group, March 13, 2019

Agenda: One Solution; 10 Worst Passwords of 2018; Time to Crack Password; How Hackers Crack Passwords; How Easy It Is To Crack Your Password; How Do Password Managers Work; What is a Password Manager; Why use a Password Manager?; Cloud Based Password Managers; Paid Password Managers; Free Password Managers; How to Use LastPass; How to Use Dashlane; How to Use Keepass; Final Reminder; References

One Solution

10 Worst Passwords of 2018
1. 123456
2. password
3. 123456789
4. 12345678
5. 12345
6. 111111
7. 1234567
8. sunshine
9. qwerty
10. iloveyou

Time to Crack Password
[Chart: time to crack password "security1", in days (0 to 1600), by year (2000 to 2016)]

How Hackers Crack Passwords: https://youtu.be/YiRPt4vrSSw

How Easy It Is To Crack Your Password: https://youtu.be/YiRPt4vrSSw

How Do Password Managers Work: https://youtu.be/DI72oBhMgWs

What is a Password Manager
A password manager will generate, retrieve, and keep track of super-long, crazy-random passwords across countless accounts for you, while also protecting all your vital online info: not only passwords but PINs, credit-card numbers and their three-digit CVV codes, answers to security questions, and more … And to get all that security, you’ll only need to remember a single password

Why use a Password Manager?
We are terrible at passwords
We suck at creating them: the top two most popular remain “123456” and “password”
We share them way too freely
We forget them all the time
A password manager relieves the burden of thinking up and memorizing unique, complex logins, the hallmark of a secure password.

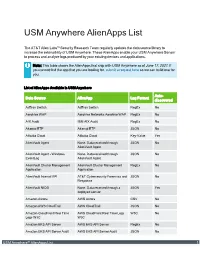

USM Anywhere AlienApps List

USM Anywhere AlienApps List

The AT&T Alien Labs™ Security Research Team regularly updates the data source library to increase the extensibility of USM Anywhere. These AlienApps enable your USM Anywhere Sensor to process and analyze logs produced by your existing devices and applications.

Note: This table shows the AlienApps that ship with USM Anywhere as of June 17, 2021. If you cannot find the app that you are looking for, submit a request here so we can build one for you.

List of AlienApps Available in USM Anywhere

| Data Source | AlienApp | Log Format | Auto-discovered |
| --- | --- | --- | --- |
| AdTran Switch | AdTran Switch | RegEx | No |
| Aerohive WAP | Aerohive Networks Aerohive WAP | RegEx | No |
| AIX Audit | IBM AIX Audit | RegEx | No |
| Akamai ETP | Akamai ETP | JSON | No |
| Alibaba Cloud | Alibaba Cloud | Key-Value | Yes |
| AlienVault Agent | None. Data received through AlienVault Agent | JSON | No |
| AlienVault Agent - Windows EventLog | None. Data received through AlienVault Agent | JSON | No |
| AlienVault Cluster Management Application | AlienVault Cluster Management Application | RegEx | No |
| AlienVault Internal API | AT&T Cybersecurity Forensics and Response | JSON | No |
| AlienVault NIDS | None. Data received through a deployed sensor | JSON | Yes |
| Amazon Aurora | AWS Aurora | CSV | No |
| Amazon AWS CloudTrail | AWS CloudTrail | JSON | No |
| Amazon CloudFront Real Time Logs W3C | AWS CloudFront Real Time Logs W3C | W3C | No |
| Amazon EKS API Server | AWS EKS API Server | RegEx | No |
| Amazon EKS API Server Audit | AWS EKS API Server Audit | JSON | No |