Noise in Integrate-And-Fire Neurons: from Stochastic Input to Escape Rates

Total Pages: 16

File Type: pdf, Size: 1020 KB

Recommended publications

The Stochastic Resonance Model of Auditory Perception: a Unified Explanation of Tinnitus Development, Zwicker Tone Illusion, and Residual Inhibition

bioRxiv preprint doi: https://doi.org/10.1101/2020.03.27.011163; this version posted March 29, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. All rights reserved. No reuse allowed without permission. The Stochastic Resonance model of auditory perception: A unified explanation of tinnitus development, Zwicker tone illusion, and residual inhibition. Achim Schilling1,2, Konstantin Tziridis1, Holger Schulze1, Patrick Krauss1,2,3,4 1 Neuroscience Lab, Experimental Otolaryngology, Friedrich-Alexander University Erlangen-Nürnberg (FAU), Germany 2 Cognitive Computational Neuroscience Group at the Chair of English Philology and Linguistics, Friedrich-Alexander University Erlangen-Nürnberg (FAU), Germany 3 FAU Linguistics Lab, Friedrich-Alexander University Erlangen-Nürnberg (FAU), Germany 4 Department of Otorhinolaryngology/Head and Neck Surgery, University of Groningen, University Medical Center Groningen, The Netherlands Keywords: Auditory Phantom Perception, Somatosensory Projections, Dorsal Cochlear Nucleus, Speech Perception Abstract Stochastic Resonance (SR) has been proposed to play a major role in auditory perception, and to maintain optimal information transmission from the cochlea to the auditory system. By this, the auditory system could adapt to changes of the auditory input at second or even sub-second timescales. In case of reduced auditory input, somatosensory projections to the dorsal cochlear nucleus would be disinhibited in order to improve hearing thresholds by means of SR. As a side effect, the increased somatosensory input corresponding to the observed tinnitus-associated neuronal hyperactivity is then perceived as tinnitus. In addition, the model can also explain transient phantom tone perceptions occurring after ear plugging, or the Zwicker tone illusion.

Stochastic Resonance and Finite Resolution in a Network of Leaky

Stochastic resonance and finite resolution in a network of leaky integrate-and-fire neurons Nhamoinesu Mtetwa Department of Computing Science and Mathematics University of Stirling, Scotland Thesis submitted for the degree of Doctor of Philosophy 2003 ProQuest Number: 13917115 All rights reserved INFORMATION TO ALL USERS The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. ProQuest 13917115 Published by ProQuest LLC (2019). Copyright of the Dissertation is held by the Author. All rights reserved. This work is protected against unauthorized copying under Title 17, United States Code Microform Edition © ProQuest LLC. ProQuest LLC. 789 East Eisenhower Parkway P.O. Box 1346 Ann Arbor, MI 48106-1346 Declaration I declare that this thesis was composed by me and that the work contained therein is my own. Any other people’s work has been explicitly acknowledged. Acknowledgements This thesis wouldn’t have been possible without the assistance of the people acknowledged below. To my supervisor, Prof. Leslie S. Smith, I wish to say thank you for being the best supervisor one could ever wish for. I am specifically thankful for giving me the freedom to walk into your office anytime. This made it easy for me to approach you and discuss any research issues as they arose. Dr. Amir Hussain, a note of thanks for advice on the signal processing aspects of my research.

Stochastic Resonance: Noise-Enhanced Order

Physics – Uspekhi 42 (1) 7–36 (1999) ©1999 Uspekhi Fizicheskikh Nauk, Russian Academy of Sciences REVIEWS OF TOPICAL PROBLEMS PACS numbers: 02.50.Ey, 05.40.+j, 05.45.+b, 87.70.+c Stochastic resonance: noise-enhanced order V S Anishchenko, A B Neiman, F Moss, L Schimansky-Geier Contents 1. Introduction 7 2. Response to a weak signal. Theoretical approaches 10 2.1 Two-state theory of SR; 2.2 Linear response theory of SR 3. Stochastic resonance for signals with a complex spectrum 13 3.1 Response of a stochastic bistable system to multifrequency signal; 3.2 Stochastic resonance for signals with a finite spectral linewidth; 3.3 Aperiodic stochastic resonance 4. Nondynamical stochastic resonance. Stochastic resonance in chaotic systems 15 4.1 Stochastic resonance as a fundamental threshold phenomenon; 4.2 Stochastic resonance in chaotic systems 5. Synchronization of stochastic systems 18 5.1 Synchronization of a stochastic bistable oscillator; 5.2 Forced stochastic synchronization of the Schmitt trigger; 5.3 Mutual stochastic synchronization of coupled bistable systems; 5.4 Forced and mutual synchronization of switchings in chaotic systems 6. Stochastic resonance and synchronization of ensembles of stochastic resonators 24 6.1 Linear response theory for arrays of stochastic resonators; 6.2 Synchronization of an ensemble of stochastic resonators by a weak periodic signal; 6.3 Stochastic resonance in a chain with finite coupling between elements 7. Stochastic synchronization as noise-enhanced order 28 7.1 Dynamical entropy and source entropy in the regime of stochastic synchronization; 7.2 Stochastic resonance and Kullback entropy; 7.3 Enhancement of the degree of order in an ensemble of stochastic oscillators in the SR regime 8.

Neuronal Dynamics

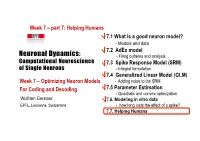

Neuronal Dynamics: Computational Neuroscience of Single Neurons. Week 7 – Optimizing Neuron Models for Coding and Decoding. Wulfram Gerstner, EPFL, Lausanne, Switzerland.

Week 7 – part 7: Helping Humans
7.1 What is a good neuron model? - Models and data
7.2 AdEx model - Firing patterns and analysis
7.3 Spike Response Model (SRM) - Integral formulation
7.4 Generalized Linear Model (GLM) - Adding noise to the SRM
7.5 Parameter Estimation - Quadratic and convex optimization
7.6 Modeling in vitro data - How long does the effect of a spike last?
7.7 Helping Humans

Neuronal Dynamics – Review: Models and Data. Predict spike times; predict subthreshold voltage; easy to interpret (not a ‘black box’); variety of phenomena; systematic: ‘optimize’ parameters. BUT so far limited to in vitro.

Neuronal Dynamics – 7.7 Systems neuroscience, in vivo. Now: extracellular recordings, visual cortex. Model of ‘Encoding’: A) Predict spike times, given stimulus B) Predict subthreshold voltage C) Easy to interpret (not a ‘black box’) D) Flexible enough to account for a variety of phenomena E) Systematic procedure to ‘optimize’ parameters.

Neuronal Dynamics – 7.7 Estimation of receptive fields. Estimation of spatial (and temporal) receptive fields (LNP): $u(t) = \sum_k k_k I_{K-k} + u_{\text{rest}}$, with firing intensity $\rho(t) = f(u(t) - \vartheta(t))$. LNP = Linear-Nonlinear-Poisson (driven by the visual stimulus), a special case of the GLM = Generalized Linear Model. GLM for prediction of retinal ganglion ON cell activity: Pillow et al.

Statistical Analysis of Stochastic Resonance in a Thresholded Detector

AUSTRIAN JOURNAL OF STATISTICS Volume 32 (2003), Number 1&2, 49–70 Statistical Analysis of Stochastic Resonance in a Thresholded Detector Priscilla E. Greenwood, Ursula U. Müller, Lawrence M. Ward, and Wolfgang Wefelmeyer Arizona State University, Universität Bremen, University of British Columbia, and Universität Siegen Abstract: A subthreshold signal may be detected if noise is added to the data. The noisy signal must be strong enough to exceed the threshold at least occasionally; but very strong noise tends to drown out the signal. There is an optimal noise level, called stochastic resonance. We explore the detectability of different signals, using statistical detectability measures. In the simplest setting, the signal is constant, noise is added in the form of i.i.d. random variables at uniformly spaced times, and the detector records the times at which the noisy signal exceeds the threshold. We study the best estimator for the signal from the thresholded data and determine optimal configurations of several detectors with different thresholds. In a more realistic setting, the noisy signal is described by a nonparametric regression model with equally spaced covariates and i.i.d. errors, and the detector records again the times at which the noisy signal exceeds the threshold. We study Nadaraya–Watson kernel estimators from thresholded data. We determine the asymptotic mean squared error and the asymptotic mean average squared error and calculate the corresponding local and global optimal bandwidths. The minimal asymptotic mean average squared error shows a strong stochastic resonance effect. Keywords: Stochastic Resonance, Partially Observed Model, Thresholded Data, Noisy Signal, Efficient Estimator, Fisher Information, Nonparametric Regression, Kernel Density Estimator, Optimal Bandwidth.
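
The simplest setting described in this abstract (a constant subthreshold signal, i.i.d. noise, and a detector that only records threshold exceedances) can be illustrated numerically. The following is a minimal sketch, not the authors' estimator: it assumes zero-mean Gaussian noise, and the function names `exceed_prob`, `fisher_information` and `best_noise_level`, as well as all parameter values, are hypothetical. It computes the Fisher information about the signal carried by one binary exceedance observation, which is small for both very weak and very strong noise.

```python
import math

def exceed_prob(signal, threshold, sigma):
    """P(signal + noise > threshold), assuming zero-mean Gaussian noise."""
    z = (threshold - signal) / sigma
    return 0.5 * math.erfc(z / math.sqrt(2.0))

def fisher_information(signal, threshold, sigma):
    """Fisher information about `signal` in one binary exceedance observation."""
    z = (threshold - signal) / sigma
    p = exceed_prob(signal, threshold, sigma)
    # Derivative of p with respect to the signal: Gaussian density at the threshold.
    dp = math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))
    # Information of a Bernoulli(p) observation about the signal.
    return dp * dp / (p * (1.0 - p))

def best_noise_level(signal, threshold, sigmas):
    """Noise level on the given grid that maximizes the Fisher information."""
    return max(sigmas, key=lambda s: fisher_information(signal, threshold, s))

# Hypothetical example: signal 0.0 sits one unit below the threshold 1.0.
sigmas = [0.1 * k for k in range(1, 51)]  # grid from 0.1 to 5.0
best = best_noise_level(0.0, 1.0, sigmas)
print(f"most informative noise level on this grid: sigma = {best:.1f}")
```

The maximum falls at an intermediate noise level rather than at either end of the grid, which is the qualitative signature of stochastic resonance; other noise distributions or detectability measures would shift the location of the optimum.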

Hebbian Learning and Spiking Neurons

PHYSICAL REVIEW E VOLUME 59, NUMBER 4 APRIL 1999 Hebbian learning and spiking neurons Richard Kempter* Physik Department, Technische Universität München, D-85747 Garching bei München, Germany Wulfram Gerstner† Swiss Federal Institute of Technology, Center of Neuromimetic Systems, EPFL-DI, CH-1015 Lausanne, Switzerland J. Leo van Hemmen‡ Physik Department, Technische Universität München, D-85747 Garching bei München, Germany (Received 6 August 1998; revised manuscript received 23 November 1998) A correlation-based (“Hebbian”) learning rule at a spike level with millisecond resolution is formulated, mathematically analyzed, and compared with learning in a firing-rate description. The relative timing of presynaptic and postsynaptic spikes influences synaptic weights via an asymmetric “learning window.” A differential equation for the learning dynamics is derived under the assumption that the time scales of learning and neuronal spike dynamics can be separated. The differential equation is solved for a Poissonian neuron model with stochastic spike arrival. It is shown that correlations between input and output spikes tend to stabilize structure formation. With an appropriate choice of parameters, learning leads to an intrinsic normalization of the average weight and the output firing rate. Noise generates diffusion-like spreading of synaptic weights. [S1063-651X(99)02804-4] PACS number(s): 87.19.La, 87.19.La, 05.65.+b, 87.18.Sn I. INTRODUCTION Correlation-based or “Hebbian” learning [1] is thought to be an important mechanism for the tuning of neuronal […] In contrast to the standard rate models of Hebbian learning, we introduce and analyze a learning rule where synaptic modifications are driven by the temporal correlations between presynaptic and postsynaptic spikes.

Stochastic Resonance

Stochastic resonance Luca Gammaitoni Dipartimento di Fisica, Università di Perugia, and Istituto Nazionale di Fisica Nucleare, Sezione di Perugia, VIRGO-Project, I-06100 Perugia, Italy Peter Hänggi Institut für Physik, Universität Augsburg, Lehrstuhl für Theoretische Physik I, D-86135 Augsburg, Germany Peter Jung Department of Physics and Astronomy, Ohio University, Athens, Ohio 45701 Fabio Marchesoni Department of Physics, University of Illinois, Urbana, Illinois 61801 and Istituto Nazionale di Fisica della Materia, Università di Camerino, I-62032 Camerino, Italy Over the last two decades, stochastic resonance has continuously attracted considerable attention. The term is given to a phenomenon that is manifest in nonlinear systems whereby generally feeble input information (such as a weak signal) can be amplified and optimized by the assistance of noise. The effect requires three basic ingredients: (i) an energetic activation barrier or, more generally, a form of threshold; (ii) a weak coherent input (such as a periodic signal); (iii) a source of noise that is inherent in the system, or that adds to the coherent input. Given these features, the response of the system undergoes resonance-like behavior as a function of the noise level; hence the name stochastic resonance. The underlying mechanism is fairly simple and robust. As a consequence, stochastic resonance has been observed in a large variety of systems, including bistable ring lasers, semiconductor devices, chemical reactions, and mechanoreceptor cells in the tail fan of a crayfish. In this paper, the authors report, interpret, and extend much of the current understanding of the theory and physics of stochastic resonance.

Connectivity Reflects Coding: a Model of Voltage-Based Spike-Timing-Dependent-P

Connectivity reflects Coding: A Model of Voltage-based Spike-Timing-Dependent-Plasticity with Homeostasis Claudia Clopath, Lars Büsing*, Eleni Vasilaki, Wulfram Gerstner Laboratory of Computational Neuroscience Brain-Mind Institute and School of Computer and Communication Sciences Ecole Polytechnique Fédérale de Lausanne 1015 Lausanne EPFL, Switzerland * permanent address: Institut für Grundlagen der Informationsverarbeitung, TU Graz, Austria Abstract Electrophysiological connectivity patterns in cortex often show a few strong connections, sometimes bidirectional, in a sea of weak connections. In order to explain these connectivity patterns, we use a model of Spike-Timing-Dependent Plasticity where synaptic changes depend on presynaptic spike arrival and the postsynaptic membrane potential, filtered with two different time constants. The model describes several nonlinear effects in STDP experiments, as well as the voltage dependence of plasticity. We show that in a simulated recurrent network of spiking neurons our plasticity rule leads not only to development of localized receptive fields, but also to connectivity patterns that reflect the neural code: for temporal coding paradigms with spatio-temporal input correlations, strong connections are predominantly unidirectional, whereas they are bidirectional under rate coded input with spatial correlations only. Thus variable connectivity patterns in the brain could reflect different coding principles across brain areas; moreover simulations suggest that plasticity is surprisingly fast. Keywords: Synaptic plasticity, STDP, LTP, LTD, Voltage, Spike Introduction Experience-dependent changes in receptive fields [1-2] or in learned behavior relate to changes in synaptic strength. Electrophysiological measurements of functional connectivity patterns in slices of neural tissue [3-4] or anatomical connectivity measures [5] can only present a snapshot of the momentary connectivity, which may change over time [6].

Stochastic Resonance and the Benefit of Noise in Nonlinear Systems

Noise, Oscillators and Algebraic Randomness – From Noise in Communication Systems to Number Theory. pp. 137-155 ; M. Planat, ed., Lecture Notes in Physics, Vol. 550, Springer (Berlin) 2000. Stochastic resonance and the benefit of noise in nonlinear systems François Chapeau-Blondeau Laboratoire d’Ingénierie des Systèmes Automatisés (LISA), Université d’Angers, 62 avenue Notre Dame du Lac, 49000 ANGERS, FRANCE. web : http://www.istia.univ-angers.fr/~chapeau/ Abstract. Stochastic resonance is a nonlinear effect wherein the noise turns out to be beneficial to the transmission or detection of an information-carrying signal. This paradoxical effect has now been reported in a large variety of nonlinear systems, including electronic circuits, optical devices, neuronal systems, material-physics phenomena, chemical reactions. Stochastic resonance can take place under various forms, according to the types considered for the noise, for the information-carrying signal, for the nonlinear system realizing the transmission or detection, and for the quantitative measure of performance receiving improvement from the noise. These elements will be discussed here so as to provide a general overview of the effect. Various examples will be treated that illustrate typical types of signals and nonlinear systems that can give rise to stochastic resonance. Various measures to quantify stochastic resonance will also be presented, together with analytical approaches for the theoretical prediction of the effect. For instance, we shall describe systems where the output signal-to-noise ratio or the input–output information capacity increase when the noise level is raised. Also temporal signals as well as images will be considered. Perspectives on current developments on stochastic resonance will be evoked.

Information Length As a New Diagnostic of Stochastic Resonance †

Proceedings Information Length as a New Diagnostic of Stochastic Resonance † Eun-jin Kim 1,*,‡ and Rainer Hollerbach 2 1 School of Mathematics and Statistics, University of Sheffield, Sheffield S3 7RH, UK 2 Department of Applied Mathematics, University of Leeds, Leeds LS2 9JT, UK; [email protected] * Correspondence: [email protected]; Tel.: +44-2477-659-041 † Presented at the 5th International Electronic Conference on Entropy and Its Applications, 18–30 November 2019; Available online: https://ecea-5.sciforum.net/. ‡ Current address: Fluid and Complex Systems Research Centre, Coventry University, Coventry CV1 2TT, UK. Published: 17 November 2019 Abstract: Stochastic resonance is a subtle, yet powerful phenomenon in which noise plays an interesting role of amplifying a signal instead of attenuating it. It has attracted great attention with a vast number of applications in physics, chemistry, biology, etc. Popular measures to study stochastic resonance include signal-to-noise ratios, residence time distributions, and different information theoretic measures. Here, we show that the information length provides a novel method to capture stochastic resonance. The information length measures the total number of statistically different states along the path of a system. Specifically, we consider the classical double-well model of stochastic resonance in which a particle in a potential $V(x, t) = -x^2/2 + x^4/4 - A \sin(\omega t)\, x$ is subject to an additional stochastic forcing that causes it to occasionally jump between the two wells at $x \approx \pm 1$. We present direct numerical solutions of the Fokker–Planck equation for the probability density function $p(x, t)$ for $\omega = 10^{-2}$ to $10^{-6}$, and $A \in [0, 0.2]$ and show that the information length shows a very clear signal of the resonance.
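
The double-well model in this abstract can be explored with a few lines of code. The abstract reports direct numerical solutions of the Fokker–Planck equation; the sketch below uses a different, sampling-based technique instead, integrating a single trajectory with the Euler–Maruyama scheme. It assumes overdamped Langevin dynamics dx = −∂V/∂x dt + sqrt(2D) dW with a noise strength D, which is an assumption about the form of the stochastic forcing; the function names, the seed and all parameter values are hypothetical.

```python
import math
import random

def potential(x, t, A, omega):
    """Double-well potential V(x, t) = -x^2/2 + x^4/4 - A sin(omega t) x."""
    return -x**2 / 2 + x**4 / 4 - A * math.sin(omega * t) * x

def force(x, t, A, omega):
    """Deterministic drift -dV/dx = x - x^3 + A sin(omega t)."""
    return x - x**3 + A * math.sin(omega * t)

def simulate(x0, A, omega, D, dt, n_steps, seed=0):
    """Euler-Maruyama path of dx = -dV/dx dt + sqrt(2 D) dW (assumed dynamics)."""
    rng = random.Random(seed)
    x = x0
    path = [x]
    for i in range(n_steps):
        t = i * dt
        noise = math.sqrt(2.0 * D * dt) * rng.gauss(0.0, 1.0)
        x = x + force(x, t, A, omega) * dt + noise
        path.append(x)
    return path

# Hypothetical parameters: weak periodic forcing plus moderate noise.
path = simulate(x0=1.0, A=0.1, omega=1e-2, D=0.1, dt=0.01, n_steps=20000)
crossings = sum(1 for a, b in zip(path, path[1:]) if a * b < 0)
print(f"sign changes of x along the path: {crossings}")
```

Without noise and forcing (D = 0, A = 0) the particle simply relaxes into the nearest well at x = ±1; hopping between the wells only occurs once the stochastic term is switched on.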

Neural Coding and Integrate-And-Fire Model

Introduction to computational neuroscience. Doctoral school, Oct-Nov 2006. Wulfram Gerstner, http://diwww.epfl.ch/w3mantra/ Swiss Federal Institute of Technology Lausanne (Ecole Polytechnique Fédérale de Lausanne, EPFL), Laboratory of Computational Neuroscience, LCN, CH 1015 Lausanne.

[Figure: cortex diagram with motor cortex, association cortex, visual cortex, and the pathway to motor output; 1 mm: 10 000 neurons, 3 km wires; signal: action potential (spike).]

Computational Neuroscience, levels of description: behavior, neurons, signals, computational model, molecules, ion channels. Hodgkin-Huxley type models (ions/proteins: Na+, K+, Ca2+; action potential, -70 mV). Integrate-and-fire type models (spike emission, spike reception): spikes are events; threshold; spike/reset/refractoriness. Models of synaptic plasticity (pre, post; synapse): behavior, neurons, synapses, computational model, molecules, ion channels.

Introduction to computational neuroscience
Background: What is brain-style computation? (Brain vs. computer)
Lecture 1: Passive membrane and Integrate-and-Fire model
Lecture 2: Hodgkin-Huxley models (detailed models)
Lecture 3: Two-dimensional models (FitzHugh Nagumo)
Lecture 4: synaptic plasticity
Lecture 5: noise, network dynamics, associative memory

Systems for computing and information processing: brain vs. computer (memory, CPU, input; distributed architecture). Tasks: mathematical, e.g. $5\cos\left(\frac{7\pi}{5}\right)$ (slow vs. fast).

Eligibility Traces and Three-Factor Rules of Synaptic Plasticity

Technische Universität München ENB Elite Master Program Neuroengineering (MSNE) Invited Presentation Prof. Dr. Wulfram Gerstner Ecole polytechnique fédérale de Lausanne (EPFL) Eligibility traces and three-factor rules of synaptic plasticity Abstract: Hebbian plasticity combines two factors: presynaptic activity must occur together with some postsynaptic variable (spikes, voltage deflection, calcium elevation ...). In three-factor learning rules the combination of the two Hebbian factors is not sufficient, but leaves a trace at the synapses (eligibility trace) which decays over a few seconds; only if a third factor (neuromodulator signal) is present, either simultaneously or within a short delay, the actual change of the synapse via long-term plasticity is triggered. After a review of classic theories and recent evidence of plasticity traces from plasticity experiments in rodents, I will discuss two studies from my own lab: the first one is a modeling study of reward-based learning with spiking neurons using an actor-critic architecture; the second one is a joint theory-experimental study showing evidence for eligibility traces in human behavior and pupillometry. Extensions from reward-based learning to surprise-based learning will be indicated. Biography: Wulfram Gerstner is Director of the Laboratory of Computational Neuroscience LCN at the EPFL. He studied physics at the universities of Tübingen and Munich and received a PhD from the Technical University of Munich. His research in computational neuroscience concentrates on models of spiking neurons and spike-timing dependent plasticity, on the problem of neuronal coding in single neurons and populations, as well as on the role of spatial representation for navigation of rat-like autonomous agents.
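
The three-factor mechanism described in this abstract can be made concrete with a small discrete-time sketch. It is an illustration under stated assumptions, not the model from the talk: the eligibility trace is assumed to decay exponentially with a time constant `tau_e` of a few seconds, the weight update is assumed to be the product of a learning rate, the neuromodulator signal and the trace, and the function name `run_three_factor` and all parameter values are hypothetical.

```python
import math

def run_three_factor(hebb_events, modulator, dt=0.01, tau_e=2.0, eta=0.1, w0=0.5):
    """Return the final synaptic weight after a sequence of time steps.

    hebb_events[i]: strength of pre/post coincidence at step i (factors 1 and 2).
    modulator[i]:   neuromodulator signal at step i (factor 3).
    """
    decay = math.exp(-dt / tau_e)  # per-step decay of the eligibility trace
    trace = 0.0
    w = w0
    for hebb, mod in zip(hebb_events, modulator):
        # Hebbian coincidence only marks the synapse; it does not change w.
        trace = trace * decay + hebb
        # The weight changes only while the third factor is present.
        w += eta * mod * trace
    return w

n_steps = 300  # 3 s at dt = 0.01 s
hebb = [0.0] * n_steps
hebb[0] = 1.0                       # coincidence at t = 0 s
reward = [0.0] * n_steps
reward[100] = 1.0                   # neuromodulator arrives at t = 1 s

w_rewarded = run_three_factor(hebb, reward)
w_unrewarded = run_three_factor(hebb, [0.0] * n_steps)
print(f"with third factor: {w_rewarded:.4f}, without: {w_unrewarded:.4f}")
```

In this sketch a neuromodulator pulse that arrives while the trace is still elevated changes the weight, whereas the same Hebbian event without a third factor, or a pulse that precedes any Hebbian event, leaves the weight unchanged.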