Miniatured Inertial Motion and Position Tracking and Visualization Systems Using Android Wear Platform



Recommended publications


A Survey of Smartwatch Platforms from a Developer's Perspective

Grand Valley State University, ScholarWorks@GVSU, Technical Library, School of Computing and Information Systems, 2015. A Survey of Smartwatch Platforms from a Developer's Perspective. Ehsan Valizadeh, Grand Valley State University. Follow this and additional works at: https://scholarworks.gvsu.edu/cistechlib

ScholarWorks Citation: Valizadeh, Ehsan, "A Survey of Smartwatch Platforms from a Developer's Perspective" (2015). Technical Library. 207. https://scholarworks.gvsu.edu/cistechlib/207

This Project is brought to you for free and open access by the School of Computing and Information Systems at ScholarWorks@GVSU. It has been accepted for inclusion in Technical Library by an authorized administrator of ScholarWorks@GVSU. For more information, please contact [email protected].

A project submitted in partial fulfillment of the requirements for the degree of Master of Science in Computer Information Systems at Grand Valley State University, April 2015. Dr. Jonathan Engelsma, April 23, 2015.

Contents: Abstract; Introduction; What Is a Smartwatch ...


Identification of Wearable Devices with Bluetooth (arXiv:1809.10387v1 [cs.CR], 27 Sep 2018)

IEEE TRANSACTIONS ON SUSTAINABLE COMPUTING, VOL. X, NO. X, MONTH YEAR. This work has been accepted in IEEE Transactions on Sustainable Computing. DOI: 10.1109/TSUSC.2018.2808455 URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=8299447&isnumber=7742329 IEEE Copyright Notice: © 2018 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works. arXiv:1809.10387v1 [cs.CR] 27 Sep 2018

Identification of Wearable Devices with Bluetooth. Hidayet Aksu, A. Selcuk Uluagac, Senior Member, IEEE, and Elizabeth S. Bentley

Abstract: With wearable devices such as smartwatches on the rise in the consumer electronics market, securing these wearables is vital. However, the current security mechanisms focus only on validating the user, not the device itself. Indeed, wearables can be (1) unauthorized wearable devices with correct credentials accessing valuable systems and networks, (2) passive insider or outsider wearable devices, or (3) information-leaking wearable devices. Fingerprinting via machine learning can provide the necessary cyber threat intelligence to address all these cyber attacks. In this work, we introduce a wearable fingerprinting technique focusing on the Bluetooth classic protocol, which is a common protocol used by wearables and other IoT devices. Specifically, we propose a non-intrusive wearable device identification framework which utilizes 20 different Machine Learning (ML) algorithms in the training phase of the classification process and selects the best performing algorithm for the testing phase.

Smartwatch Security Research (Trend Micro, 2015)

Smartwatch Security Research, TREND MICRO | 2015

Overview: This report commissioned by Trend Micro in partnership with First Base Technologies reveals the security flaws of six popular smartwatches. The research involved stress testing these devices for physical protection, data connections and information stored to provide definitive results on which ones pose the biggest risk with regards to data loss and data theft.

Summary of Findings
• Physical device protection is poor, with only the Apple Watch having a lockout facility based on a timeout. The Apple Watch is also the only device which allowed a wipe of the device after a set number of failed login attempts.
• All the smartwatches had local copies of data which could be accessed through the watch interface when taken out of range of the paired smartphone. If a watch were stolen, any data already synced to the watch would be accessible. The Apple Watch allowed access to more personal data than the Android or Pebble devices.
• All of the smartwatches we tested were using Bluetooth encryption and TLS over WiFi (for WiFi enabled devices), so consideration has obviously been given to the security of data in transit.
• Android phones can use 'trusted' Bluetooth devices (such as smartwatches) for authentication. This means that the smartphone will not lock if it is connected to a trusted smartwatch. Were the phone and watch stolen together, the thief would have full access to both devices.
• Currently smartwatches do not allow the same level of interaction as a smartphone; however, it is only a matter of time before they do.

Mobius Smatwatches.Key

Development for Smart Watches: Apple WatchKit, Android Wear, and TizenOS

Agenda: History; Tizen for Wearable; Apple Watch; Android Wear; QA

History
First smart watches: Samsung SPH-WP10 (1999); IBM Linux Watch (2000); Microsoft SPOT (2003)
IBM Linux Watches
Today

Tizen for Wearable
• Display: 360x380; 320x320
• Hardware: 512 MB, 4 GB, 1 GHz dual-core
• Sensors: Accelerometer; Gyroscope; Compass (optional); Heart Rate monitor (optional); Ambient Light (optional); UV (optional); Barometer (optional); Camera (optional)
• Input: Touch; Microphone
• Connectivity: BLE
• Devices: Samsung Gear 2, Gear S, Gear, Gear Neo
• Compatibility: Samsung smartphones
Tizen: Samsung Gear S
Tizen for Wearable: Development (Tizen IDE, Tizen Emulator)

Apple Watch
• Display: 390x312; 340x272
• Hardware: 256M, 1 (2)Gb; Apple S1
• Sensors: Accelerometer; Gyroscope; Heart Rate monitor; Barometer
• Input: digital crown; force touch; touch; microphone
• Compatibility: iOS 8.2
• Devices: 24 types
Apple Watch Kit
Apple Watch Kit: Watch Sim

Android Wear
• Display: Round; Rect; 320x290; 320x320; 280x280
• Hardware: 512 MB, 4 GB, 1 GHz (TI OMAP, Qualcomm)
• Sensors: Accelerometer; Gyroscope (optional); Compass (optional); Heart Rate monitor (optional); Ambient Light (optional); UV (optional); Barometer (optional); GPS (optional)
• Input: Touch; Microphone
• Connectivity: BLE
• Devices: Moto 360, LG G Watch, Gear Live, ZenWatch, Sony Smartwatch 3, LG G Watch R
• Compatibility: Android 4.3
Android Wear IDE ...

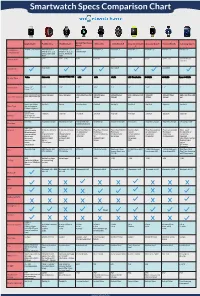

Smartwatch Specs Comparison Chart

Smartwatch Specs Comparison Chart

Devices (in column order): Apple Watch; Pebble Time; Pebble Steel; Alcatel One Touch Watch; Moto 360; LG G Watch R; Sony Smartwatch 3; Asus ZenWatch; Huawei Watch; Samsung Gear S

Smartphone Compatibility: iPhone 5 and newer; Android OS 4.1+, iPhone 4, 4s, 5, 5s, and 5c, iOS 6 and iOS 7; Android OS 4.1+, iPhone 4, 4s, 5, 5s, and 5c, iOS 6 and iOS 7; iOS 7+, Android 4.3+; Android 4.3+; Android 4.3+; Android 4.3+; Android 4.3+; Android 4.3+; Android 4.3+

Price in USD: $349+; $299; $149 - $180; $149; $249.99; $299; $200; $199; $349 - 399; $299, $149 (w/ contract)

Availability (per-device alignment not recoverable): May 2015; June 2015; June 2015

Display Type: (no values extracted)

Screen Size: 38mm: 1.5", 42mm: 1.65"; 1.25"; 1.25"; 1.22"; 1.56"; 1.3"; 1.6"; 1.64"; 1.4"; 2"

Screen Resolution: 38mm: 340x272 (290 ppi), 42mm: 390x312 (302 ppi); 144 x 168; 144 x 168; 204 x 204; 320x290; 320x320; 320 x 320; 320x320; 400x400; 480 x 360

Pixel density of the remaining devices (per-device alignment not recoverable): 258, 269, 300, 205, 245, 278, 286 ppi

Glass Type: Sport: Ion-X Glass, Watch: Sapphire, Edition: Sapphire; Gorilla 3; Gorilla; Corning Glass; Gorilla 3; Gorilla 3; Gorilla 3; Gorilla 3; Sapphire; Gorilla 3

Battery: 205 mAh (approx. 18 hrs, up to 72 hrs on Battery Reserve Mode); 150 mAh; 130 mAh; 210 mAh; 320 mAh; 410 mAh; 420 mAh; 370 mAh; 300 mAh; 300 mAh

Charging: Wireless; Magnetic Charger; Magnetic Charger; Integrated USB inside watch band; Wireless Qi; Magnetic Charger; Micro USB; Magnetic Charger; Magnetic Charger; Charging Cradle

Sensors (per-device alignment not recoverable): Heart Rate, Pulse Oximeter; 3-Axis Accelerometer; 3-Axis Accelerometer; Heart Rate Monitor; Heart Rate Monitor; Heart Rate Monitor; Ambient light; Heart Rate Monitor; Heart ...

User Guide Sony SmartWatch 3

User Guide SmartWatch 3 SWR50

Contents
Getting started
  Introduction
  Overview
  Charging
  Turning on and off
  Setting up the SmartWatch 3
Learning the basics
  Using the touchscreen
  Dimming and waking up the screen
  Home screen
  Cards
  Displaying status icons
Basic settings
  Accessing settings
  Display settings
  Airplane mode
  ...

Open Source Yearbook 2016

OPEN SOURCE YEARBOOK 2016. OPENSOURCE.COM

Opensource.com publishes stories about creating, adopting, and sharing open source solutions. Visit Opensource.com to learn more about how the open source way is improving technologies, education, business, government, health, law, entertainment, humanitarian efforts, and more. Submit a story idea: https://opensource.com/story Email us: [email protected] Chat with us in Freenode IRC: #opensource.com

AUTOGRAPHS

WRITE FOR US

7 big reasons to contribute to Opensource.com:
1. Career benefits: "I probably would not have gotten my most recent job if it had not been for my articles on Opensource.com."
2. Raise awareness: "The platform and publicity that is available through Opensource.com is extremely valuable."
3. Grow your network: "I met a lot of interesting people after that, boosted my blog stats immediately, and even got some business offers!"
4. Contribute back to open source communities: "Writing for Opensource.com has allowed me to give back to a community of users and developers from whom I have truly benefited for many years."
5. Receive free, professional editing services: "The team helps me, through feedback, on improving my writing skills."
6. We're loveable: "I love the Opensource.com team. I have known some of them for years and they are good people."
7. Writing for us is easy: "I couldn't have been more pleased with my writing experience."

Email us to learn more or to share your feedback about writing for us: https://opensource.com/story
Visit our Participate page to learn more about joining in the Opensource.com community: https://opensource.com/participate
Find our editorial team, moderators, authors, and readers on Freenode IRC at #opensource.com: https://opensource.com/irc

Sniffing Your Smartwatch Passwords Via Deep Sequence Learning

Snoopy: Sniffing Your Smartwatch Passwords via Deep Sequence Learning

CHRIS XIAOXUAN LU, University of Oxford, UK; BOWEN DU and HONGKAI WEN, University of Warwick, USA; SEN WANG, Heriot-Watt University, UK; ANDREW MARKHAM and IVAN MARTINOVIC, University of Oxford, UK; YIRAN SHEN, Harbin Engineering University, China; NIKI TRIGONI, University of Oxford, UK

Demand for smartwatches has taken off in recent years with new models which can run independently from smartphones and provide more useful features, becoming first-class mobile platforms. One can access online banking or even make payments on a smartwatch without a paired phone. This makes smartwatches more attractive and vulnerable to malicious attacks, which to date have been largely overlooked. In this paper, we demonstrate Snoopy, a password extraction and inference system which is able to accurately infer passwords entered on Android/Apple watches within 20 attempts, just by eavesdropping on motion sensors. Snoopy uses a uniform framework to extract the segments of motion data when passwords are entered, and uses novel deep neural networks to infer the actual passwords. We evaluate the proposed Snoopy system in the real world with data from 362 participants and show that our system offers a ∼3-fold improvement in the accuracy of inferring passwords compared to the state of the art, without consuming excessive energy or computational resources. We also show that Snoopy is very resilient to user and device heterogeneity: it can be trained on crowd-sourced motion data (e.g. via Amazon Mechanical Turk), and then used to attack passwords from a new user, even if they are wearing a different model.

What Wearable Device Do I Buy and Why? (PC Retreat 2015)

What Wearable Device Do I Buy and Why? PC Retreat 2015. Kevin Martin, Jonathan Lewis, Parag Joshi

What Do You Want in a Smartwatch?
• Fitness Tracking
• Phone notifications
• Tell time
• Long battery life
• Style
• Affordability
• More?

Comparison Chart
Devices (in column order): Motorola Moto 360; Apple Watch; Pebble Classic; Fitbit Charge HR; Sony SmartWatch 3; Microsoft Band
Operating System Syncs With (per-device alignment not recoverable): Windows Vista+, Windows 8.1+, Android 2.3+, Mac OS X 10.6+, Android 4.3+, iOS 8+, Android 4.1+, Android 4.1+, iOS 7.1, iOS 5+, iOS 6+, Android 4.1+
Battery Life: 1.5 days; 1 day; 7 days; 5 days; 2 days; 2 days
Screen Size: 1.56 inches; 1.32 inches; 1.26 inches; .83 inches; 1.6 inches; 11mm x 33mm
Wireless Connectivity (per-device alignment not recoverable): Bluetooth and WiFi; Bluetooth and WiFi; Bluetooth and USB cable; Bluetooth, NFC and WiFi; Bluetooth; Bluetooth
Waterproof?: Up to 30m; No; Up to 50m; No; Up to 1.5m; No
Fitness Tracking? (per-device alignment not recoverable): Built-in + HR + Sleep Tracking; Built-in + HR + UV + GPS; Built-in + HR; Built-in; With app; Built-in
Notifications?: Yes; Yes; Yes; Yes; Yes; Yes
Starting Price: $300; $350; $100; $150; $180; $160

Microsoft Band: Kevin Martin
• Windows 8.1, iOS 7.1+, Android 4.3+
• Battery Life: 2 days
• Screen Size: 11mm x 33mm
• Wireless Connectivity: Bluetooth
• Splash proof
• Built-in fitness tracking, heart rate monitor and GPS
• Virtual keyboard
• Notifications: Alarm, Calendar Reminder, E-mail, Facebook, Incoming Call, Missed Call, Text Message, Timer, Twitter, and Weather
• Starting at $160 with $40 rebate

Sony SmartWatch 3: Jonathan Lewis
• Android ...

Apple Watch: Parag Joshi
• iOS 8+

FREEDOM MICRO Flextech

FREEDOM MICRO FlexTech

The new Freedom Micro FlexTech Sensor Cable allows you to integrate the wearables FlexSensor™ into the Freedom Micro Alarming Puck, providing both power and security for most watches. T: 800.426.6844 | www.MTIGS.com © 2019 MTI All Rights Reserved

KEY FEATURES AND BENEFITS

Security for Wearables - FlexTech
• Available in both White and Black
• Powers and secures most watches via the Wearables FlexSensor
• To be used only with the Freedom Micro Alarming Puck
• Multiple alarming points: cable is cut; FlexSensor is cut; cable is removed from puck

Complete Merchandising Flexibility
• Innovative Spin-and-Secure Design Keeps Device in Place
• Small, Self-contained System (Security, Power, Alarm) Minimizes Cables and Clutter
• Available in White or Black

The Most Secure Top-Mount System Available
• Industry's Highest Rip-Out Force via Cut-Resistant TruFeel AirTether™
• Electronic Alarm Constantly Attached to Device for 1:1 Security

PRODUCT SPECIFICATIONS
FlexTech Head: 1.625"w x 1.625"d x .5"h
FlexTech Cable Length: 6"
Micro Riser Dimensions: 1.63"w x 1.63"d x 3.87"h
Micro Mounting Plate Dimensions: 2.5"w x 2.5"d x 0.075"h
Micro Puck Dimensions: 1.5"w x 1.5"d x 1.46"h
Powered Merchandising: Powers up to 5.2 V at 2.5 A
Cable Pull Length: 30" via TruFeel AirTether™
Electronic Security: Alarm stays on the device
Alarm: Up to 100 dB
Allowable Revolutions: Unlimited (via swivel)

TO ORDER: Visual System ...

02/10/2019 Tuesday

Advertisement: Kadir KAYIKÇI, "a leader in its sector". Buy 3 books, get 1 book free. Guaranteed repair and maintenance of your photocopiers. The only store offering books, stationery, and photocopying at the most affordable prices. On exam preparation books, buy 4 books ... Tel: 0354 212 29 99, 0505 254 72 59, 0505 610 58 29. Medrese Mah. Lise Cad. No.12 (underground), YOZGAT

2 OCTOBER 2019, WEDNESDAY. ISSUE: 2262. PRICE: 1.00 TL. www.yozgatcamlik.com

Our fellow townsman Mehmet Erol, Deputy Regional Director of Forestry for İzmir, visited Fatih Nature Park and gave the message, "When you come to Yozgat, be sure to stop by here." (page 2)

People from Nevşehir have laid claim to "testi kebabı", Yozgat's registered local product. In response to the people of Nevşehir, who claim that testi kebabı is their own local delicacy, the people of Yozgat stood up for their registered local product.

A LEGACY FROM THE OTTOMANS TO THE PEOPLE OF YOZGAT. Zafer Özışık, owner of Zafer Türk Mutfağı, who stated that testi kebabı is a local delicacy inherited by the people of Yozgat from the Ottomans, said, "Testi kebabı was ours yesterday, it is ours today, and it will be ours tomorrow." (page 3)

Sarıkaya Mayor Ömer Açıkel, who brought natural gas to his district through a major mobilization effort, has rolled up his sleeves to repair the roads damaged by the natural gas works. (page 2)

AŞURE BROUGHT THE PEOPLE OF BAYATÖREN TOGETHER. The "Aşure" event drew participants from different parts of Turkey and from abroad. (page 4)

The guest of Çamlık Gazetesi's tradesmen's corner was 30-year-old Süleyman Altınok, owner of MSA Oto Cam. Süleyman Altınok, who has worked as a tradesman in various lines of business for 15 years and currently provides auto glass services, ...

WE WISH THEM HAPPINESS. Göksu Öztürk, one of the doctors raised in Yozgat, ... the first step on the road to marriage with Emre Akpınar ...

The Complete 2017 Smartwatch Buyer's Guide

The Complete 2017 Smartwatch Buyer's Guide

The smartwatch ecosystem is more diverse than ever. Fashion designers and fitness trackers have joined the ranks of electronics brands and computer manufacturers, offering watches designed for every taste and lifestyle, from office executive to fitness nut to outdoor adventurer, and just about everyone in between. Which is the right smartwatch for you? The answer depends on which features are most important to you. That's why we've broken this guide down into six categories and reviewed the top two smartwatches in each of them in detail. Let's dive in.

Compatibility

Almost all smartwatches on the market are designed to pair with smartphones. Even most apps designed to run natively on watches still need to sync with their phone counterparts, and some smartwatches are completely dependent on phones to display the messages of their alerts and notifications. This means your smartwatch will be all but useless if it's not compatible with your phone's OS, so compatibility is the first feature you need to be absolutely sure about.

As you'd expect, most Apple Watches work seamlessly with most iPhones. The situation gets slightly more complex in the Android smartwatch world, where all watches are compatible with Android phones but vary in their compatibility with iPhones. For example, Samsung's Gear S3 works with many Android devices, but not with an iPhone, while certain watches by LG and Huawei can display notifications on an iPhone but can't connect to the phone's wi-fi or sync data with many iOS apps.