3D Medical Image Segmentation in Virtual Reality

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Management of Large Sets of Image Data: Capture, Databases, Image Processing, Storage, Visualization (Karol Kozak)

Management of large sets of image data: Capture, Databases, Image Processing, Storage, Visualization. Karol Kozak. 1st edition. © 2014 Karol Kozak & bookboon.com. ISBN 978-87-403-0726-9.

Contents: 1 Digital image; 2 History of digital imaging; 3 Amount of produced images – is it danger?; 4 Digital image and privacy; 5 Digital cameras; 5.1 Methods of image capture; 6 Image formats; 7 Image Metadata – data about data; 8 Interactive visualization (IV); 9 Basic of image processing; 10 Image Processing software; 11 Image management and image databases; 12 Operating system (os) and images; 13 Graphics processing unit (GPU); 14 Storage and archive; 15 Images in different disciplines; 15.1 Microscopy; 15.2 Medical imaging; 15.3 Astronomical images; 15.4 Industrial imaging; 16 Selection of best digital images; References.

Medical Image Processing Software

Wohlers Report 2018. Medical Image Processing Software, by Andy Christensen and Nicole Wake. Patient-specific medical devices and anatomical models are almost always produced using radiological imaging data. Medical image processing software is used to translate between radiology file formats and various AM file formats. Theoretically, any volumetric radiological imaging dataset could be used to create these devices and models. However, without high-quality medical image data, the output from AM can be less than ideal. In this field, the old adage of “garbage in, garbage out” definitely applies. Due to the relative ease of image post-processing, computed tomography (CT) is the usual method for imaging bone structures and contrast-enhanced vasculature. In the dental field and for oral and maxillofacial surgery, in-office cone-beam computed tomography (CBCT) has become popular. Another popular imaging technique that can be used to create anatomical models is magnetic resonance imaging (MRI). MRI is less useful for bone imaging, but its excellent soft tissue contrast makes it useful for soft tissue structures, solid organs, and cancerous lesions. Computed tomography: CT uses many X-ray projections through a subject to computationally reconstruct a cross-sectional image. As with traditional 2D X-ray imaging, a narrow X-ray beam is directed to pass through the subject and project onto an opposing detector. To create a cross-sectional image, the X-ray source and detector rotate around a stationary subject and acquire images at a number of angles. An image of the cross-section is then computed from these projections in a post-processing step.

Avizo Software for Industrial Inspection

Avizo Software for Industrial Inspection. Digital inspection and materials analysis. Thermo Scientific™ Avizo™ Software provides a comprehensive set of tools addressing the whole research-to-production cycle: from materials research in off-line labs to automated quality control in production environments. Whatever the part or material you need to inspect, using X-ray CT, radiography, or microscopy, Avizo Software is the solution of choice for materials characterization and defect detection in a wide range of areas (additive manufacturing, aerospace, automotive, casting, electronics, food, manufacturing) and for many types of materials (fibrous, porous, metals and alloys, ceramics, composites and polymers). Avizo Software also provides dimensional metrology with advanced measurements; an extensive set of programmable automated analysis workflows (recipes); reporting and traceability; actual/nominal comparison by integrating CAD models; and a fully automated in-line inspection framework. With Avizo Software, reduce your design cycle, inspection times, and meet higher-level quality standards at a lower cost.

Digital workflow: 3D image data acquisition (image © RX Solutions); image processing; visual inspection; dimensional metrology; material characterization & defect analysis; creation and automation of inspection and analysis workflows; full in-line integration; reporting & traceability.

On the cover: Porosity analysis and dimensional metrology on compressor housing. Data courtesy of CyXplus. Learn more at thermofisher.com/amira-avizo

Integrating expertise acquired over more than 10 years and developed in collaboration with major industrial partners in the aerospace, automotive, and consumer goods industries, Avizo Software allows to visualize, analyze, measure and inspect parts and materials.

Porosity analysis: Imaging techniques such as CT, FIB-SEM, SEM, and TEM allow detection of structural defects in the

Respiratory Adaptation to Climate in Modern Humans and Upper Palaeolithic Individuals from Sungir and Mladeč Ekaterina Stansfeld1*, Philipp Mitteroecker1, Sergey Y

www.nature.com/scientificreports. Respiratory adaptation to climate in modern humans and Upper Palaeolithic individuals from Sungir and Mladeč. Ekaterina Stansfeld, Philipp Mitteroecker, Sergey Y. Vasilyev, Sergey Vasilyev & Lauren N. Butaric. As our human ancestors migrated into Eurasia, they faced a considerably harsher climate, but the extent to which human cranial morphology has adapted to this climate is still debated. In particular, it remains unclear when such facial adaptations arose in human populations. Here, we explore climate-associated features of face shape in a worldwide modern human sample using 3D geometric morphometrics and a novel application of reduced rank regression. Based on these data, we assess climate adaptations in two crucial Upper Palaeolithic human fossils, Sungir and Mladeč, associated with a boreal-to-temperate climate. We found several aspects of facial shape, especially the relative dimensions of the external nose, internal nose and maxillary sinuses, that are strongly associated with temperature and humidity, even after accounting for autocorrelation due to geographical proximity of populations. For these features, both fossils revealed adaptations to a dry environment, with Sungir being strongly associated with cold temperatures and Mladeč with warm-to-hot temperatures. These results suggest relatively quick adaptative rates of facial morphology in Upper Palaeolithic Europe. The presence and the nature of climate adaptation in modern humans is a highly debated question, and not much is known about the speed with which these adaptations emerge. Previous studies demonstrated that the facial morphology of recent modern human groups has likely been influenced by adaptation to cold and dry climates [1–9]. Although the age and rate of such adaptations have not been assessed, several lines of evidence indicate that early modern humans faced variable and sometimes harsh environments of the Marine Isotope Stage 3 (MIS3) as they settled in Europe 40,000 years BC [10].

An Open Source Freeware Software for Ultrasound Imaging and Elastography

Proceedings of the eNTERFACE’07 Workshop on Multimodal Interfaces, İstanbul, Turkey, July 16 - August 10, 2007. USIMAGTOOL: AN OPEN SOURCE FREEWARE SOFTWARE FOR ULTRASOUND IMAGING AND ELASTOGRAPHY. Rubén Cárdenes-Almeida 1, Antonio Tristán-Vega 1, Gonzalo Vegas-Sánchez-Ferrero 1, Santiago Aja-Fernández 1, Verónica García-Pérez 1, Emma Muñoz-Moreno 1, Rodrigo de Luis-García 1, Javier González-Fernández 2, Darío Sosa-Cabrera 2, Karl Krissian 2, Suzanne Kieffer 3. 1 LPI, University of Valladolid, Spain; 2 CTM, University of Las Palmas de Gran Canaria; 3 TELE Laboratory, Université catholique de Louvain, Louvain-la-Neuve, Belgium.

ABSTRACT UsimagTool will prepare specific software for the physician to change parameters for filtering and visualization in Ultrasound Medical Imaging in general and in Elastography in particular, being the first software tool for researchers and physicians to compute elastography with integrated algorithms and modular coding capabilities. It will be ready to implement in different ecographic systems. UsimagTool is based on C++, and VTK/ITK functions through a hidden layer, which means that participants may import their own functions and/or use the VTK/ITK functions.

• Open source code: to be able for everyone to modify and reuse the source code.
• Efficiency, robust and fast: using a standard object oriented language such as C++.
• Modularity and flexibility for developers: in order to change or add functionalities as fast as possible.
• Multi-platform: able to run in many Operating systems to be useful for more people.
• Usability: provided with an easy to use GUI to interact as easy as possible with the end user.

Modelling of the Effects of Entrainment Defects on Mechanical Properties in Al-Si-Mg Alloy Castings

MODELLING OF THE EFFECTS OF ENTRAINMENT DEFECTS ON MECHANICAL PROPERTIES IN AL-SI-MG ALLOY CASTINGS By YANG YUE A dissertation submitted to University of Birmingham for the degree of DOCTOR OF PHILOSOPHY School of Metallurgy and Materials University of Birmingham June 2014 University of Birmingham Research Archive e-theses repository This unpublished thesis/dissertation is copyright of the author and/or third parties. The intellectual property rights of the author or third parties in respect of this work are as defined by The Copyright Designs and Patents Act 1988 or as modified by any successor legislation. Any use made of information contained in this thesis/dissertation must be in accordance with that legislation and must be properly acknowledged. Further distribution or reproduction in any format is prohibited without the permission of the copyright holder. Abstract Liquid aluminium alloy is highly reactive with the surrounding atmosphere and therefore, surface films, predominantly surface oxide films, easily form on the free surface of the melt. Previous researches have highlighted that surface turbulence in liquid aluminium during the mould-filling process could result in the fold-in of the surface oxide films into the bulk liquid, and this would consequently generate entrainment defects, such as double oxide films and entrapped bubbles in the solidified casting. The formation mechanisms of these defects and their detrimental effects on both mechanical properties and reproducibility of properties of casting have been studied over the past two decades. However, the behaviour of entrainment defects in the liquid metal and their evolution during the casting process are still unclear, and the distribution of these defects in casting remains difficult to predict.

An Open-Source Visualization System

OpenWalnut – An Open-Source Visualization System. Sebastian Eichelbaum 1, Mario Hlawitschka 2, Alexander Wiebel 3, and Gerik Scheuermann 1. 1 Abteilung für Bild- und Signalverarbeitung, Institut für Informatik, Universität Leipzig, Germany; 2 Institute for Data Analysis and Visualization (IDAV), and Department of Biomedical Imaging, University of California, Davis, USA; 3 Max-Planck-Institut für Kognitions- und Neurowissenschaften, Leipzig, Germany. [email protected]

Abstract In the last years a variety of open-source software packages focusing on visualization of human brain data have evolved. Many of them are designed to be used in a pure academic environment and are optimized for certain tasks or special data. The open source visualization system we introduce here is called OpenWalnut. It is designed and developed to be used by neuroscientists during their research, which enforces the framework to be designed to be very fast and responsive on the one side, but easily extendable on the other side. OpenWalnut is a very application-driven tool and the software is tuned to ease its use. Whereas we introduce OpenWalnut from a user's point of view, we will focus on its architecture and strengths for visualization researchers in an academic environment.

1 Introduction The ongoing research into neurological diseases and the function and anatomy of the brain employs a large variety of examination techniques. The different techniques aim at findings for different research questions or different viewpoints of a single task. The following are only a few of the very common measurement modalities and parts of their application area: computed tomography (CT, for anatomical information using X-ray measurements), magnetic-resonance imaging (MRI, for anatomical information using magnetic resonance esp.

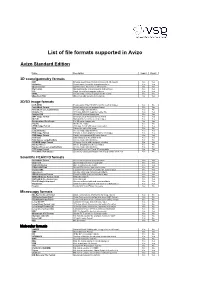

List of File Formats Supported in Avizo

List of file formats supported in Avizo. Avizo Standard Edition.

3D scene/geometry formats

| Name | Description | Import | Export |
|---|---|---|---|
| DXF | Drawing Interchange Format for AutoCAD 3D models | Yes | Yes |
| HxSurface | Avizo's native format for triangular surfaces | Yes | Yes |
| Open Inventor | Open Inventor file format for 3D models | Yes | Yes |
| Ply Format | Stanford triangle format for points and surfaces | Yes | Yes |
| STL | Simple format for triangular surfaces | Yes | Yes |
| VRML | Virtual reality markup language for 3D models | Yes | Yes |
| Wavefront OBJ | Wavefront OBJ generic 3D file format | No | Yes |

2D/3D image formats

| Name | Description | Import | Export |
|---|---|---|---|
| ACR-NEMA | Predecessor of the DICOM format for medical images | Yes | No |
| AmiraMesh Format | Avizo's native general purpose format | Yes | Yes |
| AmiraMesh as LargeDiskData | Access image data blockwise | Yes | No |
| Analyze 7.5 | 3D image data with separate header file | Yes | Yes |
| AnalyzeAVW | 2D and 3D medical image data | Yes | Yes |
| BMP Image Format | Uncompressed Windows bitmap format | Yes | Yes |
| DICOM | Standard file format for medical images | Yes | Yes |
| Encapsulated PostScript | For 2D raster images only | No | Yes |
| Interfile | Interfile file reader | Yes | No |
| JPEG Image Format | 2D image format with lossy compression | Yes | Yes |
| LDA | VolumeViz native file format | Yes | No |
| LargeDiskData | Access image data blockwise | Yes | Yes |
| PNG Image Format | Portable network graphics format for 2D images | Yes | Yes |
| PNM Image Format | Simple uncompressed 2D image format | Yes | Yes |
| Raw Data | Binary data as a 3D uniform field | Yes | Yes |
| Raw Data as LargeDiskData | Access image data blockwise | Yes | No |
| SGI-RGB Image Format | 2D image format with run-length encoding | Yes | Yes |

Stacked-Slices Info

FIB-SEM) Tomography Hui Yuan, B Van De Moortele, Thierry Epicier

Accurate post-mortem alignment for Focused Ion Beam and Scanning Electron Microscopy (FIB-SEM) tomography. Hui Yuan, B van de Moortele, Thierry Epicier.

To cite this version: Hui Yuan, B van de Moortele, Thierry Epicier. Accurate post-mortem alignment for Focused Ion Beam and Scanning Electron Microscopy (FIB-SEM) tomography. 2020. hal-03024445. HAL Id: hal-03024445, https://hal.archives-ouvertes.fr/hal-03024445. Preprint submitted on 25 Nov 2020.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

H. Yuan 1, 2*, B. Van de Moortele 2, T. Epicier 1, 3 §. 1. Université de Lyon, INSA-Lyon, Université Claude Bernard Lyon1, MATEIS, umr CNRS 5510, 69621 Villeurbanne Cedex, France. 2. Université de Lyon, ENS-Lyon, LGLTPE, umr CNRS 5276, 69364 Lyon 07, France. 3. Université de Lyon, Université Claude Bernard Lyon1, IRCELYON, umr CNRS 5256, 69626 Villeurbanne Cedex, France.

Abstract: Drifts in the three directions (X, Y, Z) during FIB-SEM slice-and-view tomography are an important issue in 3D-FIB experiments which may induce significant inaccuracies in the subsequent volume reconstruction and further quantification of morphological volume parameters of the sample microstructure.

A Method of Superimposition of CBCT Volumes in the Posterior Cranial Base

A Method of Superimposition of CBCT Volumes in the Posterior Cranial Base. A Thesis Submitted to The Temple University Graduate Board In Partial Fulfillment of the Requirements for the Degree MASTER OF SCIENCE In ORAL BIOLOGY. By Jared R. Gianquinto, D.M.D. Orhan C. Tuncay, D.M.D., Advisor, Department of Orthodontics. James J. Sciote, D.D.S., Ph.D., M.S., Committee, Department of Orthodontics. Jie Yang, D.D.S., M.Med.Sc., M.S., D.M.D., Committee, Department of Radiology. August, 2011.

ABSTRACT Three-dimensional imaging in the form of Cone Beam Computed Tomography has become prevalent in the field of orthodontics. Analytical methods of resulting volumetric data sets have not kept pace with the technology capable of producing them. Current 3D analysis techniques are largely adaptations of existing 2D methods, offering no clear diagnostic advantage over traditional imaging techniques in light of increased radiation exposure, and cannot be compared with norms generated from 2D image capture sources. In order to study morphology in 3D, data sets must be generated for longitudinal studies and native 3D analytical methods must also be developed. Existing methods of CBCT volume superimposition are cumbersome, involving complex software pipelines and multiple systems to complete the process. The goal of the current study was to develop a reproducible method of CBCT volume superimposition in the posterior cranial base in a single software package, and construct an easy to follow, step-by-step manual to facilitate future studies in craniofacial morphology. Existing anonymized sequential CBCT volumes of three subjects meeting inclusion criteria were obtained from the Kornberg School of Dentistry Department of Radiology.

Photoshop: Batch Processing of Data to Make 8-Bit Data

SHORT COURSE In association with iDigBio, APRIL 30 and MAY 1, 2014

CONTENTS
• BASICS OF SAMPLE CHOICE/PREPARATION FOR CT SCANNING: Size, Shape, and Resolution; Sample Mounting
• PROGRAMS USED/AVAILABLE FOR IMAGE PROCESSING: Freeware; Commercial 3D Visualization & Measurement Software; Animations
• PROCESSING WITH ImageJ: Resizing and Cropping Dataset; Orthogonally Reslicing Data; Adding Slice Numbers; Other Useful Features
• AVIZO

ECG Numerical Modelling for Patients with Pathologies

ECG numerical modelling for patients with pathologies. Alexander Danilov, Institute of Numerical Mathematics, Russian Academy of Sciences, Moscow, Russia. 6th Russian workshop on mathematical models and numerical methods in biomathematics. Alexander Danilov (INM RAS), ECG modelling, Biomathematics VI.

Bioimpedance measurements
• Impedance is measured
• Several electric frequencies
• Local and segmental electrode schemes
• Hydration estimation, fat-free mass, muscle mass, etc.
• Noninvasive, efficient, portable, simple and convenient

Research project at INM RAS, Moscow, Russia, 2010 – present: Alexander Danilov, INM RAS; Vasily Kramarenko, MIPT; Victoria Salamatova, MIPT; Alexandra Yurova, MSU.
• Finite element method and adaptive tetrahedral grids for bioimpedance measurements modelling
• High-resolution multimaterial 3D models based on real human anatomy
• Sensitivity analysis for tetrapolar electrode schemes

S. Grimnes, O.G. Martinsen. Bioimpedance and Bioelectricity Basics. Elsevier, Amsterdam, 2008.

Mathematical model

$$\operatorname{div}(C \nabla U) = 0 \quad \text{in } \Omega$$
$$J_n = \pm I_0 / S_\pm \quad \text{on } \Gamma_\pm$$
$$J_n = 0 \quad \text{on } \partial\Omega \setminus \Gamma_\pm$$

$U$: potential field; $C$: conductivity tensor; $E = \nabla U$: intensity field; $J = CE$: current density field; $I_0$: current injection; $S_\pm$: contact surface of electrodes.

Bioelectrical conductivity: typical conductivity parameters at 50 kHz (S/m)

| Tissue | Conductivity (S/m) |
|---|---|
| Blood | 0.7 + 0.02·j |
| Muscles | 0.36 + 0.035·j |
| Fat | 0.0435 + 0.001·j |
| Bones | 0.021 + 0.001·j |
| Skin | 0.03 + 0.06·j |
| Heart | 0.19 + 0.045·j |
| Lungs | 0.27 + 0.025·j |

Gabriel S., Lau R.W., Gabriel C.