Tinsl Is Not a Shading Language

Recommended publications

-

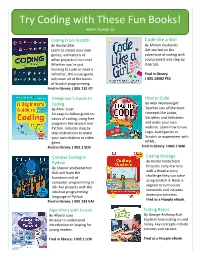

Try Coding with These Fun Books!

www.mywpl.ca

Coding From Scratch, by Rachel Ziter. Learn to create your own games, animations or other projects in no time! Whether you're just learning to code or need a refresher, this visual guide will cover all of the basics of Scratch programming. Find in library: J 005.133 ZIT

Code like a Girl, by Miriam Peskowitz. Get started on the adventure of coding with cool projects and step-by-step tips. Find in library: J 005.13082 PES

A Beginner's Guide to Coding, by Marc Scott. An easy-to-follow guide to the basics of coding, using free programs like Scratch and Python. Includes step-by-step instructions to make your own chatbot or video game. Find in library: J 005.1 SCO

How to Code, by Max Wainewright. Teaches you all the basic concepts like Loops, Variables and Selections, and how to make your own website. Learn how to use Lego, build games in Scratch or experiment with HTML. Find in library: J 000.1 WAI

Creative Coding in Python, by Sheena Vaidyanathan. Kids will learn the fundamentals of computer programming in 30+ fun projects with the intuitive programming language of Python. Find in library: J 005.133 VAI

Coding Onstage, by Kristin Fontichiaro. Presents early learners with a theatre story challenge they can solve using Scratch 3. Book is aligned to curriculum standards and includes extension activities. Find as a Hoopla eBook.

Algorithms with Frozen, by Allyssa Loya. An easy-to-understand introduction to looping for young readers.

Coding Basics, by George Anthony Kulz. Explains how coding is used today, key concepts include [...]

Code-Bending: a New Creative Coding Practice

Ilias Bergstrom (1), R. Beau Lotto (2)

(1) EventLAB, Universitat de Barcelona, Campus de Mundet - Edifici Teatre, Passeig de la Vall d'Hebron 171, 08035 Barcelona, Spain. (2) Lottolab Studio, University College London, 11-43 Bath Street, London, EC1V 9EL.

Key-words: creative coding, programming as art, new media, parameter mapping.

Abstract. Creative coding, artistic creation through the medium of program instructions, is constantly gaining traction, with a steady stream of new resources emerging to support it. The question of how creative coding is carried out, however, still deserves increased attention: in what ways may the act of program development be rendered conducive to artistic creativity? As one possible answer to this question, we here present and discuss a new creative coding practice, that of Code-Bending, alongside examples and considerations regarding its application.

Introduction. With the creative coding movement, artists have transcended the dependence on using only pre-existing software for creating computer art. In this ever-expanding body of work, the medium is programming itself, where the piece of Software Art is not made using a program; instead it is the program [1]. Its composing material is not paint on paper or collections of pixels, but program instructions. From being an uncommon practice, creative coding is today increasingly gaining traction. Schools of visual art, music, design and architecture teach courses on creatively harnessing the medium. The number of programming environments designed to make creative coding approachable by practitioners without formal software engineering training has expanded, reflecting creative coding's widened user base.

Progressive Rendering of Massive Point Clouds in WebGL 2.0 Compute

Bachelor's thesis submitted in partial fulfillment of the requirements for the degree of Bachelor of Science in Software & Information Engineering by Wolfgang Rumpler (registration number 01526299) to the Faculty of Informatics at the TU Wien. Advisor: Associate Prof. Dipl.-Ing. Dipl.-Ing. Dr.techn. Michael Wimmer. Assistance: Dipl.-Ing. Markus Schütz. Vienna, 10th October, 2019. Technische Universität Wien, A-1040 Wien, Karlsplatz 13, Tel. +43-1-58801-0, www.tuwien.ac.at

Declaration of Authorship. Wolfgang Rumpler. I hereby declare that I have written this thesis independently, that I have fully cited all sources and aids used, and that I have clearly marked as borrowed, with a citation of the source, all parts of the thesis (including tables, maps and figures) that are taken from other works or from the Internet, whether verbatim or in substance. Vienna, 10th October, 2019.

Acknowledgements. I would like to take this opportunity to thank everyone who supported me in completing this stage of my studies.

Creative Coding and Visual Portfolios for CS1

Scholarship, Research, and Creative Work at Bryn Mawr College. Computer Science Faculty Research and Scholarship, Computer Science, 2012.

Custom Citation: Greenberg, I. et al. 2012. Creative coding and visual portfolios for CS1. Proceedings of the 43rd ACM Technical Symposium on Computer Science Education, February 29-March 03, 2012, Raleigh, North Carolina: 247-252. This paper is posted at Scholarship, Research, and Creative Work at Bryn Mawr College: http://repository.brynmawr.edu/compsci_pubs/59

Ira Greenberg, Center of Creative Computation, Dept. of Comp. Sci. & Engineering, Southern Methodist University, Dallas, TX (USA)

Deepak Kumar, Department of Computer Science, Bryn Mawr College, Bryn Mawr, PA (USA), (1) 610-526-7485

Dianna Xu, Department of Computer Science, Bryn Mawr College, Bryn Mawr, PA (USA), (1) 610-526-6502

ABSTRACT. In this paper, we present the design and development of a new approach to teaching the college-level introductory computing course (CS1) using the context of art and creative coding.

[...] Notable efforts include media computation [Guzdial 2004], robots [Summet et al 2009], games/animation [Moskal et al 2004, Bayless & Stout 2006], and music [Beck et al 2011]. Arguments [...]

Inclusive XR Roadmap Possible Next Steps

● Research
  ○ Where? Industry? Academia? EU project? RQTF?
● Prototyping & experimentation
  ○ Collaboration / open source opportunities
● (Pre-) standardization

PRIORITIES?

Immersive Web Architecture Hooks

● Immersive Web Application (Best Practices)
● Engine / Framework (Best Practices, prototypes, patches)
● Web browser (APIs, Best Practices)
● XR Platform
● Operating System

Towards standardizing solutions in W3C

● W3C standardizes technologies through its Recommendation process
● Working Groups are responsible for standardization - for W3C Member organizations and Invited Experts
● Before starting standardization efforts, the preference is to incubate the ideas
● W3C has an incubation program open for free to anyone - Community Groups

Existing Standardization Landscape (W3C)

● Immersive Web Working Group
  ○ WebXR: Core, Gamepad, Augmented Reality modules
● ARIA Working Group
  ○ ARIA
● Accessible Platforms Architecture Working Group
  ○ RQTF
  ○ XR Accessibility User Requirements (Note)
  ○ Personalization Task Force
● Timed Text Working Group
  ○ TTML, IMSC
  ○ WebVTT
● Audio Working Group
  ○ Web Audio API
● Accessibility Guidelines Working Group
  ○ WCAG 2.x, Silver

Existing Incubation Landscape (W3C)

● Immersive Web Community Group
  ○ Potential host for any new proposals related to Immersive Web
  ○ Ongoing discussion on Define and query properties of "things" #54
● Immersive Captions Community Group
  ○ Best practices for captions in Immersive Media
● Web Platform Incubator Community Group (WICG)
  ○ Accessibility Object [...]

Visual Programming and Creative Code: a Maker Perspective in Software Studies

Master's thesis, MA Media Studies, New Media & Digital Culture, Utrecht University, Netherlands. Jelke de Boer, 3884023.

Abstract. If software is the cornerstone of (post)modern society, then it is the algorithm that serves as the primary tool of the information age. The field of software studies investigates how software affects culture and society. The initial efforts have contributed to the understanding of the "nature" of code and of widespread 'popular' or 'mainstream' productivity software. This thesis shifts attention to visual programming, a popular tool in artistic performance, interactive installations and electronic music production. How can visual programming be understood as an artistic tool? As an overarching theme, three concepts will be addressed: Materiality, Agency and Sociality. Building upon actor network theory, it will be argued that, as a tool, visual programming should be understood as an act of creative coding that attempts to make hardware performative. Furthermore, it will also be argued that, aside from the arts, engineering and design, a new tradition of creators has emerged from maker culture.

Keywords: Software studies, Visual Programming, Artistic Tools, Creative Code, Agency, Performance, DIY, Maker Culture, Actor Network Theory, Design Fiction.

Acknowledgments. The list of people who deserve to be mentioned for their support is long, including a network of people that covers all three major educational institutions in the Utrecht area: Utrecht University, the University of Applied Sciences Utrecht and the University of the Arts Utrecht. The Utrecht area offers a wonderful climate for students, lecturers and artists, three roles that I have tried to combine while following the New Media & Digital Culture program.

Code Bending: a New Creative Coding Practice

General article. Ilias Bergstrom and R. Beau Lotto.

ABSTRACT. Creative coding, or artistic creation through the medium of program instructions, is constantly gaining traction, and there is a steady stream of new resources emerging to support it. However, the question of how creative coding is carried out still deserves more attention. In what ways may the act of program development be rendered conducive to artistic creativity? As one possible answer to this question, the authors present and discuss a new creative coding practice, that of code bending, alongside examples and considerations regarding its applications.

With the creative coding movement, artists have transcended the dependence on using only pre-existing software for creating computer art.

[...] include sketching, oil painting, creating pieces from found objects and live painting. Note the notions of art practice and of technique overlap somewhat: A painter may regard applying oil paint with a palette knife instead of a paintbrush as employing a new technique. A painter in his studio versus a live painter in front of an audience may on the other hand employ the same techniques, but engage in different practices. While programming has been established as a medium for art, there is still much room for analogous discussion on how creative coding practice may be carried out. In what ways may software development, largely established as [...]

Interdisciplinary Research As Musical Experimentation: a Case Study in Musicianly Approaches to Sound Corpora

Proceedings of the Electroacoustic Music Studies Network Conference, Florence (Italy), June 20-23, 2018. www.ems-network.org

Owen Green, Pierre Alexandre Tremblay, Gerard Roma. Centre for Research into New Music (CeReNeM), University of Huddersfield, UK. {o.green, p.a.tremblay, g.roma}@hud.ac.uk

Abstract. Can a commitment to musical pluralism be embedded as a value in musical technologies? This question has come to structure part of our work during the early stages of a five-year project investigating techniques and tools for 'Fluid Corpus Manipulation' (FluCoMa). In this paper we frame our thinking about this by considering interdisciplinarity in Electroacoustic Music Studies, before proceeding to apply our thoughts on this to our specific project in terms of practice-led design. We end with questions as seeds for future discussion, rather than categorical findings.

Introduction. The authors are currently in the early stages of a five-year project concerned with the general topic of making music using collections of recorded sound (corpora, in a broad sense) in creative coding environments (Max, SuperCollider, PD). The project aims to animate musical and technical research around this topic by developing new tools and learning resources, and by seeding a community of interest (Fischer 2009), made up of diverse researchers and practitioners. We will develop extensions for creative coding environments that enable techno-fluent musicians to explore and develop new techniques for constructing and manipulating corpora of recordings, and that seek to prioritise divergent, open-ended engagement.

Combining Live Coding and VJing for Live Visual Performance

CJing: Combining Live Coding and VJing for Live Visual Performance, by Jack Voldemars Purvis. A thesis submitted to the Victoria University of Wellington in fulfilment of the requirements for the degree of Master of Science in Computer Graphics. Victoria University of Wellington, 2019.

Abstract. Live coding focuses on improvising content by coding in textual interfaces, but this reliance on low level text editing impairs usability by not allowing for high level manipulation of content. VJing focuses on remixing existing content with graphical user interfaces and hardware controllers, but this focus on high level manipulation does not allow for fine-grained control where content can be improvised from scratch or manipulated at a low level. This thesis proposes the code jockey practice (CJing), a new hybrid practice that combines aspects of live coding and VJing practice. In CJing, a performer known as a code jockey (CJ) interacts with code, graphical user interfaces and hardware controllers to create or manipulate real-time visuals. CJing harnesses the strengths of live coding and VJing to enable flexible performances where content can be controlled at both low and high levels. Live coding provides fine-grained control where content can be improvised from scratch or manipulated at a low level, while VJing provides high level manipulation where content can be organised, remixed and interacted with. To illustrate CJing, this thesis contributes Visor, a new environment for live visual performance that embodies the practice. Visor's design is based on key ideas of CJing and a study of live coders and VJs in practice.

Technologies and Workflow of Creative Coding Projects: Examples from the Google DevArt Competition

Youyang Hou, Google, 1600 Amphitheatre Parkway, CA 94043, USA

Author Keywords: Creative coding; DevArt; Art technology; programming

CCS Concepts: • Human-centered computing~Human computer interaction (HCI); Graphical user interface; User interface programming; User interface toolkits

Abstract. Recently, many artists and creative technologists have taken up computer programming with the goal of creating something expressive instead of something functional. In this paper, I analyzed 18 creative coding projects from the Google DevArt competition and summarized the critical technologies, types of content, and key workflows of these creative coding projects. The paper also discusses the potential research opportunities for creative coding and art technologies.

Introduction. Creative coding is defined as a type of computer programming in which the goal is to create something expressive instead of something functional (https://en.wikipedia.org/wiki/Creative_coding). Creative coding has been considered as a way of teaching programming languages [1, 2, 3]. Creative coding opens up opportunities in new areas of art and design, yet it can be hard to understand and execute. To better understand creative coders' needs for technologies and their workflow, I did a content analysis of 18 projects from the Google DevArt contest. Understanding the content, process, and creative coders' needs will help researchers create better [...]

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page.

Rethinking the Usage and Experience of Clustering Markers in Web Mapping

Loïc Fürhoff, University of Applied Sciences Western Switzerland (HES-SO), School of Management and Engineering Vaud (HEIG-VD), Media Engineering Institute (MEI), Yverdon-les-Bains, Switzerland. Corresponding author: Loïc Fürhoff.

ABSTRACT. Although the notion of 'too many markers' has been mentioned in several studies, in practice, displaying hundreds of Points of Interest (POI) on a two-dimensional web map with acceptable usability remains a real challenge. Web practitioners often make excessive use of clustering aggregation to overcome performance bottlenecks without successfully resolving issues of perceived performance. This paper tries to raise broad awareness by identifying sample issues which describe a general reality of clustering, and provides a pragmatic survey of potential technology optimisations. At the end, we discuss the usage of technologies and the lack of documented client-server workflows, along with the need to enlarge our vision of the various clutter reduction methods.

INTRODUCTION. Assuredly, Big Data is the key word for the 21st century. Few people realise that a substantial part of these massive quantities of data is categorised as geospatial (Lee & Kang, 2015). Nowadays, satellite-based radionavigation systems (GPS, GLONASS, Galileo, etc.) are omnipresent on all types of new digital devices. Trends like GPS Drawing, location-based games (e.g. Pokémon Go and Harry Potter: Wizards Unite), Recreational Drones, or the Snapchat 'GeoFilter' feature have appeared in the last five years, each time highlighting a new way to consume location. Moreover, scientists and companies have built outstanding web frameworks and tools like KeplerGL to exploit geospatial data.

TensorFlow.js: Machine Learning for the Web and Beyond

Daniel Smilkov*, Nikhil Thorat*, Yannick Assogba, Ann Yuan, Nick Kreeger, Ping Yu, Kangyi Zhang, Shanqing Cai, Eric Nielsen, David Soergel, Stan Bileschi, Michael Terry, Charles Nicholson, Sandeep N. Gupta, Sarah Sirajuddin, D. Sculley, Rajat Monga, Greg Corrado, Fernanda B. Viégas, Martin Wattenberg

ABSTRACT. TensorFlow.js is a library for building and executing machine learning algorithms in JavaScript. TensorFlow.js models run in a web browser and in the Node.js environment. The library is part of the TensorFlow ecosystem, providing a set of APIs that are compatible with those in Python, allowing models to be ported between the Python and JavaScript ecosystems. TensorFlow.js has empowered a new set of developers from the extensive JavaScript community to build and deploy machine learning models and enabled new classes of on-device computation. This paper describes the design, API, and implementation of TensorFlow.js, and highlights some of the impactful use cases.

1 INTRODUCTION. Machine learning (ML) has become an important tool in software systems, enhancing existing applications and enabling entirely new ones. However, the available software platforms for ML reflect the academic and industrial roots of the technology.

[...] numeric computation capabilities to JS. While several open source JS platforms for ML have appeared, to our knowledge TensorFlow.js is the first to enable integrated training and inference on the GPU from the browser, and offer full Node.js integration for server-side deployment. We have attempted to ensure that TensorFlow.js meets a high standard [...]