High Tree-Width Models

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

On Treewidth and Graph Minors

On Treewidth and Graph Minors Daniel John Harvey Submitted in total fulfilment of the requirements of the degree of Doctor of Philosophy February 2014 Department of Mathematics and Statistics The University of Melbourne Produced on archival quality paper ii Abstract Both treewidth and the Hadwiger number are key graph parameters in structural and al- gorithmic graph theory, especially in the theory of graph minors. For example, treewidth demarcates the two major cases of the Robertson and Seymour proof of Wagner's Con- jecture. Also, the Hadwiger number is the key measure of the structural complexity of a graph. In this thesis, we shall investigate these parameters on some interesting classes of graphs. The treewidth of a graph defines, in some sense, how \tree-like" the graph is. Treewidth is a key parameter in the algorithmic field of fixed-parameter tractability. In particular, on classes of bounded treewidth, certain NP-Hard problems can be solved in polynomial time. In structural graph theory, treewidth is of key interest due to its part in the stronger form of Robertson and Seymour's Graph Minor Structure Theorem. A key fact is that the treewidth of a graph is tied to the size of its largest grid minor. In fact, treewidth is tied to a large number of other graph structural parameters, which this thesis thoroughly investigates. In doing so, some of the tying functions between these results are improved. This thesis also determines exactly the treewidth of the line graph of a complete graph. This is a critical example in a recent paper of Marx, and improves on a recent result by Grohe and Marx. -

Lecture 10: April 20, 2005 Perfect Graphs

Re-revised notes 4-22-2005 10pm CMSC 27400-1/37200-1 Combinatorics and Probability Spring 2005 Lecture 10: April 20, 2005 Instructor: L´aszl´oBabai Scribe: Raghav Kulkarni TA SCHEDULE: TA sessions are held in Ryerson-255, Monday, Tuesday and Thursday 5:30{6:30pm. INSTRUCTOR'S EMAIL: [email protected] TA's EMAIL: [email protected], [email protected] IMPORTANT: Take-home test Friday, April 29, due Monday, May 2, before class. Perfect Graphs k 1=k Shannon capacity of a graph G is: Θ(G) := limk (α(G )) : !1 Exercise 10.1 Show that α(G) χ(G): (G is the complement of G:) ≤ Exercise 10.2 Show that χ(G H) χ(G)χ(H): · ≤ Exercise 10.3 Show that Θ(G) χ(G): ≤ So, α(G) Θ(G) χ(G): ≤ ≤ Definition: G is perfect if for all induced sugraphs H of G, α(H) = χ(H); i. e., the chromatic number is equal to the clique number. Theorem 10.4 (Lov´asz) G is perfect iff G is perfect. (This was open under the name \weak perfect graph conjecture.") Corollary 10.5 If G is perfect then Θ(G) = α(G) = χ(G): Exercise 10.6 (a) Kn is perfect. (b) All bipartite graphs are perfect. Exercise 10.7 Prove: If G is bipartite then G is perfect. Do not use Lov´asz'sTheorem (Theorem 10.4). 1 Lecture 10: April 20, 2005 2 The smallest imperfect (not perfect) graph is C5 : α(C5) = 2; χ(C5) = 3: For k 2, C2k+1 imperfect. -

![Arxiv:2106.16130V1 [Math.CO] 30 Jun 2021 in the Special Case of Cyclohedra, and by Cardinal, Langerman and P´Erez-Lantero [5] in the Special Case of Tree Associahedra](https://docslib.b-cdn.net/cover/3351/arxiv-2106-16130v1-math-co-30-jun-2021-in-the-special-case-of-cyclohedra-and-by-cardinal-langerman-and-p%C2%B4erez-lantero-5-in-the-special-case-of-tree-associahedra-123351.webp)

Arxiv:2106.16130V1 [Math.CO] 30 Jun 2021 in the Special Case of Cyclohedra, and by Cardinal, Langerman and P´Erez-Lantero [5] in the Special Case of Tree Associahedra

LAGOS 2021 Bounds on the Diameter of Graph Associahedra Jean Cardinal1;4 Universit´elibre de Bruxelles (ULB) Lionel Pournin2;4 Mario Valencia-Pabon3;4 LIPN, Universit´eSorbonne Paris Nord Abstract Graph associahedra are generalized permutohedra arising as special cases of nestohedra and hypergraphic polytopes. The graph associahedron of a graph G encodes the combinatorics of search trees on G, defined recursively by a root r together with search trees on each of the connected components of G − r. In particular, the skeleton of the graph associahedron is the rotation graph of those search trees. We investigate the diameter of graph associahedra as a function of some graph parameters. It is known that the diameter of the associahedra of paths of length n, the classical associahedra, is 2n − 6 for a large enough n. We give a tight bound of Θ(m) on the diameter of trivially perfect graph associahedra on m edges. We consider the maximum diameter of associahedra of graphs on n vertices and of given tree-depth, treewidth, or pathwidth, and give lower and upper bounds as a function of these parameters. Finally, we prove that the maximum diameter of associahedra of graphs of pathwidth two is Θ(n log n). Keywords: generalized permutohedra, graph associahedra, tree-depth, treewidth, pathwidth 1 Introduction The vertices and edges of a polyhedron form a graph whose diameter (often referred to as the diameter of the polyhedron for short) is related to a number of computational problems. For instance, the question of how large the diameter of a polyhedron arises naturally from the study of linear programming and the simplex algorithm (see, for instance [27] and references therein). -

The Strong Perfect Graph Theorem

Annals of Mathematics, 164 (2006), 51–229 The strong perfect graph theorem ∗ ∗ By Maria Chudnovsky, Neil Robertson, Paul Seymour, * ∗∗∗ and Robin Thomas Abstract A graph G is perfect if for every induced subgraph H, the chromatic number of H equals the size of the largest complete subgraph of H, and G is Berge if no induced subgraph of G is an odd cycle of length at least five or the complement of one. The “strong perfect graph conjecture” (Berge, 1961) asserts that a graph is perfect if and only if it is Berge. A stronger conjecture was made recently by Conforti, Cornu´ejols and Vuˇskovi´c — that every Berge graph either falls into one of a few basic classes, or admits one of a few kinds of separation (designed so that a minimum counterexample to Berge’s conjecture cannot have either of these properties). In this paper we prove both of these conjectures. 1. Introduction We begin with definitions of some of our terms which may be nonstandard. All graphs in this paper are finite and simple. The complement G of a graph G has the same vertex set as G, and distinct vertices u, v are adjacent in G just when they are not adjacent in G.Ahole of G is an induced subgraph of G which is a cycle of length at least 4. An antihole of G is an induced subgraph of G whose complement is a hole in G. A graph G is Berge if every hole and antihole of G has even length. A clique in G is a subset X of V (G) such that every two members of X are adjacent. -

Results on Independent Sets in Categorical Products of Graphs, The

Results on independent sets in categorical products of graphs, the ultimate categorical independence ratio and the ultimate categorical independent domination ratio Wing-Kai Hon1, Ton Kloks, Hsiang-Hsuan Liu1, Sheung-Hung Poon1, and Yue-Li Wang2 1 Department of Computer Science National Tsing Hua University, Taiwan {wkhon,hhliu,spoon}@cs.nthu.edu.tw 2 Department of Information Management National Taiwan University of Science and Technology [email protected] Abstract. We show that there are polynomial-time algorithms to compute maximum independent sets in the categorical products of two cographs and two splitgraphs. The ultimate categorical independence ratio of a k k graph G is defined as limk→ α(G )/n . The ultimate categorical inde- pendence ratio is polynomial for cographs, permutation graphs, interval graphs, graphs of bounded treewidth∞ and splitgraphs. When G is a planar graph of maximal degree three then α(G K4) is NP-complete. We present a PTAS for the ultimate categorical independence× ratio of planar graphs. We present an O∗(nn/3) exact, exponential algorithm for general graphs. We prove that the ultimate categorical independent domination ratio for complete multipartite graphs is zero, except when the graph is complete bipartite with color classes of equal size (in which case it is1/2). 1 Introduction Let G and H be two graphs. The categorical product also travels under the guise of tensor product, or direct product, or Kronecker product, and even more names arXiv:1306.1656v1 [cs.DM] 7 Jun 2013 have been given to it. It is defined as follows. It is a graph, denoted as G H. -

Algorithmic Graph Theory Part III Perfect Graphs and Their Subclasses

Algorithmic Graph Theory Part III Perfect Graphs and Their Subclasses Martin Milanicˇ [email protected] University of Primorska, Koper, Slovenia Dipartimento di Informatica Universita` degli Studi di Verona, March 2013 1/55 What we’ll do 1 THE BASICS. 2 PERFECT GRAPHS. 3 COGRAPHS. 4 CHORDAL GRAPHS. 5 SPLIT GRAPHS. 6 THRESHOLD GRAPHS. 7 INTERVAL GRAPHS. 2/55 THE BASICS. 2/55 Induced Subgraphs Recall: Definition Given two graphs G = (V , E) and G′ = (V ′, E ′), we say that G is an induced subgraph of G′ if V ⊆ V ′ and E = {uv ∈ E ′ : u, v ∈ V }. Equivalently: G can be obtained from G′ by deleting vertices. Notation: G < G′ 3/55 Hereditary Graph Properties Hereditary graph property (hereditary graph class) = a class of graphs closed under deletion of vertices = a class of graphs closed under taking induced subgraphs Formally: a set of graphs X such that G ∈ X and H < G ⇒ H ∈ X . 4/55 Hereditary Graph Properties Hereditary graph property (Hereditary graph class) = a class of graphs closed under deletion of vertices = a class of graphs closed under taking induced subgraphs Examples: forests complete graphs line graphs bipartite graphs planar graphs graphs of degree at most ∆ triangle-free graphs perfect graphs 5/55 Hereditary Graph Properties Why hereditary graph classes? Vertex deletions are very useful for developing algorithms for various graph optimization problems. Every hereditary graph property can be described in terms of forbidden induced subgraphs. 6/55 Hereditary Graph Properties H-free graph = a graph that does not contain H as an induced subgraph Free(H) = the class of H-free graphs Free(M) := H∈M Free(H) M-free graphT = a graph in Free(M) Proposition X hereditary ⇐⇒ X = Free(M) for some M M = {all (minimal) graphs not in X} The set M is the set of forbidden induced subgraphs for X. -

Classes of Perfect Graphs

This paper appeared in: Discrete Mathematics 306 (2006), 2529-2571 Classes of Perfect Graphs Stefan Hougardy Humboldt-Universit¨atzu Berlin Institut f¨urInformatik 10099 Berlin, Germany [email protected] February 28, 2003 revised October 2003, February 2005, and July 2007 Abstract. The Strong Perfect Graph Conjecture, suggested by Claude Berge in 1960, had a major impact on the development of graph theory over the last forty years. It has led to the definitions and study of many new classes of graphs for which the Strong Perfect Graph Conjecture has been verified. Powerful concepts and methods have been developed to prove the Strong Perfect Graph Conjecture for these special cases. In this paper we survey 120 of these classes, list their fundamental algorithmic properties and present all known relations between them. 1 Introduction A graph is called perfect if the chromatic number and the clique number have the same value for each of its induced subgraphs. The notion of perfect graphs was introduced by Berge [6] in 1960. He also conjectured that a graph is perfect if and only if it contains, as an induced subgraph, neither an odd cycle of length at least five nor its complement. This conjecture became known as the Strong Perfect Graph Conjecture and attempts to prove it contributed much to the developement of graph theory in the past forty years. The methods developed and the results proved have their uses also outside the area of perfect graphs. The theory of antiblocking polyhedra developed by Fulkerson [37], and the theory of modular decomposition (which has its origins in a paper of Gallai [39]) are two such examples. -

Strong Perfect Graph Theorem a Graph G Is Perfect If and Only If Neither G Nor Its Complement G¯ Contains an Induced Odd Circuit of Length ≥ 5

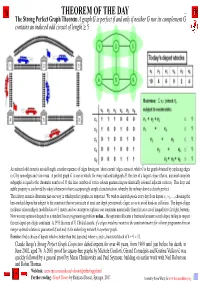

THEOREM OF THE DAY The Strong Perfect Graph Theorem A graph G is perfect if and only if neither G nor its complement G¯ contains an induced odd circuit of length ≥ 5. An induced odd circuit is an odd-length, circular sequence of edges having no ‘short-circuit’ edges across it, while G¯ is the graph obtained by replacing edges in G by non-edges and vice-versa. A perfect graph G is one in which, for every induced subgraph H, the size of a largest clique (that is, maximal complete subgraph) is equal to the chromatic number of H (the least number of vertex colours guaranteeing no identically coloured adjacent vertices). This deep and subtle property is confirmed by today’s theorem to have a surprisingly simple characterisation, whereby the railway above is clearly perfect. The railway scenario illustrates just one way in which perfect graphs are important. We wish to dispatch goods every day from depots v1, v2,..., choosing the best-stocked depots but subject to the constraint that we nominate at most one depot per network clique, so as to avoid head-on collisions. The depot-clique incidence relationship is modelled as a 0-1 matrix and we attempt to replicate our constraint numerically from this as a set of inequalities (far right, bottom). Now we may optimise dispatch as a standard linear programming problem unless... the optimum allocates a fractional amount to each depot, failing to respect the one-depot-per-clique constraint. A 1975 theorem of V. Chv´atal asserts: if a clique incidence matrix is the constraint matrix for a linear programme then an integer optimal solution is guaranteed if and only if the underlying network is a perfect graph. -

Perfect Graphs the American Institute of Mathematics

Perfect Graphs The American Institute of Mathematics This is a hard{copy version of a web page available through http://www.aimath.org Input on this material is welcomed and can be sent to [email protected] Version: Tue Aug 24 11:37:57 2004 0 The chromatic number of a graph G, denoted by Â(G), is the minimum number of colors needed to color the vertices of G in such a way that no two adjacent vertices receive the same color. Clearly Â(G) is bounded from below by the size of a largest clique in G, denoted by !(G). In 1960, Berge introduced the notion of a perfect graph. A graph G is perfect, if for every induced subgraph H of G, Â(H) = !(H). A hole in a graph is a chordless cycle of length greater than 3, and it is even or odd depending on the number of vertices it contains. An antihole is the complement of a hole. It is easily seen that odd holes and odd antiholes are not perfect. Berge conjectured that these are the only minimal imperfect graphs, i.e., a graph is perfect if and only if it does not contain an odd hole nor an odd antihole. (When we say that a graph G contains a graph H, we mean as an induced subgraph). This was known as the Strong Perfect Graph Conjecture (SPGC), whose proof has been announced recently. 0This document was organized by Maria Chudnovsky as part of the lead-in and followup to the ARCC focused workshop \The Perfect Graph Conjecture," October 29 to Novenber 2, 2002. -

An Exact Cutting Plane Algorithm to Solve the Selective Graph Coloring

An Exact Cutting Plane Algorithm to Solve the Selective Graph Coloring Problem in Perfect Graphs ⋆ Oylum S¸ekera,∗, Tınaz Ekimb, Z. Caner Ta¸skınb aDepartment of Mechanical and Industrial Engineering, University of Toronto, Toronto, ON, M5S3G8, Canada bDepartment of Industrial Engineering, Bo˘gazi¸ci University, 34342, Bebek, Istanbul, Turkey Abstract We consider the selective graph coloring problem, which is a generalization of the classical graph coloring problem. Given a graph together with a partition of its vertex set into clusters, we want to choose exactly one vertex per cluster so that the number of colors needed to color the selected set of vertices is minimized. This problem is known to be NP-hard. In this study, we focus on an exact cutting plane algorithm for selective graph coloring in perfect graphs. Since there exists no suite of perfect graph instances to the best of our knowledge, we also propose an algorithm to randomly (but not uniformly) generate perfect graphs, and provide a large collection of instances available online. We conduct computational experiments to test our method on graphs with varying size and densities, and compare our results with a state-of-the-art algorithm from the literature and with solving an integer programming formulation of the problem by CPLEX. Our experiments demonstrate that our solution strategy significantly improves the solvability of the problem. Keywords: Graph theory; selective graph coloring; partition coloring; cutting plane algorithm; perfect graph generation arXiv:1811.12094v3 [cs.DS] 22 Dec 2020 ⋆This study is supported by Bo˘gazi¸ci University Research Fund (grant 11765); and T. -

On Characterizing Game-Perfect Graphs by Forbidden Induced Subgraphs

Volume 7, Number 1, Pages 21{34 ISSN 1715-0868 ON CHARACTERIZING GAME-PERFECT GRAPHS BY FORBIDDEN INDUCED SUBGRAPHS STEPHAN DOMINIQUE ANDRES Abstract. A graph G is called g-perfect if, for any induced subgraph H of G, the game chromatic number of H equals the clique number of H. A graph G is called g-col-perfect if, for any induced subgraph H of G, the game coloring number of H equals the clique number of H. In this paper we characterize the classes of g-perfect resp. g-col-perfect graphs by a set of forbidden induced subgraphs. Moreover, we study similar notions for variants of the game chromatic number, namely B-perfect and [A; B]-perfect graphs, and for several variants of the game coloring number, and characterize the classes of these graphs. 1. Introduction A well-known maker-breaker game is one of Bodlaender's graph coloring games [9]. We are given an initially uncolored graph G and a color set C. Two players, Alice and Bob, move alternately with Alice beginning. A move consists in coloring an uncolored vertex with a color from C in such a way that adjacent vertices receive distinct colors. The game ends if no move is possible any more. The maker Alice wins if the vertices of the graph are completely colored, otherwise, i.e. if there is an uncolored vertex surrounded by colored vertices of each color, the breaker Bob wins. For a graph G, the game chromatic number χg(G) of G is the smallest cardinality of a color set C such that Alice has a winning strategy in the game described above. -

Balancedness of Some Subclasses of Circular-Arc Graphs 1

Balancedness of some subclasses of circular-arc graphs 1 Flavia Bonomo a,2, Guillermo Dur´an b,c,2, Mart´ın D. Safe a,d,2 and Annegret K. Wagler e,3 a CONICET and Departamento de Computaci´on, Facultad de Ciencias Exactas y Naturales, Universidad de Buenos Aires, Buenos Aires, Argentina b CONICET and Departamento de Matem´atica, Facultad de Ciencias Exactas y Naturales, Universidad de Buenos Aires, Buenos Aires, Argentina c Departamento de Ingenier´ıa Industrial, Facultad de Ciencias F´ısicas y Matem´aticas, Universidad de Chile, Santiago, Chile d Instituto de Ciencias, Universidad Nacional de General Sarmiento, Los Polvorines, Argentina e Institut f¨ur Mathematische Optimierung, Fakult¨at f¨ur Mathematik, Otto-von-Guericke-Universit¨at Magdeburg, Germany Abstract A graph is balanced if its clique-vertex incidence matrix is balanced, i.e., it does not contain a square submatrix of odd order with exactly two ones per row and per column. Interval graphs, obtained as intersection graphs of intervals of a line, are well-known examples of balanced graphs. A circular-arc graph is the intersection graph of a family of arcs on a circle. Circular-arc graphs generalize interval graphs, but are not balanced in general. In this work we characterize balanced graphs by minimal forbidden induced subgraphs restricted to graphs that belong to some classes of circular-arc graphs. Keywords: balanced graphs, circular-arc graphs, forbidden subgraphs, perfect graphs 1 Introduction A {0, 1}-matrix A is balanced if it does not contain a square submatrix of odd order with exactly two ones per row and per column.