Any Colour You Like: the History (And Future?) of E.U

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Any Colour You Like

Any Colour You Like: The History (and Future?) of Communications Security Policy. NYU Security Seminar, New York, 22.04.14. https://www.axelarnbak.nl. 2013/14 Fellow, Berkman Center & CITP; IViR, University of Amsterdam.

‘Obscured by Clouds’: Cloud Surveillance From Abroad. ‘Another Loophole in the Wall’: Traffic Shaping to Circumvent 4th Amendment Protections for U.S. Users, with Prof. Sharon Goldberg.

Securing Communications through Law: Increasingly Popular, at least in E.U. ... What is 'security' as regulatory concept? How should regulators conceive it?

OUTLINE: Why Interested in Concepts?! 40 Years of (E.U.) Communications Security Policy. Two Claims: ‘Technical’ & ‘Political’ Security. New, Third Claim: 'Human Right'. Communications Security Amidst 3 Claims.

Concepts Scope Regulation – Long Term Power Implications. 15 years: IP-Address 'Personal Data' Definition? New E.U. Proposal: 'Pseudonymized' Data. E.U. Data Protection response to NSA? Lion's Share ≠ 'Personal Data'.

E.U. ‘Security’ Concepts: 5 Cycles Analyzed. Definition & Scope: 1. Data Protection 2. Telecommunications Law 3. Encryption: Signatures & Certificates 4. Cybercrime 5. ‘Network & Information Security’.

You May Wonder, No National Security? Sole Competence E.U. Member States. History on One Side of Coin: E.U. Council / Member States Re-frame it as National Security. Future on Other Side of Coin? Might enable focus on actually securing communications. Found some gems in mid 90s E.U.

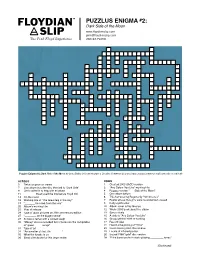

PUZZLUS ENIGMA #2: Dark Side of the Moon [email protected] the Pink Floyd Experience 260.67.FLOYD

PUZZLUS ENIGMA #2: Dark Side of the Moon www.floydianslip.com [email protected] The Pink Floyd Experience 260.67.FLOYD

Puzzlus Enigma #2: Dark Side of the Moon by Craig Bailey is licensed under a Creative Commons License (www.creativecommons.org/licenses/by-nc-nd/3.0/)

[Crossword grid, squares numbered 1 to 53]

ACROSS
3 Twice as good as stereo
7 Live album includes disc devoted to "Dark Side"
8 Chris called in to help with mixdown
11 ________ Head used the title before Floyd did
14 On the cover
18 Working title of "The Great Gig in the Sky"
19 "_______ he cried, from the rear"
20 Album's working title
21 Year of release
24 Type of glass pictured on 30th anniversary edition
27 "________ on the biggest wave"
29 Actress's father with a wicked laugh
30 "Money" was re-recorded for inclusion on this compilation of "great _____ songs"
31 Type of jet
34 "As a matter of fact, it's ___ ____"
35 What the lunatic is on

DOWN
1 Created 2003 SACD version
2 "Any Colour You Like" working title
4 Reggae version "___ Side of the Moon"
5 One album before
6 This hat-wearing Roger only "hit him once"
7 Beatle whose thoughts were recorded but unused
9 Early synthesizer
10 Album cover art by George
12 Wrote 2005 book about the album
13 Syncs nicely
15 A-side to "Any Colour You Like"
16 Group behind 2009 re-working
17 Record label
22 Heard at beginning of "Time"
23 Color missing from the rainbow
25 Locale of infrared poster
26 Issued 1988 "gold" disc

Abstract Music Is an Essential Part of Our Lives. the World Wouldn't Be The

Abstract. Music is an essential part of our lives, and the world would not be the same without it. People are so enthralled by music that, whether they are bored or working, it is often what they want playing in the background of their lives. The wide range of genres lets people choose the type of music they want to listen to, from soothing, light forms to heavy metal instrumentals and vocals. Many singers and artists work in the music industry, and many of them have contributed a great deal to it. The artists' best sellers are appreciated around the world. Music knows no boundaries: when a singer does a fantastic job, they become famous all across the globe. Sadly, musicians pass away as time goes on, but their legacy and their names remain very much alive. The world still listens to their music and appreciates their work. One such singer is Michael Jackson. His album Thriller broke records and was a best seller. No one can beat the record of the King of Pop. Similarly, Pink Floyd set records with their album The Dark Side of the Moon. It was an enormous commercial and critical success. Many teens are still obsessed with Pink Floyd's songs. AC/DC released an album called Back in Black, which was also one of the major hits of its era. All these records live on.

APPENDIX Radio Shows

APPENDIX Radio Shows

AAA Radio Album Network BBC Cahn Media Capital Radio Ventures Capitol EMI Global Satellite Network In The Studio (Album Network / SFX / Winstar Radio Services / Excelsior Radio Networks / Barbarosa) MJI Radio Premiere Radio Networks Radio Today Entertainment Rockline SFX Radio Network United Stations Up Close (MCA / Media America Radio / Jones Radio Network) Westwood One Various Artists Radio Shows with Pink Floyd and band members

PINK FLOYD CD DISCOGRAPHY Copyright © 2003-2010 Hans Gerlitz. All rights reserved. www.pinkfloyd-forum.de/discography [email protected]

This discography is a reference guide, not a book on the artwork of Pink Floyd. The photos of the artworks are used solely for the purposes of distinguishing the differences between the releases. The product names used in this document are for identification purposes only. All trademarks and registered trademarks are the property of their respective owners. Permission is granted to download and print this document for personal use. Any other use, including but not limited to commercial or profitable purposes or uploading to any publicly accessible web site, is expressly forbidden without prior written consent of the author.

A radio show is usually a programme of one or two hours (in some cases more) that is produced by companies such as Album Network or Westwood One, pressed onto CD, and distributed to their syndicated radio stations for broadcast. This form of broadcasting is used extensively in the USA. In Europe the structure of the radio stations is different: the stations are more locally oriented, and radio shows on CD are very rare.

Pink Floyd - Dark Side of the Moon Speak to Me Breathe on the Run Time the Great Gig in the Sky Money Us and Them Any Colour You Like Brain Damage Eclipse

Pink Floyd - Dark Side of the Moon: Speak to Me Breathe On the Run Time The Great Gig in the Sky Money Us and Them Any Colour You Like Brain Damage Eclipse

Pink Floyd – The Wall: In the Flesh The Thin Ice Another Brick in the Wall (Part 1) The Happiest Days of our Lives Another Brick in the Wall (Part 2) Mother Goodbye Blue Sky Empty Spaces Young Lust Another Brick in the Wall (Part 3) Hey You Comfortably Numb Stop The Trial Run Like Hell

Laser Queen: Bicycle Race Don't Stop Me Now Another One Bites The Dust I Want To Break Free Under Pressure Killer Queen Bohemian Rhapsody Radio Gaga Princes Of The Universe The Show Must Go On

Laser Rush: 2112 I. Overture II. The Temples of Syrinx III. Discovery IV. Presentation V. Oracle: The Dream VI. Soliloquy VII. Grand Finale A Passage to Bangkok The Twilight Zone Lessons Tears Something for Nothing

Laser Radiohead: Airbag The Bends You – DG High and Dry Packt like Sardine in a Crushd Tin Box Pyramid song Karma Police The National Anthem Paranoid Android Idioteque

Laser Genesis: Turn It On Again Invisible Touch Sledgehammer Tonight, Tonight, Tonight Land Of Confusion Mama Sussudio Follow You, Follow Me In The Air Tonight Abacab

Laser Zeppelin: Song Remains the Same Over the Hills, and Far Away Good Times, Bad Times Immigrant Song No Quarter Black Dog Livin’, Lovin’ Maid Kashmir Stairway to Heaven Whole Lotta Love Rock - n - Roll

Laser Green Day: Welcome to Paradise She Longview Good Riddance Brainstew Jaded Minority Holiday BLVD of Broken Dreams American Idiot

Laser U2: Where the Streets Have No Name I Will Follow Beautiful Day Sunday, Bloody Sunday October The Fly Mysterious Ways Pride (In the Name of Love) Zoo Station With or Without You Desire New Year’s Day

Laser Metallica: For Whom the Bell Tolls Ain’t My Bitch One Fuel Nothing Else Matters Master of Puppets Unforgiven II Sad But True Enter Sandman

Laser Beatles: Magical Mystery Tour I Wanna Hold Your Hand Twist and Shout A Hard Day’s Night Nowhere Man Help! Yesterday Octopus’ Garden Revolution Sgt.

Pink Floyd Echoes Sheet Music Free

1 / 2 Pink Floyd Echoes Sheet Music Free Pink Floyd were a British Psychedelic and Progressive Rock band which formed in 1965. ... album would be "Any Colour You Like", a concept-free instrumental jam piece ... In the middle of "Echoes" the music switches to spooky noises with high ... the line that (according to the lyric sheet) goes "And if I'm in I'll tell you what's .... The last sign of life from Brain Damage was an almost content-free flyer send out to US- ... Exclusive distributors for most Pink Floyd sheet music books:.. PINK FLOYD GUITAR Tab / Echoes / Pink Floyd Guitar Tablature Songbook ... Sold $29.99 Buy It Now, FREE Shipping, 30-Day Returns, eBay Money Back ... Pink Floyd Sheet Music Easy Guitar with Riffs and Solos with Tab 000102911.. Pink Floyd Echoes From The Dark Side Of The Moon 50 Songs Puzzle ... $24.49 FREE shipping ... Pink Floyd Wish You Were Here, Interviews, Songs and Sheet Music, Vintage Pink Floyd, Collectible, Interviews, Songs, Sheet Music.. "For 20 years we provide a free and legal service for free sheet music. ... Nemo Pink Floyd -Comfortably Numb Pink Floyd- Echoes Radiohead -Karma Police.. Sep 29, 2018 — Download and print in PDF or MIDI free sheet music for Echoes by Pink Floyd arranged by Jordan Deneka for Piano (Solo). Mar 11, 2021 - Download Pink Floyd Echoes sheet music notes and printable PDF score arranged for Piano, Vocal & Guitar ... Free preview, SKU 429171. Jan 20, 2013 — Welcome to the page of pink floyd echoes part I , II sheet music score on HamieNET.com Open Educational Music Library. -

Speak to Me Breathe

Speak to me breathe This is a recording of Pink Floyd's Dark Side of the Moon from my personal record collection. This is a rip of. "Speak to Me" is the first track on British progressive rock band Pink Floyd's album, The It leads into the first performance piece on the album, "Breathe".Length: Lyrics to 'Speak To Me/Breathe' by Pink Floyd: Run, rabbit, run Dig that hole, forget the sun And when at last the work is done Don't sit down it's time to dig. Speak to Me Lyrics: I've been mad for fucking years / Absolutely years, been over Been working me buns off for bands / I've always been mad, I know I've been mad Speak to Me/Breathe (In the Air) by Easy Star All-Stars (Ft. Sluggy Ranks). Speak to Me / Breathe Lyrics: Instrumental / I've been mad for fucking years, absolutely years, been over the edge for yonks, been working me buns off for bands. Breathe, breathe in the air / Don't be afraid to care / Leave, don't leave me / Walk around and choose your own ground / Long you live and high you fly. Speak To Me / Breathe(In The Air)(Darkside) I've been mad for fcking years, absolutely years, I've been crazy for yonks I always have been mad, I know I hav. Speak to Me & Breathe by Pink Floyd - discover this song's samples, covers and remixes on WhoSampled. "Speak to Me & Breathe" by Pink Floyd sampled Pink Floyd's "The Great Gig in the Sky". -

Pink Floyd, What Do You Know: Questions About Pink Floyd, Partly From Listening Experience, Partly From Various More or Less Documented Facts

Pink Floyd, what do you know. Questions about Pink Floyd, drawn partly from listening experience and partly from various more or less well-documented facts. Difficulty level: Hard
1. What was the first name of Barrett, one of the founders of Pink Floyd? A - Robert B - Roger C - Syd D - Sidney
2. Which of the following songs did Nick Mason sing as lead vocalist? A - See Saw B - Scream Thy Last Scream C - Vegetable Man D - Ibiza Bar
3. How many Rick Wright songs appeared on the first 5 singles released by the band in 1967-1968, which were also issued on the album "The Early Singles"? A - 1 B - 2 C - 3 D - 4
4. On which instrument is the solo/lead part played in the intro of the song "Embryo", in the studio version that was never officially released? A - Mellotron B - Minimoog C - Electric guitar D - Acoustic guitar
5. Who sang the song "Burning Bridges" from the album "Obscured By Clouds"? A - Gilmour B - Waters C - Gilmour and Wright D - Gilmour and Waters
6. Did the band perform the song "Childhood's End" live in 1973? A - Yes B - No
7. What was the name of the working version of "Atom Heart Mother", played without orchestra and choir? A - Nothing pts 1-24 B - The Amazing Pudding C - Reaction In G D - Raving And Drooling
8. On which of the band's albums was an entire song sung by a vocalist from outside Pink Floyd? A - The Division Bell B - More C - The Dark Side Of The Moon D - Wish You Were Here
9. How many tracks did the live disc and the studio disc of the album "Ummagumma" contain, respectively? A - 4 and 4 B - 4 and 5 C - 4 and 7 D - 4 and 12
10.

Track List: All Songs Written by Syd Barrett, Except Where Noted. ”UK Release” Side One 1

Track List: All songs written by Syd Barrett, except where noted. ”UK release” Side one 1. "Astronomy Domine" – 4:12 2. "Lucifer Sam" – 3:07 3. "Matilda Mother" – 3:08 4. "Flaming" – 2:46 5. "Pow R. Toc H." (Syd Barrett, Roger Waters, Rick Wright, Nick Mason) – 4:26 6. "Take Up Thy Stethoscope and Walk" (Roger Waters) – 3:05 Side two 1. "Interstellar Overdrive" (Syd Barrett, Roger Waters, Rick Wright, Nick Mason) – 9:41 2. "The Gnome" – 2:13 The Piper at the Gates of Dawn – August 5, 1967 3. "Chapter 24" – 3:42 Syd Barrett – guitar, lead vocals, back cover design 4. "The Scarecrow" – 2:11 5. "Bike" – 3:21 Roger Waters – bass guitar, vocals Rick Wright – Farfisa Compact Duo, Hammond organ, Certifications: piano, vocals TBR Nick Mason – drums, percussion "Astronomy Domine" Lime and limpid green, a second scene A fight between the blue you once knew. Floating down, the sound resounds Around the icy waters underground. Jupiter and Saturn, Oberon, Miranda and Titania. Neptune, Titan, Stars can frighten. Lime and limpid green, a second scene A fight between the blue you once knew. Floating down, the sound resounds Around the icy waters underground. Jupiter and Saturn, Oberon, Miranda and Titania. Neptune, Titan, Stars can frighten. Blinding signs flap, Flicker, flicker, flicker blam. Pow, pow. Stairway scare, Dan Dare, who's there? Lime and limpid green, the sounds around The icy waters under Lime and limpid green, the sounds around The icy waters underground. "Lucifer Sam" Lucifer Sam, siam cat. Always sitting by your side Always by your side. -

Long-Form Analytical Techniques and the Music of Pink Floyd Christopher Everett Jones

Graduate Theses, Dissertations, and Problem Reports 2017 Tear Down the Wall: Long-Form Analytical Techniques and the Music of Pink Floyd Christopher Everett Jones Follow this and additional works at: https://researchrepository.wvu.edu/etd Recommended Citation Jones, Christopher Everett, "Tear Down the Wall: Long-Form Analytical Techniques and the Music of Pink Floyd" (2017). Graduate Theses, Dissertations, and Problem Reports. 7098. https://researchrepository.wvu.edu/etd/7098 This Dissertation is brought to you for free and open access by The Research Repository @ WVU. It has been accepted for inclusion in Graduate Theses, Dissertations, and Problem Reports by an authorized administrator of The Research Repository @ WVU. For more information, please contact [email protected]. Tear Down the Wall: Long-Form Analytical Techniques and the Music of Pink Floyd Christopher Everett Jones Thesis submitted to the College of Creative Arts at West Virginia University in partial fulfillment of the requirements for the degree of Doctor of Musical Arts David Taddie, Ph.D., Chair Evan MacCarthy, Ph.D. Matthew Heap, Ph.D. Andrea Houde, M.M. Beth Royall, M.L.I.S., M.M. School of Music Morgantown, West Virginia 2017 Keywords: Music, Pink Floyd, Analysis, Rock music, Progressive Rock, Progressive Rock Analysis Copyright 2017 Abstract Tear Down the Wall: Long-Form Analytical Techniques and the Music of Pink Floyd Christopher Everett Jones This document examines the harmonic and melodic content of four albums by English Progressive Rock band Pink Floyd: Meddle (1971), The Dark Side of the Moon (1973), Wish You Were Here (1975), and The Wall (1979). Each of these albums presents a coherent discourse that is typical of the art music tradition.

Pink Floyd Mp3 Mp3, Flac, Wma

Pink Floyd Mp3 mp3, flac, wma Genre: Rock Album: Mp3 Country: Russia Style: Psychedelic Rock, Experimental, Prog Rock, Classic Rock MP3 version RAR size: 1704 mb FLAC version RAR size: 1269 mb WMA version RAR size: 1171 mb Rating: 4.2 Votes: 950 Other Formats: APE AAC MP4 TTA DMF VOX MIDI

Tracklist

The Division Bell: 1 Cluster One 2 What Do You Want From Me 3 Poles Apart 4 Marooned 5 A Great Day For Freedom 6 Wearing The Inside Out 7 Take It Back 8 Coming Back To Life 9 Keep Talking 10 Lost For Words 11 High Hopes

A Momentary Lapse Of Reason: 12 Signs Of Life 13 Learning To Fly 14 The Dogs Of War 15 One Slip 16 On The Turning Away 17 Yet Another Movie-Round And Round 18 A New Machine P.I 19 Terminal Frost 20 A New Machine P.II 21 Sorrow

The Final Cut: 22 The Post War Dream 23 Your Possible Pasts 24 One Of The Few 25 The Hero's Return 26 The Gunner's Dream 27 Paranoid Eyes 28 Get Your Filthy Hands Off My Desert 29 The Fletcher Memorial Home 30 Southampton Dock 31 The Final Cut 32 Not Now John 33 Two Suns In The Sunset

The Wall (CD1): 34 In The Flesh 35 The Thin Ice 36 Another Brick In The Wall P.I 37 The Happiest Days Of Our Lives 38 Another Brick In The Wall P.II 39 Mother 40 Goodbye Blue Sky 41 Empty Spaces 42 Young Lust 43 One Of My Turns 44 Don't Leave Me Now 45 Another Brick In The Wall P.III 46 Goodbye Cruel World

The Wall (CD2): 47 Hey You 48 Is There Anybody Out There 49 Nobody Home 50 Vera 51 Bring The Boys Back Home 52 Comfortably Numb 53 The Show Must Go On 54 In The Flesh 55 Run Like Hell 56

The Guardian, Week of July 6, 2020

Wright State University CORE Scholar The Guardian Student Newspaper Student Activities 7-6-2020 The Guardian, Week of July 6, 2020 Wright State Student Body Follow this and additional works at: https://corescholar.libraries.wright.edu/guardian Part of the Mass Communication Commons Repository Citation Wright State Student Body (2020). The Guardian, Week of July 6, 2020. : Wright State University. This Newspaper is brought to you for free and open access by the Student Activities at CORE Scholar. It has been accepted for inclusion in The Guardian Student Newspaper by an authorized administrator of CORE Scholar. For more information, please contact [email protected]. DND: The Donut Trail Marissa Couch July 6, 2020 A sweet thing about Dayton is the number of donut shops the area has to choose from. One doesn’t have to travel far to catch a sugar rush. Duck Donuts Duck Donuts is located at 1200 Brown St. Dayton, OH 45409 on the University of Dayton’s campus. The popular franchise originated in North Carolina and has made its way all across the country since the original opening in 2007. While conveniently located, there are plans for a location opening even closer, in Beavercreek. Stan the Donut Man Stan the Donut Man opened in 1975 in Dayton and remains a staple of the area. The shop is located at 1441 Wilmington Ave, Dayton, OH 45420. All donuts are made in-house at the Dayton location. There is an additional storefront in Xenia at 607 N. Detroit St. Xenia, OH 45385. Donut Palace Those looking for a sugary fix can find Donut Palace in both Huber Heights and Trotwood.