ECE 565 Syllabus S11

Recommended publications

Moving Average Filters

CHAPTER 15: Moving Average Filters (The Scientist and Engineer's Guide to Digital Signal Processing). The moving average is the most common filter in DSP, mainly because it is the easiest digital filter to understand and use. In spite of its simplicity, the moving average filter is optimal for a common task: reducing random noise while retaining a sharp step response. This makes it the premier filter for time domain encoded signals. However, the moving average is the worst filter for frequency domain encoded signals, with little ability to separate one band of frequencies from another. Relatives of the moving average filter include the Gaussian, Blackman, and multiple-pass moving average. These have slightly better performance in the frequency domain, at the expense of increased computation time.

Implementation by Convolution. As the name implies, the moving average filter operates by averaging a number of points from the input signal to produce each point in the output signal. In equation form, this is written (Equation 15-1, equation of the moving average filter):

$$y[i] = \frac{1}{M}\sum_{j=0}^{M-1} x[i+j]$$

where x[ ] is the input signal, y[ ] is the output signal, and M is the number of points used in the moving average. This equation only uses points on one side of the output sample being calculated. For example, in a 5 point moving average filter, point 80 in the output signal is given by:

$$y[80] = \frac{x[80] + x[81] + x[82] + x[83] + x[84]}{5}$$

As an alternative, the group of points from the input signal can be chosen symmetrically around the output point:

$$y[80] = \frac{x[78] + x[79] + x[80] + x[81] + x[82]}{5}$$

This corresponds to changing the summation in Eq. …
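A minimal sketch of both forms of the moving average, assuming NumPy and a 1-D input; the function name `moving_average` and the NaN handling at the edges are illustrative choices, not the book's code. The one-sided form is the same as convolving with a kernel of M equal weights 1/M, which is why the chapter calls this "implementation by convolution".

```python
import numpy as np


def moving_average(x, M, symmetric=False):
    """M-point moving average of a 1-D signal (hypothetical helper).

    One-sided (Eq. 15-1): y[i] = (1/M) * sum_{j=0}^{M-1} x[i+j]
    Symmetric (M odd):    the M input points are centred on sample i
    Output samples whose window would run past either end of x stay NaN.
    """
    x = np.asarray(x, dtype=float)
    y = np.full(len(x), np.nan)
    if symmetric:
        if M % 2 == 0:
            raise ValueError("the symmetric form needs an odd M")
        half = (M - 1) // 2
        for i in range(half, len(x) - half):
            y[i] = x[i - half:i + half + 1].mean()
    else:
        for i in range(len(x) - M + 1):
            y[i] = x[i:i + M].mean()
    return y


x = np.arange(100.0)                              # toy input: x[i] = i
print(moving_average(x, 5)[80])                   # (80+81+82+83+84)/5 = 82.0
print(moving_average(x, 5, symmetric=True)[80])   # (78+79+80+81+82)/5 = 80.0
```

The explicit loop mirrors the equation; in practice `np.convolve(x, np.ones(M) / M, mode="valid")` gives the same one-sided values in a single call.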

DSP Notes Prepared

DSP NOTES PREPARED BY Ch. Ganapathy Reddy, Professor & HOD, ECE, Shaikpet, Hyderabad-08
Ch Ganapathy Reddy, Prof and HOD, ECE, GNITS id:[email protected],9052344333

DIGITAL SIGNAL PROCESSING

A signal is defined as any physical quantity that varies with time, space, or another independent variable. A system is defined as a physical device that performs an operation on a signal. A system is characterized by the type of operation it performs on the signal; such operations are referred to as signal processing.

Advantages of DSP
1. A digital programmable system allows flexibility in reconfiguring the digital signal processing operations simply by changing the program; in analog, the hardware must be redesigned.
2. In digital, accuracy depends on word length, floating- vs fixed-point arithmetic, etc.; in analog, it depends on the components.
3. Digital signals can be stored on disk.
4. It is very difficult to perform precise mathematical operations on signals in analog form, but these operations can be routinely implemented on a digital computer using software.
5. Cheaper to implement.
6. Small size.
7. Several filters need several boards in analog, whereas in digital the same DSP processor is used for many filters.

Disadvantages of DSP
1. When an analog signal changes very fast (beyond the 100 kHz range), it is difficult to convert it to digital form.
2. The usable bandwidth w is limited to one half of the sampling rate (w = ½ × sampling rate).
3. Finite word length problems.
4. When the signal is weak, within a few tenths of millivolts, we cannot amplify the signal after it is digitized.
5. DSP hardware is more expensive than general purpose microprocessors & microcontrollers.
6. …
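The half-sampling-rate limit in disadvantage 2 can be checked numerically: a tone above fs/2 produces exactly the same samples as a lower "alias" tone, so the two cannot be told apart after sampling. A small sketch, assuming NumPy; the 1 kHz sampling rate and 700 Hz tone are illustrative values, not from the notes.

```python
import numpy as np

fs = 1000.0                  # sampling rate in Hz (illustrative)
t = np.arange(32) / fs       # sample instants

f_high = 700.0               # above fs/2 = 500 Hz
f_alias = fs - f_high        # 300 Hz: where the 700 Hz tone folds to

x_high = np.cos(2 * np.pi * f_high * t)
x_alias = np.cos(2 * np.pi * f_alias * t)

# cos(2*pi*0.7*n) == cos(2*pi*n - 2*pi*0.3*n) == cos(2*pi*0.3*n) for integer n
print(np.allclose(x_high, x_alias))  # True
```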

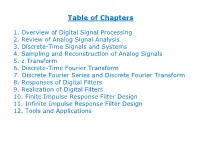

Discrete-Time Fourier Transform 7

Table of Chapters
1. Overview of Digital Signal Processing
2. Review of Analog Signal Analysis
3. Discrete-Time Signals and Systems
4. Sampling and Reconstruction of Analog Signals
5. z Transform
6. Discrete-Time Fourier Transform
7. Discrete Fourier Series and Discrete Fourier Transform
8. Responses of Digital Filters
9. Realization of Digital Filters
10. Finite Impulse Response Filter Design
11. Infinite Impulse Response Filter Design
12. Tools and Applications

Chapter 1: Overview of Digital Signal Processing

Chapter Intended Learning Outcomes:
(i) Understand basic terminology in digital signal processing
(ii) Differentiate digital signal processing and analog signal processing
(iii) Describe basic digital signal processing application areas

H. C. So

Signal: anything that conveys information, e.g.,
• Speech
• Electrocardiogram (ECG)
• Radar pulse
• DNA sequence
• Stock price
• Code division multiple access (CDMA) signal
• Image
• Video

[Fig. 1.1: Speech, the vowel "a" (amplitude vs. time in s, 0 to 0.02 s)]

[Fig. 1.2: ECG (amplitude vs. time in s, 0 to 2.5 s)]

[Fig. 1.3: Transmitted and received radar waveforms (two panels, amplitude vs. time)]

A radar transceiver sends a 1-D sinusoidal pulse at time 0. It then receives the echo reflected by an object at some range. The reflected signal is noisy and has a time delay that corresponds to the round-trip propagation time of the radar pulse. Given the signal propagation speed, the time delay is simply related to the range: it equals twice the range divided by the propagation speed (1.1). As a result, the received radar pulse contains the object range information.
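Relation (1.1) can be inverted to read range off a measured delay. The symbols were lost in extraction, so this sketch uses its own notation: R for range, τ for round-trip delay, c for propagation speed, with τ = 2R/c. The default c = 3×10⁸ m/s (approximate speed of light in free space) and the function names are assumptions for illustration.

```python
C_FREE_SPACE = 3.0e8  # approximate propagation speed in m/s (assumed: free space)


def range_from_delay(tau_s, c=C_FREE_SPACE):
    """Range R (m) of the reflector from round-trip delay tau_s (s).

    The pulse travels out and back, so tau = 2R/c and therefore R = c*tau/2.
    """
    return c * tau_s / 2.0


def delay_from_range(R_m, c=C_FREE_SPACE):
    """Round-trip delay (s) for an object at range R_m (m): tau = 2R/c."""
    return 2.0 * R_m / c


print(range_from_delay(2e-6))  # about 300 m for a 2 microsecond echo delay
```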

Noise Will Be Noise: Or Phase Optimized Recursive Filters for Interference Suppression, Signal Differentiation and State Estimation (Extended Version) Hugh L

Available online at https://arxiv.org/

Hugh L. Kennedy

Abstract— The increased temporal and spectral resolution of oversampled systems allows many sensor-signal analysis tasks (e.g. detection, classification and tracking) to be performed using a filterbank of low-pass digital differentiators. Such filters are readily designed via flatness constraints on the derivatives of the complex frequency response at dc, at π, and at the centre frequencies of narrowband interferers, i.e. using maximally-flat (MaxFlat) designs. Infinite-impulse-response (IIR) filters are ideal in embedded online systems with high data-rates because their computational complexity is independent of their (fading) 'memory'. A novel procedure for the design of MaxFlat IIR filterbanks with improved passband phase linearity is presented in this paper, as a possible alternative to Kalman and Wiener filters in a class of derivative-state estimation problems with uncertain signal models. Butterworth poles are used for configurable bandwidth and guaranteed stability. Flatness constraints of arbitrary order are derived for temporal derivatives of arbitrary order and a prescribed group delay. As longer lags (in samples) are readily accommodated in oversampled systems, an expression for the optimal group delay that minimizes the white-noise gain (i.e. the error variance of the derivative estimate at steady state) is derived. Filter zeros are optimally placed for the required passband phase response and the cancellation of narrowband interferers in the stopband, by solving a linear system of equations. Low complexity filterbank realizations are discussed, then their behaviour is analysed in a Teager-Kaiser operator to detect pulsed signals and in a state observer to track manoeuvring targets in simulated scenarios.
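The claim that an IIR filter's cost does not grow with its (fading) memory can be seen in the simplest recursive low-pass, a first-order exponential smoother. This is not the paper's MaxFlat design; the function name `exp_smoother` and the coefficient `a` are illustrative assumptions. Each output needs a fixed number of operations, while `a` closer to 1 lengthens the memory.

```python
import numpy as np


def exp_smoother(x, a):
    """First-order IIR low-pass (illustrative): y[n] = a*y[n-1] + (1-a)*x[n].

    Work per sample is constant (two multiplies, one add) no matter how
    long the exponentially fading memory a**n is; requires 0 <= a < 1.
    """
    y = np.empty(len(x))
    state = 0.0                      # y[-1] = 0 (zero initial condition)
    for n, xn in enumerate(x):
        state = a * state + (1.0 - a) * xn
        y[n] = state
    return y


impulse = np.zeros(10)
impulse[0] = 1.0
h = exp_smoother(impulse, 0.9)       # impulse response (1 - a) * a**n
```

A comparable FIR filter with the same effective memory would need many more taps, and therefore more multiplies per output sample.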

Finite Impulse Response (FIR) Digital Filters (II) Ideal Impulse Response Design Examples Yogananda Isukapalli

FIR Filter Design Problem
Given H(z) or $H(e^{j\omega})$, find the filter coefficients {b₀, b₁, b₂, …, b_{N−1}}, which are equal to {h₀, h₁, h₂, …, h_{N−1}} in the case of FIR filters.

[Figure: tapped-delay-line FIR structure; x[n] passes through a chain of unit delays z⁻¹, the taps are weighted by h₀, h₁, …, h_{N−1} and summed to form y[n]]

Consider a general (infinite impulse response) definition:

$$H(z) = \sum_{n=-\infty}^{\infty} h[n]\, z^{-n}$$

From complex variable theory, the inverse transform is:

$$h[n] = \frac{1}{2\pi j}\oint_C H(z)\, z^{n-1}\, dz$$

where C is a counterclockwise closed contour in the region of convergence of H(z) and encircling the origin of the z-plane.

Evaluating H(z) on the unit circle ($z = e^{j\omega}$):

$$H(e^{j\omega}) = \sum_{n=-\infty}^{\infty} h[n]\, e^{-jn\omega}, \qquad h[n] = \frac{1}{2\pi}\int_{-\pi}^{\pi} H(e^{j\omega})\, e^{jn\omega}\, d\omega$$

where $dz = j e^{j\omega}\, d\omega$.

Design of an ideal low pass FIR digital filter

[Figure: ideal low-pass response $H(e^{j\omega})$ of height K for $|\omega| \le \omega_c$ and zero elsewhere in $(-\pi, \pi]$, repeating with period 2π]

Find the ideal low pass impulse response {h[n]}:

$$h_{LP}[n] = \frac{1}{2\pi}\int_{-\pi}^{\pi} H(e^{j\omega})\, e^{jn\omega}\, d\omega = \frac{1}{2\pi}\int_{-\omega_c}^{\omega_c} K e^{jn\omega}\, d\omega$$

Hence

$$h_{LP}[n] = \frac{K}{n\pi}\sin(n\omega_c), \qquad n = 0, \pm 1, \pm 2, \ldots, \pm\infty$$

with $h_{LP}[0] = K\omega_c/\pi$, the limit as n → 0.

Let K = 1, $\omega_c = \pi/4$, n = 0, ±1, …, ±10. The impulse response coefficients are:

| n | h[n] | n | h[n] |
|---|------|---|------|
| 0 | 0.25 | ±6 | −0.053 |
| ±1 | 0.225 | ±7 | −0.032 |
| ±2 | 0.159 | ±8 | 0 |
| ±3 | 0.075 | ±9 | 0.025 |
| ±4 | 0 | ±10 | 0.032 |
| ±5 | −0.045 | | |

Non-Causal FIR Impulse Response
We can make it causal if we shift $h_{LP}[n]$ by 10 units to the right:

$$h_{LP}[n] = \frac{K}{(n-10)\pi}\sin\big((n-10)\omega_c\big), \qquad n = 0, 1, 2, \ldots$$
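The coefficient table can be regenerated from $h_{LP}[n] = K\sin(n\omega_c)/(n\pi)$. A sketch assuming NumPy; `ideal_lowpass` and `causal_shift` are hypothetical helper names. It uses `np.sinc(x) = sin(πx)/(πx)`, so $K(\omega_c/\pi)\,\mathrm{sinc}(n\omega_c/\pi)$ equals the formula above and handles n = 0 without a division by zero.

```python
import numpy as np


def ideal_lowpass(K, wc, N_half):
    """Truncated ideal low-pass response h[n] = K*sin(n*wc)/(n*pi), |n| <= N_half.

    K*wc/pi * sinc(n*wc/pi) is the same expression, with h[0] = K*wc/pi.
    """
    n = np.arange(-N_half, N_half + 1)
    h = K * wc / np.pi * np.sinc(n * wc / np.pi)
    return n, h


def causal_shift(n, h, N_half):
    """Delay by N_half samples: index m = n + N_half runs 0..2*N_half."""
    return n + N_half, h


n, h = ideal_lowpass(1.0, np.pi / 4, 10)
m, h_causal = causal_shift(n, h, 10)
print(np.round(h[10:], 3))  # n = 0..10; compare with the table
```

Shifting does not change the coefficient values, only their indices; the causal filter simply delays its output by 10 samples.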

Signals and Systems Lecture 8: Finite Impulse Response Filters

Dr. Guillaume Ducard, Fall 2018, based on materials from Prof. Dr. Raffaello D'Andrea, Institute for Dynamic Systems and Control, ETH Zurich, Switzerland

Outline
1. Finite Impulse Response Filters: Definition; General Properties
2. Moving Average (MA) Filter: MA filter as a simple low-pass filter; Fast MA filter implementation; Weighted Moving Average Filter
3. Non-Causal Moving Average Filter: Non-Causal Moving Average Filter; Non-Causal Weighted Moving Average Filter
4. Important Considerations: Phase is Important; Differentiation using FIR Filters; Frequency-domain observations; Higher derivatives

FIR filters: definition
The class of causal, LTI finite impulse response (FIR) filters can be captured by the difference equation

$$y[n] = \sum_{k=0}^{M-1} b_k\, u[n-k],$$

where
1. M is the number of filter coefficients (also known as the filter length),
2. M − 1 is often referred to as the filter order,
3. and $b_k \in \mathbb{R}$ are the filter coefficients that describe the dependence on current and previous inputs.
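A direct implementation of the FIR difference equation, plus a running-sum moving average. The outline lists a "fast MA filter implementation" but the snippet does not show it, so the recursive form $y[n] = y[n-1] + (u[n] - u[n-M])/M$ used here is an assumption (a common choice), not necessarily the lecture's. Function names are hypothetical; zero initial conditions ($u[n] = 0$ for $n < 0$) are assumed.

```python
import numpy as np


def fir_filter(b, u):
    """Causal FIR: y[n] = sum_{k=0}^{M-1} b[k] * u[n-k], with u[n] = 0 for n < 0."""
    M = len(b)
    y = np.zeros(len(u))
    for n in range(len(u)):
        for k in range(M):
            if n - k >= 0:
                y[n] += b[k] * u[n - k]
    return y


def fast_moving_average(u, M):
    """Running-sum M-point MA: y[n] = y[n-1] + (u[n] - u[n-M]) / M.

    Same output as fir_filter(np.ones(M) / M, u) but O(1) work per sample
    instead of O(M).
    """
    y = np.zeros(len(u))
    acc = 0.0                      # running sum of the last M inputs
    for n in range(len(u)):
        acc += u[n]
        if n - M >= 0:
            acc -= u[n - M]        # drop the sample that left the window
        y[n] = acc / M
    return y


rng = np.random.default_rng(0)
u = rng.standard_normal(200)
y_direct = fir_filter(np.ones(5) / 5, u)
y_fast = fast_moving_average(u, 5)
```

One design caveat: over very long signals the running sum can accumulate floating-point rounding error, which the direct form does not.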

Overview Textbook Prerequisite Course Outline

ESE 337 Digital Signal Processing: Theory, Fall 2018
Instructor: Yue Zhao
Time and Location: Tuesday, Thursday 7:00pm - 8:20pm, Javits Lecture Center 101
Contact: Email: [email protected], Office: 261 Light Engineering
Office Hours: Tuesday, Thursday 1:30pm - 3:00pm, or by appointment
Teaching Assistants and Office Hours:
• Jiaming Li ([email protected]): THU 12:30pm - 2:00pm, or by appointment, Location: 208 Light Engineering
(Changes of hours, if any, will be updated on Blackboard.)

Overview
Digital Signal Processing (DSP) lies at the heart of modern information technology in many fields, including digital communications, audio/image/video compression, speech recognition, medical imaging, sensing for health, touch screens, space exploration, etc. This class covers the basic principles of digital signal processing and digital filtering. Skills for analyzing and synthesizing algorithms and systems that process discrete-time signals will be developed. 3 credits.

Textbook
• A.V. Oppenheim and R.W. Schafer, Discrete-Time Signal Processing, Prentice Hall, Third Edition, 2009

Prerequisite
• ESE 305, Deterministic Signals and Systems

Course Outline
Week 1: DT signals, DT systems and properties, LTI systems
Week 2: Convolution, properties of LTI systems
Week 3: Examples of LTI systems, eigenfunctions, frequency response of DT systems, DTFT
Week 4: Convergence and properties of DTFT
Week 5: Theorems of DTFT, useful DTFT pairs, examples, Z-transform
Week 6: Examples of Z-transform, properties of ROC
Week 7: Inverse Z-transform, …

Windowing Techniques, the Welch Method for Improvement of Power Spectrum Estimation

Computers, Materials & Continua, Tech Science Press
DOI:10.32604/cmc.2021.014752
Article
Dah-Jing Jwo¹,*, Wei-Yeh Chang¹ and I-Hua Wu²
¹Department of Communications, Navigation and Control Engineering, National Taiwan Ocean University, Keelung, 202-24, Taiwan
²Innovative Navigation Technology Ltd., Kaohsiung, 801, Taiwan
*Corresponding Author: Dah-Jing Jwo. Email: [email protected]
Received: 01 October 2020; Accepted: 08 November 2020

Abstract: This paper revisits the characteristics of windowing techniques with various window functions involved, and then investigates spectral leakage mitigation using the Welch method. The discrete Fourier transform (DFT) is ubiquitous in digital signal processing (DSP) for spectrum analysis and can be efficiently realized by the fast Fourier transform (FFT). Sampling a signal results in distortion and thus may cause unpredictable spectral leakage in the discrete spectrum when the DFT is employed. Windowing is implemented by multiplying the input signal with a window function; windowing amplitude-modulates the input signal so that the spectral leakage is evened out. Windowing thus reduces the amplitude of the samples at the beginning and end of the window. In addition to selecting appropriate window functions, a pretreatment method such as the Welch method is effective in mitigating spectral leakage. Because of the noise caused by imperfect, finite data, the noise reduction provided by Welch's method is a desirable treatment. The nonparametric Welch method is an improvement on the periodogram spectrum estimation method where the signal-to-noise ratio (SNR) is high, and it mitigates noise in the estimated power spectra in exchange for reduced frequency resolution.
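A minimal sketch of the Welch procedure the abstract describes: split the data into overlapping segments, window each segment, and average the segment periodograms. The Hann window (`np.hanning`, the symmetric variant), 50% overlap, absence of detrending and the density scaling are assumptions made here, not choices taken from the paper; `welch_psd` is a hypothetical name.

```python
import numpy as np


def welch_psd(x, fs, seg_len=256, overlap=0.5):
    """Welch PSD sketch: average Hann-windowed periodograms of overlapping segments.

    Returns one-sided frequencies (Hz) and a density scaled so that
    sum(psd) * fs / seg_len equals the (window-weighted) mean signal power.
    """
    x = np.asarray(x, dtype=float)
    step = int(seg_len * (1 - overlap))
    if step < 1 or len(x) < seg_len:
        raise ValueError("need overlap < 1 and at least one full segment")
    win = np.hanning(seg_len)
    scale = fs * np.sum(win ** 2)             # density normalisation
    psd = np.zeros(seg_len // 2 + 1)
    count = 0
    for start in range(0, len(x) - seg_len + 1, step):
        seg = x[start:start + seg_len] * win  # taper the segment ends
        psd += np.abs(np.fft.rfft(seg)) ** 2 / scale
        count += 1
    psd /= count                              # averaging lowers the variance
    if seg_len % 2 == 0:
        psd[1:-1] *= 2                        # fold in negative frequencies
    else:
        psd[1:] *= 2                          # odd length: no Nyquist bin
    freqs = np.fft.rfftfreq(seg_len, d=1.0 / fs)
    return freqs, psd


rng = np.random.default_rng(1)
fs = 1000.0
t = np.arange(8192) / fs
x = np.sin(2 * np.pi * 125.0 * t) + 0.5 * rng.standard_normal(t.size)
freqs, psd = welch_psd(x, fs)
print(freqs[np.argmax(psd)])  # peak near 125 Hz
```

The trade-off named in the abstract is visible in `seg_len`: shorter segments give more averages (less noise) but a coarser bin spacing of `fs / seg_len`.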

Transformations for FIR and IIR Filters' Design

Symmetry, Article
V. N. Stavrou 1,*, I. G. Tsoulos 2 and Nikos E. Mastorakis 1,3
1 Hellenic Naval Academy, Department of Computer Science, Military Institutions of University Education, 18539 Piraeus, Greece
2 Department of Informatics and Telecommunications, University of Ioannina, 47150 Kostaki Artas, Greece; [email protected]
3 Department of Industrial Engineering, Technical University of Sofia, Bulevard Sveti Kliment Ohridski 8, 1000 Sofia, Bulgaria; mastor@tu-sofia.bg
* Correspondence: [email protected]

Citation: Stavrou, V.N.; Tsoulos, I.G.; Mastorakis, N.E. Transformations for FIR and IIR Filters' Design. Symmetry 2021, 13, 533. https://doi.org/10.3390/sym13040533

Abstract: In this paper, the transfer functions related to one-dimensional (1-D) and two-dimensional (2-D) filters have been theoretically and numerically investigated. Finite impulse response (FIR) and infinite impulse response (IIR) filters are the main 2-D filters investigated. More specifically, methods such as the window method, the bilinear transformation method, the design of 2-D filters from appropriate 1-D functions and the design of 2-D filters using optimization techniques are presented.

Keywords: FIR filters; IIR filters; recursive filters; non-recursive filters; digital filters; constrained optimization; transfer functions

1. Introduction
There are two types of digital filters: the Finite Impulse Response (FIR) filters, or non-recursive filters, and the Infinite Impulse Response (IIR) filters, or recursive filters [1–5]. In non-recursive filter structures the output depends only on the input, whereas in recursive filter structures the output depends both on the input and on the previous outputs.
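The bilinear transformation method mentioned in the abstract can be illustrated on the simplest case: a first-order analog low-pass $H_a(s) = \omega_c/(s+\omega_c)$ mapped with $s = (2/T)(1 - z^{-1})/(1 + z^{-1})$. This is a generic textbook example, not the paper's 1-D or 2-D designs; the function names and the 100 Hz / 1 kHz values are illustrative. The resulting recursive filter is $y[n] = b_0 x[n] + b_1 x[n-1] - a_1 y[n-1]$.

```python
import numpy as np


def bilinear_first_order_lowpass(wc, T):
    """Map Ha(s) = wc / (s + wc) to H(z) = (b0 + b1 z^-1) / (1 + a1 z^-1).

    Substituting s = k (1 - z^-1)/(1 + z^-1), k = 2/T, and normalising
    gives b0 = b1 = wc/(k + wc) and a1 = (wc - k)/(k + wc).
    """
    k = 2.0 / T
    b0 = wc / (k + wc)
    a1 = (wc - k) / (k + wc)
    return np.array([b0, b0]), np.array([1.0, a1])


def freq_response(b, a, w):
    """Evaluate H(e^{jw}) for first-order coefficient arrays b and a."""
    z1 = np.exp(-1j * np.asarray(w))
    return (b[0] + b[1] * z1) / (a[0] + a[1] * z1)


wc, T = 2 * np.pi * 100.0, 1e-3            # 100 Hz cutoff, 1 kHz sampling
b, a = bilinear_first_order_lowpass(wc, T)
w_mapped = 2 * np.arctan(wc * T / 2)       # where the analog cutoff lands
print(abs(freq_response(b, a, w_mapped)))  # 1/sqrt(2), the -3 dB point
```

The arctangent line shows the frequency warping of the bilinear map: the analog cutoff Ω lands at ω = 2·arctan(ΩT/2) rather than at ΩT. SciPy's `scipy.signal.bilinear` performs the same substitution for general polynomials.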

Understanding Digital Signal Processing

Richard G. Lyons
Prentice Hall PTR, Prentice Hall Professional Technical Reference, Upper Saddle River, New Jersey 07458, www.phptr.com

Contents
Preface
1 DISCRETE SEQUENCES AND SYSTEMS
1.1 Discrete Sequences and Their Notation
1.2 Signal Amplitude, Magnitude, Power
1.3 Signal Processing Operational Symbols
1.4 Introduction to Discrete Linear Time-Invariant Systems
1.5 Discrete Linear Systems
1.6 Time-Invariant Systems
1.7 The Commutative Property of Linear Time-Invariant Systems
1.8 Analyzing Linear Time-Invariant Systems
2 PERIODIC SAMPLING
2.1 Aliasing: Signal Ambiguity in the Frequency Domain
2.2 Sampling Low-Pass Signals
2.3 Sampling Bandpass Signals
2.4 Spectral Inversion in Bandpass Sampling
3 THE DISCRETE FOURIER TRANSFORM
3.1 Understanding the DFT Equation
3.2 DFT Symmetry
3.3 DFT Linearity
3.4 DFT Magnitudes
3.5 DFT Frequency Axis
3.6 DFT Shifting Theorem
3.7 Inverse DFT
3.8 DFT Leakage
3.9 Windows
3.10 DFT Scalloping Loss
3.11 DFT Resolution, Zero Padding, and Frequency-Domain Sampling
3.12 DFT Processing Gain
3.13 The DFT of Rectangular Functions
3.14 The DFT Frequency Response to a Complex Input
3.15 The DFT Frequency Response to a Real Cosine Input
3.16 The DFT Single-Bin Frequency Response to a Real Cosine Input
3.17 Interpreting the DFT
4 THE FAST FOURIER TRANSFORM
4.1 Relationship of the FFT to the DFT
4.2 Hints on Using FFTs in Practice
4.3 FFT Software Programs

System Identification and Power Spectrum Estimation

IEEE Transactions on Acoustics, Speech, and Signal Processing, Vol. ASSP-27, No. 2, April 1979, p. 182

Short-Time Fourier Analysis Techniques for FIR System Identification and Power Spectrum Estimation

Lawrence R. Rabiner, Fellow, IEEE, and Jont B. Allen, Member, IEEE

Abstract—A wide variety of methods have been proposed for system modeling and identification. To date, the most successful of these methods have been time domain procedures such as least squares analysis, or linear prediction (ARMA models). Although spectral techniques have been proposed for spectral estimation and system identification, the resulting spectral and system estimates have always been strongly affected by the analysis window (biased estimates), thereby reducing the potential applications of this class of techniques. In this paper we propose a novel short-time Fourier transform analysis technique in which the influences of the window on a spectral estimate can essentially be removed entirely (an unbiased estimator) by linearly combining biased estimates. As a result, section (FFT) lengths for analysis can be made as small as possible, thereby increasing the speed of the algorithm without sacrificing accuracy. The proposed algorithm has the important property that as the number of samples used in the estimate increases, the solution quickly approaches the least squares (theoretically optimum) solution.

Symbols:
L = length of w(n)
N = length of DFT F[·]
N' = number of data points
N1 = lower limit on sum
N2 = upper limit on sum
M = length of h
M̂ = estimate of M
h(n) = system's impulse response
ĥ(n) = estimate of h(n)
F[·] = Fourier transform operator
F⁻¹[·] = inverse Fourier transform
q1 = number of 0 diagonals below main
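The abstract uses the time-domain least-squares solution as its reference point. A sketch of that baseline (not the paper's short-time Fourier technique): stack delayed copies of the input into a matrix and solve for the M-tap impulse response with `np.linalg.lstsq`. The function name, the zero initial condition and the test system are assumptions for illustration.

```python
import numpy as np


def ls_fir_identify(x, y, M):
    """Least-squares estimate of an M-tap FIR response h from input x and output y.

    Model: y[n] = sum_{k=0}^{M-1} h[k] * x[n-k], with x[n] = 0 for n < 0.
    """
    N = len(x)
    X = np.zeros((N, M))
    for k in range(M):
        X[k:, k] = x[:N - k]          # column k holds x delayed by k samples
    h_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    return h_hat


rng = np.random.default_rng(2)
x = rng.standard_normal(500)                  # white excitation
h_true = np.array([1.0, -0.5, 0.25, 0.1])     # hypothetical unknown system
y = np.convolve(x, h_true)[:500]
y_noisy = y + 0.01 * rng.standard_normal(500)
print(np.round(ls_fir_identify(x, y_noisy, 4), 2))
```

This direct approach needs the model length M in advance and solves an N × M problem; the paper's motivation is to reach the same answer with short FFT sections instead.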

ESE 531: Digital Signal Processing Today IIR Filter Design Impulse

ESE 531: Digital Signal Processing
Lec 18: March 30, 2017, IIR Filters and Adaptive Filters
Penn ESE 531 Spring 2017 – Khanna

Today
• IIR Filter Design
  – Impulse Invariance
  – Bilinear Transformation
• Transformation of DT Filters
• Adaptive Filters
• LMS Algorithm

IIR Filter Design
• Transform a continuous-time filter into a discrete-time filter meeting specs
  – Pick a suitable transformation from s (Laplace variable) to z (or t to n)
  – Pick a suitable analog Hc(s) allowing specs to be met, transform to H(z)
• We've seen this before… impulse invariance

Impulse Invariance
• Want to implement a continuous-time system in discrete time
• With Hc(jΩ) bandlimited, choose

$$H(e^{j\omega}) = H_c\!\left(j\frac{\omega}{T}\right), \qquad |\omega| < \pi$$

• With the further requirement that T be chosen such that

$$H_c(j\Omega) = 0, \qquad |\Omega| \ge \pi/T,$$

the discrete-time impulse response is

$$h[n] = T\, h_c(nT)$$

IIR by Impulse Invariance
• If $H_c(j\Omega) \approx 0$ for $|\Omega| > \pi/T_d$, there is no aliasing and $H(e^{j\omega}) = H_c(j\omega/T_d)$, $|\omega| < \pi$
• To get a particular $H(e^{j\omega})$, find the corresponding $H_c$ and $T_d$ for which the above is true (within specs)
• Note: $T_d$ is not for aliasing control; it is used for frequency scaling.

Example

$$e^{at} \;\overset{\mathcal{L}}{\longleftrightarrow}\; \frac{1}{s-a}$$
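Applying $h[n] = T\,h_c(nT)$ to the example pair: with $h_c(t) = e^{at}u(t)$ (a < 0 assumed for stability), $h[n] = T e^{anT}u[n]$, whose z-transform is $H(z) = T/(1 - e^{aT}z^{-1})$, i.e. the recursion $y[n] = e^{aT}y[n-1] + T x[n]$. A sketch assuming NumPy; function names and the values a = −100, T = 1 ms are illustrative.

```python
import numpy as np


def impulse_invariant_first_order(a, T):
    """Impulse invariance for Hc(s) = 1/(s - a), a < 0.

    hc(t) = exp(a t) u(t)  ->  h[n] = T * exp(a n T) u[n]
    so H(z) = T / (1 - exp(a T) z^-1). Returns (gain b0, pole p).
    """
    return T, np.exp(a * T)


def run_recursion(b0, p, x):
    """Difference equation of H(z): y[n] = p * y[n-1] + b0 * x[n]."""
    y = np.zeros(len(x))
    prev = 0.0
    for n, xn in enumerate(x):
        prev = p * prev + b0 * xn
        y[n] = prev
    return y


a, T = -100.0, 1e-3
b0, p = impulse_invariant_first_order(a, T)
impulse = np.zeros(20)
impulse[0] = 1.0
h = run_recursion(b0, p, impulse)   # should equal T * hc(nT)
```

A first-order system is not strictly bandlimited, so the no-aliasing condition above holds only approximately here; the smaller T is relative to 1/|a|, the closer H(e^{jω}) tracks Hc(jω/T).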