Accuracy Aspects of Utilizing Raw Imagery in Photogrammetric Measurement

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Management of Large Sets of Image Data Capture, Databases, Image Processing, Storage, Visualization Karol Kozak

Management of large sets of image data: Capture, Databases, Image Processing, Storage, Visualization. Karol Kozak. 1st edition © 2014 Karol Kozak & bookboon.com, ISBN 978-87-403-0726-9. Contents: 1 Digital image; 2 History of digital imaging; 3 Amount of produced images – is it danger?; 4 Digital image and privacy; 5 Digital cameras; 5.1 Methods of image capture; 6 Image formats; 7 Image Metadata – data about data; 8 Interactive visualization (IV); 9 Basic of image processing; 10 Image Processing software; 11 Image management and image databases; 12 Operating system (os) and images; 13 Graphics processing unit (GPU); 14 Storage and archive; 15 Images in different disciplines; 15.1 Microscopy; 15.2 Medical imaging; 15.3 Astronomical images; 15.4 Industrial imaging; 16 Selection of best digital images; References.

Camera Raw Workflows

RAW WORKFLOWS: FROM CAMERA TO POST. Copyright 2007, Jason Rodriguez, Silicon Imaging, Inc.
Introduction • What is a RAW file format, and what cameras shoot to these formats? • How does working with RAW file-format cameras change the way I shoot? • What changes are happening inside the camera I need to be aware of, and what happens when I go into post? • What are the available post paths? Is there just one, or are there many ways to reach my end goals? • What post tools support RAW file format workflows? • How do RAW codecs like CineForm RAW enable me to work faster and with more efficiency?
What is a RAW file? • In simplest terms, it is the native digital data off the sensor's A/D converter with no further destructive DSP processing applied • It is derived from a photometrically linear data source, or can be reconstructed to produce data that directly correspond to the light that was captured by the sensor at the time of exposure (i.e., LOG->Lin reverse LUT) • Photometrically linear: 1:1 photons to digital values; doubling of light means doubling of digitally encoded value • In a film analogy, it would be termed a “digital negative” because it is a latent representation of the light that was captured by the sensor (up to the limit of the full-well capacity of the sensor) • “RAW” cameras include Thomson Viper, Arri D-20, Dalsa Evolution 4K, Silicon Imaging SI-2K, Red One, Vision Research Phantom, noXHD, Reel-Stream • “Quasi-RAW” cameras include the Panavision Genesis
In-Camera Processing • Most non-RAW cameras on the market record to 8-bit YUV formats

Advanced Color Machine Vision and Applications Dr

Advanced Color Machine Vision and Applications. Dr. Romik Chatterjee, V.P. Business Development, Graftek Imaging. 2016 V04 - for tutorial 5/4/2016
Outline • What is color vision? Why is it useful? • Comparing human and machine color vision • Physics of color imaging • A model of color image formation • Human color vision and measurement • Color machine vision systems • Basic color machine vision algorithms • Advanced algorithms & applications • Human vision Machine Vision • Case study
Important terms
What is Color? • Our perception of wavelengths of light – Photometric (neural computed) measures – About 360 nm to 780 nm (indigo to deep red) – “…the rays are not coloured…” - Isaac Newton • Machine vision (MV) measures energy at different wavelengths (Radiometric). nm: nanometer = 1 billionth of a meter
Color Image • Color image values, Pi, are a function of: – Illumination spectrum, E(λ) (lighting), where λ = wavelength – Object’s spectral reflectance, R(λ) (object “color”) – Sensor responses, Si(λ) (observer, viewer, camera) – Processing • Human <~> MV – Eye <~> Camera – Brain <~> Processor
Color Vision Estimates Object Color • The formation of color image values, Pi, has too many unknowns (E(λ) = lighting spectrum, etc.) to directly estimate object color. • Hard to “factor out” these unknowns to get an estimate of object color – In machine vision, we often don’t bother to! • Use knowledge, constraints, and computation to solve for object color estimates – Not perfect, but usually works… • If color vision is so hard, why use it?
Material Property

Characteristics of Digital Fundus Camera Systems Affecting Tonal Resolution in Color Retinal Images

Characteristics of Digital Fundus Camera Systems Affecting Tonal Resolution in Color Retinal Images. ORIGINAL ARTICLE. Marshall E Tyler, CRA, FOPS (Wake Forest University, Medical Center Boulevard, Winston-Salem, NC 27157-1033, [email protected]); Larry D Hubbard, MAT (University of Wisconsin; Madison, WI); Ken Boydston (Megavision, Inc.; Santa Barbara, CA); Anthony J Pugliese (Choices for Service in Imaging, Inc., Glen Rock, NJ).
INTRODUCTION This article examines the impact of digital fundus camera system technology on color retinal image quality, specifically as it affects tonal resolution (brightness, contrast, and color balance). We explore three topics: (1) the nature and characteristics of the silicon-based photo sensor, (2) the manner in which the digital fundus camera incorporates the sensor as part of a complete system, and (3) some considerations as to how to select a digital camera system to satisfy your tonal resolution needs, and how to accept delivery and training on a new system.
... of exposure, a smaller or larger number of photons strikes each sensel, depending upon the brightness of the incident light in the scene. Within the dynamic range of the sensor, it can differentiate specific levels of illumination on a grayscale rather than just black or white. However, that range of detection is not infinite; too dark and no light is detected to provide pictorial detail; too bright and the sensor “maxes out”, thus failing to record detail. This latter point requires further explanation. Sensels have a fixed capacity to hold electrons generated by incident photons.

Measuring Camera Shannon Information Capacity with a Siemens Star Image

https://doi.org/10.2352/ISSN.2470-1173.2020.9.IQSP-347 © 2020, Society for Imaging Science and Technology. Measuring camera Shannon Information Capacity with a Siemens Star Image. Norman L. Koren, Imatest LLC, Boulder, Colorado, USA.
Abstract Shannon information capacity, which can be expressed as bits per pixel or megabits per image, is an excellent figure of merit for predicting camera performance for a variety of machine vision applications, including medical and automotive imaging systems. Its strength is that it combines the effects of sharpness (MTF) and noise, but it has not been widely adopted because it has been difficult to measure and has never been standardized. We have developed a method for conveniently measuring information capacity from images of the familiar sinusoidal Siemens Star chart. The key is that noise is measured in the presence of the image signal, rather than in a separate location where image processing may be different, a commonplace occurrence with bilateral filters. The method also enables measurement of SNRI, which is a key performance metric for object detection. Information capacity is strongly affected by sensor noise, lens quality, ISO speed (Exposure Index), and the demosaicing algorithm, which affects aliasing.
Measurement background To measure signal and noise at the same location, we use an image of a sinusoidal Siemens-star test chart consisting of $n_{\text{cycles}}$ total cycles, which we analyze by dividing the star into $k$ radial segments (32 or 64), each of which is subdivided into $m$ angular segments (8, 16, or 24) of length $P_{\text{seg}}$. The number of sine wave cycles in each angular segment is $n = n_{\text{cycles}}/m$.

Spatial Frequency Response of Color Image Sensors: Bayer Color Filters and Foveon X3 Paul M

Spatial Frequency Response of Color Image Sensors: Bayer Color Filters and Foveon X3. Paul M. Hubel, John Liu and Rudolph J. Guttosch, Foveon, Inc., Santa Clara, California.
Abstract We compared the Spatial Frequency Response (SFR) of image sensors that use the Bayer color filter pattern and Foveon X3 technology for color image capture. Sensors for both consumer and professional cameras were tested. The results show that the SFR for Foveon X3 sensors is up to 2.4x better. In addition to the standard SFR method, we also applied the SFR method using a red/blue edge. In this case, the X3 SFR was 3–5x higher than that for Bayer filter pattern devices.
Introduction In their native state, the image sensors used in digital image capture devices are black-and-white. To enable color capture, small color filters are placed on top of each photodiode. The filter pattern most often used is derived in some way from the Bayer pattern [1], a repeating array of red, green, and blue pixels that lie ...
Bayer Background The Bayer pattern, also known as a Color Filter Array (CFA) or a mosaic pattern, is made up of a repeating array of red, green, and blue filter material deposited on top of each spatial location in the array (figure 1). These tiny filters enable what is normally a black-and-white sensor to create color images. By using 2 green filtered pixels for every red or blue, the Bayer pattern is designed to maximize perceived sharpness in the luminance channel, composed mostly of green information.
Figure 1 Typical Bayer filter pattern showing the alternate sampling of red, green and blue pixels (rows alternate R G R G and G B G B).

Multi-Frame Demosaicing and Super-Resolution of Color Images

Multi-Frame Demosaicing and Super-Resolution of Color Images. Sina Farsiu∗, Michael Elad‡, Peyman Milanfar§.
Abstract In the last two decades, two related categories of problems have been studied independently in the image restoration literature: super-resolution and demosaicing. A closer look at these problems reveals the relation between them, and as conventional color digital cameras suffer from both low-spatial resolution and color-filtering, it is reasonable to address them in a unified context. In this paper, we propose a fast and robust hybrid method of super-resolution and demosaicing, based on a MAP estimation technique by minimizing a multi-term cost function. The L1 norm is used for measuring the difference between the projected estimate of the high-resolution image and each low-resolution image, removing outliers in the data and errors due to possibly inaccurate motion estimation. Bilateral regularization is used for spatially regularizing the luminance component, resulting in sharp edges and forcing interpolation along the edges and not across them. Simultaneously, Tikhonov regularization is used to smooth the chrominance components. Finally, an additional regularization term is used to force similar edge location and orientation in different color channels. We show that the minimization of the total cost function is relatively easy and fast. Experimental results on synthetic and real data sets confirm the effectiveness of our method.
∗Corresponding author: Electrical Engineering Department, University of California Santa Cruz, Santa Cruz CA. 95064 USA. Email: [email protected], Phone:(831)-459-4141, Fax: (831)-459-4829. ‡Computer Science Department, The Technion, Israel Institute of Technology, Israel.

Demosaicking: Color Filter Array Interpolation [Exploring the Imaging

[Bahadir K. Gunturk, John Glotzbach, Yucel Altunbasak, Ronald W. Schafer, and Russel M. Mersereau] Demosaicking: Color Filter Array Interpolation [Exploring the imaging process and the correlations among three color planes in single-chip digital cameras]
Digital cameras have become popular, and many people are choosing to take their pictures with digital cameras instead of film cameras. When a digital image is recorded, the camera needs to perform a significant amount of processing to provide the user with a viewable image. This processing includes correction for sensor nonlinearities and nonuniformities, white balance adjustment, compression, and more. An important part of this image processing chain is color filter array (CFA) interpolation or demosaicking. A color image requires at least three color samples at each pixel location. Computer images often use red (R), green (G), and blue (B). A camera would need three separate sensors to completely measure the image. In a three-chip color camera, the light entering the camera is split and projected onto each spectral sensor. Each sensor requires its proper driving electronics, and the sensors have to be registered precisely. These additional requirements add a large expense to the system. Thus, many cameras use a single sensor covered with a CFA. The CFA allows only one color to be measured at each pixel. This means that the camera must estimate the missing two color values at each pixel. This estimation process is known as demosaicking. Several patterns exist for the filter array.

Cameras • Video Camera

Cameras. Digital Visual Effects, Spring 2007, Yung-Yu Chuang, 2007/3/6, with slides by Fredo Durand, Brian Curless, Steve Seitz and Alexei Efros.
Outline • Pinhole camera • Film camera • Digital camera • Video camera
Camera trial #1 Put a piece of film in front of an object.
Pinhole camera Add a barrier to block off most of the rays. • It reduces blurring • The pinhole is known as the aperture • The image is inverted
Shrinking the aperture Why not make the aperture as small as possible? • Less light gets through • Diffraction effect
High-end commercial pinhole cameras $200~$700
Adding a lens A lens focuses light onto the film • There is a specific distance at which objects are “in focus” • Other points project to a “circle of confusion” in the image
Lenses Thin lens equation: • Any object point satisfying this equation is in focus • Thin lens applet: http://www.phy.ntnu.edu.tw/java/Lens/lens_e.html
Exposure = aperture + shutter speed • Aperture of diameter D restricts the range of rays (aperture may be on either side of the lens) • Shutter speed is the amount of time that light is allowed to pass through the aperture
Exposure • Two main parameters: – Aperture (in f stop) – Shutter speed (in fraction of a second)
Effects of shutter speeds • Slower shutter speed => more light, but more motion blur • Faster shutter speed freezes motion
Aperture and depth of field • Aperture is the diameter of the lens opening, usually specified by f-stop, f/D, a fraction of the focal length.

Evaluation of the Foveon X3 Sensor for Astronomy

Evaluation of the Foveon X3 sensor for astronomy. Anna-Lea Lesage, Matthias Schwarz, [email protected], Hamburger Sternwarte, October 2009.
Abstract Foveon X3 is a new type of CMOS colour sensor. We present here an evaluation of this sensor for the detection of transit planets. Firstly, we determined the gain, the dark current and the read out noise of each layer. Then the sensor was used for observations of Tau Bootes. Finally half of the transit of HD 189733 b could be observed.
1 Introduction The detection of exo-planets with the transit method relies on the observation of a diminution of the flux of the host star during the passage of the planet. This is visualised in time as a small dip in the light curve of the star. This dip usually represents a decrease of 1% to 3% of the magnitude of the star. Its detection is highly dependent on the stability of the detector, providing a constant flux for the star. However, ground based observations are limited by the influence of the atmosphere. The latter induces two effects: seeing, which blurs the image of the star, and scintillation, which produces variation of the apparent magnitude of the star. The seeing can be corrected through the use of adaptive optics. Yet the effect of scintillation has to be corrected by the observation of reference stars during the observation time. The perturbations induced by the atmosphere are mostly wavelength independent. Thus, recording two identical images but at different wavelengths permits an identification of the wavelength independent effects.

An Overview of State-Of-The-Art Denoising and Demosaicking Techniques: Toward a Unified Framework for Handling Artifacts During Image Reconstruction

AN OVERVIEW OF STATE-OF-THE-ART DENOISING AND DEMOSAICKING TECHNIQUES: TOWARD A UNIFIED FRAMEWORK FOR HANDLING ARTIFACTS DURING IMAGE RECONSTRUCTION. Bart Goossens∗, Hiep Luong, Jan Aelterman, Aleksandra Pižurica and Wilfried Philips. Ghent University - TELIN - IPI - iMinds, Sint-Pietersnieuwstraat 41, B-9000 Ghent, Belgium. [email protected]
ABSTRACT Pushing the physical limits of the camera sensors brings significant challenges to image post-processing techniques, which often have to work under various constraints, such as low-power, limited memory and computational resources. Other platforms, e.g., desktop PCs suffer less from these issues, allowing extra room for improved reconstruction. Moreover, the captured images are subject to many sources of imperfection (e.g., noise, blur, saturation) which complicate the processing. In this paper, we give an overview of some recent work aimed at overcoming these problems. We focus especially on denoising, deblurring and demosaicking techniques.
Fig. 1. A unified reconstruction framework. (Blocks: RAW input image; degradation model (blur, signal-dependent noise, demosaicking…); impose prior knowledge w.r.t. undegraded image (e.g., wavelets, shearlets); updated RAW image; iterative solver; reconstructed image.)
I. INTRODUCTION In the past decade, there has been a significant increase in diversity of both display devices and image acquisition devices, going from tiny cameras in mobile phones to 100 megapixel (MP) digital cameras.
... techniques have been developed that jointly solve several degradations (e.g., noise, demosaicking, blur, saturation) at once, using the mathematical framework of estimation theory, leading to much better reconstruction results. Several notable examples are: joint denoising and demosaicking [1]–[4], joint demosaicking and deconvolution [5]–[8], high dynamic range reconstruction and denoising [9], [10] and declipping and ...

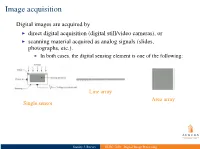

ELEC 7450 - Digital Image Processing Image Acquisition

Stanley J. Reeves, ELEC 7450 - Digital Image Processing
Image acquisition Digital images are acquired by • direct digital acquisition (digital still/video cameras), or • scanning material acquired as analog signals (slides, photographs, etc.). • In both cases, the digital sensing element is one of the following: line array, area array, single sensor • indirect imaging techniques, e.g., MRI (Fourier), CT (Backprojection) • physical quantities other than intensities are measured • computation leads to 2-D map displayed as intensity
Single sensor acquisition
Linear array acquisition
Array sensor acquisition • Irradiance incident at each photo-site is integrated over time • Resulting array of intensities is moved out of sensor array and into a buffer • Quantized intensities are stored as a grayscale image
Two types of quantization: • spatial: limited number of pixels • gray-level: limited number of bits to represent intensity at a pixel