Real-Time 3D Ambisonics Using Faust, Processing, Pure Data, and OSC

Total pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Embedded Real-Time Audio Signal Processing with Faust

Embedded Real-Time Audio Signal Processing With Faust. Romain Michon, Yann Orlarey, Stéphane Letz, Dominique Fober, Dirk Roosenburg. To cite this version: Romain Michon, Yann Orlarey, Stéphane Letz, Dominique Fober, Dirk Roosenburg. Embedded Real-Time Audio Signal Processing With Faust. International Faust Conference (IFC-20), Dec 2020, Paris, France. hal-03124896. HAL Id: hal-03124896, https://hal.archives-ouvertes.fr/hal-03124896. Submitted on 29 Jan 2021. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Proceedings of the 2nd International Faust Conference (IFC-20), Maison des Sciences de l’Homme Paris Nord, France, December 1-2, 2020. Authors: Romain Michon (a, b), Yann Orlarey (a), Stéphane Letz (a), Dominique Fober (a), and Dirk Roosenburg (d, e). Affiliations: (a) GRAME – Centre National de Création Musicale, Lyon, France; (b) Center for Computer Research in Music and Acoustics, Stanford University, USA; (d) TIMARA, Oberlin Conservatory of Music, USA; (e) Department of Physics, Oberlin College, USA. [email protected] ABSTRACT: FAUST has been targeting an increasing number of embedded platforms for real-time audio signal processing applications in recent years. [...] multi-channel audio, etc.). These products work in conjunction with specialized Linux distributions where audio processing tasks are carried out outside of the operating system, allowing for the -

Extending the Faust VST Architecture with Polyphony, Portamento and Pitch Bend

Extending the Faust VST Architecture with Polyphony, Portamento and Pitch Bend. Yan Michalevsky, Department of Electrical Engineering, Stanford University, [email protected]; Julius O. Smith, Center for Computer Research in Music and Acoustics (CCRMA), Stanford University, AES Fellow, [email protected]; Andrew Best, Blamsoft, Inc., [email protected]. Abstract: We introduce the vsti-poly.cpp architecture for the Faust programming language. It provides several features that are important for practical use of Faust-generated VSTi synthesizers. We focus on the VST architecture as one that has been used traditionally and is supported by many popular tools, and add several important features: polyphony, note history and pitch-bend support. These features take Faust-generated VST instruments a step forward in terms of generating plugins that could be used in Digital Audio Workstations (DAW) for real-world music production. Keywords: Faust, VST, Plugin, DAW. 1 Introduction: Faust [5] is a popular music/audio signal processing language developed by Yann Orlarey et al. [...] The VST (Virtual Studio Technology) plugin standard was released by Steinberg GmbH (famous for Cubase and other music and sound production products) in 1996, and was followed by the widespread version 2.0 in 1999 [8]. It is a particularly common format supported by many older and newer tools. Some of the features expected from a VST plugin can be found in the VST SDK code. Examining the list of MIDI events [1] can also hint at what capabilities are expected to be implemented by instrument plugins. We also draw from our experience with MIDI instruments and commercial VST plugins in order to formulate sound feature requirements. In order for Faust to be a practical tool for generating such plugins, it should support most of the features expected, such as the following: • Responding to MIDI keyboard events -

Audio Signal Processing in Faust

Audio Signal Processing in Faust. Julius O. Smith III, Center for Computer Research in Music and Acoustics (CCRMA), Department of Music, Stanford University, Stanford, California 94305 USA, jos at ccrma.stanford.edu. Abstract: Faust is a high-level programming language for digital signal processing, with special support for real-time audio applications and plugins on various software platforms including Linux, Mac OS X, iOS, Android, Windows, and embedded computing environments. Audio plugin formats supported include VST, LV2, AU, Pd, Max/MSP, SuperCollider, and more. This tutorial provides an introduction focusing on a simple example of white noise filtered by a variable resonator. Contents: 1 Introduction; 1.1 Installing Faust; 1.2 Faust Examples; 2 Primer on the Faust Language; 2.1 Basic Signal Processing Blocks (Elementary Operators on Signals); 2.2 Block Diagram Operators; 2.3 Examples; 2.4 Infix Notation Rewriting; 2.5 Encoding Block Diagrams in the Faust Language; 2.6 Statements; 2.7 Function Definition; 2.8 Partial Function Application; 2.9 Functional Notation for Operators; 2.10 Examples; 2.11 Summary of Faust Notation Styles; 2.12 Unary Minus; 2.13 Fixing -

Final Degree Project (Proyecto Fin de Grado)

ESCUELA TÉCNICA SUPERIOR DE INGENIERÍA Y SISTEMAS DE TELECOMUNICACIÓN. FINAL DEGREE PROJECT. TITLE: Implementation of a Synthesizer on a Low-Cost Development Kit (Implementación de un sintetizador en un kit de desarrollo de bajo coste). AUTHOR: Arturo Fernández. DEGREE: Bachelor's Degree in Sound and Image Engineering. SUPERVISOR: Antonio Mínguez Olivares. DEPARTMENT: Signal Theory and Communications (Teoría de la señal y Comunicación). Members of the Examination Committee: CHAIR: Eduardo Nogueira Díaz; SUPERVISOR: Antonio Mínguez Olivares; SECRETARY: Francisco Javier Tabernero Gil. Acknowledgements: − To my parents and my sister, for supporting me in every respect (with the inevitable disagreements) throughout this long-distance race. − To my friends and my girlfriend, for putting up with hardly seeing me for long stretches of time. − To the professors who managed to spark my interest and devoted hours of tutoring to me. − To my supervisor, for accepting my proposal and supervising the project without a second thought. − To my brother-in-law Rafa, who, despite being perpetually swamped, made time to help me in the most difficult moments, and to motivate and guide me throughout all these years. Without his help, this would not have been possible. − Finally, thanks to myself, for keeping up this pace of life and having the ambition to carry on and take on new challenges. As my father says to me: “You won't stop until you reach the Moon, will you?” Abstract: This project consists of the design of a ‘virtual analog’ synthesizer based on synthesizers from the 1960s and 70s. To that end, it studies the theory behind the basic topology of subtractive synthesizers, as well as the elements involved in generating and modifying sound. -

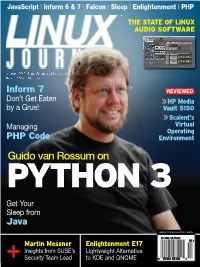

Guido Van Rossum on PYTHON 3 Get Your Sleep From

LINUX JOURNAL. Since 1994: The Original Magazine of the Linux Community. October 2008, Issue 174. www.linuxjournal.com. $5.99US $5.99CAN. Languages: JavaScript | Inform 6 & 7 | Falcon | Sleep | Enlightenment | PHP. Cover lines: The State of Linux Audio Software; Inform 7 Reviewed: Don’t Get Eaten by a Grue!; HP Media Vault 5150; Scalent’s Virtual Operating Environment; Managing PHP Code; Guido van Rossum on Python 3; Get Your Sleep from Java; Enlightenment E17: Lightweight Alternative to KDE and GNOME; Insights from SUSE’s Security Team Lead; Martin Messner. -

An Old-School Polyphonic Synthesizer Samplv1

Vee One Suite 0.9.4 - A Late Autumn'18 Release. 12 December, 2018 - 19:00, rncbc. Greetings! The Vee One Suite of so-called old-school software instruments, synthv1, a polyphonic subtractive synthesizer, samplv1, a polyphonic sampler synthesizer, drumkv1, yet another drum-kit sampler, and padthv1, a polyphonic additive synthesizer, are being released for late Autumn'18. All are still available in dual format: a pure stand-alone JACK client with JACK-session, NSM (Non Session Management) and both JACK MIDI and ALSA MIDI input support; and an LV2 instrument plug-in. The changes for this special season are the following: Make sure all LV2 state sample file references are resolved to their original and canonical file-paths, not symlinks (applies to samplv1 and drumkv1 only). Fixed a severe bug on saving the LV2 plug-in state: the sample file reference was being saved with the wrong name identifier and thus went missing on every session or state reload thereafter (applies to samplv1 only). Sample waveform drawing is a bit more keen to precision. Old deprecated Qt4 build support is no more. Normalized wavetable oscillator phasors. Added missing include <unistd.h> to shut up some stricter compilers from build failures. The Vee One Suite are free, open-source Linux Audio software, distributed under the terms of the GNU General Public License (GPL) version 2 or later. And here they go again! synthv1 - an old-school polyphonic synthesizer. synthv1 0.9.4 (late-autumn'18) released! synthv1 is an old-school all-digital 4-oscillator subtractive polyphonic synthesizer with stereo fx. -

AV Linux MX Edition (AVL-MXE) User Manual

AV Linux MX Edition (AVL-MXE) User Manual. Prepared by: Glen MacArthur. DISCLAIMER (PLEASE READ): Debian/GNU Linux comes with no guarantees, so consequently neither does AVL-MXE. I accept no responsibility for any hardware/software malfunctions or data loss resulting from its use. It is important to note that the AVL-MXE ISO may contain software that is non-free and may be distributed under special licensing arrangements with the original developers; re-distributing the AVL-MXE ISO with the non-free content included is a violation of these licenses. AVL-MXE may potentially contain Multimedia Codecs that may be under patent in certain countries; it is the user's responsibility to know the law as it applies to their own respective country before downloading or installing AVL-MXE. Bookmarks: ➔ About This Manual ➔ Getting Help ➔ A New Chapter for AV Linux! ➔ AVL-MXE Features at a Glance ➔ Included Trusted Debian Repositories ➔ External/Independent Software in AVL-MXE ➔ Specific AVL-MXE Tools and Packages ➔ Known Issues in AVL-MXE ➔ Downloading the AVL-MXE ISO File ➔ Running AVL-MXE as a ‘LiveISO’ ➔ The Network Assistant for WiFi ➔ Installing AVL-MXE ➔ Installation Suggestions ➔ The AVL-MXE Assistant ➔ The Kernel Conundrum ➔ XFCE4 with Openbox ➔ Slim Login Manager ➔ Getting Around in XFCE4 ➔ Thunar File Manager ➔ QT5 Configuration Tool ➔ MX-Snapshot in AVL-MXE ➔ Software Installation Notes ➔ Audio and MIDI in AVL-MXE ➔ Initial Setup of Ardour and Mixbus32C ➔ Running Windows Audio Software ➔ Saving and Restoring JACK Connections ➔ Commercial Software Demos in AVL-MXE ➔ Thanks and Acknowledgements. About This Manual: This is a new User Manual for a new project; it is currently a Work-In-Progress and will be for some time, I’m sure. -

Studioware: Applications List

2021/07/27 06:26 (UTC). Studioware: Applications List. SlackDocs - https://docs.slackware.com/ Last update: 2019/04/24 17:34 (UTC), studioware:applications_list, https://docs.slackware.com/studioware:applications_list. This is the full list of applications and supporting libraries/plugins etc. plus their location in git and on our package server: audio/amps/guitarix2, audio/codecs/lame, audio/desktop/evince, audio/development/guile1.8, audio/development/libsigc++, audio/development/libsigc++-legacy12, audio/development/ntk, audio/development/phat, audio/development/pysetuptools, audio/development/scons, audio/development/scrollkeeper, audio/development/vamp-plugin-sdk, audio/drums/hydrogen, audio/editors/audacity, audio/editors/denemo, audio/editors/mscore, audio/editors/rosegarden, audio/effects/jack-rack, audio/effects/rakarrack, audio/graphics/fontforge, audio/graphics/frescobaldi, audio/graphics/goocanvas, audio/graphics/lilypond, audio/graphics/mftrace, audio/graphics/potrace, audio/graphics/pygoocanvas, audio/graphics/t1utils, audio/libraries/atkmm, audio/libraries/aubio, audio/libraries/cairomm, audio/libraries/clalsadrv, audio/libraries/clthreads, audio/libraries/clxclient, audio/libraries/dssi, audio/libraries/fltk, audio/libraries/glibmm, audio/libraries/gnonlin, audio/libraries/gst-python, audio/libraries/gtkmm, audio/libraries/gtksourceview, audio/libraries/imlib2, audio/libraries/ladspa_sdk, audio/libraries/lash, audio/libraries/libavc1394, audio/libraries/libdca, audio/libraries/libgnomecanvas, audio/libraries/libgnomecanvasmm, audio/libraries/libiec61883 -

Teaching Sound Synthesis in C/C++ on the Raspberry Pi

Teaching Sound Synthesis in C/C++ on the Raspberry Pi. Henrik von Coler and David Runge, Audio Communication Group, TU Berlin, [email protected] [email protected]. Abstract: For a sound synthesis programming class in C/C++, a Raspberry Pi 3 was used as runtime and development system. The embedded system was equipped with an Arch Linux ARM, a collection of libraries for sound processing and interfacing, as well as with basic examples. All material used in and created for the class is freely available in public git repositories. After a unit of theory on sound synthesis and Linux system basics, students worked on projects in groups. This paper is a progress report, pointing out benefits and drawbacks after the first run of the seminar. The concept delivered a system with acceptable capabilities and latencies at a low price. Usability and robustness, however, need to be improved in future attempts. Keywords: Jack, Raspberry Pi, C/C++ Programming, Education, Sound Synthesis, MIDI, OSC, Arch Linux. [...] added, they are not considered for exploring applied sound synthesis algorithms in this class. In a more application-based context, graphical programming environments like Pure Data (Pd), MAX MSP or others would be the first choice. They allow rapid progress and intermediate results. However, they are not the best platform to enhance the knowledge of sound synthesis algorithms on a sample-wise level by nature. C/C++ delivers a reasonable compromise between low level access and the comfort of using available libraries for easy interfacing with hardware. For using C/C++ in the development of sound synthesis software, a software development kit (SDK) or application programming interface (API) is needed in order to offer access to the audio hardware. -

Ardour 3 Download Linux

Ardour 3 download linux on Linux, OS X and Windows. Get Ardour Now. Ardour's core user group: people who want to record, edit, mix and master audio and MIDI projects. Just download and run Ardour on your Linux, OS X or Windows computer. But if you're hoping to modify Ardour or get involved in our development process... Before You Start (Linux Edition). Please read EVERYTHING on this page before proceeding to use Ardour. How To Install. If you downloaded source code, these... You can use it to record, edit and mix multi-track audio. You can produce your own CDs, mix video soundtracks, or just experiment with new ideas about music. Download ardour linux packages for ALTLinux, Arch Linux, CentOS, Debian, Fedora, Mageia, OpenMandriva, openSUSE, PCLinuxOS, ROSA, Slackware. Free Download Ardour for Linux - A professional-grade multi-track and multi-channel audio recorder and DAW for Linux and Mac. From Paul Davis: Ardour for Linux features unlimited audio tracks and buses, non-destructive, non-linear editing with unlimited undo, anything-to-anywhere... Anyway, is there a way that I could download Ardour (any of the versions)... But in general less than 3% of you do pay. Linux User: Ardour, free digital audio workstation application, has reached the release for almost one month. The new release brings many technical... The big news is, as expected, Ardour 3 will add MIDI recording, ... If you use Linux, you can download the full version from your distro's... In this video, we cover how to install Ardour on Ubuntu Linux. -

DAW Plugins for Web Browsers

DAW Plugins for Web Browsers. Jari Kleimola, Dept. of Media Technology, School of Science, Aalto University, Otaniementie 17, Espoo, Finland, [email protected]. ABSTRACT: A large collection of Digital Audio Workstation (DAW) plugins is available on the Internet in open source form. This paper explores their reuse in browser environments, focusing on hosting options that do not require manual installation. Two options based on Emscripten and PNaCl are introduced, implemented, evaluated, and released as open source. We found that ported DAW effect and sound synthesizer plugins complement and integrate with the Web Audio API, and that the existing preset patch collections make the plugins readily usable in online contexts. The latency measures are higher than in native plugin implementations, but expected to reduce with the emerging AudioWorker node. Categories and Subject Descriptors: Information systems~Browsers, Applied computing~Sound and music computing, Software and its engineering~Real-time systems. [...] ScriptProcessor node (or its future AudioWorker reincarnation) for custom DSP development in JavaScript. Unfortunately, scripting support leads to inevitable performance penalties, which can be mitigated by using Mozilla’s high performance asm.js [6] and Emscripten [12] virtual machine as an optimized runtime platform on top of JavaScript. Google’s Portable Native Client (PNaCl) technology [4] provides another solution for accelerated custom DSP development. Instead of compiling C/C++ source code into a subset of JavaScript as in Emscripten, PNaCl employs bitcode modules that are embedded into the webpage, and translated into native executable form ahead of runtime. Emscripten/asm.js and PNaCl technologies work directly from the open web and do not require manual installation. Since Emscripten and PNaCl are both based on C/C++ source code compilation, reuse of existing codebases in web platforms comes as an additional benefit. -

Ambisonics Plug-In Suite for Production and Performance Usage

Ambisonics plug-in suite for production and performance usage. Matthias KRONLACHNER, Institute of Electronic Music and Acoustics, Graz (student), M.K. Čiurlionio g. 5-15, LT-03104 Vilnius, [email protected]. Abstract: Ambisonics is a technique for the spatialization of sound sources within a circular or spherical loudspeaker arrangement. This paper presents a suite of Ambisonics processors, compatible with most standard DAW plug-in formats on Linux, Mac OS X and Windows. Some considerations about usability did result in features of the user interface and automation possibilities, not available in other surround panning plug-ins. The encoder plug-in may be connected to a central program for visualisation and remote control purposes, displaying the current position and audio level of every single track in the DAW. To enable monitoring of Ambisonics content without an extensive loudspeaker setup, binaural decoders for headphone playback have been implemented. [...] only) plug-in suite offers 3D encoders for 1st and 2nd order as well as 2D encoders for 5th order. For the plug-ins created in this work, the C++ cross-platform programming library JUCE [Storer, 2012] has been used to develop audio plug-ins compatible to most DAWs on Linux, Mac OS X and Windows. JUCE is being developed and maintained by Julian Storer, who used it as the base of the DAW Tracktion. JUCE is released under the GNU Public Licence. A commercial license may be acquired for closed source projects. It is possible to build JUCE audio processors as LADSPA, VST, AU, RTAS and AAX plug-ins or as Jack standalone