Who Is the Master?

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Check Is in the Mail June 2007

The Check Is in the Mail June 2007 NOTICE: I will be out of the office from June 16 through June 25 to teach at Castle Chess Camp in Atlanta, Georgia. During that GM Cesar Augusto Blanco-Gramajo time I will be unable to answer any of your email, US mail, telephone calls, or GAME OF THE MONTH any other form of communication. Cesar’s provisional USCF rating is 2463. NOTICE #2 As you watch this game unfold, you can The email address for USCF almost see Blanco’s rating go upwards. correspondence chess matters has changed to [email protected] RUY LOPEZ (C67) White: Cesar Blanco (2463) ICCF GRANDMASTER in 2006 Black: Benjamin Coraretti (0000) ELECTRONIC KNIGHTS 2006 Electronic Knights Cesar Augusto Blanco-Gramajo, born 1.e4 e5 2.Nf3 Nc6 3.Bb5 Nf6 4.0–0 January 14, 1959, is a Guatemalan ICCF Nxe4 5.d4 Nd6 6.Bxc6 dxc6 7.dxe5 Grandmaster now living in the US and Ne4 participating in the 2006 Electronic An unusual sideline that seems to be Knights. Cesar has had an active career gaining in popularity lately. in ICCF (playing over 800 games there) and sports a 2562 ICCF rating along 8.Qe2 with the GM title which was awarded to him at the ICCF Congress in Ostrava in White chooses to play the middlegame 2003. He took part in the great Rest of as opposed to the endgame after 8. the World vs. Russia match, holding Qxd8+. With an unstable Black Knight down Eleventh Board and making two and quick occupancy of the d-file, draws against his Russian opponent. -

World Stars Sharjah Online International Chess Championship 2020

World Stars Sharjah Online International Chess Championship 2020 World Stars 2020 ● Tournament Book ® Efstratios Grivas 2020 1 Welcome Letter Sharjah Cultural & Chess Club President Sheikh Saud bin Abdulaziz Al Mualla Dear Participants of the World Stars Sharjah Online International Chess Championship 2020, On behalf of the Board of Directors of the Sharjah Cultural & Chess Club and the Organising Committee, I am delighted to welcome all our distinguished participants of the World Stars Sharjah Online International Chess Championship 2020! Unfortunately, due to the recent negative and unpleasant reality of the Corona-Virus, we had to cancel our annual live events in Sharjah, United Arab Emirates. But we still decided to organise some other events online, like the World Stars Sharjah Online International Chess Championship 2020, in cooperation with the prestigious chess platform Internet Chess Club. The Sharjah Cultural & Chess Club was founded on June 1981 with the object of spreading and development of chess as mental and cultural sport across the Sharjah Emirate and in the United Arab Emirates territory in general. As on 2020 we are celebrating the 39th anniversary of our Club I can promise some extra-ordinary events in close cooperation with FIDE, the Asian Chess Federation and the Arab Chess Federation for the coming year 2021, which will mark our 40th anniversary! For the time being we welcome you in our online event and promise that we will do our best to ensure that the World Stars Sharjah Online International Chess Championship -

Bulletin Round 6 -08.08.14

Bulletin Round 6 -08.08.14 That Carlsen black magic Blitz and “Media chess attention playing is a tool to seals get people to chess” Photos: Daniel Skog, COT 2014 (Carlsen and Seals) / David Martinez, chess24 (Gelfand) Chess Olympiad Tromsø 2014 – Bulletin Round 6– 08.08.14 Fabiano Caruana and Magnus Carlsen before the start of round 6 Photo: David Llada / COT2014 That Carlsen black magic Norway 1 entertained the home fans with a clean 3-1 over Italy, and with Magnus Carlsen performing some of his patented minimalist magic to defeat a major rival. GM Kjetil Lie put the Norwegians ahead with the kind of robust aggression typical of his best form on board four, and the teams traded wins on boards two and three. All eyes were fixed on the Caruana-Carlsen clash, where Magnus presumably pulled off an opening surprise by adopting the offbeat variation that he himself had faced as White against Nikola Djukic of Montenegro in round three. By GM Jonathan Tisdall Caruana appeared to gain a small but comfortable Caruana is number 3 in the world and someone advantage in a queenless middlegame, but as I've lost against a few times, so it feels incredibly Carlsen has shown so many times before, the good to beat him. quieter the position, the deadlier he is. In typically hypnotic fashion, the position steadily swung On top board Azerbaijan continues to set the Carlsen's way, and suddenly all of White's pawns pace, clinching another match victory thanks to were falling like overripe fruit. Carlsen's pleasure two wins with the white pieces, Mamedyarov with today's work was obvious, as he stopped to beating Jobava in a bare-knuckle brawl, and with high-five colleague Jon Ludvig Hammer on his GM Rauf Mamedov nailing GM Gaioz Nigalidze way into the NRK TV studio. -

White Knight Review Chess E-Magazine January/February - 2012 Table of Contents

Chess E-Magazine Interactive E-Magazine Volume 3 • Issue 1 January/February 2012 Chess Gambits Chess Gambits The Immortal Game Canada and Chess Anderssen- Vs. -Kieseritzky Bill Wall’s Top 10 Chess software programs C Seraphim Press White Knight Review Chess E-Magazine January/February - 2012 Table of Contents Editorial~ “My Move” 4 contents Feature~ Chess and Canada 5 Article~ Bill Wall’s Top 10 Software Programs 9 INTERACTIVE CONTENT ________________ Feature~ The Incomparable Kasparov 10 • Click on title in Table of Contents Article~ Chess Variants 17 to move directly to Unorthodox Chess Variations page. • Click on “White Feature~ Proof Games 21 Knight Review” on the top of each page to return to ARTICLE~ The Immortal Game 22 Table of Contents. Anderssen Vrs. Kieseritzky • Click on red type to continue to next page ARTICLE~ News Around the World 24 • Click on ads to go to their websites BOOK REVIEW~ Kasparov on Kasparov Pt. 1 25 • Click on email to Pt.One, 1973-1985 open up email program Feature~ Chess Gambits 26 • Click up URLs to go to websites. ANNOTATED GAME~ Bareev Vs. Kasparov 30 COMMENTARY~ “Ask Bill” 31 White Knight Review January/February 2012 White Knight Review January/February 2012 Feature My Move Editorial - Jerry Wall [email protected] Well it has been over a year now since we started this publication. It is not easy putting together a 32 page magazine on chess White Knight every couple of months but it certainly has been rewarding (maybe not so Review much financially but then that really never was Chess E-Magazine the goal). -

I Make This Pledge to You Alone, the Castle Walls Protect Our Back That I Shall Serve Your Royal Throne

AMERA M. ANDERSEN Battlefield of Life “I make this pledge to you alone, The castle walls protect our back that I shall serve your royal throne. and Bishops plan for their attack; My silver sword, I gladly wield. a master plan that is concealed. Squares eight times eight the battlefield. Squares eight times eight the battlefield. With knights upon their mighty steed For chess is but a game of life the front line pawns have vowed to bleed and I your Queen, a loving wife and neither Queen shall ever yield. shall guard my liege and raise my shield Squares eight times eight the battlefield. Squares eight time eight the battlefield.” Apathy Checkmate I set my moves up strategically, enemy kings are taken easily Knights move four spaces, in place of bishops east of me Communicate with pawns on a telepathic frequency Smash knights with mics in militant mental fights, it seems to be An everlasting battle on the 64-block geometric metal battlefield The sword of my rook, will shatter your feeble battle shield I witness a bishop that’ll wield his mystic sword And slaughter every player who inhabits my chessboard Knight to Queen’s three, I slice through MCs Seize the rook’s towers and the bishop’s ministries VISWANATHAN ANAND “Confidence is very important—even pretending to be confident. If you make a mistake but do not let your opponent see what you are thinking, then he may overlook the mistake.” Public Enemy Rebel Without A Pause No matter what the name we’re all the same Pieces in one big chess game GERALD ABRAHAMS “One way of looking at chess development is to regard it as a fight for freedom. -

Bccf E-Mail Bulletin #205

BCCF E-MAIL BULLETIN #205 This issue will be the last of 2010; that being the case, I would like to take this opportunity to wish you all the best of the holiday season - see you in 2011! Your editor welcomes any and all submissions - news of upcoming events, tournament reports, and anything else that might be of interest to B.C. players. Thanks to all who contributed to this issue. To subscribe, send me an e-mail ([email protected] ) or sign up via the BCCF webpage (www.chess.bc.ca ); if you no longer wish to receive this Bulletin, just let me know. Stephen Wright HERE AND THERE December Active (December 19) In the absence of the usual suspects organizer Luc Poitras won the December Active with a perfect 4.0/4. Tied for second a point back were Joe Roback, Joe Soliven, Jeremy Hiu, and Alexey Lushchenko (returning to tournament chess after a number of years). Eighteen players participated. Crosstable Portland Winter Open (December 11-12) The Winter version of this quarterly Portland event was won outright by current Canadian U16 Girls' Champion Alexandra Botez, whose 4.0/5 score included a last-round victory over the top seed, NM Steven Breckenridge. Breckenridge tied for second with Bill Heywood and Brian Esler. Crosstable Canadian Chess Player of the Year World Under 10 Champion Jason Cao of Victoria has been named the 2010 Canadian Chess Player of the Year , in a vote "made by Canadian Chess journalists, together with one ballot resulting from a fan poll (starting in 2007)." Nathan Divinsky The latest (December 2010) issue of Chess Life contains a profile of Nathan Divinsky, the "chess godfather of the North," by IM Anthony Saidy. -

Life Cycle Patterns of Cognitive Performance Over the Long

Life cycle patterns of cognitive performance over the long run Anthony Strittmattera,1 , Uwe Sundeb,1,2, and Dainis Zegnersc,1 aCenter for Research in Economics and Statistics (CREST)/Ecole´ nationale de la statistique et de l’administration economique´ Paris (ENSAE), Institut Polytechnique Paris, 91764 Palaiseau Cedex, France; bEconomics Department, Ludwig-Maximilians-Universitat¨ Munchen,¨ 80539 Munchen,¨ Germany; and cRotterdam School of Management, Erasmus University, 3062 PA Rotterdam, The Netherlands Edited by Robert Moffit, John Hopkins University, Baltimore, MD, and accepted by Editorial Board Member Jose A. Scheinkman September 21, 2020 (received for review April 8, 2020) Little is known about how the age pattern in individual perfor- demanding tasks, however, and are limited in terms of compara- mance in cognitively demanding tasks changed over the past bility, technological work environment, labor market institutions, century. The main difficulty for measuring such life cycle per- and demand factors, which all exhibit variation over time and formance patterns and their dynamics over time is related to across skill groups (1, 19). Investigations that account for changes the construction of a reliable measure that is comparable across in skill demand have found evidence for a peak in performance individuals and over time and not affected by changes in technol- potential around ages of 35 to 44 y (20) but are limited to short ogy or other environmental factors. This study presents evidence observation periods that prevent an analysis of the dynamics for the dynamics of life cycle patterns of cognitive performance of the age–performance profile over time and across cohorts. over the past 125 y based on an analysis of data from profes- An additional problem is related to measuring productivity or sional chess tournaments. -

2000/4 Layout

Virginia Chess Newsletter 2000- #4 1 GEORGE WASHINGTON OPEN by Mike Atkins N A COLD WINTER'S NIGHT on Dec 14, 1799, OGeorge Washington passed away on the grounds of his estate in Mt Vernon. He had gone for a tour of his property on a rainy day, fell ill, and was slowly killed by his physicians. Today the Best Western Mt Vernon hotel, site of VCF tournaments since 1996, stands only a few miles away. One wonders how George would have reacted to his name being used for a chess tournament, the George Washington Open. Eighty-seven players competed, a new record for Mt Vernon events. Designed as a one year replacement for the Fredericksburg Open, the GWO was a resounding success in its initial and perhaps not last appearance. Sitting atop the field by a good 170 points were IM Larry Kaufman (2456) and FM Emory Tate (2443). Kudos to the validity of 1 the rating system, as the final round saw these two playing on board 1, the only 4 ⁄2s. Tate is famous for his tactics and EMORY TATE -LARRY KAUFMAN (13...gxf3!?) 14 Nh5 gxf3 15 Kaufman is super solid and FRENCH gxf3 Nf8 16 Rg1+ Ng6 17 Rg4 rarely loses except to brilliancies. 1 e4 e6 2 Nf3 d5 3 Nc3 Nf6 Bd7 18 Kf1 Nd8 19 Qd2 Bb5 Inevitably one recalls their 4 e5 Nfd7 5 Ne2 c5 6 d4 20 Re1 f5 21 exf6 Bb4 22 f7+ meeting in the last round at the Nc6 7 c3 Be7 8 Nf4 cxd4 9 Kxf7 23 Rf4+ Nxf4 24 Qxf4+ 1999 Virginia Open, there also cxd4 Qb6 10 Be2 g5 11 Ke7 25 Qf6+ Kd7 26 Qg7+ on on the top board. -

2016 Year in Review

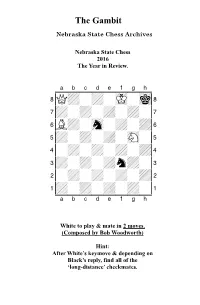

The Gambit Nebraska State Chess Archives Nebraska State Chess 2016 The Year in Review. XABCDEFGHY 8Q+-+-mK-mk( 7+-+-+-+-' 6L+-sn-+-+& 5+-+-+-sN-% 4-+-+-+-+$ 3+-+-+n+-# 2-+-+-+-+" 1+-+-+-+-! xabcdefghy White to play & mate in 2 moves. (Composed by Bob Woodworth) Hint: After White’s keymove & depending on Black’s reply, find all of the ‘long-distance’ checkmates. Gambit Editor- Kent Nelson The Gambit serves as the official publication of the Nebraska State Chess Association and is published by the Lincoln Chess Foundation. Send all games, articles, and editorial materials to: Kent Nelson 4014 “N” St Lincoln, NE 68510 [email protected] NSCA Officers President John Hartmann Treasurer Lucy Ruf Historical Archivist Bob Woodworth Secretary Gnanasekar Arputhaswamy Webmaster Kent Smotherman Regional VPs NSCA Committee Members Vice President-Lincoln- John Linscott Vice President-Omaha- Michael Gooch Vice President (Western) Letter from NSCA President John Hartmann January 2017 Hello friends! Our beloved game finds itself at something of a crossroads here in Nebraska. On the one hand, there is much to look forward to. We have a full calendar of scholastic events coming up this spring and a slew of promising juniors to steal our rating points. We have more and better adult players playing rated chess. If you’re reading this, we probably (finally) have a functional website. And after a precarious few weeks, the Spence Chess Club here in Omaha seems to have found a new home. And yet, there is also cause for concern. It’s not clear that we will be able to have tournaments at UNO in the future. -

The World Fischer Random Chess Championship Is Now Officially Recognized by FIDE

FOR IMMEDIATE RELEASE Oslo, April 20, 2019. The World Fischer Random Chess Championship is now officially recognized by FIDE This historic event will feature an online qualifying phase on Chess.com, beginning April 28, and is open to all players. The finals will be held in Norway this fall, with a prize fund of $375,000 USD. The International Chess Federation (FIDE) has granted the rights to host the inaugural FIDE World Fischer Random Chess Championship cycle to Dund AS, in partnership with Chess.com. And, for the first time in history, a chess world championship cycle will combine an online, open qualifier and worldwide participation with physical finals. “With FIDE’s support for Fischer Random Chess, we are happy to invite you to join the quest to become the first-ever FIDE World Fischer Random Chess Champion” said Arne Horvei, founding partner in Dund AS. “Anyone can participate online, and we are excited to see if there are any diamonds in the rough out there that could excel in this format of chess,” he said. "It is an unprecedented move that the International Chess Federation recognizes a new variety of chess, so this was a decision that required to be carefully thought out,” said FIDE president Arkady Dvorkovich, who recently visited Oslo to discuss this agreement. “But we believe that Fischer Random is a positive innovation: It injects new energies an enthusiasm into our game, but at the same time it doesn't mean a rupture with our classical chess and its tradition. It is probably for this reason that Fischer Random chess has won the favor of the chess community, including the top players and the world champion himself. -

YEARBOOK the Information in This Yearbook Is Substantially Correct and Current As of December 31, 2020

OUR HERITAGE 2020 US CHESS YEARBOOK The information in this yearbook is substantially correct and current as of December 31, 2020. For further information check the US Chess website www.uschess.org. To notify US Chess of corrections or updates, please e-mail [email protected]. U.S. CHAMPIONS 2002 Larry Christiansen • 2003 Alexander Shabalov • 2005 Hakaru WESTERN OPEN BECAME THE U.S. OPEN Nakamura • 2006 Alexander Onischuk • 2007 Alexander Shabalov • 1845-57 Charles Stanley • 1857-71 Paul Morphy • 1871-90 George H. 1939 Reuben Fine • 1940 Reuben Fine • 1941 Reuben Fine • 1942 2008 Yury Shulman • 2009 Hikaru Nakamura • 2010 Gata Kamsky • Mackenzie • 1890-91 Jackson Showalter • 1891-94 Samuel Lipchutz • Herman Steiner, Dan Yanofsky • 1943 I.A. Horowitz • 1944 Samuel 2011 Gata Kamsky • 2012 Hikaru Nakamura • 2013 Gata Kamsky • 2014 1894 Jackson Showalter • 1894-95 Albert Hodges • 1895-97 Jackson Reshevsky • 1945 Anthony Santasiere • 1946 Herman Steiner • 1947 Gata Kamsky • 2015 Hikaru Nakamura • 2016 Fabiano Caruana • 2017 Showalter • 1897-06 Harry Nelson Pillsbury • 1906-09 Jackson Isaac Kashdan • 1948 Weaver W. Adams • 1949 Albert Sandrin Jr. • 1950 Wesley So • 2018 Samuel Shankland • 2019 Hikaru Nakamura Showalter • 1909-36 Frank J. Marshall • 1936 Samuel Reshevsky • Arthur Bisguier • 1951 Larry Evans • 1952 Larry Evans • 1953 Donald 1938 Samuel Reshevsky • 1940 Samuel Reshevsky • 1942 Samuel 2020 Wesley So Byrne • 1954 Larry Evans, Arturo Pomar • 1955 Nicolas Rossolimo • Reshevsky • 1944 Arnold Denker • 1946 Samuel Reshevsky • 1948 ONLINE: COVID-19 • OCTOBER 2020 1956 Arthur Bisguier, James Sherwin • 1957 • Robert Fischer, Arthur Herman Steiner • 1951 Larry Evans • 1952 Larry Evans • 1954 Arthur Bisguier • 1958 E. -

An Elo-Based Approach to Model Team Players and Predict the Outcome of Games

AN ELO-BASED APPROACH TO MODEL TEAM PLAYERS AND PREDICT THE OUTCOME OF GAMES A Thesis by RICHARD EINSTEIN DOSS MARVELDOSS Submitted to the Office of Graduate and Professional Studies of Texas A&M University in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE Chair of Committee, Jean Francios Chamberland Committee Members, Gregory H. Huff Thomas R. Ioerger Head of Department, Scott Miller August 2018 Major Subject: Computer Engineering Copyright 2018 Richard Einstein Doss Marveldoss ABSTRACT Sports data analytics has become a popular research area in recent years, with the advent of dif- ferent ways to capture information about a game or a player. Different statistical metrics have been created to quantify the performance of a player/team. A popular application of sport data anaytics is to generate a rating system for all the team/players involved in a tournament. The resulting rating system can be used to predict the outcome of future games, assess player performances, or come up with a tournament brackets. A popular rating system is the Elo rating system. It started as a rating system for chess tour- naments. It’s known for its simple yet elegant way to assign a rating to a particular individual. Over the last decade, several variations of the original Elo rating system have come into existence, collectively know as Elo-based rating systems. This has been applied in a variety of sports like baseball, basketball, football, etc. In this thesis, an Elo-based approach is employed to model an individual basketball player strength based on the plus-minus score of the player.