Towards Full-Pipeline Handwritten OMR with Musical Symbol Detection by U-Nets

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Sibelius Artwork Guidelines Contents

Sibelius Artwork Guidelines Contents: Conditions of use; Important information; Product names and logos; Example copy; Endorsees; Reviews; Awards; House Style. Conditions of use. Who may use this material: Authorized Sibelius distributors and dealers are permitted to reproduce text and graphics on this CD in order to market Sibelius products or PhotoScore, but only if these guidelines are adhered to, and all artwork is used unmodified and cleared by Sibelius Software before production of final proofs. Acknowledge trademarks: Please -

Scanscore 2 Manual

ScanScore 2 Manual Copyright © 2020 by Lugert Verlag. All Rights Reserved. Table of Contents: Welcome to ScanScore 2; Overview; Quickstart; What ScanScore is not; Scanning and importing scores; Importing files; Using a scanner; Using a smartphone; Open ScanScore project; Multipage import; Working with ScanScore; The menu bar; The File Menu; The -

Integrating Paper and Digital Music Information Systems Karen Lin and Tim Bell University of Canterbury, Christchurch, New Zealand

Integrating Paper and Digital Music Information Systems Karen Lin and Tim Bell University of Canterbury, Christchurch, New Zealand Abstract Active musicians generally rely on extensive personal paper-based music information retrieval systems containing scores, parts, compositions, and arrangements of published and hand-written music. Many have a bias against using computers to store, edit and retrieve music, and prefer to work in the paper domain rather than using digital documents, despite the flexibility and powerful retrieval opportunities available. In this paper we propose a model of operation that blurs the boundaries between the paper and digital domains, offering musicians the best of both worlds. A survey of musicians identifies the problems and potential of working with digital tools, and we propose a system using colour printing and scanning technology that simplifies the process of moving music documents between the two domains. Keywords : user interfaces, user needs, optical music recognition 1. Introduction Traditionally musicians have stored and retrieved music scores using paper-based systems. Many musicians have built up personal libraries of music books, compositions, arrangements and sheet music. The acquisition or creation of documents is straightforward, but the retrieval or modification of scores is hindered by the inflexibility of the paper medium. A digital music library would have a number of benefits, including convenient retrieval (instead of searching through piles of music), ease of processing (such as part extraction), and communication (sending electronic copies to other performers). However working with digital documents also poses significant barriers for users more familiar with traditional paper documents. 
In this paper we explore the relationship between the paper and digital domains, and the possibility of allowing easy conversion between the two to allow documents to exist in both domains and be processed in whichever domain is the most convenient. -

Musical Notation Codes Index

Music Notation - www.music-notation.info - Copyright 1997-2019, Gerd Castan Musical notation codes Index xml ascii binary 1. MidiXML 1. PDF used as music notation 1. General information format 2. Apple GarageBand Format 2. MIDI (.band) 2. DARMS 3. QuickScore Elite file format 3. SMDL 3. GUIDO Music Notation (.qsd) Language 4. MPEG4-SMR 4. WAV audio file format (.wav) 4. abc 5. MNML - The Musical Notation 5. MP3 audio file format (.mp3) Markup Language 5. MusiXTeX, MusicTeX, MuTeX... 6. WMA audio file format (.wma) 6. MusicML 6. **kern (.krn) 7. MusicWrite file format (.mwk) 7. MHTML 7. **Hildegard 8. Overture file format (.ove) 8. MML: Music Markup Language 8. **koto 9. ScoreWriter file format (.scw) 9. Theta: Tonal Harmony 9. **bol Exploration and Tutorial Assistent 10. Copyist file format (.CP6 and 10. Musedata format (.md) .CP4) 10. ScoreML 11. LilyPond 11. Rich MIDI Tablature format - 11. JScoreML RMTF 12. Philip's Music Writer (PMW) 12. eXtensible Score Language 12. Creative Music File Format (XScore) 13. TexTab 13. Sibelius Plugin Interface 13. MusiXML: My own format 14. Mup music publication program 14. Finale Plugin Interface 14. MusicXML (.mxl, .xml) 15. NoteEdit 15. Internal format of Finale (.mus) 15. MusiqueXML 16. Liszt: The SharpEye OMR 16. XMF - eXtensible Music 16. GUIDO XML engine output file format Format 17. WEDELMUSIC 17. Drum Tab 17. NIFF 18. ChordML 18. Enigma Transportable Format 18. Internal format of Capella (ETF) (.cap) 19. ChordQL 19. CMN: Common Music 19. SASL: Simple Audio Score 20. NeumesXML Notation Language 21. MEI 20. OMNL: Open Music Notation 20. -

Power Tab Editor ❍ Appendix B - FAQ - a Collection of Frequently Asked Questions About the Power Tab Editor

Help Topics
● Introduction - Program overview and requirements
● What's New? - Program version history; what was fixed and/or added in each version of the program
● Quick Steps To Creating A New Score - A simple guide to creating a Power Tab Score
● Getting Started
❍ Toolbars - Information on showing/hiding toolbars
❍ Creating A New Power Tab File - Information on how to create a new file
❍ The Score Layout - Describes how each Power Tab Score is laid out
❍ Navigating In Power Tab - Lists the different ways that you can traverse through a Power Tab score
❍ The Status Bar - Description of what each pane signifies in the status bar
● Sections and Staves
❍ What Is A Section? - Information on the core component used to construct Power Tab songs
❍ Adding A New Section - Information on how to add a new section to the score
❍ Attaching A Staff To A Section - Describes how to attach a staff to a section so multiple guitar parts can be transcribed at the same time
❍ Changing The Number Of Tablature Lines On A Staff - Describes how to change the number of tablature staff lines on an existing staff
❍ Inserting A New Section - Describes how to insert a section within the score (as opposed to adding a section to the end of a score)
❍ Removing A Section Or Staff - Describes how to remove a section or staff from the score
❍ Position Width and Line Height - Describes how to change the width between positions and the distance between lines on the tablature staves
❍ Fills - Not implemented yet
● Song Properties
❍ File Information - How to edit the score -

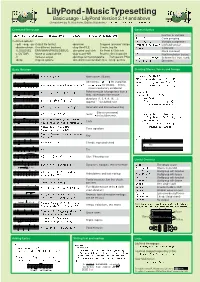

Lilypond Cheatsheet

LilyPond-MusicTypesetting Basic usage - LilyPond Version 2.14 and above Cheatsheet by R. Kainhofer, Edition Kainhofer, http://www.edition-kainhofer.com/ Command-line usage General Syntax lilypond [-l LOGLEVEL] [-dSCMOPTIONS] [-o OUTPUT] [-V] FILE.ly [FILE2.ly] \xxxx function or variable { ... } Code grouping Common options: var = {...} Variable assignment --pdf, --png, --ps Output file format -dpreview Cropped “preview” image \version "2.14.0" LilyPond version -dbackend=eps Use different backend -dlog-file=FILE Create .log file % dots Comment -l LOGLEVEL ERR/WARN/PROG/DEBUG -dno-point-and-click No Point & Click info %{ ... %} Block comment -o OUTDIR Name of output dir/file -djob-count=NR Process files in parallel c\... Postfix-notation (notes) -V Verbose output -dpixmap-format=pngalpha Transparent PNG #'(..), ##t, #'sym Scheme list, true, symb. -dhelp Help on options -dno-delete-intermediate-files Keep .ps files x-.., x^.., x_.. Directions Basic Notation Creating Staves, Voices and Groups \version "2.15.0" c d e f g a b Note names (Dutch) SMusic = \relative c'' { c1\p } Alterations: -is/-es for sharp/flat, SLyrics = \lyricmode { Oh! } cis bes as cisis beses b b! b? -isis/-eses for double, ! forces, AMusic = \relative c' { e1 } ? shows cautionary accidental \relative c' {c f d' c,} Relative mode (change less than a \score { fifth), raise/lower one octave \new ChoirStaff << \new Staff { g1 g2 g4 g8 g16 g4. g4.. durations (1, 2, 4, 8, 16, ...); append “.” for dotted note \new Voice = "Sop" { \dynamicUp \SMusic -

Using Smartscore 2.Pdf

Using SmartScore Scanning Music Be sure you have the necessary scanner drivers installed before attempting to scan from inside SmartScore. Most scanners come with software that enables programs such as SmartScore to control them. TWAIN drivers and/or Mac plug-ins are normally included in the software packaged with most scanners. It may be necessary for certain Mac users to perform a “Custom > TWAIN” installation from the CD accompanying your scanner, depending on the manufacturer. NOTE: Scanner drivers are often updated by scanner manufacturers and posted on their web sites. If problems occur during scanning, it is always a good idea to check the Internet for updated scanner drivers before calling Musitek Technical Support. Mac Users: Skip the next section. Turn to “Scanning in Macintosh” on page 6. Scanning in Windows: Using the SmartScore Scanning Interface a. Push the Scan button in the Navigator or in the Main Toolbar. Figure 1: Scan Button b. If there is no response, go to File > Scan Music > Select Scanner and choose the appropriate TWAIN driver. If you do not see anything listed in the Select Scanner window, your drivers are probably not installed. Install or replace the TWAIN driver from the scanner CD or from the “Driver Download” area of the scanner manufacturer’s website. c. If the scanner still does not operate properly, go to “Choosing an alternative scanning interface” on page 5. Your scanner should immediately begin to operate with Scan or Acquire. A low-resolution pre-scan should soon appear in the Preview window. Figure 2: SmartScore scanning interface d. -

Guitar Pro 7 User Guide 1/ Introduction 2/ Getting Started

Guitar Pro 7 User Guide 1/ Introduction 2/ Getting started 2/1/ Installation 2/2/ Overview 2/3/ New features 2/4/ Understanding notation 2/5/ Technical support 3/ Use Guitar Pro 7 3/A/1/ Writing a score 3/A/2/ Tracks in Guitar Pro 7 3/A/3/ Bars in Guitar Pro 7 3/A/4/ Adding notes to your score 3/A/5/ Insert events 3/A/6/ Adding symbols 3/A/7/ Add lyrics 3/A/8/ Adding sections 3/A/9/ Cut, copy and paste options 3/A/10/ Using wizards 3/A/11/ Guitar Pro 7 Stylesheet 3/A/12/ Drums and percussions 3/B/ Work with a score 3/B/1/ Finding Guitar Pro files 3/B/2/ Navigating around the score 3/B/3/ Display settings 3/B/4/ Audio settings 3/B/5/ Playback options 3/B/6/ Printing 3/B/7/ Files and tabs import 4/ Tools 4/1/ Chord diagrams 4/2/ Scales 4/3/ Virtual instruments 4/4/ Polyphonic tuner 4/5/ Metronome 4/6/ MIDI capture 4/7/ Line In 4/8/ File protection 5/ mySongBook 1/ Introduction Welcome! You just purchased Guitar Pro 7, congratulations and welcome to the Guitar Pro family! Guitar Pro is back with its best version yet. Faster, stronger and modernised, Guitar Pro 7 offers you many new features. Whether you are a longtime Guitar Pro user or a new user, you will find all the necessary information in this user guide to make the best out of Guitar Pro 7. 2/ Getting started 2/1/ Installation 2/1/1/ MINIMUM SYSTEM REQUIREMENTS macOS X 10.10 / Windows 7 (32 or 64-Bit); Dual-core CPU with 4 GB RAM; 2 GB of free HD space; 960x720 display; OS-compatible audio hardware; DVD-ROM drive or internet connection required to download the software. 2/1/2/ Installation on Windows Installation from the Guitar Pro website: You can easily download Guitar Pro 7 from our website via this link: https://www.guitar-pro.com/en/index.php?pg=download Once the trial version is downloaded, upgrade it to the full version by entering your licence number into your activation window. -

Now Available

NOW AVAILABLE Music Composition and Performance Software Dozens of new features! ■ Video window (64-bit Windows® and Mac®) ■ New Film Score staff and tools ■ Bounce all stems / four more buses / up-sampling audio ■ Support for new VST libraries ■ Custom Rules Editor UI for creating custom VST sound-library rules ■ Studio One® Native Effects™ included: Limiter, Compressor, Pro EQ ■ Many notation improvements: new enharmonic spelling tool, cross-staff notation, layout and printing improvements, and new shortcut sets ■ Enhanced chord library: more library chords, user-created chords, and recent chord recall feature ■ Six languages: U.S. and UK English, French, German, Japanese, Spanish ■ Mac Retina display and Windows 8 touchscreen optimization Standard Features: ■ Easily compose, play back, and edit music ■ Best playback of any notation product, with orchestral samples recorded by the London Symphony Orchestra and more ■ Perform scores using Notion as a live instrument and save your performance. Notion™ 5 is the latest version of PreSonus’ easy-to-use, great-sounding notation software. Notion makes it simple to write your musical ideas quickly, hear your scores played back with superb orchestral and other samples, compose to picture, and edit on Mac®, Windows®, and iPad®. Now Notion allows you to write to picture with a brand-new video window. Notion 5 is available as a boxed copy or electronic download. Entering music: ■ Use keyboard and mouse from the Entry palette to get going ■ Interactive entry tools: Keyboard, Fretboard, Drum Pad, Chord Library ■ Write tab or standard notation ■ Enter notes in step time with a MIDI instrument ■ Record and notate in real time with a MIDI instrument Sharing: ■ Create a score on a Mac or Windows computer and continue to edit on iPad ■ Import/export files to and from other -

Using Smartscore - Tips from the Techs

Using SmartScore - Tips from the Techs Scanning 1) Learn the proper resolution to scan your scores. Recognition accuracy is directly related to the quality of an image file. Producing the best possible image file for recognition is essential for optimizing your time while using SmartScore. Before you scan your music take a look at the score and ask yourself a few quick questions: How large is the printing of the score? How fine are the lines? What is the condition of your original score? Clean, new scores printed on letter or legal sized pages will recognize very well at 300-350 dpi. If the printing is small and/or very fine, you should increase the resolution. Try starting at 400 dpi and decrease the contrast for the final scan by -10%. Darkening the final scan will thicken these lines, making them easier to recognize. Be careful, higher resolutions do not always equate to higher accuracy. There is a point of diminishing returns when the optimum resolution is exceeded. At 400+ dpi, recognition accuracy of scores with "normal" font sizes may suffer decreased accuracy. If your score is older or a Xerox copy, there may be some fading or breaking-up of the print. Try the above tips to fatten lines and close possible gaps in the final image file. You may even need to open the image file in the Image Editor to redraw some broken portions of the music (barlines, brackets, stems, etc.). 2) Good scanners make good image files. The next greatest variable is the quality of the scanner used. -

Guitar Pro 6 User’S Guide

Guitar Pro 6 User’s Guide Copyright © 2010 Arobas Music – All rights reserved www.guitar-pro.com Table of Contents: Getting started; Installation; New Features; Technical Support; Overview; Introduction; Understanding Notation; The Main Screen; Using Guitar Pro; Writing a Score -

WIKI::SCORE a Collaborative Environment for Music Transcription and Publishing

WIKI::SCORE A collaborative environment for music transcription and publishing J.J. Almeida N.R. Carvalho J.N. Oliveira Ref. [ACO11] (2011): J.J. Almeida, N.R. Carvalho, J.N. Oliveira. Wiki::score a collaborative environment for music transcription and publishing. Information Services and Use, 31(3–4):177–187, 2011. DOI 10.3233/ISU-2012-0647. IOS Press. José João Almeida a,∗, Nuno Ramos Carvalho a and José Nuno Oliveira a,b a University of Minho, Braga, Portugal b HASLab/INESC TEC E-mails: {jj, narcarvalho, jno}@di.uminho.pt Abstract. Music sources are most commonly shared in music scores scanned or printed on paper sheets. These artifacts are rich in information, but since they are images it is hard to re-use and share their content in today’s digital world. There are modern languages that can be used to transcribe music sheets, but this is still a time-consuming task because of the complexity involved in the process and the typically huge size of the original documents. WIKI::SCORE is a collaborative environment where several people work together to transcribe music sheets to a shared medium, using the ABC notation. This eases the process of transcribing huge documents, and stores the document in a well-known notation that can be used later on to publish the whole content in several formats, such as a PDF document, images or audio files. Keywords: Music transcription, ABC, wiki, collaborative work, music publishing 1.