Contour Based Reconstruction of Underwater Structures Using Sonar, Visual, Inertial, and Depth Sensor

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Winds, Waves, and Bubbles at the Air-Sea Boundary

JEFFREY L. HANSON. WINDS, WAVES, AND BUBBLES AT THE AIR-SEA BOUNDARY. Subsurface bubbles are now recognized as a dominant acoustic scattering and reverberation mechanism in the upper ocean. A better understanding of the complex mechanisms responsible for subsurface bubbles should lead to an improved prediction capability for underwater sonar. The Applied Physics Laboratory recently conducted a unique experiment to investigate which air-sea descriptors are most important for subsurface bubbles and acoustic scatter. Initial analyses indicate that wind-history variables provide better predictors of subsurface bubble-cloud development than do wave-breaking estimates. The results suggest that a close coupling exists between the wind field and the upper-ocean mixing processes, such as Langmuir circulation, that distribute and organize the bubble populations. INTRODUCTION. A multiyear series of experiments, conducted under the auspices of the Navy-sponsored acoustic program, Critical Sea Test (CST),¹ has been under way since 1986 with the charter to investigate environmental, scientific, and technical issues related to the performance of low-frequency (100-1000 Hz) active acoustics. One key aspect of CST is the investigation of acoustic backscatter and reverberation from upper-ocean features such as surface waves and bubble clouds. [...] that, in the Gulf of Alaska wintertime environment, the amount of wave-breaking activity may not be an ideal indicator of deep bubble-cloud formation. Instead, the penetration of bubbles is more closely tied to short-term wind fluctuations, suggesting a close coupling between the wind field and upper-ocean mixing processes that distribute and organize the bubble populations within the mixed layer.

Wetsuits Raises the Bar Once Again, in Both Design and Technological Advances

WETSUITS. Orca evokes the instinct and prowess of the powerful ruler of the seas. Like the Orca whale, our designs have always been organic, streamlined and in tune with nature. Our latest 2016 collection of wetsuits raises the bar once again, in both design and technological advances. With never before seen 0.88Free technology used on the Alpha, and the ultimate swim assistance provided by the Predator, to a more gender specific 3.8 to suit male and female needs, down to the latest evolution of the ever popular S-series entry-level wetsuit, Orca once again has something to suit every triathlete’s needs when it comes to the swim. TRIATHLON WETSUITS RANGE. Orca know triathletes and we’ve been helping them to conquer the seven seas now for more than twenty years. Our latest collection of wetsuits reflects this legacy of knowledge and offers something for every level and style of swimmer. Whether you’re a good swimmer looking for ultimate flexibility, a struggling swimmer who needs all the buoyancy they can get, or a weekend warrior just starting out, Orca has you covered. OPENWATER. Swimming in the open water is something that has always drawn those types of swimmers that find that the largest pool is too small for them. However, open water swimming is not without its own challenges, and Orca’s Openwater collection is designed to offer visibility, and so security, to those who want to take on this sport. SWIMRUN. The SwimRun endurance race is a growing sport and the wetsuit requirements for these competitors are unique.

Supplementary Report to the Final Report of the Coral Reef Expert Group: S6

The Great Barrier Reef Marine Park Authority acknowledges the continuing sea country management and custodianship of the Great Barrier Reef by Aboriginal and Torres Strait Islander Traditional Owners whose rich cultures, heritage values, enduring connections and shared efforts protect the Reef for future generations. © Commonwealth of Australia (Australian Institute of Marine Science) 2020. Published by the Great Barrier Reef Marine Park Authority. ISBN 9780648721406. This document is licensed for use under a Creative Commons Attribution-NonCommercial 4.0 International licence with the exception of the Coat of Arms of the Commonwealth of Australia, the logos of the Great Barrier Reef Marine Park Authority and the Queensland Government, any other material protected by a trademark, content supplied by third parties and any photographs. For licence conditions see: https://creativecommons.org/licenses/by-nc/4.0/ A catalogue record for this publication is available from the National Library of Australia. This publication should be cited as: Gonzalez-Rivero, M., Roelfsema, C., Lopez-Marcano, S., Castro-Sanguino, C., Bridge, T., and Babcock, R. 2020, Supplementary Report to the Final Report of the Coral Reef Expert Group: S6. Novel technologies in coral reef monitoring, Great Barrier Reef Marine Park Authority, Townsville. Front cover image: Underwater reefscape view of Lodestone Reef, Townsville region © Commonwealth of Australia (GBRMPA), photographer: Joanna Hurford. DISCLAIMER: While reasonable effort has been made to ensure that the contents of this publication are factually correct, the Commonwealth of Australia, represented by the Great Barrier Reef Marine Park Authority, does not accept responsibility for the accuracy or completeness of the contents, and shall not be liable for any loss or damage that may be occasioned directly or indirectly through the use of, or reliance on, the contents of this publication.

Diving Center Rates

Individual Rates • Package Rates • Reservation and Transportation Information. "LADY KEY DIVER" 40' Custom Dive Boat awaits your Charter at Special Low Rates. COAST GUARD INSPECTED & APPROVED • QUALIFIED CREW • LEAD-SHOT RENTAL WEIGHTS ON BOARD • FRESHWATER SHOWER • SEATS WITH TANKS AT BACK AND GEAR STORAGE UNDER • STEREO SOUND SYSTEM • EASY ENTRY AND EXIT • FAST • ROOMY • COMFORTABLE. Bob Brayman's. Where there are more things to do underwater than in any other place in the United States. OVER 40 SPECIES OF CORAL • WRECK DIVING • SHALLOW OR DEEP DIVING • SHELTERED AREAS • YEAR-ROUND SUMMER TEMPERATURES • SPEARFISH • LOBSTER • NIGHT DIVING • PLENTIFUL MARINE LIFE • NITROX • TREASURE HUNTING • REBREATHERS • TROPICAL FISH COLLECTING. 5050 Overseas Highway • MM 50, Marathon, FL. Keys 33050. 1-800-331-HALL (4255) • (305) 743-5929 • FAX (305) 743-8168. E-Mail: [email protected] Website: www.Hallsdiving.com. Volume 29 - June 2019. Reservations & Booking. Diving Reef Trips Daily for Scuba or Snorkeling: Two Locations with Time for Diving Two Tanks of Air. RESERVATIONS: Call (305) 743-5929 or 1-800-331-HALL (4255), FAX (305) 743-8168 • 9 A.M. to 6 P.M. For complete details on the Dive Center offerings, see our complete magazine brochure. DAY DIVES: Sombrero, Delta Shoal Area, Thunderbolt Wreck, Coffins Patch or any area less than 10 miles away (4 hours trip time). Check-In: 9:00 A.M. or 1:00 in the afternoon. Departs: 9:30 A.M. and 1:30 in the afternoon. DEPOSITS: A $100.00 deposit per person or the total amount (whichever is lower) is due 7 days from the request for reservations. FINAL PAYMENT: The final payment is due 14 days before arrival.

Fusion System Components

A Step Change in Military Autonomous Technology. Contents: Introduction • Commercial vs Military AUV operations • Typical Military Operation (Man-Portable Class) • Fusion System Components • User Interface (HMI) • Modes of Operation. Typical Commercial vs Military AUV (UUV) operations (generalisation). Military: • Intelligence gathering, area survey, reconnaissance, battlespace preparation • Mine countermeasures (MCM), ASW, threat / UXO location and identification • Less data, desire for in-mission target recognition and mission adjustment • Desire for “hover” ability but often use COTS AUV or adaptations for specific tasks, including hull inspection, payload deployment, sacrificial vehicle • Errors or delays increase risk. Commercial: • Long distance eg pipeline routes, pipeline surveys • Large areas eg seabed surveys / bathy • Large amount of data collected for post-mission analysis • Predominantly torpedo shaped, require motion to manoeuvre • Errors or delays cost money. • Typical categories: man-portable, lightweight, heavy weight & large vehicle. Image courtesy of Subsea Engineering Associates. Typical Current Military Operation (Man-Portable Class), listed as operation: assets, equipment cost. • Survey areas of interest using AUV & identify targets of interest: AUV & Operating Team, USD 250k to USD millions • Deploy ROV to perform detailed survey of identified targets: ROV & Operating Team, USD 200k to USD 450k • Deploy divers to deal with targets: Dive Team with Nav Aids & Diver Propulsion, USD 25k – USD 100ks.

Stone Aerospace Saves 12 Weeks in Cabling Design by Implementing a Digital Design Process

SUCCESS STORY. Zuken’s software solution for electrical wiring, control systems and fluid engineering. Stone Aerospace saves 12 weeks in cabling design by implementing a digital design process. “We achieved substantial productivity gains because engineers had a very clear picture of what one another were doing, and because of the automated checks performed by the software.” John Harman, Electrical Engineer, Stone Aerospace. ZUKEN - The Partner for Success. Stone Aerospace faced the pressure of a tight schedule in designing a one-of-a-kind underwater autonomous vehicle (AUV) capable of traveling 15km under the Antarctic ice shelf. The AUV acts as a testing ground to validate an aircraft-mounted radar system that will be used in a space mission. The time needed to design the wiring harness for the AUV was reduced by around 12 weeks and $20,000 was saved by using E3.series to automate many aspects of the design process, while integrating the logical and physical design on a single platform. Results: • Elimination of $20,000 in cable rework and expedited delivery costs • Design cycle reduction by 12 weeks • Ability to view the electrical and physical design of the entire craft in a single hierarchical view • Effective design team collaboration created common nomenclature improving quality and cutting errors • Automated checks ensured correct connector selections. The search for life in space: Scientists believe that underneath the icy surface of Europa, one of Jupiter’s moons, is a vast ocean thought to be the most likely location for finding life in our solar system. [...] aspects such as temperature, depth and water current velocities, and to identify microbiological communities. Cable design challenges

Low-Frequency Active Towed Sonar

LOW-FREQUENCY ACTIVE TOWED SONAR. Full-feature, long-range, low-frequency active and passive variable depth sonar (VDS). The Low-Frequency Active Sonar (LFATS) system is used on ships to detect, track and engage all types of submarines. L3Harris specifically designed the system to perform at a lower operating frequency against modern diesel-electric submarine threats. FEATURES > Compact size - LFATS is a small, lightweight, air-transportable, ruggedized system specifically designed for easy installation on small vessels. > Configurable - LFATS can operate in a stand-alone configuration or be easily integrated into the ship’s combat system. > Tactical bistatic and multistatic capability - a robust infrastructure permits interoperability with the HELRAS helicopter dipping sonar and all key sonobuoys. > Highly maneuverable - own-ship noise reduction processing algorithms, coupled with compact twin-line receivers, enable short-scope towing for efficient maneuvering, fast deployment and unencumbered operation in shallow water. > Compact Winch and Handling System - an ultrastable structure assures safe, reliable operation in heavy seas and permits manual or console-controlled deployment, retrieval and depth-keeping. > Full 360° coverage - a dual parallel array configuration and advanced signal processing achieve instantaneous, unambiguous left/right target discrimination. > Space-saving transmitter tow-body configuration - innovative technology achieves omnidirectional, large aperture acoustic performance in a compact, sleek tow-body assembly. > Reverberation suppression - the unique transmitter design enables forward, aft, port and starboard directional transmission. This capability diverts energy concentration away from shorelines and landmasses, minimizing reverb and optimizing target detection. > Sonar performance prediction - a key ingredient to mission planning, LFATS computes and displays system detection capability based on modeled or measured environmental data. LFATS has been successfully deployed on ships as small as 100 tons.

Divemaster Instructor

DIVEMASTER to INSTRUCTOR. Specialties: PADI Specialty courses are a means for divers to explore interests in specific dive activities. Choose from cavern, deep, equipment, night, research, underwater hunter, search & recovery, navigator, photographer, wreck, boat, drift, dry suit, multilevel, naturalist, peak performance buoyancy, videography, enriched air, diver propulsion vehicle, Oxygen provider, Project Aware specialty, aware fish identification. These courses improve a diver's knowledge in certain fields of diving and act as a foundation for further education, allowing you as a diver to learn the skills and knowledge to gain more experience independently. Specialty programs can be conducted in 1-2 days and can credit with the Adventures in Diving certification. Please enquire to the Utila Dive Centre for information about a specific specialty program you may be interested in taking. Divemaster course: At Utila Dive Centre we regularly update and are constantly evolving our Divemaster course to be one of the most cutting edge and dynamic programs worldwide. As one of the world’s leading PADI Career Development Centres there are additional workshops and benefits to taking the course with Utila Dive Centre, such as our specialty programs, the Project Aware Coral reef surveying program, or Discover Tec and rebreather workshops. The PADI Divemaster program consists of 3 separate modules: 1. Knowledge development (that can take place with e-learning ahead of the course start date): 9 academic topics including dive theory topics, and Instructor led class sessions. 2. Watermanship: Stamina exercises, Rescue evaluation, Problem solving and Skills development. 3. Practical training: • 5 practical skill sessions (Dive site management, Map making, Briefing a dive, Search & Recovery dive and a Deep dive).

Sonar for Environmental Monitoring of Marine Renewable Energy Technologies

Sonar for environmental monitoring of marine renewable energy technologies. FRANCISCO GEMO ALBINO FRANCISCO. UURIE 350-16L, ISSN 0349-8352. Division of Electricity, Department of Engineering Sciences. Licentiate Thesis, Uppsala, 2016. Abstract: Human exploration of the world oceans is ever increasing as conventional industries grow and new industries emerge. A new emerging and fast-growing industry is the marine renewable energy. The last decades have been characterized by an accentuated development rate of technologies that can convert the energy contained in stream flows, waves, wind and tides. This growth benefits from the fact that society has become notably aware of the well-being of the environment we all live in. This brings a human desire to implement technologies which cope better with the natural environment. Yet, this environmental awareness may also pose difficulties in approving new renewable energy projects such as offshore wind, wave and tidal energy farms. A lesson that has been learned is that lack of consistent environmental data can become an impasse when consenting permits for testing and deploying marine renewable energy technologies. An example is the European Union, in which a majority of the member states require rigorous environmental monitoring programs to be in place when marine renewable energy technologies are commissioned and decommissioned. To satisfy such high demands and to simultaneously boost the marine renewable sector, long-term environmental monitoring frameworks that gather multi-variable data are needed to keep providing data to technology developers, operators as well as to the general public. Technologies based on active acoustics might be the most advanced tools to monitor the subsea environment around marine manmade structures, especially in murky and deep waters where diving and conventional technologies are both costly and risky.

1SOFSS Life VOL

MYHURLBURT.COM. JULY 2016. 1SOFSS Life, VOL. 2 ISSUE 7. Security Forces Takes Over Story Hour...pg 10. Hurlburt Field’s Dive Shop...pg 14. New Firearms Rules...pg 23. Bring Your Swimsuit! Summer Bash: Fri, July 29 • 4-9pm, Aquatic Center. Free Food • SHOWING! • Crafts • Games • Swimming • Corn Hole • Bouncy Castles. SPONSORED IN PART BY: FOR MORE INFO CALL 884-4252. NO PETS ALLOWED. NO FEDERAL ENDORSEMENT OF SPONSORS INTENDED. Contents: 4 Cupcake Wars Winners! 10 Security Forces Takes Over Story Hour. 14 Hurlburt Field’s Dive Shop. 19 FSS WiFi. 23 New Firearm Rules. 26 Community Connections. On the Cover (photo provided by Hurlburt Field’s 1SOFSS Dive Shop): Taryn Felde sits back and relaxes during an open water dive trip, hosted by Hurlburt Field’s Dive Shop. To learn more about diving or to get started on your certification, contact the Dive Shop at 881-1576 or 884-6939. MYHURLBURTLife. 1 SOFSS Commander: Lt. Col. Lee A. Comerford. 1 SOFSS Deputy: Mr. Roger Noyes. Marketing Director: Vas Bora. Commercial Sponsorship: Stephany Pippin. Visual Information Specialists: Amanda Kosche, Michael Pettus, Cristina Scott. Marketing Assistant: Barbara Little. Hurlburt Force Support #MyHurlburt. Disclaimer: Contents of MyHurlburt Life are not necessarily the official views of, nor endorsed by, the U.S. Government, the Department of Defense, the Department of the Air Force, or 1st Special Operations Force Support Squadron (1 SOFSS). The appearance of advertising in this publication, including inserts or supplements, does not constitute endorsement by the Department of Defense, the Department of the Air Force or 1st Special Operations Force Support Squadron of the products or services advertised.

TO View Diving Rates

Individual Rates • Package Rates • Reservation and Transportation Information. "LADY KEY DIVER" 40' Custom Dive Boat awaits your Charter at Special Low Rates. COAST GUARD INSPECTED & APPROVED • QUALIFIED CREW • LEAD-SHOT RENTAL WEIGHTS ON BOARD • FRESHWATER SHOWER • SEATS WITH TANKS AT BACK AND GEAR STORAGE UNDER • STEREO SOUND SYSTEM • EASY ENTRY AND EXIT • FAST • ROOMY • COMFORTABLE. Bob Brayman's. Where there are more things to do underwater than in any other place in the United States. OVER 40 SPECIES OF CORAL • WRECK DIVING • SHALLOW OR DEEP DIVING • SHELTERED AREAS • YEAR-ROUND SUMMER TEMPERATURES • SPEARFISH • LOBSTER • NIGHT DIVING • PLENTIFUL MARINE LIFE • NITROX • TREASURE HUNTING • REBREATHERS • TROPICAL FISH COLLECTING. 5050 Overseas Highway • MM 50, Marathon, FL. Keys 33050. 1-800-331-HALL (4255) • (305) 743-5929 • FAX (305) 743-8168. E-Mail: [email protected] Website: www.Hallsdiving.com. Volume 30 - February 2020. Reservations & Booking. Diving Reef Trips Daily for Scuba or Snorkeling: Two Locations with Time for Diving Two Tanks of Air. RESERVATIONS: Call (305) 743-5929 or 1-800-331-HALL (4255), FAX (305) 743-8168 • 9 A.M. to 6 P.M. For complete details on the Dive Center offerings, see our complete magazine brochure. DAY DIVES: Sombrero, Delta Shoal Area, Thunderbolt Wreck, Coffins Patch or any area less than 10 miles away (4 hours trip time). Check-In: 9:00 A.M. or 1:00 in the afternoon. Departs: 9:30 A.M. and 1:30 in the afternoon. DEPOSITS: A $100.00 deposit per person or the total amount (whichever is lower) is due 7 days from the request for reservations. FINAL PAYMENT: The final payment is due 14 days before arrival.

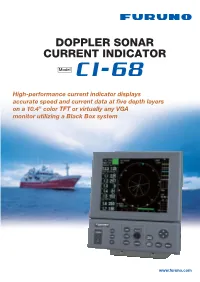

Doppler Sonar Current Indicator 8

DOPPLER SONAR CURRENT INDICATOR 8 Model. High-performance current indicator displays accurate speed and current data at five depth layers on a 10.4" color TFT or virtually any VGA monitor utilizing a Black Box system. www.furuno.com. Obtain highly accurate water current measurements using FURUNO’s reliable acoustic technology. The FURUNO CI-68 is a Doppler Sonar Current Indicator designed for various types of fish and hydrographic survey vessels. The CI-68 displays tide speed and direction at five depth layers and ship’s speed on a high definition 10.4” color LCD. Using this information, you can predict net shape and plan when to throw your net. The CI-68 has a triple-beam emission system for providing highly accurate current measurement. This system greatly reduces the effects of the rolling, pitching and heaving motions, providing a continuous display of tide information. When ground (bottom) reference is not available acoustically in deep water, the CI-68 can provide true tide current information by receiving position and speed data from a GPS navigator and heading data from the satellite (GPS) compass SC-50/110 or gyrocompass. In addition, navigation information, including position, course and ship’s track, can also be displayed. The CI-68 consists of a display unit, processor unit and transducer. The control unit and display unit can be installed separately for flexible installation. (Figure labels: tide vectors for Layers 1 to 5. The absolute movements of tide measuring layers are displayed in colors.)