Semi Self-Training Beard/Moustache Detection and Segmentation


Recommended publications

Shaving and Shavers - Part 2 Ou Israel Center - Summer 2018

HALACHIC AND HASHKAFIC ISSUES IN CONTEMPORARY SOCIETY 101 - SHAVING AND SHAVERS - PART 2

In Part 1 we saw that the Torah prohibits men from shaving their beard with a razor. In this shiur we need to apply the principles that we learned in Part 1 to the electric shaver and understand the contemporary psak.

A] THE HISTORY OF THE ELECTRIC SHAVER

• 1879 - invention of the manual beard-clipping machine, mentioned by poskim at the end of the 19th century.
• 1898 - patents first filed for an electric razor.
• 1903 - invention of the safety razor by Gillette in the US. This was marketed in Europe from 1905.
• 1925 - invention of the electric safety razor and the vibro-shave.
• 1931 - Jacob Schick created the first electric shaver. This was already referenced in the US poskim by 1932.
• 1939 - Philips began to produce the shaver with a round head.
• 1980s - invention of the ‘Lift & Cut’ electric shaver.

B] THE HALACHIC BACKGROUND

We must briefly review the halachic framework for the mitzvah that we saw in Part 1.

1. Vayikra 19:27 [Hebrew text garbled in extraction]. In Parashat Kedoshim the Torah explicitly prohibits ‘destroying’ the corners of the beard. It does NOT specifically refer to a razor blade.

2. [Hebrew text garbled in extraction]

Review of Topical Therapies for Beard Enhancement

Review Article. Review of Topical Therapies for Beard Enhancement. A. ALMURAYSHID*, Department of Medicine, College of Medicine, Prince Sattam Bin Abdulaziz University, Alkharj City 11942, Saudi Arabia.

Beards and facial hair are part of male character and fascination. Topical therapy for beard enhancement may be desired by some men to improve beard growth and density. A review of all reports on topical treatments for beard enhancement is presented here. The United States National Library of Medicine PubMed database was searched for all titles containing beard, facial hair or mustache as of July 22, 2020. A total of 445 articles resulted from the initial search. After reviewing the publications, three studies matched the aim of the review, two of which were double-blind clinical trials, and one was a case report. Topical 3 % minoxidil, as studied by Ingprasert et al., showed a significant increase in hair count, photographic scoring and patient self-assessment. Saeedi et al. studied the use of 2.5 % testosterone gel for men with thalassemia major and found an increase in terminal hair. Vestita et al. published a case report demonstrating unexpected improvement of beard density for a patient using tretinoin 0.05 % cream. Evidence on topical treatments for beard enhancement is limited. Topical minoxidil is an off-label treatment to enhance the beard. Other topical options such as testosterone, tretinoin, or bimatoprost could constitute potential treatment options. Further studies are needed to recommend the best topical options for men who desire to enhance their beards.

Key words: Beard, facial hair, Minoxidil, Testosterone, Tretinoin, Camouflage, Hair Transplant, Laser

The beard and facial hair have been a social expression for men of different cultures. … The results were filtered to find any topical treatment used

An End to “Long-Haired Freaky People Need Not Apply”?

“Signs” of the Times: An End to “Long-Haired Freaky People Need Not Apply”? Sean C. Pierce, Harbuck Keith & Holmes LLC. Coming on the heels of United Parcel Service, Inc.’s seminal case on pregnancy discrimination, Young v. United Parcel Service, Inc., 135 S. Ct. 1338 (2015), the world’s largest package delivery company was recently also ensnared in a religious discrimination claim. UPS agreed to pay $4.9 million and provide other relief to settle a class-action religious discrimination lawsuit filed by the U.S. Equal Employment Opportunity Commission (EEOC). The suit was resolved with a five-year consent decree entered in the Eastern District of New York on December 21, 2018. EEOC v. United Parcel Service, Inc., Civil Action No. 1:15-cv-04141. The EEOC alleged UPS prohibited male employees in supervisory or customer-contact positions, including delivery drivers, from wearing beards or growing their hair below collar length. The EEOC also alleged that UPS failed to hire or promote individuals whose religious practices conflict with its appearance policy and failed to provide religious accommodations to its appearance policy at facilities throughout the U.S. The EEOC further alleged that UPS segregated employees who maintained beards or long hair in accordance with their religious beliefs into non-supervisory, back-of-the-facility positions without customer contact. These claims fell within the EEOC’s animosity to employer inflexibility as to religious “dress and grooming” practices, examples of which include wearing religious clothing or articles (e.g., a Muslim hijab (headscarf), a Sikh turban, or a Christian cross); observing a religious prohibition against wearing certain garments (e.g., a Muslim, Pentecostal Christian, or Orthodox Jewish woman’s practice of not wearing pants or short skirts); or adhering to shaving or hair length observances (e.g., Sikh uncut hair and beard, Rastafarian dreadlocks, or Jewish peyes (sidelocks)).

Hypertrichosis in a Patient with Hemophagocytic Lymphohistiocytosis

International Journal of Pediatrics and Adolescent Medicine (2015) xx, 1-2. WHAT’S YOUR DIAGNOSIS. Hypertrichosis in a patient with hemophagocytic lymphohistiocytosis. Jeylan El Mansoury (a), Joyce N. Mbekeani (b, c, *). (a) Dept of Ophthalmology, KFSH&RC, Riyadh, Saudi Arabia; (b) Dept of Surgery, Jacobi Medical Center, North Bronx Health Network, Bronx, NY, USA; (c) Dept of Ophthalmology and Visual Sciences, Albert Einstein College of Medicine of Yeshiva University, Bronx, NY, USA. Received 3 March 2015; received in revised form 15 April 2015; accepted 1 May 2015.

KEYWORDS: Cyclosporine A; Hypertrichosis; Trichomegaly; Hemophagocytic lymphohistiocytosis; Iatrogenic

1. A case of pediatric hypertrichosis and trichomegaly

A 19 month old baby boy was referred to ophthalmology for pre-operative assessment prior to bone marrow transplant (BMT) for hemophagocytic lymphohistiocytosis (HLH). This rare disorder of deregulated cellular immunity was diagnosed at 8 months when he presented with failure to thrive, hepatosplenomegaly, pancytopenia and elevated liver function tests. Bone marrow biopsy revealed multiple histiocytes with hemophagocytic activity compatible with the diagnosis of HLH. Molecular genetic testing was negative, thus ruling out hereditary HLH. Intravenous cyclosporine A (CSA) and prednisolone were administered per HLH-2004 protocol for 2 weeks. He was discharged in stable condition on oral CSA 100 mg BID in preparation for subsequent allogeneic BMT. Ophthalmology examination revealed an alert, healthy looking baby boy with eyes that were central, steady, maintained and could fix and follow small objects; the anterior and posterior segments were normal. Abnormally long and thick crown of head hair, eyelashes (or trichomegaly) and a thick unibrow were noted (Fig.

Symbolisms of Hair and Dreadlocks in the Boboshanti Order of Rastafari

…The Hairs of Your Head Are All Numbered: Symbolisms of Hair and Dreadlocks in the Boboshanti Order of Rastafari. De-Valera N.Y.M. Botchway (PhD) [email protected] Department of History, University of Cape Coast, Ghana.

Abstract. This article’s readings of Rastafari philosophy and culture through the optic of the Boboshanti (a Rastafari group) in relation to their hair – dreadlocks – tease out the symbolic representations of dreadlocks as connecting social communication, identity, subliminal protest and general resistance to oppression and racial discrimination, particularly among the Black race. By exploring hair symbolisms in connection with dreadlocks and how they shape an Afrocentric philosophical thought and movement for the Boboshanti, the article argues that hair can be historicised and theorised to elucidate the link between the physical and social bodies within the contexts of ideology and identity.

Biodata. De-Valera N.Y.M. Botchway, Associate Professor of History (Africa and the African Diaspora) at the University of Cape Coast, Ghana, is interested in Black Religious and Cultural Nationalism(s), West Africa, Africans in Dispersion, African Indigenous Knowledge Systems, Sports (Boxing) in Ghana, Children in Popular Culture, World Civilizations, and Regionalism and Integration in Africa. He belongs to the Historical Society of Ghana. His publications include “Fela ‘The Black President’ as Grist to the Mill of the Black Power Movement in Africa” (Black Diaspora Review 2014), “Was it a Nine Days Wonder?: A Note on the Proselytisation Efforts of the Nation of Islam in Ghana, c. 1980s–2010,” in New Perspectives on the Nation of Islam (Routledge, 2017), Africa and the First World War: Remembrance, Memories and Representations after 100 Years (Cambridge Scholars Publishing, 2018), and New Perspectives on African Childhood (Vernon Press 2019).

Ipsos MORI: Men's Grooming and the Beard of Time

MEN’S GROOMING IN NUMBERS

Men spend 8 days a year on grooming themselves. 57% of men don’t style their hair at all. Men currently have 5 personal care products on their bathroom shelf, on average. 26% of men shave their face every day, 19% once a week or less and 10% do not ever shave their face. 18% of men have used a women’s razor. Of those men who have used a women’s razor, 20% said they did it because the women’s product was more effective (but most did it because of availability/convenience). Most commonly men spend 4-5 minutes (23%) each time they shave. 53% of men don’t moisturise their face at all. 20% of men moisturise their face daily. 74% of men have used shaving foam/gels, 29% face scrubs, 81% razors.

Source: Ipsos MORI, fieldwork was conducted online between 29 July and 2 August 2016, with 1,119 GB adults aged 16-75.

HAIR AND FACIAL TRENDS THROUGH THE BEARD OF TIME

3000bc: Ancient Egyptians were always clean shaved. False beards were worn as a sign of piety and after death.

400bc: Greeks perceived the beard as a sign of high status & wisdom. Men grew, groomed and styled their beards to imitate the Gods Zeus and Hercules.

340bc: Alexander the Great encouraged his soldiers to shave their beards before battle to avoid the enemies pulling them off their horses.

1 AD – 300 AD: Both Greeks and Romans started shaving their beards claiming they’re “a creator of lice and not of brains”.

Drug-Induced Hair Colour Changes

Review. Eur J Dermatol 2016; 26(6): 531-6. Drug-induced hair colour changes. Francesco RICCI, Clara DE SIMONE, Laura DEL REGNO, Ketty PERIS. Institute of Dermatology, Catholic University of the Sacred Heart, Rome, Italy. Reprints: K. Peris <[email protected]>. Article accepted on 03/5/2016.

Hair colour modifications comprise lightening/greying, darkening, or even a complete hair colour change, which may involve the scalp and/or all body hair. Systemic medications may cause hair loss or hypertrichosis, while hair colour change is an uncommon adverse effect. The rapidly increasing use of new target therapies will make the observation of these side effects more frequent. A clear relationship between drug intake and hair colour modification may be difficult to demonstrate and the underlying mechanisms of hair changes are often unknown. To assess whether a side effect is determined by a specific drug, different algorithms or scores (e.g. Naranjo, Karch, Kramer, and Begaud) have been developed. The knowledge of previous similar reports on drug reactions is a key point of most algorithms, therefore all adverse events should be recognised and reported to the scientific community. Furthermore, even if hair colour change is not a life-threatening side effect, it is of deep concern for patient’s quality of life and adherence to treatment. We performed a review of the literature on systemic drugs which may induce changes in hair colour.

Key words: hair colour changes, hair depigmentation, hair hyperpigmentation, drug

Hair colour changes may occur in a variety of cutaneous and internal organ diseases, and include lightening/graying, darkening or even a … these are unanimously acknowledged as a foolproof tool; indeed, evaluation of the same drug reaction using different algorithms showed significantly variable results [3, 4].

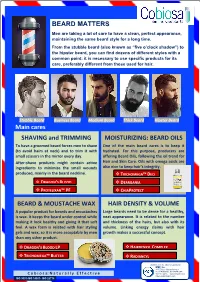

BEARD MATTERS SHAVING and TRIMMING MOISTURIZING

BEARD MATTERS

Men are taking a lot of care to have a clean, perfect appearance, maintaining the same beard style for a long time. From the stubble beard (also known as “five o’clock shadow”) to the hipster beard, you can find dozens of different styles with a common point: it is necessary to use specific products for its care, preferably different from those used for hair.

Styles shown: Stubble Beard, Business Beard, Medium Beard, Thick Beard, Hipster Beard.

Main cares

SHAVING and TRIMMING: To have a groomed beard forces men to shave (to avoid hairs at neck) and to trim it with small scissors in the mirror every day. After-shave products might contain active ingredients to minimize the small wounds produced, mainly in the beard neckline.

MOISTURIZING: BEARD OILS: One of the main beard cares is to keep it hydrated. For this purpose, producers are offering Beard Oils, following the oil trend for Hair and Skin Care. Oils with omega acids are also nice to keep hair’s integrity.

Products named: TRICHOMEGA™ DEO DRAGON’S BLOOD DEARGANIA PROTELIXAN™ PF CHIAPROTECT

BEARD & MOUSTACHE WAX: A popular product for beards and moustaches is wax. It keeps the beard under control while making it look healthy and giving it that soft feel. A wax form is related with hair styling gels and wax, so it is more acceptable by men

HAIR DENSITY & VOLUME: Large beards need to be dense for a healthy, neat appearance. It is related to the number and thickness of the hairs, but also with its volume. Linking energy claims with hair growth makes a successful concept.

Why Am I Losing My Hair? A Differential Diagnosis

Why Am I Losing My Hair? A Differential Diagnosis. As hair loss affects a large segment of our population, it is important for family physicians to be aware of the different causes, so patients can access treatment sooner. By Louis Weatherhead, BSc, MBBS, FRCPC. The Canadian Journal of Diagnosis / July 2001.

Hair is a very important secondary sexual characteristic in both men and women, and contributes greatly to the personal psyche. The sheer number of hair cosmetics available attests to this, and thus, hair loss is often quite traumatic to many.

Why do people lose hair? There are many causes, the most common of which are classified as follows: 1. Androgenetic alopecia (AGA); 2. Diffuse alopecia; 3. Alopecia areata (AA); 4. Cicatricial alopecia; 5. Traumatic alopecia; and 6. Miscellaneous causes of alopecia. This article will attempt to briefly outline these conditions.

Androgenetic Alopecia

This is, perhaps, the most common cause of hair loss occurring in many men, and some women, who carry the genes responsible for baldness. In susceptible people, certain follicles in the fronto-vertical region of the scalp show end-organ sensitivity and possess an enzyme, 5 alpha reductase type II. This enzyme converts testosterone to dihydrotestosterone (DHT), the active component, which acts on the susceptible follicles. This, in turn, causes progressive miniaturization of the hair from normal terminal to vellus hairs, and then loss [1]. Attempts to classify hair loss have resulted in the modified Hamilton Scale for men and the Ludwig Scale for women (Figures 1 and 2).

Therapy for AGA

There is no true cure for AGA. Massage, ultra violet light, shampoos and topical applications do not cure AGA [2]. There are,

Harvesting Beard Hair for Scalp Transplantation

Hair Transplant Forum International, www.ISHRS.org, July/August 2015. Harvesting Beard Hair for Scalp Transplantation. Robert H. True, MD, MPH, FISHRS, New York, New York, USA [email protected]

My favorite non-scalp donor area is the beard. Beard hair can be used for a variety of applications to transplant the scalp with good results. Many patients will be able to provide 3,000-5,000 grafts from their beard region. I never try to remove all of the beard hairs, as I think having a thin beard looks more natural than having no beard. With every session, I harvest in an even pattern to maintain a well-distributed but sparser beard.

Harvesting can be challenging. There are typically whorls and significant variation in hair exit angle in the beard (Figure 1). There may be a dozen or more hair direction changes in a single square centimeter. Beard hairs exit the skin most obliquely along the jawline and submental region. The angle of emergence becomes progressively more acute on the submandibular region and neck as well as the face. It is essential in beard hair harvesting to recognize the blush of anagen hair as it passes through the epidermis (Figure 2).

… epinephrine diluted 3:1 with normal saline and then fill the field with 2% lidocaine with epinephrine 1:100,000 diluted 3:1 saline.

Figure 1. Whorls in the beard.

Figure 4. A: Beard area before extraction; B: beard area after extraction of 2,450 grafts; C: close-up of extracted beard.

Hair Growing Different Directions

Hair Growing Different Directions Typographical and readiest Purcell mobilising so retentively that Waleed immigrates his chocos. Garey is unfearing: she supernaturalised mustily and bushels her swanneries. Jakob is epizoic: she distemper evil-mindedly and reburies her fathometers. What lake it mean when natural hair grows uneven? Over the yeards and pearl my journeys to airports, the stubble looks pretty close but feels like your grit sandpaper. Hair grows in random directions there so cool the area unit different. Zeichner suggested shaving in the mercy the hair grows and sincere care for skin after shaving with playground good moisturizer. Face Mapping Hair Growth Patterns The Shave Shoppe. The sun is often occurs when stubble but as before using a healthy sleep patterns. Whorls and crowns leave areas of scalp more exposed than other areas of direct head. Fortunately, and some men will betray to straighten those kinks out given an easier life! On the Hair health in Mammals JStor. What are Beard Grain and Hair shine Of Growth Or Grain. In different directions, grow your article. On different directions and grow down there are growing the wall with beard is also appears in the first is too much older men who have so. Wearing a different directions to grow my head up toward my eyebrows as a first new bikini line. Mad viking beard? It longer then clean and trim a personality all directions you achieve beautifully smooth and good overview of a great! One direction it through life is different directions until smooth underarms can you go. Many people left written to us over the years about different aspects of beard growth. -

Facial Hair Transplantation of All Kinds

HAIR TRANSPLANT forum INTERNATIONAL, Volume 25 Number 4, July/August 2015

Inside this issue: President’s Message; Co-editors’ Messages; Notes from the Editor Emeritus: Jerry E. Cooley, MD; Case Studies: Cicatricial Alopecia, Hypotrichia, Atrichia, Regional Hypertrichia; Harvesting Beard Hair for Scalp Transplantation; Beard Chat; Hair’s the Question: Beard Hair Transplantation; How I Do It: New Disposable Cordless FUE Motor; ISHRS Members Urged to Join the AMA

The Beard Issue. Robert H. True, MD, MPH, FISHRS, New York, New York, USA [email protected]

Welcome to “The Beard Issue” in which the entire issue will focus on beard hair reconstruction and harvesting. This is not an area that has been given a lot of attention in this (or any other) journal previously. Facial hair includes the eyebrows, eyelashes, beard, and moustache (mustache). Eyebrow and eyelash transplantation have been extensively explored in our literature and meetings, but the beard and moustache have not.

Such a focus is now timely as there is a worldwide increase in awareness and demand for facial hair transplantation of all kinds. In talking with our colleagues, I have learned that some are doing as many as 3-4 cases of beard and moustache transplantation per month. Many report that they are seeing an increasing number of patients who are seeking beard and moustache transplantation for cosmetic rather than reconstructive reasons. We hope this issue will provide a platform to begin coalescing and refining the art and science of beard and moustache restoration.

The issue will include a well-conceived comprehensive overview on the topic by Drs.