Detecting Agility of Open Source Projects Through Developer Engagement

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Ultumix GNU/Linux 0.0.1.7 32 Bit!

Welcome to Ultumix GNU/Linux 0.0.1.7 32 Bit! What is Ultumix GNU/Linux 0.0.1.7? Ultumix GNU/Linux 0.0.1.7 is a full replacement for Microsoft's Windows and Macintosh's Mac OS for any Intel based PC. Of course we recommend you check the system requirements first to make sure your computer meets our standards. The 64 bit version of Ultumix GNU/Linux 0.0.1.7 works faster than the 32 bit version on a 64 bit PC however the 32 bit version has support for Frets On Fire and a few other 32 bit applications that won't run on 64 bit. We have worked hard to make sure that you can justify using 64 bit without sacrificing too much compatibility. I would say that Ultumix GNU/Linux 0.0.1.7 64 bit is compatible with 99.9% of all the GNU/Linux applications out there that will work with Ultumix GNU/Linux 0.0.1.7 32 bit. Ultumix GNU/Linux 0.0.1.7 is based on Ubuntu 8.04 but includes KDE 3.5 as the default interface and has the Mac4Lin Gnome interface for Mac users. What is Different Than Windows and Mac? You see with Microsoft's Windows OS you have to defragment your computer, use an anti-virus, and run chkdsk or a check disk manually or automatically once every 3 months in order to maintain a normal Microsoft Windows environment. With Macintosh's Mac OS you don't have to worry about fragmentation but you do have to worry about some viruses and you still should do a check disk on your system every once in a while or whatever is equivalent to that in Microsoft's Windows OS.

Improving Security Through Egalitarian Binary Recompilation

Improving Security Through Egalitarian Binary Recompilation David Williams-King Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy under the Executive Committee of the Graduate School of Arts and Sciences COLUMBIA UNIVERSITY 2021 © 2021 David Williams-King All Rights Reserved Abstract Improving Security Through Egalitarian Binary Recompilation David Williams-King In this thesis, we try to bridge the gap between which program transformations are possible at source-level and which are possible at binary-level. While binaries are typically seen as opaque artifacts, our binary recompiler Egalito (ASPLOS 2020) enables users to parse and modify stripped binaries on existing systems. Our technique of binary recompilation is not robust to errors in disassembly, but with an accurate analysis, provides near-zero transformation overhead. We wrote several demonstration security tools with Egalito, including code randomization, control-flow integrity, retpoline insertion, and a fuzzing backend. We also wrote Nibbler (ACSAC 2019, DTRAP 2020), which detects unused code and removes it. Many of these features, including Nibbler, can be combined with other defenses resulting in multiplicatively stronger or more effective hardening. Enabled by our recompiler, an overriding theme of this thesis is our focus on deployable software transformation. Egalito has been tested by collaborators across tens of thousands of Debian programs and libraries. We coined this term egalitarian in the context of binary security. Simply put, an egalitarian analysis or security mechanism is one that can operate on itself (and is usually more deployable as a result). As one demonstration of this idea, we created a strong, deployable defense against code reuse attacks. -

Kde-Guide-De-Developpement.Web.Pdf

KDE Published: 2017-06-26 License: GPLv2+ 1 KDE FROM A DEVELOPER'S POINT OF VIEW 1. DO YOU NEED THIS BOOK? 2. THE KDE PHILOSOPHY 3. HOW TO GET HELP 2 1. DO YOU NEED THIS BOOK? You should read this book if you want to develop for KDE. We use the term development very broadly to cover anything that can lead to a change in the source code, which includes: Submitting a bug fix Writing a new application powered by KDE technology Contributing to an existing project Adding functionality to the KDE development libraries In this book, we will give you the basics you need to be a productive developer. We will describe the tools you will need to install, show how to read the documentation (and write your own, once you have created the new functionality!) and how to get help by other means. We will introduce you to the KDE community, which is essential to understanding KDE because we are a free, open source project. End users of the software do NOT need this book! However, they might find it interesting for understanding how the complex, feature-rich software they use came into being. 3 2. THE KDE PHILOSOPHY KDE's success rests on an overall vision that we have found both practical and motivating. The elements of this development philosophy include: Using available tools rather than reinventing existing ones: many of the basics you need to work are already part of KDE, such as the core libraries or "Kparts", and are quite mature.

Anexaminationofthebenefitsofus

Running head: AN EXAMINATION OF THE BENEFITS OF USING OPEN SOURCE An Examination of the Benefits of Using Open Source Software in Schools Charles E. Craig, Jr. Tennessee Tech University July 21, 2011 Abstract This research is an examination of ways that open source software can bring educational value and significant cost savings to K-12 schools. Existing case studies and other research are examined. Particular attention is given to K-12 schools which have implemented the free and open source Linux operating system to replace Microsoft Windows on older computers, as well as schools which have replaced Microsoft Office with OpenOffice. Table of Contents: Abstract; List of Tables/Figures; Chapter I. Introduction; Chapter II. Methodology; Chapter III. A Comparison and Contrast of Proprietary Software and Open Source Software; Chapter IV. Educational Benefits of Open Source Software; Chapter V. Cost Benefits of Open Source Software; Chapter VI. Summary, Conclusions, Recommendations; References. List of Tables/Figures TABLES: Table 1. A Comparison of RAM and CPU Usage in Various Linux Desktop Environments and Window Managers. FIGURES: Figure 1. The Ubuntu Linux desktop; Figure 2. A screenshot of the LibreOffice Writer word processor running in Linux; Figure 3. A screenshot of the Kalzium periodic table software; Figure 4. Kanagram; Figure 5. KGeography; Figure 6. The KStars desktop planetarium; Figure 7. The TuxMath interactive educational game; Figure 8. TuxPaint; Figure 9. Peppermint OS Two running on an eight year old Dell desktop; Figure 10.

Why Be a KDE Project? Martin Klapetek David Edmundson

Why be a KDE Project? Martin Klapetek David Edmundson What is KDE? KDE is not a desktop, it's a community „Community of technologists, designers, writers and advocates who work to ensure freedom for all people through our software“ --The KDE Manifesto What is a KDE Project? Project needs more than just good code What will you get as a KDE Project? Git repository Git repository plus „scratch repos“ (your personal playground) Creating a scratch repo git push --all kde:scratch/username/reponame Git repository plus web interface (using GitPHP) Git repository plus migration from Gitorious.org Bugzilla (the slightly prettier version) Review Board Integration of git with Bugzilla and Review Board Using server-side commit hooks ● BUG: 24578 ● CCBUG: 29456 ● REVIEW: 100345 ● CCMAIL: [email protected] Communication tools Mailing lists Wiki pages Forums Single sign-on to all services Official IRC channels #kde-xxxxx (on Freenode) IRC cloak me@kde/developer/mklapetek [email protected] email address Support from sysadmin team Community support Development support Translations (71 translation teams) Testing support (Active Jenkins and EBN servers, plus Quality Team) Project continuation (when you stop developing it) KDE e.V. support Financial and organizational help Trademark security Project's licence defense via FLA Promo support Stories in official KDE News site (Got the Dot?) Your blog aggregated at Planet KDE Promo through social channels Web hosting under kde.org domain Association with one of the best

KDE Galaxy 4.13

KDE Galaxy 4.13 - Devaja Shah About Me ●3rd Year Alienatic Student at DA-IICT, Gandhinagar ●Dot-editor for KDE Promo Team ●Member of KDE e.V. ●Passion for Technology, Literature ●Run the Google Developer Group in College ●Organizing Team of KDE Meetup, conf.kde.in 14 Okay, sooooo....... ●How many of you are fans of Science Fiction? ●Astronomy? ●How is it Related to KDE? ●That is precisely what the talk is about. ●Analogy to get you to know everything that you should about ● “Galaxy KDE 4.13” Wait, isn't it 4.14? ●KDE's latest version SC 4.14 - November 2014 ●KDE Software Compilation ::.xx ●Significance of Releases ●- Organized, systematic compilation of features & developments ●- Five series of releases till date. ●No Synchronized Releases Any longer: ● - KDE Frameworks 5 (monthly) ● - KDE Plasma 5 (3 months) ● - KDE Applications (date based) ●Just Like App Versions But, what am I to do of the Galaxy Number? ●Work in a Team ●Work across a Deadline ●Office Space Simulation ●Added Impetus for Delivering your Features ●You work as a part of the "Core" Developer Team ●Instils Discipline ●Better Response, Better Feedback ●Better Deliverance ●Synchronized work with other "seasoned" developers Enough of the bore....... ●How do I get started? ● - Hope you didn't snooze yesterday ●IRC, Subscribe to Mailing Lists ●Mentoring Programs ●GSoC, Season of KDE, OFW Programs ●Bootstrapping Training Sessions Strap yourself onto the Rocket ●And Blast Off......... Entered A Wormhole and Zapped into the KDE Galaxy ●Now what? ●Pick a Planet to Inhabit ●But....

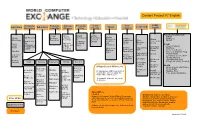

Content Project V7 English

Content Project V7 English Public Computer Entrepre- Climate Program- Solar Water Tech Leadership Agriculture Recycling Education neurship Change ming Energy Literacy Health Potential and Growth CD3W Videos BFOIT CD3W IEARN We Recycle Business Lessons Introduction Resources CD3W CD3W Resources Getting Where GirlRising Resources Resources Readings to on Solar Started with Class UNGEI How to Set Computer Energy on Water There Is No and Ubuntu Manage- Doctor Peace Up Nat’l Geo Program- 10.04 ment STEM Computer a Climate.gov ming Sanitation Corps UNESCO Khan Academy Books Refurbishing CLEAN Getting Empowering Center and more UN Lesson HIV/AIDS WikiSlice Plans on Started with Girls Library Ck-12 Textbooks Adrodok Water OpenOffice Intro to Computer Prog Empowering Medline Thinkersmith Vegetable How to Girls-School Computer Sci in a Box Garden Assemble a Computer Ubuntu Manual Life Skills Childhood LibreOffice Guides Healthy Illness Harvest Math Science Reference Writing Soc.Sci. Promoting Comp. for Class. Guidesl Powerful Health for People Health Wikipedia and Wikibooks Children Hesperian Health Guides Khan Video BlueMall Medline Learning Volunteer- Teach AIDS Khan Video Lessons Basic 20 Gigabytes with thousands of Health Medline Plus Lessons Spelling Center ism Activities for Language searchable articles using the Pop. Council Activities Ck-12 Kiwix offline wiki-reader Primary Gr. Ck-12 Textbooks Dictionary Common World Map Textbooks Sense Project HIV Toolkit Wiki HowTo Thousands of books for youth WikiSlice Compositio of all ages Unesco -Animals -

Praise for the Official Ubuntu Book

Praise for The Official Ubuntu Book “The Official Ubuntu Book is a great way to get you started with Ubuntu, giving you enough information to be productive without overloading you.” —John Stevenson, DZone Book Reviewer “OUB is one of the best books I’ve seen for beginners.” —Bill Blinn, TechByter Worldwide “This book is the perfect companion for users new to Linux and Ubuntu. It covers the basics in a concise and well-organized manner. General use is covered separately from troubleshooting and error-handling, making the book well-suited both for the beginner as well as the user that needs extended help.” —Thomas Petrucha, Austria Ubuntu User Group “I have recommended this book to several users who I instruct regularly on the use of Ubuntu. All of them have been satisfied with their purchase and have even been able to use it to help them in their journey along the way.” —Chris Crisafulli, Ubuntu LoCo Council, Florida Local Community Team “This text demystifies a very powerful Linux operating system . in just a few weeks of having it, I’ve used it as a quick reference a half dozen times, which saved me the time I would have spent scouring the Ubuntu forums online.” —Darren Frey, Member, Houston Local User Group This page intentionally left blank The Official Ubuntu Book Sixth Edition This page intentionally left blank The Official Ubuntu Book Sixth Edition Benjamin Mako Hill Matthew Helmke Amber Graner Corey Burger With Jonathan Jesse, Kyle Rankin, and Jono Bacon Upper Saddle River, NJ • Boston • Indianapolis • San Francisco New York • Toronto • Montreal • London • Munich • Paris • Madrid Capetown • Sydney • Tokyo • Singapore • Mexico City Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. -

Review of Digital Globes 2015

A Digital Earth Globe REVIEW OF DIGITAL GLOBES 2015 JESSICA KEYSERS MARCH 2015 ACCESS AND AVAILABILITY The report is available in PDF format at http://www.crcsi.com.au We welcome your comments regarding the readability and usefulness of this report. To provide feedback, please contact us at [email protected] CITING THIS REPORT Keysers, J. H. (2015), ‘Digital Globe Review 2015’. Published by the Australia and New Zealand Cooperative Research Centre for Spatial Information. ISBN (online) 978-0-9943019-0-1 Author: Ms Jessica Keysers COPYRIGHT All material in this publication is licensed under a Creative Commons Attribution 3.0 Australia Licence, save for content supplied by third parties, and logos. Creative Commons Attribution 3.0 Australia Licence is a standard form licence agreement that allows you to copy, distribute, transmit and adapt this publication provided you attribute the work. The full licence terms are available from creativecommons.org/licenses/by/3.0/au/legalcode. A summary of the licence terms is available from creativecommons.org/licenses/by/3.0/au/deed.en. DISCLAIMER While every effort has been made to ensure its accuracy, the CRCSI does not offer any express or implied warranties or representations as to the accuracy or completeness of the information contained herein. The CRCSI and its employees and agents accept no liability in negligence for the information (or the use of such information) provided in this report. REVIEW OF DIGITAL GLOBES 2015 TABLE OF CONTENTS 1 PURPOSE OF THIS PAPER

Wireless Evolution

Department of Justice, Federal Bureau of Investigation, Operational Technology Division WIRELESS EVOLUTION IN THIS ISSUE: WIRELESS EVOLUTION • TECH BYTES • LONG TERM EVOLUTION • CLOUD SERVICES • 4G TECHNOLOGY • GESTURE-RECOGNITION • FCC ON BROADBAND • ACTIVITY-BASED NAVIGATION MESSAGE FROM MANAGEMENT The boundaries of technology are constantly expanding. Recognizing the pathway of emerging technology is a key element to maintaining relevance in a rapidly changing technological environment. While this proficiency is fundamentally important in developing strategies that preserve long-term capabilities in the face of emerging technologies, equally important is delivering technical solutions to meet the operational needs of the law enforcement customer in a dynamic 'threat' environment. How can technical law enforcement organizations maintain the steady-state production of tools and expertise for technical collection, while infusing ideas and agility into our organizations to improve our ability to deliver timely, relevant, and cutting edge tools to law enforcement customers? Balancing these two fundamentals through an effective business strategy is both a challenge and an opportunity for the Federal Bureau of Investigation (FBI) and other Federal, state, and local law enforcement agencies. ... develop technical tools to combat threats along the Southwest Border. The customer-centric approach calls for a high degree of collaboration among engineers, subject matter experts (SMEs), and the investigator to determine needs and requirements. To encourage innovation, the technologists gain a better understanding of the operational and investigative needs and tailor the technology to fit the end user's challenges. Rather than developing solutions from scratch, the customer centric approach leverages and modifies the technology to fit the customer's needs.

Pipenightdreams Osgcal-Doc Mumudvb Mpg123-Alsa Tbb

pipenightdreams osgcal-doc mumudvb mpg123-alsa tbb-examples libgammu4-dbg gcc-4.1-doc snort-rules-default davical cutmp3 libevolution5.0-cil aspell-am python-gobject-doc openoffice.org-l10n-mn libc6-xen xserver-xorg trophy-data t38modem pioneers-console libnb-platform10-java libgtkglext1-ruby libboost-wave1.39-dev drgenius bfbtester libchromexvmcpro1 isdnutils-xtools ubuntuone-client openoffice.org2-math openoffice.org-l10n-lt lsb-cxx-ia32 kdeartwork-emoticons-kde4 wmpuzzle trafshow python-plplot lx-gdb link-monitor-applet libscm-dev liblog-agent-logger-perl libccrtp-doc libclass-throwable-perl kde-i18n-csb jack-jconv hamradio-menus coinor-libvol-doc msx-emulator bitbake nabi language-pack-gnome-zh libpaperg popularity-contest xracer-tools xfont-nexus opendrim-lmp-baseserver libvorbisfile-ruby liblinebreak-doc libgfcui-2.0-0c2a-dbg libblacs-mpi-dev dict-freedict-spa-eng blender-ogrexml aspell-da x11-apps openoffice.org-l10n-lv openoffice.org-l10n-nl pnmtopng libodbcinstq1 libhsqldb-java-doc libmono-addins-gui0.2-cil sg3-utils linux-backports-modules-alsa-2.6.31-19-generic yorick-yeti-gsl python-pymssql plasma-widget-cpuload mcpp gpsim-lcd cl-csv libhtml-clean-perl asterisk-dbg apt-dater-dbg libgnome-mag1-dev language-pack-gnome-yo python-crypto svn-autoreleasedeb sugar-terminal-activity mii-diag maria-doc libplexus-component-api-java-doc libhugs-hgl-bundled libchipcard-libgwenhywfar47-plugins libghc6-random-dev freefem3d ezmlm cakephp-scripts aspell-ar ara-byte not+sparc openoffice.org-l10n-nn linux-backports-modules-karmic-generic-pae -

openSUSE® – What's New? 13.2 and the Future

openSUSE® – What's New? 13.2 and the Future Richard Brown Chairman openSUSE [email protected] Topics Introduction to openSUSE® openSUSE & SUSE® Linux Enterprise What's new in openSUSE 13.2 Base OS Desktop Server Cloud The Future Introduction to openSUSE® openSUSE® • Open Source Community Project sponsored by SUSE® • Founded 9th August 2005 • “Promotes the use of Linux everywhere” • Produces the openSUSE distribution snapper wicked openQA Evergreen One Project, Three Distributions openSUSE ‒ Regular Releases (Every 8-12 months) ‒ 13.2 just released (4th November)! openSUSE Tumbleweed ‒ 'Rolling Release' (Constant Updates) ‒ Formerly based on latest openSUSE Regular Release ‒ Now a 'pure' rolling release based on openSUSE Factory openSUSE Evergreen ‒ 'Long Term Support' (Patches for extra years) openSUSE® Distribution • Successor to the 'SUSE® Linux' Boxed distribution • Consumer/Enthusiast focus • First release was 'SUSE Linux 10.0' in October 2005 • Renamed 'openSUSE' with 10.2 in December 2006 • openSUSE 13.2 was released on 4th November 2014 ‒ Our 14th release! ‒ Over 7000 packages (+more in OBS) openSUSE® Tumbleweed • Originally a 'rolling release' based on stable releases created by Greg Kroah-Hartman • Merged with the 'Factory' rolling release on November 4th 2014, which leverages OBS and openQA to deliver a 'true' rolling release • Developer/Contributor focus Open Build Service • Free, Open Platform for packaging software for open source distributions • Started in February 2006 • Used to build the openSUSE® distribution