Using Proximal Policy Optimization to Play Bomberman

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

-

Bomberman Story Ds Walkthrough Guide

Bomberman story ds walkthrough guide Continue Comments Share Hudson Soft (JP), Ubisoft (International) One player, multiplayer Japan: May 19, 2005 North America: June 21, 2005 Europe: July 1, 2005 Bomberman is a game in the Bomberman series released in 2005 for the Nintendo DS. One player This game is very similar to the original Bomberman. The player must navigate the mazes and defeat the enemies before finding a way out. In this game, there is more than one item in each level and they are stored in inventory on the second screen. By clicking on the subject with a stylus, the player can activate the item at any time. They may choose to use the items immediately or be more conservative with them. There are 10 worlds each with 10 stages. The last stage is always the boss and there is a bonus stage after the fifth stage. In bonus level, the player is invincible and must defeat all enemies on one of the screens. They can then move to another screen to collect as many items as they can with the remaining time. Worlds/Bosses This section is a stub. You can help by expanding it. Multiplayer this game can wirelessly communicate with other Nintedo DS systems to play multiplayer. You can play a multi-card game or play the same card. There is no difference in gameplay depending on one card or multiple cards to play. With a wireless connection, you can play with up to 8 players at a time. When you die in a multiplayer game, you send to the bottom screen to shoot missiles at other players still alive. -

10-Yard Fight 1942 1943

10-Yard Fight 1942 1943 - The Battle of Midway 2048 (tsone) 3-D WorldRunner 720 Degrees 8 Eyes Abadox - The Deadly Inner War Action 52 (Rev A) (Unl) Addams Family, The - Pugsley's Scavenger Hunt Addams Family, The Advanced Dungeons & Dragons - DragonStrike Advanced Dungeons & Dragons - Heroes of the Lance Advanced Dungeons & Dragons - Hillsfar Advanced Dungeons & Dragons - Pool of Radiance Adventure Island Adventure Island II Adventure Island III Adventures in the Magic Kingdom Adventures of Bayou Billy, The Adventures of Dino Riki Adventures of Gilligan's Island, The Adventures of Lolo Adventures of Lolo 2 Adventures of Lolo 3 Adventures of Rad Gravity, The Adventures of Rocky and Bullwinkle and Friends, The Adventures of Tom Sawyer After Burner (Unl) Air Fortress Airwolf Al Unser Jr. Turbo Racing Aladdin (Europe) Alfred Chicken Alien 3 Alien Syndrome (Unl) All-Pro Basketball Alpha Mission Amagon American Gladiators Anticipation Arch Rivals - A Basketbrawl! Archon Arkanoid Arkista's Ring Asterix (Europe) (En,Fr,De,Es,It) Astyanax Athena Athletic World Attack of the Killer Tomatoes Baby Boomer (Unl) Back to the Future Back to the Future Part II & III Bad Dudes Bad News Baseball Bad Street Brawler Balloon Fight Bandai Golf - Challenge Pebble Beach Bandit Kings of Ancient China Barbie (Rev A) Bard's Tale, The Barker Bill's Trick Shooting Baseball Baseball Simulator 1.000 Baseball Stars Baseball Stars II Bases Loaded (Rev B) Bases Loaded 3 Bases Loaded 4 Bases Loaded II - Second Season Batman - Return of the Joker Batman - The Video Game -

Download 80 PLUS 4983 Horizontal Game List

4 player + 4983 Horizontal 10-Yard Fight (Japan) advmame 2P 10-Yard Fight (USA, Europe) nintendo 1941 - Counter Attack (Japan) supergrafx 1941: Counter Attack (World 900227) mame172 2P sim 1942 (Japan, USA) nintendo 1942 (set 1) advmame 2P alt 1943 Kai (Japan) pcengine 1943 Kai: Midway Kaisen (Japan) mame172 2P sim 1943: The Battle of Midway (Euro) mame172 2P sim 1943 - The Battle of Midway (USA) nintendo 1944: The Loop Master (USA 000620) mame172 2P sim 1945k III advmame 2P sim 19XX: The War Against Destiny (USA 951207) mame172 2P sim 2010 - The Graphic Action Game (USA, Europe) colecovision 2020 Super Baseball (set 1) fba 2P sim 2 On 2 Open Ice Challenge (rev 1.21) mame078 4P sim 36 Great Holes Starring Fred Couples (JU) (32X) [!] sega32x 3 Count Bout / Fire Suplex (NGM-043)(NGH-043) fba 2P sim 3D Crazy Coaster vectrex 3D Mine Storm vectrex 3D Narrow Escape vectrex 3-D WorldRunner (USA) nintendo 3 Ninjas Kick Back (U) [!] megadrive 3 Ninjas Kick Back (U) supernintendo 4-D Warriors advmame 2P alt 4 Fun in 1 advmame 2P alt 4 Player Bowling Alley advmame 4P alt 600 advmame 2P alt 64th. Street - A Detective Story (World) advmame 2P sim 688 Attack Sub (UE) [!] megadrive 720 Degrees (rev 4) advmame 2P alt 720 Degrees (USA) nintendo 7th Saga supernintendo 800 Fathoms mame172 2P alt '88 Games mame172 4P alt / 2P sim 8 Eyes (USA) nintendo '99: The Last War advmame 2P alt AAAHH!!! Real Monsters (E) [!] supernintendo AAAHH!!! Real Monsters (UE) [!] megadrive Abadox - The Deadly Inner War (USA) nintendo A.B. -

5794 Games.Numbers

Table 1 Nintendo Super Nintendo Sega Genesis/ Master System Entertainment Sega 32X (33 Sega SG-1000 (68 Entertainment TurboGrafx-16/PC MAME Arcade (2959 Games) Mega Drive (782 (281 Games) System/NES (791 Games) Games) System/SNES (786 Engine (94 Games) Games) Games) Games) After Burner Ace of Aces 3 Ninjas Kick Back 10-Yard Fight (USA, Complete ~ After 2020 Super 005 1942 1942 Bank Panic (Japan) Aero Blasters (USA) (Europe) (USA) Europe) Burner (Japan, Baseball (USA) USA) Action Fighter Amazing Spider- Black Onyx, The 3 Ninjas Kick Back 1000 Miglia: Great 10-Yard Fight (USA, Europe) 6-Pak (USA) 1942 (Japan, USA) Man, The - Web of Air Zonk (USA) 1 on 1 Government (Japan) (USA) 1000 Miles Rally (World, set 1) (v1.2) Fire (USA) 1941: Counter 1943 Kai: Midway Addams Family, 688 Attack Sub 1943 - The Battle of 7th Saga, The 18 Holes Pro Golf BC Racers (USA) Bomb Jack (Japan) Alien Crush (USA) Attack Kaisen The (Europe) (USA, Europe) Midway (USA) (USA) 90 Minutes - 1943: The Battle of 1944: The Loop 3 Ninjas Kick Back 3-D WorldRunner Borderline (Japan, 1943mii Aerial Assault (USA) Blackthorne (USA) European Prime Ballistix (USA) Midway Master (USA) (USA) Europe) Goal (Europe) 19XX: The War Brutal Unleashed - 2 On 2 Open Ice A.S.P. - Air Strike 1945k III Against Destiny After Burner (World) 6-Pak (USA) 720 Degrees (USA) Above the Claw Castle, The (Japan) Battle Royale (USA) Challenge Patrol (USA) (USA 951207) (USA) Chaotix ~ 688 Attack Sub Chack'n Pop Aaahh!!! Real Blazing Lazers 3 Count Bout / Fire 39 in 1 MAME Air Rescue (Europe) 8 Eyes (USA) Knuckles' Chaotix 2020 Super Baseball (USA, Europe) (Japan) Monsters (USA) (USA) Suplex bootleg (Japan, USA) Abadox - The Cyber Brawl ~ AAAHH!!! Real Champion Baseball ABC Monday Night 3ds 4 En Raya 4 Fun in 1 Aladdin (Europe) Deadly Inner War Cosmic Carnage Bloody Wolf (USA) Monsters (USA) (Japan) Football (USA) (USA) (Japan, USA) 64th. -

Nintendo NES

Nintendo NES Last Updated on September 24, 2021 Title Publisher Qty Box Man Comments 10-Yard Fight: 5 Screw - System TM Nintendo 10-Yard Fight: 3 Screw Nintendo 10-Yard Fight: 5 Screw Nintendo 10-Yard Fight: 3 Screw - Part No. Nintendo 1007 Bolts Neodolphino 1942: 5 Screw Capcom 1942: 3 Screw - Round Seal Capcom 1942: 3 Screw - Oval Seal Capcom 1943: The Battle of Midway: Round Seal Capcom 1943: The Battle of Midway: Oval Seal Capcom 3-D WorldRunner: 5 Screw Acclaim 3-D WorldRunner: 3 Screw Acclaim 6 in 1 Caltron 720°: Seal ™ Mindscape 720°: Seal ® Mindscape 8 Bit Xmas 2012 RetroZone 8 Eyes Taxan Abadox: The Deadly Inner War Milton Bradley Action 52: Green Board - Clear Label Active Enterprises Action 52: Black Board Active Enterprises Action 52: Green Board - Blue Label Active Enterprises Action 53 Vol. 3: Revenge of the Twins Infinite NES Lives Action 53 Vol. 3: Revenge of the Twins: Limited Edition Infinite NES Lives Action 53 Vol. 3: Revenge of the Twins: Famicom Cart Infinite NES Lives Action 53 Vol. 
3: Revenge of the Twins: Contributor Cart Infinite NES Lives Addams Family, The Ocean Addams Family, The: Pugsley's Scavenger Hunt Ocean Advanced Dungeons & Dragons: DragonStrike FCI Advanced Dungeons & Dragons: Heroes of the Lance FCI Advanced Dungeons & Dragons: Hillsfar FCI Advanced Dungeons & Dragons: Pool of Radiance FCI Adventure in Numberland, Mickey's Hi-Tech Expressions Adventure Island 3 Hudson Soft Adventure Island II Hudson Soft Adventure Island, Hudson's: Round Seal Hudson Soft Adventure Island, Hudson's: Oval Seal Hudson Soft Adventures in the Magic Kingdom, Disney's Capcom Adventures of Bayou Billy, The Konami Adventures of Dino Riki, The Hudson Soft Adventures of Gilligan's Island, The Bandai Adventures of Lolo HAL America Adventures of Lolo 2 HAL America Adventures of Lolo 3 HAL America Adventures of Rad Gravity, The Activision Adventures of Rocky and Bullwinkle and Friends, The THQ Adventures of Tom Sawyer Seta After Burner Tengen Air Fortress HAL America Airball RetroZone Airwolf Acclaim Al Unser Jr. -

1942 10-Yard Fight 1943 : the Battle of Midway 720 Degrees 8 Eyes A

1942 10-Yard Fight 1943 : The Battle of Midway 720 Degrees 8 Eyes A Boy and his Blob : Trouble on Blobolonia A Nightmare on Elm Street Abadox : The Deadly Inner War Advanced Dungeons & Dragons - Dragon Strike Advanced Dungeons & Dragons - Heroes of the Lance Advanced Dungeons & Dragons - Hillsfar Advanced Dungeons & Dragons - Pool of Radiance Adventure Island Adventure Island 3 Adventure Island II Adventures In The Magic Kingdom Adventures of Lolo Adventures of Lolo 2 Adventures of Lolo 3 Adventures of Tom Sawyer Air Fortress Airwolf Al Unser Jr. Turbo Racing Alfred Chicken Alien 3 All-Pro Basketball Alpha Mission Amagon American Gladiators Anticipation Arch Rivals : A Basketbrawl! Archon - The Light And The Dark Arkanoid Arkista's Ring Astyanax Athena Attack of the Killer Tomatoes Back To The Future Back to the Future Part II & III Bad Dudes Bad News Baseball Bad Street Brawler Balloon Fight Bandai Golf : Challenge Pebble Beach Bandit Kings of Ancient China Barbie Barker Bill's Trick Shooting Baseball Baseball Simulator 1.000 Baseball Stars Baseball Stars II Bases Loaded Bases Loaded 3 Bases Loaded 4 Bases Loaded II : Second Season Batman : Return of the Joker Batman : The Video Game Batman Returns Battle Chess Battleship Battletoads Battletoads & Double Dragon Beetlejuice Best of the Best : Championship Karate Bigfoot Bill & Ted's Excellent Video Game Adventure Bill Elliott's NASCAR Challenge Bionic Commando Blades of Steel Blaster Master Bo Jackson Baseball Bomberman Bomberman II Bonk's Adventure Boulder Dash Bram Stoker's Dracula -

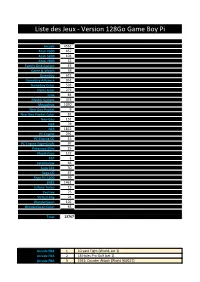

Liste Des Jeux - Version 128Go Game Boy Pi

Liste des Jeux - Version 128Go Game Boy Pi Arcade 4562 Atari 2600 457 Atari 5200 101 Atari 7800 52 Family Disk System 43 Game & Watch 58 Gameboy 621 Gameboy Advance 951 Gameboy Color 502 Game Gear 277 Lynx 84 Master System 373 Megadrive 1030 Neo-Geo Pocket 9 Neo-Geo Pocket Color 81 Neo-Geo 152 N64 78 NES 1822 PC-Engine 291 PC-Engine CD 15 PC-Engine SuperGrafx 97 Pokemon Mini 25 Playstation 122 PSP 2 Satellaview 66 Sega 32X 30 Sega CD 47 Sega SG-1000 59 SNES 1461 Sufami Turbo 15 Vectrex 75 Virtual Boy 24 WonderSwan 102 WonderSwan Color 83 Total 13767 Arcade FBA 1 10-yard Fight (World, set 1) Arcade FBA 2 18 Holes Pro Golf (set 1) Arcade FBA 3 1941: Counter Attack (World 900227) Arcade FBA 4 1942 (Revision B) Arcade FBA 5 1943 Kai: Midway Kaisen (Japan) Arcade FBA 6 1943: The Battle of Midway (Euro) Arcade FBA 7 1943: The Battle of Midway Mark II (US) Arcade FBA 8 1943mii Arcade FBA 9 1944 : The Loop Master (USA 000620) Arcade FBA 10 1944: The Loop Master (USA 000620) Arcade FBA 11 1945k III Arcade FBA 12 1945k III (newer, OPCX2 PCB) Arcade FBA 13 19XX : The War Against Destiny (USA 951207) Arcade FBA 14 19XX: The War Against Destiny (USA 951207) Arcade FBA 15 2020 Super Baseball Arcade FBA 16 2020 Super Baseball (set 1) Arcade FBA 17 3 Count Bout Arcade FBA 18 3 Count Bout / Fire Suplex (NGM-043)(NGH-043) Arcade FBA 19 3x3 Puzzle (Enterprise) Arcade FBA 20 4 En Raya (set 1) Arcade FBA 21 4 Fun In 1 Arcade FBA 22 4-D Warriors (315-5162) Arcade FBA 23 4play Arcade FBA 24 64th. -

Games List32.Pdf

games list 32 GB Freeway Thrust Frogger Thunderground Frogs and Flies Time Pilot ATARI 2600 Frostbite Time Warp Galaxian by Jess Ragan Tomcat - The F-14 Flight Simulator Acid Drop Ghostbusters Tooth Protectors Adventure G.I. Joe - Cobra Strike Towering Inferno Adventures of Tron Glacier Patrol Track and Field Airlock Glib Trick Shot Air Raid Golf Tunnel Runner Air-Sea Battle Gopher Turmoil Alien Grand Prix UFO Patrol Amidar Gravitar Universal Chaos Aquaventure Guardian Wall Ball Assault Halloween Wall Defender Asteroids Haunted House Wing War Astroblast Hell Driver Winter Games Astrowar Home Run Zaxxon Atlantis Ice Hockey Backgammon Indy 500 Bank Heist Infiltrate Baseball James Bond 007 ATARI 7800 Basic Programming Jawbreaker Basketball Journey - Escape Ace of Aces Battlezone Jr. Pac-Man Asteroids Beamrider Kangaroo Centipede Beany Bopper Karate Donkey Kong Beat ‘Em and Eat ‘Em King Kong Fatal Run Berzerk Knight on the Town Galaga Blackjack Kool Aid Man Kung Fu Master Blueprint Kung Fu Master Pole Position II BMX Air Master Malagai Bowling Mangia’ Boxing Marauder GAMEBOY ADVANCE Breakout - Breakaway IV Marine Wars Bumper Bash Mario Bros Bump ‘N’ Jump Master Builder Castlevania - Aria of Sorrow Burgertime Mega Force Donkey Kong Country Cakewalk Megamania Dragon Ball Z - Buu’s Fury California Games Missile Command Dragon Ball Z - The Legacy of Goku II Canyon Bomber Missile Control Final Fantasy I & II - Dawn of Souls Cat Trax Mr. Pac-Man Final Fantasy V Advance Centipede Night Driver Final Fantasy VI Advance Challenge of...NEXAR Nightmare Fire Emblem Championship Soccer No Escape! Golden Sun Checkers Pac Kong Grand Theft Auto Advance Chopper Command Pac-Man Harvest Moon - Friends of Mineral Town Chuck Norris Superkicks Phoenix Kingdom Hearts - Chain of Memories Circus Atari Pitfall! Mario And Luigi Superstar Saga Commando Pitfall II - Lost Caverns Mario Kart - Super Circuit Commando Raid Pole Position Mario Vs. -

Atari Lynx Ocean's Robocop Bombs Away!

RG18 Cover UK.qxd:RG18 Cover UK.qxd 20/9/06 17:32 Page 1 retro gamer COMMODORE SEGA NINTENDO ATARI SINCLAIR ARCADE * VOLUME TWO ISSUE SIX Atari Lynx Power in the palm of your hand Ocean’s Robocop I’d buy that for a dollar! Bombs Away! Bomberman blasts back Little Computer People Creator David Crane interviewed Retro Gamer 18 £5.99 UK $14.95 AUS V2 $27.70 NZ 06 Untitled-1 1 1/9/06 12:55:47 RG18 Intro/Contents.qxd:RG18 Intro/Contents.qxd 20/9/06 17:40 Page 3 <EDITORIAL> Editor = Martyn Carroll ([email protected]) Deputy Editor = Aaron Birch ([email protected]) Art Editor = Craig Chubb Sub Editors = Rachel White + James Clark Contributors = Richard Burton + David Crookes Paul Drury + Frank Gasking Peter Latimer + Craig Lewis Robert Mellor +PerArne Sandvik Spanner Spencer + John Szczepaniak The Boy Warde + Kim Wild <PUBLISHING & ADVERTISING> Operations Manager = Glen Urquhart Group Sales Manager = Linda Henry Advertising Sales = Danny Bowler Accounts Manager = Karen Battrick Circulation Manager = elcome to another editorial, then that’s exactly Zzap!64 tribute magazine (see Steve Hobbs feature-packed what it is. Subscriptions really page 13 if you’re not sure what Marketing Manager = helloissue of Retro are the lifeblood of any I’m blabbering on about). What’s Iain "Chopper" Anderson Editorial Director = Gamer. I’d like to magazine, and they keep Retro more, we have similar Wayne Williams W start this issue by Gamer ticking along nicely, promotions lined up over the Publisher = pointing out our new especially during the rather coming months… Robin Wilkinson subscriptions offer. -

Maze Spill Liste : Stem P㥠Dine Favoritter

Maze Spill Liste Pac-Man https://no.listvote.com/lists/games/pac-man-2626874 Crazy Arcade https://no.listvote.com/lists/games/crazy-arcade-489918 Digger https://no.listvote.com/lists/games/digger-1224616 The Return of Ishtar https://no.listvote.com/lists/games/the-return-of-ishtar-7760408 Mr. Do! https://no.listvote.com/lists/games/mr.-do%21-1557589 Robin of the Wood https://no.listvote.com/lists/games/robin-of-the-wood-2159940 Pac-Man Vs. https://no.listvote.com/lists/games/pac-man-vs.-3282474 Jr. Pac-Man https://no.listvote.com/lists/games/jr.-pac-man-1572086 Alien https://no.listvote.com/lists/games/alien-3611745 Batman (1990 video game) https://no.listvote.com/lists/games/batman-%281990-video-game%29-2891476 Cratermaze https://no.listvote.com/lists/games/cratermaze-5182648 https://no.listvote.com/lists/games/ms.-pac-man%3A-quest-for-the-golden- Ms. Pac-Man: Quest for the Golden Maze maze-6930104 Pac-Man: Adventures in Time https://no.listvote.com/lists/games/pac-man%3A-adventures-in-time-7121841 Lady Bug https://no.listvote.com/lists/games/lady-bug-1800074 Hover Bovver https://no.listvote.com/lists/games/hover-bovver-1631626 Traxx https://no.listvote.com/lists/games/traxx-7836476 Perplexity https://no.listvote.com/lists/games/perplexity-7169579 Nibbler https://no.listvote.com/lists/games/nibbler-3339529 Jack the Giantkiller https://no.listvote.com/lists/games/jack-the-giantkiller-16259705 Fast Food https://no.listvote.com/lists/games/fast-food-1655991 Dandy https://no.listvote.com/lists/games/dandy-5215764 Munchkin https://no.listvote.com/lists/games/munchkin-652549 -

NES Nintendo Bomberman Bomber Man II 2 Item # 3011568157 Consumer Electronics:Video Games:Nintendo NES:Games:Action, Adventure

NES Nintendo Bomberman Bomber man II 2 Item # 3011568157 Consumer Electronics:Video Games:Nintendo NES:Games:Action, Adventure Current bid Starting US $1.00 US $1.00 bid Quantity 1 # of bids 0 Bid history Time left 9 days, 21 hours + Location P. Lakes, NJ 07442 Country United States Started Mar-04-03 17:18:44 PST Mail this auction to a friend Ends Mar-14-03 17:18:44 PST Watch this item portnoyd (9) Feedback rating: 9 with 90.9% positive feedback reviews (Read all reviews) Seller (rating) Member since: Jan-02-03. Registered in United States View seller's other items | Ask seller a question | Safe Trading Tips High bidder -- Payment Money order/cashiers check, or see item description for payment methods accepted. Shipping Buyer pays for all shipping costs. Will ship to United States only. Seller Revise item | Sell similar item services Item Revised To review revisions made to this item by the seller, click here . Description Up for auction is a copy of Bomberman II, loose, for the NES! Bomberman II is the only game for sale, the following is NOT for sale: Stadium Events, Rodland, a Genesis Blockbuster Championship II Cart, a Final Fantasy III manual, 4 Metal Gear Solid promo cards, an old Western Digital 3 gig Hard drive (Chock full of naughty bits), a broken Casio Cassiopeia E-100, an old Nokia 6860 dummy phone, a cassette copy of Weird Al's "Even Worse", an Ancient Chinese Master, my Zippo lighter, the world's most unlucky D&D dice (two sets), a small bottle of Strawberry flavored vodka, a semiused C battery, a sewing kit, Billy Mays's GOPHER DELUXE, a tin of Cinnamon Altoids, a copy of Forrest Gump, read 1/2 times, 4 airline cookies, a deck of Luxor Las Vegas playing cards, an Arizona bell, an empty bottle of BUTTHEAD root beer, a Darth Maul action figure in a Monkey Mug, a 8 ball bouncy ball, Mad Magazine Toilet Paper, a Blockbuster receipt from 2 years ago (Yet another used DVD purchase.. -

Video Game Trader Magazine | April 2008 |

$4.99 US / $6.99 Canada Issue #4—April 2008 Published by www.VideoGameTrader.com 1 | Video Game Trader Magazine | April 2008 | www.VideoGameTrader.com 2 | Video Game Trader Magazine | April 2008 | www.VideoGameTrader.com P. Ian Nicholson Publisher / Editor in Chief a.k.a VectrexMad What’s inside #4? Thomas Sansone Only a recent retro gamer, Ian is Vectrex- Mad! And you can usually find him making Price Guide Research Team comment on the rec.games.vectrex news- System Profile—Atari 2600 Thomas Sansone group. When he is not playing on his 5 Vectrex, he is on a continual quest to find 6 Nolan Bushnell: Entrepreneur, Pioneer… out new information on everything Vectrex Video Game Trader Staff Legend related and has just recently set up a Jim Combs website www.vectrex.co.uk to capture this. 11 Don’t Throw Away that Old TV, Your Clas- Jim is a hardcore passionate gamer. You sic Console will Hate You! Review Staff can find his works in the Digital Press 12 I Have a confession to make... Collector's Guide Advance Edition & Tips & Tricks Magazine. In 2005, Jim was Agents J & K 14 Full Circle recognized at CG Expo as their special Agents J and K are part of the dynamic Video Game Historian Alumni guest. duo known for nesarchives.com. However, 16 Do Sex & Video Games Mix? little is known about either one of these 28 Game over…. Continue? Dan M avid NESers. After you are done reading Dan's been playing video games since he VGT, head on over to their website was panel high to an Asteroids machine (www.nesarchives.com) and read their but especially loves the "16 Bit Wars" of amazing NES reviews.