Software Engineering and Human-Computer Interaction

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

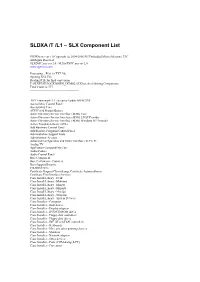

SLDXA /T /L1 – SLX Component List

SLDXA /T /L1 – SLX Component List SLDXA.exe ver 1.0 Copyright (c) 2004-2006 SJJ Embedded Micro Solutions, LLC All Rights Reserved SLXDiffC.exe ver 2.0 / SLXtoTXTC.exe ver 2.0 www.sjjmicro.com Processing... File1 to TXT file. Opening XSL File Reading RTF for final conversion F:\SLXTEST\LOCKDOWN_DEMO2.SLX has the following Components Total Count is: 577 -------------------------------------------------- .NET Framework 1.1 - Security Update KB887998 Accessibility Control Panel Accessibility Core ACPI Fixed Feature Button Active Directory Service Interface (ADSI) Core Active Directory Service Interface (ADSI) LDAP Provider Active Directory Service Interface (ADSI) Windows NT Provider Active Template Library (ATL) Add Hardware Control Panel Add/Remove Programs Control Panel Administration Support Tools Administrator Account Advanced Configuration and Power Interface (ACPI) PC Analog TV Application Compatibility Core Audio Codecs Audio Control Panel Base Component Base Performance Counters Base Support Binaries CD-ROM Drive Certificate Request Client & Certificate Autoenrollment Certificate User Interface Services Class Install Library - Desk Class Install Library - Mdminst Class Install Library - Mmsys Class Install Library - Msports Class Install Library - Netcfgx Class Install Library - Storprop Class Install Library - System Devices Class Installer - Computer Class Installer - Disk drives Class Installer - Display adapters Class Installer - DVD/CD-ROM drives Class Installer - Floppy disk controllers Class Installer - Floppy disk drives -

(12) United States Patent (10) Patent No.: US 8,074,184 B2 Garside Et Al

US008074184B2 (12) United States Patent, Garside et al. (10) Patent No.: US 8,074,184 B2. (45) Date of Patent: Dec. 6, 2011. (54) MODIFYING ELECTRONIC DOCUMENTS WITH RECOGNIZED CONTENT OR OTHER ASSOCIATED DATA. (75) Inventors: Adrian James Garside, Sammamish, WA (US); David Vaughn Winkler, Seattle, WA (US); Joshua Clow, Bellevue, WA (US). (73) Assignee: Microsoft Corporation, Redmond, WA (US). (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 933 days. (21) Appl. No.: 10/703,081. (22) Filed: Nov. 7, 2003. (65) Prior Publication Data: US 2005/0099398 A1, May 12, 2005. Patents cited: 6,065,026 A * 5/2000 Cornelia et al. 715/531; 6,081,814 A * 6/2000 Mangat et al. 715/205; 6,108,272 A * 8/2000 Fox 367/131; 6,111,985 A * 8/2000 Hullender et al. 382/229; 6,340,967 B1 * 1/2002 Maxted 345/179; 6,411,733 B1 * 6/2002 Saund 382/190; 6,493,464 B1 * 12/2002 Hawkins et al. 382/189; 6,766,494 B1 * 7/2004 Price et al. 715/203; 6,836,759 B1 * 12/2004 Williamson et al. 704/235; 6,903,751 B2 * 6/2005 Saund et al. 345/619; 7,283,670 B2 * 10/2007 Wakeam et al. 382/186; 7,468,801 B2 * 12/2008 Wakeam et al. 358/114; 7,502,805 B2 * 3/2009 Wakeam et al. 1/1 (Continued). OTHER PUBLICATIONS: ask-search-q linked+Support+document&search=&qsrc=0, Aug. 14, 2011.* (Continued). Primary Examiner: Boris Pesin. Assistant Examiner: John Heffington. (74) Attorney, Agent, or Firm: Shook, Hardy & Bacon. (51) Int.

A Chinese Mobile Phone Input Method Based on the Dynamic and Self-Study Language Model

A Chinese Mobile Phone Input Method Based on the Dynamic and Self-study Language Model. Qiaoming Zhu, Peifeng Li, Gu Ping, and Qian Peide, School of Computer Science & Technology of Soochow University, Suzhou, 215006 {qmzhu, pfli, pgu, pdqian}@suda.edu.cn Abstract. This paper briefly introduces a Chinese digital input method named CKCDIM (CKC Digital Input Method) and applies it to the Symbian OS as an example. It also proposes an input method framework that adopts a Client/Server architecture for handheld computers. To improve the performance of CKCDIM, the paper puts forward a dynamic and self-study language model, which is based on a general language model and a user language model, and proposes two indexes to measure the performance of the input method: the average number of pressed keys (ANPK) and the hit rate of first characters (HRFC). The paper also presents a modified Church-Gale smoothing method that reduces the size of the general language model to meet the needs of mobile phones. Finally, the experiments show that the dynamic and self-study language model is a steady model and can improve the performance of CKCDIM. Keywords: Chinese Digital Input Method, Architecture of Input Method, Dynamic and Self-study Language Model, HRFC, ANPK. 1 Introduction. With the development of communication technology and the popularization of the mobile phone in China, the use of text messages on mobile phones is growing rapidly. According to a CCTV financial news report, the total number of Short Message Service messages in China will grow from 300 billion in 2005 to 450 billion in 2006.

Development of a Windows Device Driver for the Nintendo Wii Remote Entwicklung Eines Windows Treibers Für Die Nintendo Wii Remote

Development of a Windows Device Driver for the Nintendo Wii Remote (Entwicklung eines Windows Treibers für die Nintendo Wii Remote). Julian Löhr, School of Informatics, SRH Hochschule Heidelberg, Heidelberg, Germany. Abstract: This paper is about the development of a device driver for the Nintendo Wii Remote on Windows PCs. Keywords: Windows driver development, Wii Remote, human interface device, game controller, Bluetooth. I. INTRODUCTION. Many PC games support game controllers. The Nintendo Wii Remote is a wireless controller for the Nintendo Wii console and the Nintendo Wii U console. It features several buttons, acceleration sensors and an infrared sensor. Furthermore, it is possible to expand the controller via an additional port with various attachments. Those attachments are, for example, the Nunchuk, a controller with additional buttons and … The Wii Remote uses Bluetooth for its wireless communication and is thereby connectable with a PC[1]. Windows does recognize the Wii Remote as a game controller, but as shown in Fig. 1 no inputs are exposed. Therefore it is not usable without third-party support. There are various programs that enable the Wii Remote to be used within video games, but all of them just map the inputs to keyboard keys[2]. This is useful for some single-player games, but does not support analog input[2]. Additionally, if multiple controllers are needed, e.g. for local multiplayer games like FIFA, this solution is not sufficient[2]. So the objective is to develop a device driver to enable it as a native game controller.

2 - Player Input Handling Csc 165 Computer Game Architecture Overview

CSc 165 Computer Game Architecture, Lecture Notes 2 - Player Input Handling

Overview

- Device Types
- Device Abstractions
- Controllers
- Input Handling Packages
- Event Queues
- Input Action Commands (making the inputs do things)

Types of Input Devices

- Keyboard
- Mouse
- Joystick ("POV")
- "POV Hat Switch"
- Gamepad
- Paddle
- Steering Wheel
- Dance Pad
- Guitar
- WiiMote
- Kinect
- others?

Input Handling Goals

- Keep games device-independent: the game shouldn't contain hard-coded device details, and shouldn't fail when a particular device is absent (allow substitution).
- Keep the engine device-independent: engine components should not contain hard-coded device details.
- … isolate details in an Input Manager.

Device Abstractions

Two fundamental device types:

- Button: returns pressed or not pressed, frequently represented as 1.0 or 0.0.
- Axis: returns a float. There are two types of Axis. Continuous returns a value in a range, e.g. { -1 … 1 } or { 0 … 1 }. Discrete returns a value from a set, e.g. [ 0, 1 ] or [ -1, 0, 1 ]. An axis can be absolute or relative.

"D-pad" (Directional-pad) Axes

D-pads are discrete axis devices and can have either one or two axes.

- Single-axis form: one component; returns one value: N = 0, UL = .125, U = .25, UR = .375, R = .5, DR = .625, D = .75, DL = .875, L = 1.0.
- Dual-axis form: two components; each returns a value. X: L = -1, N = 0, R = +1. Y: D = -1, N = 0, U = +1.

Controllers; Controller Example: GamePad

Most "devices" are really collections:

- Keyboard: collection of (e.g.
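The two D-pad forms listed in this excerpt carry the same information, so one can be converted to the other. The following is a minimal Python sketch of that conversion, assuming the single-axis values quoted in the excerpt (N = 0 through L = 1.0 in steps of .125). The names `SINGLE_AXIS_TO_DIRECTION`, `direction_to_xy`, and `single_axis_to_xy` are hypothetical and do not come from the lecture notes or from any input library.

```python
# Hypothetical helper names; the value table is the one quoted in the excerpt.
SINGLE_AXIS_TO_DIRECTION = {
    0.0: "N", 0.125: "UL", 0.25: "U", 0.375: "UR", 0.5: "R",
    0.625: "DR", 0.75: "D", 0.875: "DL", 1.0: "L",
}


def direction_to_xy(direction):
    """Split a direction label into dual-axis (x, y) values in {-1, 0, +1}."""
    x = (1 if "R" in direction else 0) - (1 if "L" in direction else 0)
    y = (1 if "U" in direction else 0) - (1 if "D" in direction else 0)
    return x, y


def single_axis_to_xy(value):
    """Convert a single-axis D-pad reading to the dual-axis form.

    The reading is snapped to the nearest multiple of 1/8 first, so small
    floating-point noise does not cause a lookup failure.
    """
    snapped = round(value * 8) / 8
    return direction_to_xy(SINGLE_AXIS_TO_DIRECTION[snapped])


if __name__ == "__main__":
    for reading in (0.0, 0.125, 0.5, 0.75, 1.0):
        print(reading, single_axis_to_xy(reading))
```

Keeping this mapping in one place is in the spirit of the stated goal of isolating device details in an input manager: game code can then work with the (x, y) form regardless of which form a particular device reports.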

Beginning .NET Game Programming in C#

Beginning .NET Game Programming in C# DAVID WELLER, ALEXANDRE SANTOS LOBAO, AND ELLEN HATTON APress Media, LLC Beginning .NET Game Programming in C# Copyright ©2004 by David Weller, Alexandre Santos Lobao, and Ellen Hatton Originally published by APress in 2004 All rights reserved. No part of this work may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage or retrieval system, without the prior written permission of the copyright owner and the publisher. ISBN 978-1-59059-319-6 ISBN 978-1-4302-0721-4 (eBook) DOI 10.1007/978-1-4302-0721-4 Trademarked names may appear in this book. Rather than use a trademark symbol with every occurrence of a trademarked name, we use the names only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark. Technical Reviewers: Andrew Jenks, Kent Sharkey, Tom Miller Editorial Board: Steve Anglin, Dan Appleman, Gary Cornell, James Cox, Tony Davis, John Franklin, Chris Mills, Steve Rycroft, Dominic Shakeshaft, Julian Skinner, Jim Sumser, Karen Watterson, Gavin Wray, John Zukowski Assistant Publisher: Grace Wong Project Manager: Sofia Marchant Copy Editor: Ami Knox Production Manager: Kari Brooks Production Editor: Janet Vail Proofreader: Patrick Vincent Compositor: ContentWorks Indexer: Rebecca Plunkett Artist: Kinetic Publishing Services, LLC Cover Designer: Kurt Krames Manufacturing Manager: Tom Debolski The information in this book is distributed on an "as is" basis, without warranty. Although every precaution has been taken in the preparation of this work, neither the author(s) nor Apress shall have any liability to any person or entity with respect to any loss or damage caused or alleged to be caused directly or indirectly by the information contained in this work.

Microsoft Palladium

Microsoft Palladium: A Business Overview Combining Microsoft Windows Features, Personal Computing Hardware, and Software Applications for Greater Security, Personal Privacy, and System Integrity by Amy Carroll, Mario Juarez, Julia Polk, Tony Leininger Microsoft Content Security Business Unit June 2002 Legal Notice This is a preliminary document and may be changed substantially prior to final commercial release of the software described herein. The information contained in this document represents the current view of Microsoft Corporation on the issues discussed as of the date of publication. Because Microsoft must respond to changing market conditions, it should not be interpreted to be a commitment on the part of Microsoft, and Microsoft cannot guarantee the accuracy of any information presented after the date of publication. This White Paper is for informational purposes only. MICROSOFT MAKES NO WARRANTIES, EXPRESS OR IMPLIED, AS TO THE INFORMATION IN THIS DOCUMENT. Complying with all applicable copyright laws is the responsibility of the user. Without limiting the rights under copyright, no part of this document may be reproduced, stored in or introduced into a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopying, recording, or otherwise), or for any purpose, without the express written permission of Microsoft Corporation. Microsoft may have patents, patent applications, trademarks, copyrights, or other intellectual property rights covering subject matter in this document. Except as expressly provided in any written license agreement from Microsoft, the furnishing of this document does not give you any license to these patents, trademarks, copyrights, or other intellectual property. 
Unless otherwise noted, the example companies, organizations, products, domain names, e-mail addresses, logos, people, places and events depicted herein are fictitious, and no association with any real company, organization, product, domain name, e-mail address, logo, person, place or event is intended or should be inferred. -

Metadefender Core V4.19.0

MetaDefender Core v4.19.0 © 2019 OPSWAT, Inc. All rights reserved. OPSWAT®, MetadefenderTM and the OPSWAT logo are trademarks of OPSWAT, Inc. All other trademarks, trade names, service marks, service names, and images mentioned and/or used herein belong to their respective owners. Table of Contents About This Guide 14 Key Features of MetaDefender Core 15 1. Quick Start with MetaDefender Core 16 1.1. Installation 16 Basic setup 16 1.1.1. Configuration wizard 16 1.2. License Activation 22 1.3. Process Files with MetaDefender Core 22 2. Installing or Upgrading MetaDefender Core 23 2.1. Recommended System Configuration 23 Microsoft Windows Deployments 24 Unix Based Deployments 26 Data Retention 28 Custom Engines 28 Browser Requirements for the Metadefender Core Management Console 28 2.2. Installing MetaDefender 29 Installation 29 Installation notes 29 2.2.1. MetaDefender Core 4.18.0 or older 30 2.2.2. MetaDefender Core 4.19.0 or newer 33 2.3. Upgrading MetaDefender Core 38 Upgrading from MetaDefender Core 3.x to 4.x 38 Upgrading from MetaDefender Core older version to 4.18.0 (SQLite) 38 Upgrading from MetaDefender Core 4.18.0 or older (SQLite) to 4.19.0 or newer (PostgreSQL): 39 Upgrading from MetaDefender Core 4.19.0 to newer (PostgreSQL): 40 2.4. MetaDefender Core Licensing 41 2.4.1. Activating Metadefender Licenses 41 2.4.2. Checking Your Metadefender Core License 46 2.5. Performance and Load Estimation 47 What to know before reading the results: Some factors that affect performance 47 How test results are calculated 48 Test Reports 48 2.5.1. -

Creating Force Feedback Using C++ Programming Language and Actuators

Creating Force Feedback using C++ programming language and Actuators. Behdad Rashidian, Design Team 5 (Smart Voting Joystick for Accessible Voting Machines), April 4, 2013. Facilitator: Dr. John Deller. Abstract: The purpose of this application note is to help the user create the desired force feedback on the different axes of a joystick. This document covers programming the force feedback and how the actuators work, based on the provided code and the H-Bridge-based circuit designed for the motors. Actuator motors are discussed in detail to give a better perspective on the design of the force feedback. This note also includes relevant schematics and figures to provide the user with visual references to the mentioned components and to minimize misinterpretation. Keywords: Actuators, C# programming, Motors, Force Feedback, Axis, Joystick, DirectX, DirectInput, H-Bridge circuit. Introduction: Many varieties of input devices and control interfaces have been developed for powered joysticks to satisfy the diverse needs of disabled people. However, some people with severe motor disabilities cannot use these powered joysticks because they do not provide the desired force feedback. Force feedback is needed in order to control the range of joystick movements. Moving through different selections in a webpage requires a constant type of force feedback, which helps a user with motor disabilities move more easily among selections. In this application note we will discuss developing a program that allows the user to have this constant force, using the DirectX library. H-Bridge circuit introduction: An H-bridge circuit enables a voltage to be applied across a load in either direction.


[ EVEREST Ultimate Edition ]

[ EVEREST Ultimate Edition ]

- Version: EVEREST v4.50.1330/id
- Benchmark Module: 2.3.224.0
- Home page: http://www.lavalys.com/
- Report Type: Report Wizard
- Computer: TANSISCOTBK09K
- Generator: Nain
- Operating System: Microsoft Windows XP Professional 5.1.2600 (WinXP Retail)
- Date: 20110510
- Time: 06:23

[ Summary ]

Computer:

- Computer Type: ACPI Multiprocessor PC
- Operating System: Microsoft Windows XP Professional
- OS Service Pack: Service Pack 3
- Internet Explorer: 6.0.2900.5512
- DirectX: 4.09.00.0904 (DirectX 9.0c)
- Computer Name: TANSISCOTBK09K
- User Name: Nain
- Logon Domain: TANSISCOTBK09K
- Date / Time: 20110510 / 06:23

Motherboard:

- CPU Type: Intel Pentium 4, 3000 MHz (15 x 200)
- Motherboard Name: Dell OptiPlex GX270
- Motherboard Chipset: Intel SpringdaleG i865G
- System Memory: 512 MB (DDR SDRAM)
- DIMM2: Infineon HYS64D32000GU7, 256 MB PC2100 DDR SDRAM (2.5337 @ 142 MHz) (2.0336 @ 133 MHz)
- DIMM3: Hynix HYMD216 646D6JD43, 128 MB PC3200 DDR SDRAM (3.0338 @ 200 MHz) (2.5337 @ 166 MHz) (2.0226 @ 133 MHz)
- DIMM4: Hynix HYMD216 646D6JD43, 128 MB PC3200 DDR SDRAM (3.0338 @ 200 MHz) (2.5337 @ 166 MHz) (2.0226 @ 133 MHz)
- BIOS Type: Phoenix (05/17/04)
- Communication Port: Communications Port (COM 1)
- Communication Port: ECP Printer Port (LPT1)

Display:

- Video Adapter: RADEON 9600 Series Secondary (256 MB)
- Video Adapter: RADEON 9600 Series (256 MB)
- 3D Accelerator: ATI Radeon 9600 (RV350)
- Monitor: NEC V520 [15" CRT] (2Y 00657TB)

Multimedia:

- Audio Adapter: Analog Devices AD1981B(L) @ Intel 82801EB ICH5 AC'97 Audio Controller [A2/A3]
- Audio Adapter: BrookTree Bt878 Video Capture Device Audio Section

Storage:

- IDE Controller: Intel(R) 82801EB Ultra ATA Storage Controllers
- IDE Controller: Intel(R) 82801EB Ultra ATA Storage Controllers
- Storage Controller: A2222176 IDE Controller
- Disk Drive: JetFlash Transcend 8GB USB Device (7 GB, USB)
- Disk Drive: Kingston DataTraveler 2.

Course 3D XNA: 3D-Computer Graphics with XNA Chapter C4: Noname Gamepad

Course 3D_XNA: 3D-Computer Graphics with XNA, Chapter C4: NoName GamePad. Copyright © by V. Miszalok, last update: 11-08-2008. Contents: NoName GamePads; Project gamepad1; The complete Code of Game1.cs; The complete Code of Form1.cs. NoName GamePads: XNA supports just one generic gamepad: the wired USB XBox 360 Controller from Microsoft. Price: ca. 30 €. A talented hacker, "SoopahMan" from Germany, wrote a Soopah.XNA.Input.dll based on DirectX.DirectInput that allows the use of nearly any other USB gamepad. Plug it into a USB port and check it: Start → Control Panel → Game Controllers → Generic USB Joystick → Properties → Test. If more than one controller is listed, the following project gamepad1 will recognize only the first of them. Problem: The controllers can be very different in the arrangement of buttons and the number of analog thumb sticks. Our program arranges the buttons in a technical, not in a logical order. Project gamepad1: 1. Main Menu after starting VS 2008: File → New Project... → Project types: XNA GameStudio 3.0, Templates: Windows Game (3.0) → Name: gamepad1 → Location: C:\temp → Create directory for solution: switch it off → OK. Solution Explorer - controller1: Delete the file Program.cs and the default code of Game1.cs. 2.1 If you find no Solution Explorer window, open it via the main menu: View → Solution Explorer (Ctrl+W, S). Inside the Solution Explorer window click + in front of gamepad1. A tree opens. Look for the branch "References". Click the + in front of References. Right click References → Add Reference → .NET → scroll down until you find Microsoft.DirectX.DirectInput Version 1.0.2902.0 → select it → OK.

Microsoft Playready Content Access Technology White Paper

Microsoft PlayReady Content Access Technology White Paper Microsoft Corporation July 2008 Applies to: Microsoft® PlayReady™ Content Access Technology Summary: Microsoft PlayReady technology provides the premier platform for applying business models to the distribution and use of digital content. This white paper provides an overview of the features, business scenarios, and the technology ecosystem. Legal Notice Information in this document, including URL and other Internet Web site references, is subject to change without notice. Unless otherwise noted, the companies, organizations, products, domain names, e-mail addresses, logos, people, places, and events depicted in examples herein are fictitious. No association with any real company, organization, product, domain name, e-mail address, logo, person, place, or event is intended or should be inferred. Complying with all applicable copyright laws is the responsibility of the user. Without limiting the rights under copyright, no part of this document may be reproduced, stored in or introduced into a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopying, recording, or otherwise), or for any purpose, without the express written permission of Microsoft Corporation. Microsoft may have patents, patent applications, trademarks, copyrights, or other intellectual property rights covering subject matter in this document. Except as expressly provided in any written license agreement from Microsoft, the furnishing of this document does not give you any