Analysis & Test of Image Acquisition Methods

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

CINERAMA: the First Really Big Show

CINERAMA: The First Really Big Show. DIVING HEAD FIRST INTO THE 1950s: AN OVERVIEW, by Nick Zegarac. Above left: eager audience line ups like this one for the “Seven Wonders of the World” debut at the Cinerama Theater in New York were short lived by the end of the 1950s. All in all, only seven feature films were actually produced in 3-strip Cinerama, though scores more were advertised as being shot in the process. Above right: corrected three frame reproduction of the Cypress Water Skiers in ‘This is Cinerama’. Left: Fred Waller, Cinerama’s chief architect. Below: Lowell Thomas; “ladies and gentlemen, this is Cinerama!” Arguably, Cinerama was the most engaging widescreen presentation format put forth during the 1950s. From a visual standpoint it was the most enveloping. The cumbersome three camera set up and three projector system had been conceptualized, designed and patented by Fred Waller and his associates at Paramount as early as the 1930s. However, Hollywood was not quite ready, and certainly not eager, to “revolutionize” motion picture projection during the financially strapped depression and war years…and who could blame them? The standardized 1.33:1 (almost square) aspect ratio had sufficed since the invention of 35mm celluloid film stock. Even more to the point, the studios saw little reason to invest heavily in yet another technology. The induction of sound recording in 1929 and mounting costs for producing films in the newly patented 3-strip Technicolor process had both proved expensive and crippling adjuncts to the fluidity that silent B&W nitrate filming had perfected.

4K Resolution Is Ready to Hit Home

4K RESOLUTION: 4K Resolution Is Ready to Hit Home. 4K, which became a digital cinema standard in 2005, is now recognized as the next high quality image technology after HD in the market. With a growing selection of 4K televisions and recent announcements of affordable cinema-style camcorders, 4K resolution is poised to become a new standard. VIVEK KEDAR. 2011 was a year of tablets and mobile. On one side we saw new technologies emerging, and mobile devices became way more powerful than they were. TVs got better, but their technologies, especially 3D, remained proprietary. 2012 would be all about: - Quad core tablets and mobile phones - 3D TVs with 4K resolution (4096x) - Mobile payments - Rise of NextGen social networking - Hacktivism - 4G LTE. 4K Buzzword: LG, Samsung and several others would showcase their 2012 range of products at CES 2012. The major buzz this year would be about the 3D 4K TVs. Not only would these TVs be large (~60″), they would deliver super high definition content with a smooth picture (~200 Hz and above). One of the first companies to embrace the resolution craze was Oakley founder Jim Jannard's company, RED DIGITAL CINEMA. In 2007, they released a new camcorder called the RED One that could shoot at 4K resolutions for a much lower price than comparable 4K camcorders. Then, in 2011, the EPIC camera upped the resolution even further to 5K. [...] strong need of building integrated and distributed environment for high resolution applications. In simple words, 4K is a resolution of 8-9 MPix with about 4 thousand points horizontally. The resolution often depends on the screen aspect
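The "8-9 MPix, about 4 thousand points horizontally" figure in the excerpt above can be checked with simple arithmetic. The sketch below is illustrative only: the excerpt gives just the 4096 width, so the two frame sizes used here (DCI 4K at 4096x2160 and Ultra HD at 3840x2160) are assumptions brought in from common practice, and `megapixels` is a hypothetical helper name.

```python
def megapixels(width: int, height: int) -> float:
    """Return the pixel count of a frame in millions of pixels."""
    return width * height / 1_000_000


# Assumed frame sizes; the excerpt itself only mentions "4096x".
FORMATS = {
    "DCI 4K": (4096, 2160),
    "Ultra HD": (3840, 2160),
}

if __name__ == "__main__":
    for name, (w, h) in FORMATS.items():
        print(f"{name}: {w}x{h} = {megapixels(w, h):.2f} MPix")
```

Both assumed formats fall inside the 8-9 MPix range, which is consistent with the excerpt's remark that the exact figure depends on the aspect ratio.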

Film Printing

Film Technology in Post Production. Second edition. Dominic Case. Focal Press: OXFORD AUCKLAND BOSTON JOHANNESBURG MELBOURNE NEW DELHI. Focal Press, an imprint of Butterworth-Heinemann, Linacre House, Jordan Hill, Oxford OX2 8DP; 225 Wildwood Avenue, Woburn, MA 01801-2041. A division of Reed Educational and Professional Publishing Ltd. A member of the Reed Elsevier plc group. First published 1997. Reprinted 1998, 1999. Second edition 2001. © Dominic Case 2001. All rights reserved. No part of this publication may be reproduced in any material form (including photocopying or storing in any medium by electronic means and whether or not transiently or incidentally to some other use of this publication) without the written permission of the copyright holder except in accordance with the provisions of the Copyright, Designs and Patents Act 1988 or under the terms of a licence issued by the Copyright Licensing Agency Ltd, 90 Tottenham Court Road, London, England W1P 0LP. Applications for the copyright holder’s written permission to reproduce any part of this publication should be addressed to the publishers. British Library Cataloguing in Publication Data: A catalogue record for this book is available from the British Library. Library of Congress Cataloging in Publication Data: A catalogue record

Area of 35 Mm Motion Picture Film Used in HDTV Telecines

RECOMMENDATION ITU-R BR.716-2* AREA OF 35 mm MOTION PICTURE FILM USED BY HDTV TELECINES (Question ITU-R 113/11) (1990-1992-1994) The ITU Radiocommunication Assembly, considering a) that telecines are sometimes used as a television post-production tool for special applications such as the scanning of negative film or other image processing operations, and it is necessary to be able to position the scanned area anywhere on the film exposed area for this application; b) that telecines are also used to televise film programmes with no image post-processing, and it is desirable that the area to be used on the film frame be specified for this application; c) that many formats exist for 35 mm feature films, as listed below, and preferred dimensions for the area used on the frames of all those formats should be recommended: – 1.37:1 (“Academy” format, close to 4:3) – 1.66:1 (European wide-screen format, close to 16:9) – 1.85:1 (United States wide-screen format, close to 16:9) – 2.35:1 (anamorphic “Cinemascope” format); d) the content of ISO Standard 2906 “Image area produced by camera aperture on 35 mm motion picture film”, and that of ISO Standard 2907 “Maximum projectable image area on 35 mm motion picture film” which specifies the dimensions of the projectable area for all the frame formats listed above; e) the content of Recommendation ITU-R BR.713 “Recording of HDTV images on film”, which is based on ISO Standards 2906 and 2907, recommends 1.

American Society of Cinematographers Motion Imaging Technology Council Progress Report 2018

American Society of Cinematographers Motion Imaging Technology Council Progress Report 2018. By Curtis Clark, ASC; David Reisner; David Stump, ASC; Bill Bennett, ASC; Jay Holben; Michael Karagosian; Eric Rodli; Joachim Zell; Don Eklund; Bill Mandel; Gary Demos; Jim Fancher; Joe Kane; Greg Ciaccio; Tim Kang; Joshua Pines; Pete Ludé; David Morin; Michael Goi, ASC; Mike Sanders; W. Thomas Wall. ASC Motion Imaging Technology Council Officers: Chair: Curtis Clark, ASC; Vice-Chair: Richard Edlund, ASC; Vice-Chair: Steven Poster, ASC; Secretary & Director of Research: David Reisner. Introduction. ASC Motion Imaging Technology Council Chair: Curtis Clark, ASC. The American Society of Cinematographers (ASC) Motion Imaging Technology Council (MITC – “my [...] Color Encoding System), they can creatively make the most of this additional picture information, both color and contrast, while preserving its integrity throughout the workflow. Parallel with these camera and workflow developments are significant advances in display technologies that are essential to reproduce this expanded creative canvas for cinema exhibition and TV (both on-demand streaming and broadcast). While TV content distribution has been able to take advantage of increasingly available consumer TV displays that support (“4K”) Ultra HD with both wide color gamut and HDR, including HDR10 and/or Dolby Vision for consumer TVs, Dolby Vision for Digital Cinema has been a leader in developing laser-based projection that can display the full range of HDR contrast with DCI P3 color gamut. More recently, Samsung has demonstrated their new emissive LED-based 35 foot cinema display offering 4K resolution with full HDR utilizing DCI P3 color gamut. Together, these new developments enable significantly enhanced creative possibilities for expanding the visual story telling of filmmaking via the art of cinematography.

The Essential Reference Guide for Filmmakers

THE ESSENTIAL REFERENCE GUIDE FOR FILMMAKERS. IDEAS AND TECHNOLOGY: AN INTRODUCTION TO THE ESSENTIAL REFERENCE GUIDE FOR FILMMAKERS. Good films (those that effectively communicate the desired message) are the result of an almost magical blend of ideas and technological ingredients. And with an understanding of the tools and techniques available to the filmmaker, you can truly realize your vision. The “idea” ingredient is well documented, for beginner and professional alike. Books covering virtually all aspects of the aesthetics and mechanics of filmmaking abound: how to choose an appropriate film style, the importance of sound, how to write an effective film script, the basic elements of visual continuity, etc. Although equally important, becoming fluent with the technological aspects of filmmaking can be intimidating. With that in mind, we have produced this book, The Essential Reference Guide for Filmmakers. In it you will find technical information (about light meters, cameras, light, film selection, postproduction, and workflows) in an easy-to-read-and-apply format. Ours is a business that’s more than 100 years old, and from the beginning, Kodak has recognized that cinema is a form of artistic expression. Today’s cinematographers have at their disposal a variety of tools to assist them in manipulating and fine-tuning their images. And with all the changes taking place in film, digital, and hybrid technologies, you are involved with the entertainment industry at one of its most dynamic times. As you enter the exciting world of cinematography, remember that Kodak is an absolute treasure trove of information, and we are here to assist you in your journey.

The Status of Cinematography Today

THE STATUS OF CINEMATOGRAPHY TODAY. HISTORICAL PERSPECTIVE ON THE TRANSITION TO DIGITAL MOTION PICTURE CAMERAS. Curtis Clark, ASC. A Controversial Beginning. As anyone involved with feature film and/or TV production knows, cinematography has recently been experiencing acceleration in the routine use of digital motion picture cameras as viable alternatives to shooting with film. The beginning of this transitional process started with George Lucas in April 2000. When Lucas received the first 24p Sony F900 HD camera to shoot Star Wars Episode II, Attack of the Clones, cinematography was introduced to what was the beginning of perhaps the most disruptive motion imaging technology in the history of motion picture production. [...] lenses. Both the Sony F900 with on-board HDCam recording and the Thomson Viper tethered to an external SRW 5500 studio deck were used to shoot the night scenes for Collateral. The F900 was selected only for those scenes where the camera setup required unrestricted mobility free from being tethered to an external recorder. Although Digital Intermediate workflow and Digital Cinema exhibition were both in the early stages of implementation, the advent of DI-based post workflows facilitated an easier integration of digitally captured images, especially when combined with film scans that were usually 2K 10-bit DPX. Mann’s widely publicized desire to reproduce extremely challenging shadow detail in the nighttime scenes was effectively realized via the digital cameras’ ability to handle that creative challenge at low light level exposures better than film could. The brighter daytime scenes were shot on film, which was better able to repro-

Red Scarlet Operation Guide

RED SCARLET-X® OPERATION GUIDE, build v3.2, www.red.com. TABLE OF CONTENTS (excerpt): Disclaimer (Copyright Notice; Trademark Disclaimer); Compliance (Industrial Canada Emission Compliance Statements; Federal Communications Commission (FCC) Statement; Australia and New Zealand Statement; Japan Statements); Module Installation / Removal; REDMOTE; Displays (BOMB EVF and Bomb EVF (OLED); Touchscreen LCD); Basic Operation; Power Sources (Side Handle; Quad Battery Module; REDVOLT and REDVOLT XL); Main Menu; FPS (Varispeed; Basic Settings; Advanced Settings); ISO (Sensitivity); F Stop; Exposure (Basic Settings; Advanced Settings); White Balance (Basic Settings)

EBU Tech 3289-2001 Preservation and Reuse of Film Material For

Tech 3289. Guidelines for Broadcasters: Preservation and Reuse of Film Material for Television. May 2001. European Broadcasting Union, Case postale 45, Ancienne Route 17A, CH-1218 Grand-Saconnex, Geneva, Switzerland. PRESERVATION AND REUSE OF FILM MATERIAL FOR TELEVISION. Summary: Future television production systems will require easier access to archival film material in an electronic digital form, for both preview and production purposes. In order to meet these requirements, broadcasters will need to transfer large collections of film material, a very time-consuming and expensive operation. Regrettably, some of the film material in their vaults may already have started to degrade, due to adverse storage conditions. This document offers comprehensive guidelines to broadcasters on the handling, storage and reuse of motion picture film material for television production, both now and in the future. The telecine transfer process is discussed in detail, with special attention being given to its replay and correction properties. The document also discusses the effects of storage conditions on film material, and the problems encountered when handling endangered, degraded material in a broadcast environment. There is specific advice on how to check, preserve and store film material. Special attention is paid to the so-called “vinegar syndrome”, which is considered the major threat to the future reuse of acetate-based film material. The EBU working group which produced this document would like to acknowledge Eastman Kodak Company, the Image Permanence Institute and the Rochester Institute of Technology for the facts and figures on motion picture film properties and behaviour that are quoted here.

Wasson, “The Networked Screen” - 69

WASSON, “THE NETWORKED SCREEN” - 69. THE NETWORKED SCREEN: MOVING IMAGES, MATERIALITY, AND THE AESTHETICS OF SIZE. Haidee Wasson. However conceived (as institution, experience, or aesthetic), the past and the present of moving images are unthinkable without screens. Large or small, comprised of cloth or liquid crystals, screens provide a primary interface between the forms that constitute visual culture and its inhabitants. Animated by celluloid, electronic, and digital sources, these interfaces broker the increasing presence of moving images in private and public life: museums and galleries, stock exchanges, airplane seats, subways, banks, food courts, record stores, gas stations, office desks, and even the palm of one’s hand. Some screens emit light and some reflect it; some are stationary and others mobile. Variations abound. But one thing is certain. Contemporary culture is host to more screens in more places.1 In film studies, the proliferation of images and screens has largely been addressed by tending to the ways in which cinema is more malleable than previously understood, appearing everywhere, transforming across varied media and sites of consumption. The dominant metaphors used to discuss the multiplication of screens and the images that fill them have been metaphors of variability, ephemerality, dematerialization, or cross-platform compatibility, wherein screens are reconceptualized as readily collapsible, shrinking and expanding windows. Scholars use terms like “content,” “morphing,” and “themed

Demographics Report I 2 Geographic Breakdown Overview

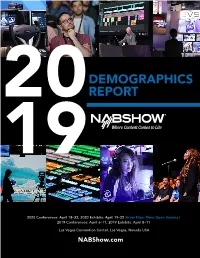

2019 NAB SHOW DEMOGRAPHICS REPORT. 2019 Conferences: April 6–11, 2019; Exhibits: April 8–11; Las Vegas Convention Center, Las Vegas, Nevada USA. 2020 Conferences: April 18–22, 2020; Exhibits: April 19–22 (show floor now open Sunday). NABShow.com. ATTENDANCE HIGHLIGHTS OVERVIEW: 91,921 total NAB Show registrants (includes BEA registrations), of which 63,331 buyers (69%), 24,896 exhibitors (27%) and 4% other; 24,086 international NAB Show registrants from 160+ countries (full demographic information on International Buyer Attendees on pages 7–9); 1,635* exhibiting companies; 963,411* net sq. ft. (89,503 m2); 1,361 press. *Includes unique companies on the Exhibit Floor and those in Attractions, Pavilions, Meeting Rooms and Suites. GEOGRAPHIC BREAKDOWN OVERVIEW: all 50 states represented; 160+ countries represented. U.S. attendee breakdown: 44% Pacific Region; 19% Mountain Region; 16% Central Region; 21% New England/Mid and South Atlantic. Non-U.S. attendee breakdown: 26% Europe; 30% Asia; 24% N. America (excluding U.S.); 14% S. America; 3% Australia; 3% Africa. 55 delegations enrolled; 45 countries represented by a delegation. COUNTRIES REPRESENTED: Afghanistan, Argentina, Benin, Brazil, Burkina Faso, Caribbean Nations, Chile, Colombia, Costa Rica, Democratic Republic of Congo, Dominican Republic, Ecuador, Egypt, Ethiopia, France, Germany, Ghana, Greece, Guatemala, Honduras, Indonesia, Ivory Coast, Japan, Mexico, Myanmar, New Zealand, Pakistan, Panama, Peru, Russia, South Africa, South Korea, Thailand, Tunisia, Turkey, Uruguay, Venezuela

Film Types and Formats

FILM TYPES AND FORMATS. A wide variety of camera films are available today, allowing filmmakers to convey exactly the look they envision. Image capture challenges, from routine through extreme, special effects, and unique processing and projection requirements can be resolved with today’s sophisticated films. TYPES OF MOTION PICTURE FILM. There are three major categories of motion picture films: camera, intermediate and laboratory, and print films. All are available as color or black-and-white films. Camera Films: Negative and reversal camera films are used in motion picture cameras to capture the original image. Negative film, just as a still camera negative, produces the reverse of the colors and/or tones our eye sees in the scene and must be printed on another film stock or transferred for final viewing. Reversal film gives a positive image directly on the original camera film. The original can be projected and viewed without going through a print process. Reversal film has a higher contrast than a camera negative film. Color Balance: Color films are manufactured for use in a variety of light sources without additional filtration. Camera films are balanced for 5500K daylight or 3200K tungsten. Color films designated D are daylight-balanced. Color films designated T are tungsten-balanced. Filtration over the camera lens or over the light source is used when filming in light sources different from the film's balance. Intermediate and Laboratory Films: Labs and postproduction facilities use intermediate and print films to produce the intermediate stages needed for special effects and titling. Once the film has been edited, the cut negative may be transferred to print film.
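The Color Balance passage above says filtration is used when the light source differs from the film's balance (5500K daylight or 3200K tungsten). One common way to quantify that correction is the mired shift, which is not mentioned in the excerpt and is introduced here only as an illustrative assumption. A mired value is one million divided by the color temperature in kelvin, and `mired_shift` is a hypothetical helper name.

```python
def mired(kelvin: float) -> float:
    """Convert a color temperature in kelvin to mireds (10^6 / K)."""
    return 1_000_000 / kelvin


def mired_shift(source_k: float, film_balance_k: float) -> float:
    """Mired shift a filter must supply so the source matches the film balance.

    Positive values call for a warming (amber) filter,
    negative values for a cooling (blue) filter.
    """
    return mired(film_balance_k) - mired(source_k)


if __name__ == "__main__":
    # Tungsten-balanced (T) film shot in 5500K daylight: warming filter needed.
    print(f"T film in daylight: {mired_shift(5500, 3200):+.0f} mired")
    # Daylight-balanced (D) film shot under 3200K tungsten: cooling filter needed.
    print(f"D film under tungsten: {mired_shift(3200, 5500):+.0f} mired")
```

The two cases are symmetric: the shifts have the same magnitude and opposite sign, which matches the excerpt's point that the correction can go either way depending on which stock is loaded.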