Hadoop MapReduce Cookbook.pdf

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


FREE DOWNLOAD COMPONENTS/THIRD PARTY TERMS and CONDITIONS PROGRAM: Sybase® Replication Server® - Data Assurance Option Version 15.6.X (All Platforms)

The Program contains open source and other free download components as identified below. Third party license terms and other third party-required notices are provided below. Apache Software Foundation Components: Derby, Commons Digester and Commons Logging. The Program includes software developed by the Apache Software Foundation. The Apache components are provided subject to the Apache License v2.0. Copyright © 1999-2010 The Apache Software Foundation. All rights reserved. A copy of the Apache License v2.0 is attached as Attachment 1. The Derby includes the third party components listed in Attachment 2. Sun Microsystems Component: Java Runtime Environment 1.6. The Java Runtime Environment includes the third party components listed in Attachment 3. Flexera Software Component: InstallAnywhere. No third party license terms or additional notices required. Startertool.com Component: Startertool. No third party license terms or additional notices required. Sun Microsystems Component: Java Servlets. No third party license terms or additional notices required. Sybase Common Security Infrastructure Components. This version of the Program includes Common Security Infrastructure v3.1 which contains the following additional open source and free download components: Apache Software Foundation Components: Apache xml-sec, Commons BeanUtils, Commons Digester, Commons Validator, Commons Logging, Commons FileUpload and Struts. The Program includes software developed by the Apache Software Foundation. Copyright © 1999-2004 The Apache Software Foundation. All rights reserved. A copy of the Apache License v2.0 is at Attachment 1. OpenSAML.org Component: OpenSAML. The Program includes software made available by OpenSAML.org.

Security Log Analysis Using Hadoop, Harikrishna Annangi

St. Cloud State University, theRepository at St. Cloud State. Culminating Projects in Information Assurance, Department of Information Systems, 3-2017. Security Log Analysis Using Hadoop, by Harikrishna Annangi. Recommended Citation: Annangi, Harikrishna, "Security Log Analysis Using Hadoop" (2017). Culminating Projects in Information Assurance. 19. https://repository.stcloudstate.edu/msia_etds/19. This Starred Paper is brought to you for free and open access by the Department of Information Systems at theRepository at St. Cloud State. It has been accepted for inclusion in Culminating Projects in Information Assurance by an authorized administrator of theRepository at St. Cloud State. A Starred Paper Submitted to the Graduate Faculty of St. Cloud State University in Partial Fulfillment of the Requirements for the Degree of Master of Science in Information Assurance, April, 2016. Starred Paper Committee: Dr. Dennis Guster (Chairperson), Dr. Susantha Herath, Dr. Sneh Kalia. Abstract: Hadoop is used by industry as a general-purpose storage and analysis platform for big data. Commercial Hadoop support is available from large enterprises like EMC, IBM, Microsoft and Oracle, and from Hadoop companies like Cloudera, Hortonworks, and MapR. Hadoop is a framework written in Java that allows distributed processing of large data sets across clusters of computers using programming models. A Hadoop framework application works in an environment that provides storage and computation across clusters of computers.

Installation Guide

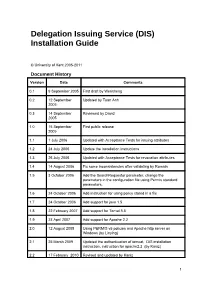

Delegation Issuing Service (DIS) Installation Guide. © University of Kent 2005-2011. Document History:

| Version | Date | Comments |
|---|---|---|
| 0.1 | 9 September 2005 | First draft by Wensheng |
| 0.2 | 12 September 2005 | Updated by Tuan Anh |
| 0.3 | 14 September 2005 | Reviewed by David |
| 1.0 | 15 September 2005 | First public release |
| 1.1 | 1 July 2006 | Updated with Acceptance Tests for issuing attributes |
| 1.2 | 24 July 2006 | Update the installation instructions |
| 1.3 | 26 July 2006 | Updated with Acceptance Tests for revocation attributes |
| 1.4 | 14 August 2006 | Fix some inconsistencies after validating by Romain |
| 1.5 | 3 October 2006 | Add the SearchRequestor parameter, change the parameters in the configuration file using Permis standard parameters. |
| 1.6 | 24 October 2006 | Add instruction for using policy stored in a file |
| 1.7 | 24 October 2006 | Add support for java 1.5 |
| 1.8 | 22 February 2007 | Add support for Tomcat 5.5 |
| 1.9 | 23 April 2007 | Add support for Apache 2.2 |
| 2.0 | 12 August 2008 | Using PERMIS v5 policies and Apache http server on Windows (by Linying) |
| 2.1 | 25 March 2009 | Updated the authentication of tomcat, DIS installation instruction, instruction for apache2.2 (by Kaniz) |
| 2.2 | 17 February 2010 | Revised and updated by Kaniz |
| 2.3 | 16 April 2010 | Updated by Mark |
| 2.5 | 26 October 2010 | Install guide rewritten and separated into its own document |
| 2.5.2 | 2 November 2010 | Java installation explained more fully. Tomcat native library installation corrected. Default DIS configuration file inserted. |
| 2.5.4 | 5 November 2010 | Addressed feedback from Kaniz |
| 2.5.5 | 26 May 2011 | Added alternative `<FilesMatch>` for PHP files. |

Building a Scalable Index and a Web Search Engine for Music on the Internet Using Open Source Software

Department of Information Science and Technology. Building a Scalable Index and a Web Search Engine for Music on the Internet using Open Source software. André Parreira Ricardo. Thesis submitted in partial fulfillment of the requirements for the degree of Master in Computer Science and Business Management. Advisor: Professor Carlos Serrão, Assistant Professor, ISCTE-IUL. September, 2010. Acknowledgments: I should say that I feel grateful for doing a thesis linked to music, an art which I love and esteem so much. Therefore, I would like to take a moment to thank all the persons who made my accomplishment possible; hence this is also part of their deed. To my family, first for having instigated in me the curiosity to read, to know, to think and go further, and secondly for allowing me to continue my studies, providing the environment and the financial means to make it possible. To my classmate André Guerreiro, I would like to give thanks for the invaluable brainstorming, the patience and the help through our college years. To my friend Isabel Silva, who gave me precious help in the final revision of this document. To everyone at ADETTI-IUL, for the time and the attention they gave me, especially the people at Caixa Mágica, because I truly value the expertise transmitted, which was useful to my thesis and I am sure will also help me during my professional course. To my teacher and MSc. advisor, Professor Carlos Serrão, for embracing my will to master in this area and for being always available to help me when I needed some advice.

Tutorial on Web Services

HY559 Infrastructure Technologies for Large-Scale Service-Oriented Systems. Jason Polakis.

- Required Software
  - Eclipse IDE for Java developers EE: http://www.eclipse.org/downloads/
  - Netbeans IDE: http://netbeans.org/downloads/
  - Apache Tomcat: http://tomcat.apache.org/
  - Apache AXIS2: http://axis.apache.org/axis2/java/core/download.cgi
  - Apache JUDDI: http://juddi.apache.org/releases.html
- Getting Software
  - Either directly from the given links, or in Ubuntu (as root):
  - To search for software: `apt-cache search <program name>` returns a list of `<packages>` with short descriptions
  - To install software: `apt-get install <package>` installs the software, as well as its dependencies
- Web Services: "Any piece of software that makes itself available over the Internet and uses a standardized XML messaging system"
  - Extremely available
  - Language and platform independent
  - Distributed application components
  - Discoverable via a simple find mechanism
  - Components of Web Services: SOAP (Simple Object Access Protocol), WSDL (Web Services Description Language), UDDI (Universal Description, Discovery and Integration)
- Web Service Architecture
  - Web Service Protocol Stack
    - Service transport (transport messages between applications): HTTP, SMTP, FTP
    - XML messaging (encode messages in common XML format): XML-RPC, WS-Addressing, and SOAP
    - Service description (describe public interface of service)
    - Service discovery (centralize services into common registry)
  - Programming models: REST-based web services, SOAP-based web services
- SOAP-based Services
  - Use SOAP: protocol for exchanging structured information
  - Use WSDL: XML-based language for describing Web services
  - WSDL file: created based on the Java code in the service, exposed on the net
  - To use a service, must create a client based on WSDL info
  - Messages exchanged in SOAP
  - Java API for XML Web Services (JAX-WS) model also used for SOAP services.

Natural Language Processing Technique for Information Extraction and Analysis

International Journal of Research Studies in Computer Science and Engineering (IJRSCSE), Volume 2, Issue 8, August 2015, PP 32-40. ISSN 2349-4840 (Print) & ISSN 2349-4859 (Online). www.arcjournals.org. Natural Language Processing Technique for Information Extraction and Analysis. T. Sri Sravya¹, T. Sudha², M. Soumya Harika³. ¹ M.Tech (C.S.E), Sri Padmavati Mahila Visvavidyalayam (Women’s University), School of Engineering and Technology, Tirupati. ² Head (I/C) of C.S.E & IT, Sri Padmavati Mahila Visvavidyalayam (Women’s University), School of Engineering and Technology, Tirupati. ³ M.Tech C.S.E, Assistant Professor, Sri Padmavati Mahila Visvavidyalayam (Women’s University), School of Engineering and Technology, Tirupati. Abstract: In the current internet era, there are a large number of systems and sensors which generate data continuously and inform users about their status and the status of devices and surroundings they monitor. Examples include web cameras at traffic intersections, key government installations etc., seismic activity measurement sensors, tsunami early warning systems and many others. A Natural Language Processing based activity, the current project is aimed at extracting entities from data collected from various sources such as social media, internet news articles and other websites and integrating this data into contextual information, providing visualization of this data on a map and further performing co-reference analysis to establish linkage amongst the entities. Keywords: Apache Nutch, Solr, crawling, indexing. 1. INTRODUCTION. In today’s harsh global business arena, the pace of events has increased rapidly, with technological innovations occurring at ever-increasing speed and considerably shorter life cycles.

Horn: a System for Parallel Training and Regularizing of Large-Scale Neural Networks

Edward J. Yoon. I am: Edward J. Yoon; Member and Vice President of Apache Software Foundation; Committer, PMC, Mentor of Apache Hama, Apache Bigtop, Apache Rya, Apache Horn, Apache MRQL. Keywords: big data, cloud, machine learning, database. What is Apache Software Foundation? The Apache Software Foundation is a non-profit foundation that is dedicated to open source software development. 1) What Apache Software Foundation is, 2) Which projects are being developed, 3) What’s HORN? and 4) How to contribute to them. Apache HTTP Server (NCSA HTTPd) powers more than 500 million websites (there are 644 million websites on the Internet). And now: 161 Top Level Projects, 108 SubProjects, 39 Incubating Podlings, 4700+ Committers, 550 ASF Members, and an unknown number of developers and users. Domain Diversity. Programming Language Diversity. Which projects are being developed? What’s HORN? Oct 2015, accepted as Apache Incubator Project. Was born from Apache Hama. A system for deep neural networks: a neuron-level abstraction framework, written in Java :/, works on distributed environments. Apache Hama: 1. K-means clustering: Hama is 1,000x faster than Apache Mahout (UT Arlington & Oracle, 2013). 2. PageRank on a graph with 10 billion edges: Hama is 3x faster than Facebook’s Giraph (Samsung Electronics, Yoon & Kim, 2015). 3. Top-k Set Similarity Joins on Flickr: Hama is clearly faster than Apache Spark (IEEE 2015, University of Melbourne). Why do we do this? 1. How to parallelize the training of large models? 2. How to avoid overfitting due to large size of the network, even with large datasets? JonathanNet. Distributed Training. [Figure: parameter servers, parameter swapping, and task groups]

Return of Organization Exempt from Income

Form 990, Return of Organization Exempt From Income Tax (OMB No. 1545-0047). Under section 501(c), 527, or 4947(a)(1) of the Internal Revenue Code (except black lung benefit trust or private foundation). Department of the Treasury, Internal Revenue Service. Open to Public Inspection. The organization may have to use a copy of this return to satisfy state reporting requirements. A. For the 2011 calendar year, or tax year beginning 5/1/2011 and ending 4/30/2012. B. Check if applicable: Address change, Name change, Initial return, Terminated, Amended return, Application pending. C. Name of organization: The Apache Software Foundation. Number and street: 1901 Munsey Drive. City or town, state or country, and ZIP + 4: Forest Hill MD 21050-2747. D. Employer identification number: 47-0825376. E. Telephone number: (909) 374-9776. F. Name and address of principal officer: Jim Jagielski, 1901 Munsey Drive, Forest Hill, MD 21050-2747. G. Gross receipts: $554,439. H(a). Is this a group return for affiliates? No. H(b). Are all affiliates included? If "No," attach a list (see instructions). H(c). Group exemption number. I. Tax-exempt status: 501(c)(3). J. Website: http://www.apache.org/. K. Form of organization: Corporation. L. Year of formation: 1999. M. State of legal domicile: MD. Part I, Summary. 1. Briefly describe the organization's mission or most significant activities: to provide open source software to the public that we sponsor free of charge. 2. Check this box if the organization discontinued its operations or disposed of more than 25% of its net assets.

Projects – Other Than Hadoop! Created by Samarjit Mahapatra

Mostly compatible with Hadoop/HDFS:

- Apache Drill: provides low-latency ad-hoc queries to many different data sources, including nested data. Inspired by Google's Dremel, Drill is designed to scale to 10,000 servers and query petabytes of data in seconds.
- Apache Hama: a pure BSP (Bulk Synchronous Parallel) computing framework on top of HDFS for massive scientific computations such as matrix, graph and network algorithms.
- Akka: a toolkit and runtime for building highly concurrent, distributed, and fault-tolerant event-driven applications on the JVM.
- ML-Hadoop: Hadoop implementation of machine learning algorithms.
- Shark: a large-scale data warehouse system for Spark designed to be compatible with Apache Hive. It can execute HiveQL queries up to 100 times faster than Hive without any modification to the existing data or queries. Shark supports Hive's query language, metastore, serialization formats, and user-defined functions, providing seamless integration with existing Hive deployments and a familiar, more powerful option for new ones.
- Apache Crunch: a Java library that provides a framework for writing, testing, and running MapReduce pipelines. Its goal is to make pipelines that are composed of many user-defined functions simple to write, easy to test, and efficient to run.
- Azkaban: a batch workflow job scheduler created at LinkedIn to run their Hadoop jobs.
- Apache Mesos: a cluster manager that provides efficient resource isolation and sharing across distributed applications, or frameworks. It can run Hadoop, MPI, Hypertable, Spark, and other applications on a dynamically shared pool of nodes.

NeOn Core Infrastructure Services

NeOn-project.org. NeOn: Lifecycle Support for Networked Ontologies. Integrated Project (IST-2005-027595). Priority: IST-2004-2.4.7 – “Semantic-based knowledge and content systems”. D6.4.2 NeOn core infrastructure services. Deliverable Co-ordinator: Walter Waterfeld. Deliverable Co-ordinating Institution: Software AG (SAG). Other Authors: Diana Maynard, University of Sheffield (USFD); Ian Roberts, University of Sheffield (USFD); Michael Gesmann, Software AG (SAG). Document Identifier: NEON/2010/D6.4.2/v1.0. Date due: October 31st, 2009. Class Deliverable: NEON EU-IST-2005-027595. Submission date: January 31st, 2010. Project start date: March 1, 2006. Version: v1.0. Project duration: 4 years. State: Final. Distribution: Public. 2006–2010 © Copyright lies with the respective authors and their institutions. NeOn Consortium: This document is a part of the NeOn research project funded by the IST Programme of the Commission of the European Communities by the grant number IST-2005-027595. The following partners are involved in the project:

- Open University (OU) – Coordinator. Knowledge Media Institute – KMi, Berrill Building, Walton Hall, Milton Keynes, MK7 6AA, United Kingdom. Contact person: Enrico Motta.
- Universität Karlsruhe – TH (UKARL). Institut für Angewandte Informatik und Formale Beschreibungsverfahren – AIFB, Englerstrasse 11, D-76128 Karlsruhe, Germany. Contact person: Andreas Harth.
- Universidad Politécnica de Madrid (UPM). Campus de Montegancedo, 28660 Boadilla del Monte, Spain. Contact person: Asunción Gómez Pérez.
- Software AG (SAG). Uhlandstrasse 12, 64297 Darmstadt, Germany. Contact person: Walter Waterfeld.
- Intelligent Software Components S.A.

Apache AXIS2 Release Notes

AXIS2 for OpenVMS I64, July 2017. 1. Introduction. Apache AXIS2 for OpenVMS is the core engine for web services. It is a complete redesign and rewrite of the previously used Apache AXIS SOAP stack. This release of AXIS2 for OpenVMS is based on the latest 1.7.3 release from the Apache Software Foundation, and includes various new features and bug fixes. The release also provides a prebuilt Java servlet, which can be deployed within an application server such as Tomcat. Users are strongly encouraged to upgrade to 1.7-3 to take advantage of these improvements. This OpenVMS port of AXIS2 includes all Apache AXIS2 functionality provided by the Open Source 1.7.3 release. 2. Acknowledgements. VMS Software Inc. would like to acknowledge the work of the Apache AXIS2 development team for their ongoing efforts in developing and supporting this software. 3. What’s new in this release. AXIS2 Version 1.7-3 for OpenVMS is based on Apache AXIS2 1.7.3, and includes the following changes: Apache Axis2 1.7.3 is a security release that contains a fix for CVE-2010-3981. That security vulnerability affects the admin console that is part of the Axis2 Web application. The admin console now has a CSRF prevention mechanism and all known XSS vulnerabilities as well as two non-security bugs in the admin console (AXIS2-4764 and AXIS2-5716) have been fixed. Users of the AXIS2 WAR distribution are encouraged to upgrade to 1.7.3 to take advantage of these improvements. This release also fixes a regression in the HTTP client code that is triggered by the presence of certain types of cookies in HTTP responses (see AXIS2-5772).

Optimizing Resource Utilization in Distributed Computing Systems For

THÈSE DE DOCTORAT DE L’ÉTABLISSEMENT UNIVERSITÉ BOURGOGNE FRANCHE-COMTÉ, PRÉPARÉE À L’UNIVERSITÉ DE FRANCHE-COMTÉ. École doctorale n°37 Sciences Pour l’Ingénieur et Microtechniques. Doctorat d’Informatique par ANTHONY NASSAR. Optimizing Resource Utilization in Distributed Computing Systems for Automotive Applications. Optimisation de l’utilisation des ressources dans les systèmes informatiques distribués pour les applications automobiles. Thèse présentée et soutenue publiquement le 04-02-2021 à Belfort, devant le Jury composé de : MR CERIN CHRISTOPHE, Professeur à l’Université Sorbonne Paris Nord, Président; MR CHBEIR RICHARD, Professeur à l’Université de Pau et des Pays de l’Adour, Rapporteur; MME BENBERNOU SALIMA, Professeur à l’Université Paris-Descartes, Rapporteur; MR MOSTEFAOUI AHMED, Maître de conférences à l’Université de Franche-Comté, Directeur de thèse; MR DESSABLES FRANÇOIS, Ingénieur chez Groupe PSA, Codirecteur de thèse. DOCTORAL THESIS OF THE UNIVERSITY BOURGOGNE FRANCHE-COMTÉ INSTITUTION, PREPARED AT UNIVERSITÉ DE FRANCHE-COMTÉ. Doctoral school n°37 Engineering Sciences and Microtechnologies. Computer Science Doctorate by ANTHONY NASSAR. Optimizing Resource Utilization in Distributed Computing Systems for Automotive Applications. Optimisation de l’utilisation des ressources dans les systèmes informatiques distribués pour les applications automobiles. Thesis presented and publicly defended in Belfort, on 04-02-2021. Composition of the Jury: CERIN CHRISTOPHE, Professor at Université Sorbonne Paris Nord, President