Information Processing and Energy Dissipation in Neurons

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Spillway Design for the Dam's Height Over 300 Meters

E3S Web of Conferences 40, 05009 (2018) https://doi.org/10.1051/e3sconf/20184005009 River Flow 2018 The spillway design for the dam’s height over 300 meters Weiwei Yao1,*, Yuansheng Chen1, Xiaoyi Ma2,3,* , and Xiaobin Li4 1 Key Laboratory of Environmental Remediation, Institute of Geographic Sciences and Natural Resources Research, China Academy of Sciences, Beijing, 100101, China. 2 Key Laboratory of Carrying Capacity Assessment for Resource and Environment, Ministry of Land and Resources. 3 College of Water Resources and Architectural Engineering, Northwest A&F University, Yangling, Shaanxi 712100, P.R. China. 4 Powerchina Guiyang Engineering Corporation Limited, Guizhou, 550081, China. Abstract˖According the current hydropower development plan in China, numbers of hydraulic power plants with height over 300 meters will be built in the western region of China. These hydraulic power plants would be in crucial situation with the problems of high water head, huge discharge and narrow riverbed. Spillway is the most common structure in power plant which is used to drainage and flood release. According to the previous research, the velocity would be reaching to 55 m/s and the discharge can reach to 300 m3/s.m during spillway operation in the dam height over 300 m. The high velocity and discharge in the spillway may have the problems such as atomization nearby, slides on the side slope and river bank, Vibration on the pier, hydraulic jump, cavitation and the negative pressure on the spill way surface. All these problems may cause great disasters for both project and society. This paper proposes a novel method for flood release on high water head spillway which is named Rumei hydropower spillway located in the western region of China. -

The Dissipation-Time Uncertainty Relation

The dissipation-time uncertainty relation Gianmaria Falasco∗ and Massimiliano Esposito† Complex Systems and Statistical Mechanics, Department of Physics and Materials Science, University of Luxembourg, L-1511 Luxembourg, Luxembourg (Dated: March 16, 2020) We show that the dissipation rate bounds the rate at which physical processes can be performed in stochastic systems far from equilibrium. Namely, for rare processes we prove the fundamental tradeoff hS˙eiT ≥ kB between the entropy flow hS˙ei into the reservoirs and the mean time T to complete a process. This dissipation-time uncertainty relation is a novel form of speed limit: the smaller the dissipation, the larger the time to perform a process. Despite operating in noisy environments, complex sys- We show in this Letter that the dissipation alone suf- tems are capable of actuating processes at finite precision fices to bound the pace at which any stationary (or and speed. Living systems in particular perform pro- time-periodic) process can be performed. To do so, we cesses that are precise and fast enough to sustain, grow set up the most appropriate framework to describe non- and replicate themselves. To this end, nonequilibrium transient operations. Namely, we unambiguously define conditions are required. Indeed, no process that is based the process duration by the first-passage time for an ob- on a continuous supply of (matter, energy, etc.) currents servable to reach a given threshold [20–23]. We first de- can take place without dissipation. rive a bound for the rate of the process r, uniquely spec- Recently, an intrinsic limitation on precision set by dis- ified by the survival probability that the process is not sipation has been established by thermodynamic uncer- yet completed at time t [24]. -

Estimation of the Dissipation Rate of Turbulent Kinetic Energy: a Review

Chemical Engineering Science 229 (2021) 116133 Contents lists available at ScienceDirect Chemical Engineering Science journal homepage: www.elsevier.com/locate/ces Review Estimation of the dissipation rate of turbulent kinetic energy: A review ⇑ Guichao Wang a, , Fan Yang a,KeWua, Yongfeng Ma b, Cheng Peng c, Tianshu Liu d, ⇑ Lian-Ping Wang b,c, a SUSTech Academy for Advanced Interdisciplinary Studies, Southern University of Science and Technology, Shenzhen 518055, PR China b Guangdong Provincial Key Laboratory of Turbulence Research and Applications, Center for Complex Flows and Soft Matter Research and Department of Mechanics and Aerospace Engineering, Southern University of Science and Technology, Shenzhen 518055, Guangdong, China c Department of Mechanical Engineering, 126 Spencer Laboratory, University of Delaware, Newark, DE 19716-3140, USA d Department of Mechanical and Aeronautical Engineering, Western Michigan University, Kalamazoo, MI 49008, USA highlights Estimate of turbulent dissipation rate is reviewed. Experimental works are summarized in highlight of spatial/temporal resolution. Data processing methods are compared. Future directions in estimating turbulent dissipation rate are discussed. article info abstract Article history: A comprehensive literature review on the estimation of the dissipation rate of turbulent kinetic energy is Received 8 July 2020 presented to assess the current state of knowledge available in this area. Experimental techniques (hot Received in revised form 27 August 2020 wires, LDV, PIV and PTV) reported on the measurements of turbulent dissipation rate have been critically Accepted 8 September 2020 analyzed with respect to the velocity processing methods. Traditional hot wires and LDV are both a point- Available online 12 September 2020 based measurement technique with high temporal resolution and Taylor’s frozen hypothesis is generally required to transfer temporal velocity fluctuations into spatial velocity fluctuations in turbulent flows. -

Turbine By-Pass with Energy Dissipation System for Transient Control

September 2014 Page 1 TURBINE BY-PASS WITH ENERGY DISSIPATION SYSTEM FOR TRANSIENT CONTROL J. Gale, P. Brejc, J. Mazij and A. Bergant Abstract: The Energy Dissipation System (EDS) is a Hydro Power Plant (HPP) safety device designed to prevent pressure rise in the water conveyance system and to prevent turbine runaway during the transient event by bypassing water flow away from the turbine. The most important part of the EDS is a valve whose operation shall be synchronized with the turbine guide vane apparatus. The EDS is installed where classical water hammer control devices are not feasible or are not economically acceptable due to certain requirements. Recent manufacturing experiences show that environmental restrictions revive application of the EDS. Energy dissipation: what, why, where, how, ... Each hydropower plant’s operation depends on available water potential and on the other hand (shaft) side, it depends on electricity consumption/demand. During the years of operation every HPP occasionally encounters also some specific operating conditions like for example significant sudden changes of power load change, or for example emergency shutdown. During such rapid changes of the HPP’s operation, water hammer and possible turbine runaway inevitably occurs. Hydraulic transients are disturbances of flow in the pipeline which are initiated by a change from one steady state to another. The main disturbance is a pressure wave whose magnitude depends on the speed of change (slow - fast) and difference between the initial and the final state (full discharge – zero discharge). Numerous pressure waves are propagating along the hydraulic water conveyance system and they represent potential risk of damaging the water conveyance system as well as water turbine in the case of inappropriate design. -

Evaluating the Transient Energy Dissipation in a Centrifugal Impeller Under Rotor-Stator Interaction

Article Evaluating the Transient Energy Dissipation in a Centrifugal Impeller under Rotor-Stator Interaction Ran Tao, Xiaoran Zhao and Zhengwei Wang * 1 Department of Energy and Power Engineering, Tsinghua University, Beijing 100084, China; [email protected] (R.T.); [email protected] (X.Z.) * Correspondence: [email protected] Received: 20 February 2019; Accepted: 8 March 2019; Published: 11 March 2019 Abstract: In fluid machineries, the flow energy dissipates by transforming into internal energy which performs as the temperature changes. The flow-induced noise is another form that flow energy turns into. These energy dissipations are related to the local flow regime but this is not quantitatively clear. In turbomachineries, the flow regime becomes pulsating and much more complex due to rotor-stator interaction. To quantitatively understand the energy dissipations during rotor-stator interaction, the centrifugal air pump with a vaned diffuser is studied based on total energy modeling, turbulence modeling and acoustic analogy method. The numerical method is verified based on experimental data and applied to further simulation and analysis. The diffuser blade leading-edge site is under the influence of impeller trailing-edge wake. The diffuser channel flow is found periodically fluctuating with separations from the blade convex side. Stall vortex is found on the diffuser blade trailing-edge near outlet. High energy loss coefficient sites are found in the undesirable flow regions above. Flow-induced noise is also high in these sites except in the stall vortex. Frequency analyses show that the impeller blade frequency dominates in the diffuser channel flow except in the outlet stall vortexes. -

Effects of Hydropower Reservoir Withdrawal Temperature on Generation and Dissipation of Supersaturated TDG

p-ISSN: 0972-6268 Nature Environment and Pollution Technology (Print copies up to 2016) Vol. 19 No. 2 pp. 739-745 2020 An International Quarterly Scientific Journal e-ISSN: 2395-3454 Original Research Paper Originalhttps://doi.org/10.46488/NEPT.2020.v19i02.029 Research Paper Open Access Journal Effects of Hydropower Reservoir Withdrawal Temperature on Generation and Dissipation of Supersaturated TDG Lei Tang*, Ran Li *†, Ben R. Hodges**, Jingjie Feng* and Jingying Lu* *State Key Laboratory of Hydraulics and Mountain River Engineering, Sichuan University, 24 south Section 1 Ring Road No.1 ChengDu, SiChuan 610065 P.R.China **Department of Civil, Architectural and Environmental Engineering, University of Texas at Austin, USA †Corresponding author: Ran Li; [email protected] Nat. Env. & Poll. Tech. ABSTRACT Website: www.neptjournal.com One of the challenges for hydropower dam operation is the occurrence of supersaturated total Received: 29-07-2019 dissolved gas (TDG) levels that can cause gas bubble disease in downstream fish. Supersaturated Accepted: 08-10-2019 TDG is generated when water discharged from a dam entrains air and temporarily encounters higher pressures (e.g. in a plunge pool) where TDG saturation occurs at a higher gas concentration, allowing a Key Words: greater mass of gas to enter into solution than would otherwise occur at ambient pressures. As the water Hydropower; moves downstream into regions of essentially hydrostatic pressure, the gas concentration of saturation Total dissolved gas; will drop, as a result, the mass of dissolved gas (which may not have substantially changed) will now Supersaturation; be at supersaturated conditions. The overall problem arises because the generation of supersaturated Hydropower station TDG at the dam occurs faster than the dissipation of supersaturated TDG in the downstream reach. -

"Thermal Modeling of a Storage Cask System: Capability Development."

THERMAL MODELING OF A STORAGE CASK SYSTEM: CAPABILITY DEVELOPMENT Prepared for U.S. Nuclear Regulatory Commission Contract NRC–02–02–012 Prepared by P.K. Shukla1 B. Dasgupta1 S. Chocron2 W. Li2 S. Green2 1Center for Nuclear Waste Regulatory Analyses 2Southwest Research Institute® San Antonio, Texas May 2007 ABSTRACT The objective of the numerical simulations documented in this report was to develop a capability to conduct thermal analyses of a cask system loaded with spent nuclear fuel. At the time these analyses began, the U.S. Department of Energy (DOE) surface facility design at the potential high-level waste repository at Yucca Mountain included the option of dry transfer of spent nuclear fuel from transportation casks to the waste packages (DOE, 2005; Bechtel SAIC Company, LLC, 2005). Because the temperature of the fuel and cladding during dry handling operations could affect their long-term performance, CNWRA staff focused on developing a capability to conduct thermal simulations. These numerical simulations were meant to develop staff expertise using complex heat transfer models to review DOE assessment of fuel cladding temperature. As this study progressed, DOE changed its design concept to include handling of spent nuclear fuel in transportation, aging, and disposal canisters (Harrington, 2006). This new design concept could eliminate the exposure of spent nuclear fuel to the atmosphere at the repository surface facilities. However, the basic objective of developing staff expertise for thermal analysis remains valid because evaluation of cladding performance, which depends on thermal history, may be needed during a review of the potential license application. Based on experience gained in this study, appropriate heat transfer models for the transport, aging, and disposal canister can be developed, if needed. -

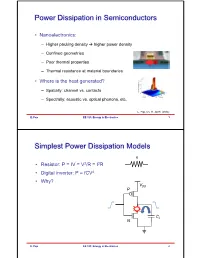

Power Dissipation in Semiconductors Simplest Power Dissipation Models

Power Dissipation in Semiconductors • Nanoelectronics: – Higher packing density higher power density – Confined geometries – Poor thermal properties – Thermal resistance at material boundaries • Where is the heat generated? – Spatially: channel vs. contacts – Spectrally: acoustic vs. optical phonons, etc. E. Pop, Ch. 11, ARHT (2014) E. Pop EE 323: Energy in Electronics 1 Simplest Power Dissipation Models R •Resistor: P = IV = V2/R = I2R • Digital inverter: P = fCV2 •Why? VDD P CL N E. Pop EE 323: Energy in Electronics 2 Revisit Simple Landauer Resistor Ballistic Diffusive I = q/t ? µ P = qV/t = IV 1 µ1 E E µ 2 µ2 µ1-µ2 = qV hL R12 2q Q: Where is the power dissipated and how much? E. Pop EE 323: Energy in Electronics 3 Three Regimes of Device Heating • Diffusive L >> λ – This is the classical case – Continuum (Fourier) applies • Quasi-Ballistic – Few phonons emitted – Some hot carriers escape to contact – Boltzmann transport equation • Near a barrier or contact – Thermionic or thermoelectric effects – Tunneling requires quantum treatment E. Pop, Nano Research 3, 147 (2010) E. Pop EE 323: Energy in Electronics 4 Continuum View of Heat Generation (1) • Lumped model: 2 PIVIR µ1 (phonon emission) • Finite-element model: E (recombination) µ HP JE 2 • More complete finite-element model: HRGEkTJE GB 3 be careful: radiative vs. phonon-assisted recombination/generation?! E. Pop EE 323: Energy in Electronics 5 Continuum View of Heat Generation (2) • Including optical recombination power (direct gap) JE H opt G opt q • Including thermoelectric effects HTSTE J S – where ST inside a continuum (Thomson) T – or SSA SB at a junction between materials A and B (Peltier) [replace J with I total power at A-B junction is TI∆S, in Watts] E. -

Maximum Frictional Dissipation and the Information Entropy of Windspeeds

J. Non-Equilib. Thermodyn. 2002 Vol. 27 pp. 229±238 Á Á Maximum Frictional Dissipation and the Information Entropy of Windspeeds Ralph D. Lorenz Lunar and Planetary Lab, University of Arizona, Tucson, AZ, USA Communicated by A. Bejan, Durham, NC, USA Registration Number 926 Abstract A link is developed between the work that thermally-driven winds are capable of performing and the entropy of the windspeed history: this information entropy is minimized when the minimum work required to transport the heat by wind is dissipated. When the system is less constrained, the information entropy of the windspeed record increases as the windspeed ¯uctuates to dissipate its available work. Fluctuating windspeeds are a means for the system to adjust to a peak in entropy production. 1. Introduction It has been long-known that solar heating is the driver of winds [1]. Winds represent the thermodynamic conversion of heat into work: this work is expended in accelerat- ing airmasses, with that kinetic energy ultimately dissipated by turbulent drag. Part of the challenge of the weather forecaster is to predict the speed and direction of wind. In this paper, I explore how the complexity of wind records (and more generally, of velocity descriptions of thermally-driven ¯ow) relates to the available work and the frictional or viscous dissipation. 2. Zonal Energy Balance Two linked heat ¯uxes drive the Earth's weather ± the vertical transport of heat upwards, and the transport of heat from low to high latitudes. In the present paper only horizontal transport is considered, although the concepts may be extended by analogy into the vertical dimension. -

Measurement of Heat Dissipation and Thermal-Stability of Power Modules on DBC Substrates with Various Ceramics by Sic Micro-Heater Chip System and Ag Sinter Joining

micromachines Article Measurement of Heat Dissipation and Thermal-Stability of Power Modules on DBC Substrates with Various Ceramics by SiC Micro-Heater Chip System and Ag Sinter Joining Dongjin Kim 1,2, Yasuyuki Yamamoto 3, Shijo Nagao 2,* , Naoki Wakasugi 4, Chuantong Chen 2,* and Katsuaki Suganuma 2 1 Department of Adaptive Machine Systems, Graduate School of Engineering, Osaka University, Osaka 565-0871, Japan; [email protected] 2 The Institute of Scientific and Industrial Research, Osaka University, Osaka 567-0047, Japan; [email protected] 3 Specialty products development, Tokuyama Co., Yamaguchi 746-0006, Japan; [email protected] 4 Division of system development, Yamato Scientific Co., Ltd., Tokyo 135-0047, Japan; [email protected] * Correspondence: [email protected] (S.N.); [email protected] (C.C.); Tel.: +81-6-6879-8521 (S.N. & C.C.) Received: 4 October 2019; Accepted: 28 October 2019; Published: 31 October 2019 Abstract: This study introduced the SiC micro-heater chip as a novel thermal evaluation device for next-generation power modules and to evaluate the heat resistant performance of direct bonded copper (DBC) substrate with aluminum nitride (AlN-DBC), aluminum oxide (DBC-Al2O3) and silicon nitride (Si3N4-DBC) ceramics middle layer. The SiC micro-heater chips were structurally sound bonded on the two types of DBC substrates by Ag sinter paste and Au wire was used to interconnect the SiC and DBC substrate. The SiC micro-heater chip power modules were fixed on a water-cooling plate by a thermal interface material (TIM), a steady-state thermal resistance measurement and a power cycling test were successfully conducted. -

Forms of the Dissipation Function for a Class of Viscoplastic Models

NISSUNA UMANA INVESTIGAZIONE SI PUO DIMANDARE VERA SCIENZIA S’ESSA NON PASSA PER LE MATEMATICHE DIMOSTRAZIONI LEONARDO DA VINCI vol. 5 no. 3-4 2017 Mathematics and Mechanics of Complex Systems MASSIMO CUOMO FORMS OF THE DISSIPATION FUNCTION FOR A CLASS OF VISCOPLASTIC MODELS msp MATHEMATICS AND MECHANICS OF COMPLEX SYSTEMS Vol. 5, No. 3-4, 2017 dx.doi.org/10.2140/memocs.2017.5.217 ∩ MM FORMS OF THE DISSIPATION FUNCTION FOR A CLASS OF VISCOPLASTIC MODELS MASSIMO CUOMO The minimum properties that allow a dissipation functional to describe a behav- ior of viscoplastic type are analyzed. It is considered a material model based on an internal variable description of the irreversible processes and characterized by the existence of an elastic region. The dissipation functional derived includes the case of time-independent plasticity in the limit. The complementary dissipation functional and the flow rule are also stated. The model analyzed leads naturally to the fulfillment of the maximum dissipation postulate and thus to associative viscoplasticity. A particular class of models is analyzed, and similarities to and differences from diffused viscoplastic formats are given. 1. Introduction Strain-rate-dependent behavior is characteristic of many materials at least beyond a certain level of stress, temperature or strain rate. Strain-rate sensitivity and time- temperature superposition effects occur when the time scale of the process is com- parable with a characteristic relaxation time of the material. In this paper, we consider the case of a relatively short relaxation time characterizing irreversible phenomena such as plasticity and damage, leading to a generalized viscoplastic model. -

On Rate-Dependent Dissipation Effects in Electro-Elasticity Arxiv

On rate-dependent dissipation effects in electro-elasticity Prashant Saxena, Duc Khoi Vu, Paul Steinmann Chair of Applied Mechanics, University of Erlangen{Nuremberg, Egerlandstraße 5, 91058 Erlangen, Germany Abstract This paper deals with the mathematical modelling of large strain electro-viscoelastic deformations in electro-active polymers. Energy dissipation is assumed to occur due to mechanical viscoelasticity of the polymer as well as due to time-dependent effective polarisation of the material. Additive decomposition of the electric field E = Ee + Ev and multiplicative decomposition of the deformation gradient F = FeFv are proposed to model the internal dissipation mechanisms. The theory is illustrated with some numerical examples in the end. Keywords: Nonlinear electroelasticity, Rate dependence, Viscoelasticity, Electromechanical coupling MSC codes: 74B20, 74D10, 74F15 Original version published in the International Journal of Nonlinear Me- chanics 62: 1{11 (2014) doi: 10.1016/j.ijnonlinmec.2014.02.002 1 Introduction arXiv:1502.02479v1 [physics.class-ph] 9 Feb 2015 Over the past few decades, the theory and numerical simulation of the cou- pled electro-mechanical problem have been interesting subjects of research, cf. Pao [1] and Eringen and Maugin [2]. However, with the invention of the so-called electro-active polymers (EAPs) capable of exhibiting large deforma- tions in response to the application of electric fields, several new challenges 1 appear and need to be addressed. Open problems remain both in the under- standing of electro-mechanical coupling in soft matter and in simulating the behavior of electro-sensitive bodies under the influence of an electric field. EAPs can be used as alternatives to materials traditionally used to de- velop actuators like piezoelectric ceramics, shape memory metals and electro- rheological fluids, cf.