PageRank Beyond the Web



Recommended publications


Search Engine Optimization: a Survey of Current Best Practices

Grand Valley State University ScholarWorks@GVSU Technical Library School of Computing and Information Systems 2013 Search Engine Optimization: A Survey of Current Best Practices Niko Solihin Grand Valley Follow this and additional works at: https://scholarworks.gvsu.edu/cistechlib ScholarWorks Citation Solihin, Niko, "Search Engine Optimization: A Survey of Current Best Practices" (2013). Technical Library. 151. https://scholarworks.gvsu.edu/cistechlib/151 This Project is brought to you for free and open access by the School of Computing and Information Systems at ScholarWorks@GVSU. It has been accepted for inclusion in Technical Library by an authorized administrator of ScholarWorks@GVSU. For more information, please contact [email protected]. Search Engine Optimization: A Survey of Current Best Practices By Niko Solihin A project submitted in partial fulfillment of the requirements for the degree of Master of Science in Computer Information Systems at Grand Valley State University April, 2013 Search Engine Optimization: A Survey of Current Best Practices Niko Solihin Grand Valley State University Grand Rapids, MI, USA [email protected] ABSTRACT With the rapid growth of information on the web, search engines have become the starting point of most web-related tasks. In order to reach more viewers, a website must improve its organic ranking in search engines. This paper introduces the concept of search engine optimization (SEO) and provides an architectural overview of the predominant search engine, Google. 2. Build and maintain an index of sites’ keywords and links (indexing) 3. Present search results based on reputation and relevance to users’ keyword combinations (searching) The primary goal is to effectively present high-quality, precise search results while efficiently handling a potentially -

Oracle Database VLDB and Partitioning Guide, 11G Release 2 (11.2) E25523-01

Oracle® Database VLDB and Partitioning Guide 11g Release 2 (11.2) E25523-01 September 2011 Oracle Database VLDB and Partitioning Guide, 11g Release 2 (11.2) E25523-01 Copyright © 2008, 2011, Oracle and/or its affiliates. All rights reserved. Contributors: Hermann Baer, Eric Belden, Jean-Pierre Dijcks, Steve Fogel, Lilian Hobbs, Paul Lane, Sue K. Lee, Diana Lorentz, Valarie Moore, Tony Morales, Mark Van de Wiel This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, the following notice is applicable: U.S. GOVERNMENT RIGHTS Programs, software, databases, and related documentation and technical data delivered to U.S. Government customers are "commercial computer software" or "commercial technical data" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, the use, duplication, disclosure, modification, and adaptation shall be subject to the restrictions and license terms set forth in the applicable Government contract, and, to the extent applicable by the terms of the Government contract, the additional rights set forth in FAR 52.227-19, Commercial Computer Software License (December 2007). -

Data Warehouse Fundamentals for Storage Professionals – What You Need to Know EMC Proven Professional Knowledge Sharing 2011

Data Warehouse Fundamentals for Storage Professionals – What You Need To Know EMC Proven Professional Knowledge Sharing 2011 Bruce Yellin Advisory Technology Consultant EMC Corporation [email protected] Table of Contents: Introduction; Data Warehouse Background; What Is a Data Warehouse?; Data Mart Defined; Schemas and Data Models; Data Warehouse Design – Top Down or Bottom Up?; Extract, Transformation and Loading (ETL); Why You Build a Data Warehouse: Business Intelligence; Technology to the Rescue?; RASP - Reliability, Availability, Scalability and Performance; Data Warehouse Backups -

Google Matrix Analysis of Bi-Functional SIGNOR Network of Protein-Protein Interactions Klaus M

bioRxiv preprint doi: https://doi.org/10.1101/750695; this version posted September 1, 2019. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. All rights reserved. No reuse allowed without permission. Bioinformatics doi.10.1093/bioinformatics/xxxxxx Manuscript Category Systems biology Google matrix analysis of bi-functional SIGNOR network of protein-protein interactions Klaus M. Frahm 1 and Dima L. Shepelyansky 1,∗ 1Laboratoire de Physique Théorique, IRSAMC, Université de Toulouse, CNRS, UPS, 31062 Toulouse, France. ∗To whom correspondence should be addressed. Associate Editor: XXXXXXX Received on August 28, 2019; revised on XXXXX; accepted on XXXXX Abstract Motivation: Directed protein networks with only a few thousand nodes are rather complex and do not allow one to easily extract the effective influence of one protein on another, taking into account all indirect pathways via the global network. Furthermore, the different types of activation and inhibition actions between proteins provide a considerable challenge in the framework of network analysis. At the same time these protein interactions are of crucial importance and at the heart of cellular functioning. Results: We develop the Google matrix analysis of the protein-protein network from the open public database SIGNOR. The developed approach takes into account the bi-functional activation or inhibition nature of interactions between each pair of proteins, describing it in the framework of Ising-spin matrix transitions. We also apply a recently developed linear response theory for the Google matrix which highlights a pathway of proteins whose PageRank probabilities are most sensitive with respect to two proteins selected for the analysis. -

A Network Approach of the Mandatory Influenza Vaccination Among Healthcare Workers

Wright State University CORE Scholar Master of Public Health Program Student Publications Master of Public Health Program 2014 Best Practices: A Network Approach of the Mandatory Influenza Vaccination Among Healthcare Workers Greg Attenweiler Wright State University - Main Campus Angie Thomure Wright State University - Main Campus Follow this and additional works at: https://corescholar.libraries.wright.edu/mph Part of the Influenza Virus Vaccines Commons Repository Citation Attenweiler, G., & Thomure, A. (2014). Best Practices: A Network Approach of the Mandatory Influenza Vaccination Among Healthcare Workers. Wright State University, Dayton, Ohio. This Master's Culminating Experience is brought to you for free and open access by the Master of Public Health Program at CORE Scholar. It has been accepted for inclusion in Master of Public Health Program Student Publications by an authorized administrator of CORE Scholar. For more information, please contact library- [email protected]. Running Head: A NETWORK APPROACH Best Practices: A network approach of the mandatory influenza vaccination among healthcare workers Greg Attenweiler Angie Thomure Wright State University Acknowledgements We would like to thank Michele Battle-Fisher and Nikki Rogers for donating their time and resources to help us complete our Culminating Experience. We would also like to thank Michele Battle-Fisher for creating the simulation used in our Culmination Experience. Finally we would like to thank our family and friends for all of the -

Clique-Attacks Detection in Web Search Engine for Spamdexing Using K-Clique Percolation Technique

International Journal of Machine Learning and Computing, Vol. 2, No. 5, October 2012 Clique-Attacks Detection in Web Search Engine for Spamdexing using K-Clique Percolation Technique S. K. Jayanthi and S. Sasikala, Member, IACSIT Abstract—Search engines make the information retrieval task easier for the users. Highly ranking position in the search engine query results brings great benefits for websites. Some website owners interpret the link architecture to improve ranks. To handle the search engine spam problems, especially link farm spam, clique identification in the network structure would help a lot. This paper proposes a novel strategy to detect the spam based on K-Clique Percolation method. Data collected from website and classified with NaiveBayes Classification algorithm. The suspicious spam sites are analyzed for clique-attacks. Observations and findings were given regarding the spam. Performance of the system seems to be good in terms of accuracy. Clique cluster groups the set of nodes that are completely connected to each other. Specifically if connections are added between objects in the order of their distance from one another a cluster is formed when the objects form a clique. If a web site is considered as a clique, then incoming and outgoing links analysis reveals the cliques existence in web. It means strong interconnection between few websites with mutual link interchange. It improves all websites rank, which participates in the clique cluster. In Fig. 2 one particular case of link spam, link farm spam is portrayed. That figure points one particular node (website) is pointed by so many nodes (websites), this structure gives higher rank for that website as per the PageRank algorithm. -

Introduction to Network Science & Visualisation

IFC – Bank Indonesia International Workshop and Seminar on “Big Data for Central Bank Policies / Building Pathways for Policy Making with Big Data” Bali, Indonesia, 23-26 July 2018 Introduction to network science & visualisation Kimmo Soramäki, Financial Network Analytics (Footnote 1: This presentation was prepared for the meeting. The views expressed are those of the author and do not necessarily reflect the views of the BIS, the IFC or the central banks and other institutions represented at the meeting.) FNA Introduction to Network Science & Visualization I Dr. Kimmo Soramäki Founder & CEO, FNA www.fna.fi Agenda Network Science ● Introduction ● Key concepts Exposure Networks ● OTC Derivatives ● CCP Interconnectedness Correlation Networks ● Housing Bubble and Crisis ● US Presidential Election Network Science and Graphs Analytics Is already powering the best known AI applications: Knowledge Graph, Social Graph, Product Graph, Economic Graph, Knowledge Graph, Payment Graph Network Science and Graphs Analytics “Goldman Sachs takes a DIY approach to graph analytics” For enhanced compliance and fraud detection (www.TechTarget.com, Mar 2015). “PayPal relies on graph techniques to perform sophisticated fraud detection” Saving them more than $700 million and enabling them to perform predictive fraud analysis, according to the IDC (www.globalbankingandfinance.com, Jan 2016) “Network diagnostics .. may displace atomised metrics such as VaR” Regulators are increasingly using network science for financial stability analysis. (Andy Haldane, Bank of England Executive -

Received Citations As a Main SEO Factor of Google Scholar Results Ranking

RECEIVED CITATIONS AS A MAIN SEO FACTOR OF GOOGLE SCHOLAR RESULTS RANKING Las citas recibidas como principal factor de posicionamiento SEO en la ordenación de resultados de Google Scholar Cristòfol Rovira, Frederic Guerrero-Solé and Lluís Codina Note: This article can be read in Spanish at: http://www.elprofesionaldelainformacion.com/contenidos/2018/may/09_esp.pdf Cristòfol Rovira, associate professor at Pompeu Fabra University (UPF), teaches in the Departments of Journalism and Advertising. He is director of the master’s degree in Digital Documentation (UPF) and the master’s degree in Search Engines (UPF). He has a degree in Educational Sciences, as well as in Library and Information Science. He is an engineer in Computer Science and has a master’s degree in Free Software. He is conducting research in web positioning (SEO), usability, search engine marketing and conceptual maps with eyetracking techniques. https://orcid.org/0000-0002-6463-3216 [email protected] Frederic Guerrero-Solé has a bachelor’s in Physics from the University of Barcelona (UB) and a PhD in Public Communication obtained at Universitat Pompeu Fabra (UPF). He has been teaching at the Faculty of Communication at the UPF since 2008, where he is a lecturer in Sociology of Communication. He is a member of the research group Audiovisual Communication Research Unit (Unica). https://orcid.org/0000-0001-8145-8707 [email protected] Lluís Codina is an associate professor in the Department of Communication at the School of Communication, Universitat Pompeu Fabra (UPF), Barcelona, Spain, where he has taught information science courses in the areas of Journalism and Media Studies for more than 25 years. -

A Centrality Measure for Electrical Networks

Carnegie Mellon Electricity Industry Center Working Paper CEIC-07 www.cmu.edu/electricity 1 A Centrality Measure for Electrical Networks Paul Hines and Seth Blumsack Abstract—We derive a measure of “electrical centrality” for AC power networks, which describes the structure of the network as a function of its electrical topology rather than its physical topology. We compare our centrality measure to conventional measures of network structure using the IEEE 300-bus network. We find that when measured electrically, power networks appear to have a scale-free network structure. Thus, unlike previous studies of the structure of power grids, we find that power networks have a number of highly-connected “hub” buses. This result, and the structure of power networks in general, is likely to have important implications for the reliability and security of power networks. Index Terms—Scale-Free Networks, Connectivity, Cascading Failures, Network Structure types of failures. Many classifications of network structures have been studied in the field of complex systems, statistical mechanics, and social networking [5,6], as shown in Figure 2, but the two most fruitful and relevant have been the random network model of Erdős and Rényi [7] and the “small world” model inspired by the analyses in [8] and [9]. In the random network model, nodes and edges are connected randomly. The small-world network is defined largely by relatively short average path lengths between node pairs, even for very large networks. One particularly important class of small-world networks is the so-called “scale-free” network [10, 11], which is characterized by a more heterogeneous connectivity. In a scale-free network, most nodes are connected to only a few others, but a few nodes (known as hubs) are highly connected to the rest of the network. -

Mathematical Properties and Analysis of Google's Pagerank

Bol. Soc. Esp. Mat. Apl. nº 0 (0000), 1–6 Mathematical Properties and Analysis of Google’s PageRank Ilse C.F. Ipsen, Rebecca S. Wills Department of Mathematics, North Carolina State University, Raleigh, NC 27695-8205, USA [email protected], [email protected] Abstract To determine the order in which to display web pages, the search engine Google computes the PageRank vector, whose entries are the PageRanks of the web pages. The PageRank vector is the stationary distribution of a stochastic matrix, the Google matrix. The Google matrix in turn is a convex combination of two stochastic matrices: one matrix represents the link structure of the web graph and a second, rank-one matrix, mimics the random behaviour of web surfers and can also be used to combat web spamming. As a consequence, PageRank depends mainly on the link structure of the web graph, but not on the contents of the web pages. We analyze the sensitivity of PageRank to changes in the Google matrix, including addition and deletion of links in the web graph. Due to the proliferation of web pages, the dimension of the Google matrix most likely exceeds ten billion. One of the simplest and most storage-efficient methods for computing PageRank is the power method. We present error bounds for the iterates of the power method and for their residuals. Keywords: Markov matrix, stochastic matrix, stationary distribution, power method, perturbation bounds AMS subject classification: 15A51, 65C40, 65F15, 65F50, 65F10 1. Introduction How does the search engine Google determine the order in which to display web pages? The major ingredient in determining this order is the PageRank vector, which assigns a score to every web page. -

Google Matrix of the World Trade Network

Vol. 120 (2011) ACTA PHYSICA POLONICA A No. 6-A Proceedings of the 5th Workshop on Quantum Chaos and Localisation Phenomena, Warsaw, Poland, May 20–22, 2011 Google Matrix of the World Trade Network L. Ermann and D.L. Shepelyansky Laboratoire de Physique Théorique du CNRS, IRSAMC, Université de Toulouse UPS, 31062 Toulouse, France Using the United Nations Commodity Trade Statistics Database we construct the Google matrix of the world trade network and analyze its properties for various trade commodities for all countries and all available years from 1962 to 2009. The trade flows on this network are classified with the help of PageRank and CheiRank algorithms developed for the World Wide Web and other large scale directed networks. For the world trade this ranking treats all countries on equal democratic grounds independent of country richness. Still this method puts at the top a group of industrially developed countries for trade in all commodities. Our study establishes the existence of two solid state like domains of rich and poor countries which remain stable in time, while the majority of countries are shown to be in a gas like phase with strong rank fluctuations. A simple random matrix model provides a good description of statistical distribution of countries in two-dimensional rank plane. The comparison with usual ranking by export and import highlights new features and possibilities of our approach. PACS: 89.65.Gh, 89.75.Hc, 89.75.−k, 89.20.Hh 1. Introduction The analysis and understanding of world trade is of pri- … damping parameter α in the WWW context describes the probability 1 − α to jump to any node for a random surfer. -

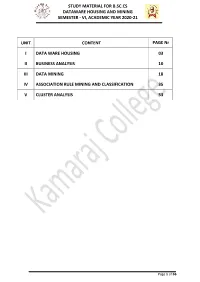

Study Material for B.Sc.Cs Dataware Housing and Mining Semester - Vi, Academic Year 2020-21

STUDY MATERIAL FOR B.SC.CS DATAWARE HOUSING AND MINING SEMESTER - VI, ACADEMIC YEAR 2020-21 UNIT / CONTENT: I DATA WARE HOUSING; II BUSINESS ANALYSIS; III DATA MINING; IV ASSOCIATION RULE MINING AND CLASSIFICATION; V CLUSTER ANALYSIS UNIT I: DATA WAREHOUSING Data warehousing Components: ->Overall Architecture Data warehouse architecture is based on a relational database management system server that functions as the central repository (a central location in which data is stored and managed) for informational data. In the data warehouse architecture, operational data and processing are kept separate from data warehouse processing. The central information repository is surrounded by a number of key components. These key components are designed to make the entire environment (i) functional, (ii) manageable and (iii) accessible, both by the operational systems that source data into the warehouse and by end-user query and analysis tools. The source data for the warehouse comes from the operational applications. As data enters the data warehouse, it is transformed into an integrated structure and format. The transformation process may involve conversion, summarization, filtering, and condensation of data. Because data within the data warehouse contains a large historical component, the data warehouse must be capable of holding and managing large volumes of data and different data structures for the same database over time. ->Data Warehouse Database The central data warehouse database is a foundation for the data warehousing environment.