Heartbleed: a Formal Methods Perspective

Recommended publications

Installing Fake Root Keys in a PC

Installing Fake Root Keys in a PC Adil Alsaid and Chris J. Mitchell Information Security Group Royal Holloway, University of London Egham, Surrey TW20 0EX fA.Alsaid, [email protected] Abstract. If a malicious party can insert a self-issued CA public key into the list of root public keys stored in a PC, then this party could potentially do considerable harm to that PC. In this paper, we present a way to achieve such an attack for the Internet Explorer web browser root key store, which avoids attracting the user's attention. A realisation of this attack is also described. Finally, countermeasures that can be deployed to prevent such an attack are outlined. 1 Introduction As is widely known [10], most web browsers (e.g. Microsoft Internet Explorer or Netscape) have a repository of root public keys designed for use in verifying digitally signed public key certificates. These public keys are bundled with distributions of the web browser, and are used to verify certificates for applet providers [13]. Specifically, web-sites may download applets to a user PC without the PC user knowing it. Depending on the security settings selected by the PC user, these applets may be executed with or without further checks. Typically, the browser will only execute the applet if the following conditions are satisfied. 1. The applet must be digitally signed, and the signature must verify correctly. 2. -

Analysis of SSL Certificate Reissues and Revocations in the Wake

Analysis of SSL Certificate Reissues and Revocations in the Wake of Heartbleed Liang Zhang David Choffnes Dave Levin Tudor Dumitraș Northeastern University Northeastern University University of Maryland University of Maryland [email protected] [email protected] [email protected] [email protected] Alan Mislove Aaron Schulman Christo Wilson Northeastern University Stanford University Northeastern University [email protected] [email protected] [email protected] ABSTRACT Central to the secure operation of a public key infrastructure (PKI) is the ability to revoke certificates. While much of users' security rests on this process taking place quickly, in practice, revocation typically requires a human to decide to reissue a new certificate and revoke the old one. Thus, having a proper understanding of how often systems administrators reissue and revoke certificates is crucial to understanding the integrity of a PKI. Unfortunately, this is typically difficult to measure: while it is relatively easy to determine when a certificate is revoked, it is difficult to determine whether and when an administrator should have revoked. In this paper, we use a recent widespread security vulnerability as a natural experiment. Publicly announced in April 2014, the Heartbleed OpenSSL bug, potentially (and undetectably) revealed servers' private keys. Categories and Subject Descriptors C.2.2 [Computer-Communication Networks]: Network Protocols; C.2.3 [Computer-Communication Networks]: Network Operations; E.3 [Data Encryption]: Public Key Cryptosystems, Standards Keywords Heartbleed; SSL; TLS; HTTPS; X.509; Certificates; Reissue; Revocation; Extended validation 1. INTRODUCTION Secure Sockets Layer (SSL) and Transport Layer Security (TLS)1 are the de-facto standards for securing Internet -

Trust Me, I'm a Root CA! Analyzing SSL Root CAs in Modern Browsers

Trust me, I’m a Root CA! Analyzing SSL Root CAs in modern Browsers and Operating Systems Tariq Fadai, Sebastian Schrittwieser Josef Ressel Center for Unified Threat Intelligence on Targeted Attacks, St. Poelten University of Applied Sciences, Austria Email: [is101005,sebastian.schrittwieser]@fhstp.ac.at Peter Kieseberg, Martin Mulazzani SBA Research, Austria Email: [pkieseberg,mmulazzani]@sba-research.org Abstract—The security and privacy of our online communications heavily relies on the entity authentication mechanisms provided by SSL. Those mechanisms in turn heavily depend on the trustworthiness of a large number of companies and governmental institutions for attestation of the identity of SSL services providers. In order to offer a wide and unobstructed availability of SSL-enabled services and to remove the need to make a large amount of trust decisions from their users, operating systems and browser manufacturers include lists of certification authorities which are trusted for SSL entity authentication by their products. This has the problematic effect that users of such browsers and operating systems implicitly trust those certification authorities with the privacy of their communications while they might not even realize it. [...]tected communications is dependent on the trustworthiness of various companies and governments. It is therefore of interest to find out which companies we implicitly trust just by using different operating system platforms or browsers. In this paper an analysis of the root certificates included in various browsers and operating systems is introduced. Our main contributions are: • We performed an in-depth analysis of Root Certificate Authorities in modern operating systems and web browsers • We correlated them against a variety of trust indexes -

The Trip to TLS Land Using the WSA Tobias Mayer, Consulting Systems Engineer BRKSEC-3006 Me…

The Trip to TLS Land using the WSA Tobias Mayer, Consulting Systems Engineer BRKSEC-3006 Me… CCIE Security #14390, CISSP & Motorboat driving license… Working in Content Security & IPv6 Security tmayer{at}cisco.com Writing stuff at “blogs.cisco.com” Agenda • Introduction • Understanding TLS • Configuring Decryption on the WSA • Troubleshooting TLS • Thoughts about the Future • Conclusion For Your Reference • There are (many...) slides in your print-outs that will not be presented. • They are there “For your Reference” Microsoft and Google pushing encryption • Microsoft pushing TLS with PFS • Google, FB, Twitter encrypting all traffic • HTTPS usage influencing page ranking on Google • Deprecate SHA1, only SHA2+ • Browser vendors aggressively pushing HTTPS • Problems with older TLS versions leading to upgrade of servers to newer protocols and ciphers • Poodle, Freak, Beast, …. Google Search Engine • Google ranking influenced by using HTTPS • http://blog.searchmetrics.com/us/2015/03/03/https-vs-http-website-ssl-tls-encryption-ranking-seo-secure-connection/ Understanding TLS TLS Versions • SSLv3, 1996 • TLS 1.0, 1999, RFC2246 • TLS 1.1, 2006, RFC4346 • Improved security • TLS 1.2, 2008, RFC5246 • Removed IDEA and DES ciphers • Stronger hashes • Supports authenticated encryption ciphers (AES-GCM) • TLS 1.3, currently Internet Draft Attacks… • POODLE • SSLv3 problems with padding, turn off SSLv3 • BEAST • Known issues in CBC mode, use TLS 1.1/1.2 with non-CBC mode ciphers (GCM) • CRIME/BREACH • Compression Data Leak, disable -
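The slide advice above (turn off SSLv3, prefer newer TLS versions) can be illustrated with a minimal client-side sketch. It uses Python's standard `ssl` module rather than anything from the WSA itself, and the choice of TLS 1.2 as the floor is an assumption made for this illustration, not a setting taken from the slides. The helper name `make_client_context` is hypothetical.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client TLS context that refuses SSLv3, TLS 1.0 and TLS 1.1.

    Hypothetical helper for illustration; the TLS 1.2 floor is an assumption.
    """
    # create_default_context() keeps certificate and hostname verification on.
    ctx = ssl.create_default_context()
    # Reject any handshake that would negotiate a version below TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_context()
print(ctx.minimum_version.name)
```

Raising the minimum version on the context, instead of disabling individual protocols one by one, keeps the policy in a single place and leaves newer versions such as TLS 1.3 available when both peers support them.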

SSL Insight Certificate Installation Guide Deployment Guide | SSL Insight Certificate Installation Guide

DEPLOYMENT GUIDE SSL Insight Certificate Installation Guide Deployment Guide | SSL Insight Certificate Installation Guide Table of Contents: Introduction; Generating CA Certificates for SSL Insight; Importing a CA Certificate and Certificate Chain onto the A10 Thunder SSLi Device; Installing a Certificate in Microsoft Windows 7 for Internet Explorer; Installing a Certificate in Google Chrome; Installing a Certificate in Mozilla Firefox; About A10 Networks -

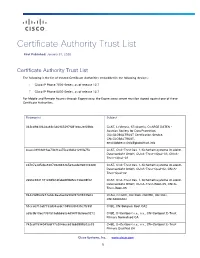

Certificate Authority Trust List

Certificate Authority Trust List First Published: January 31, 2020 The following is the list of trusted Certificate Authorities embedded in the following devices: Cisco IP Phone 7800 Series, as of release 12.7; Cisco IP Phone 8800 Series, as of release 12.7. For Mobile and Remote Access through Expressway, the Expressway server must be signed against one of these Certificate Authorities.

| Fingerprint | Subject |
| --- | --- |
| 342cd9d3062da48c346965297f081ebc2ef68fdc | C=AT, L=Vienna, ST=Austria, O=ARGE DATEN - Austrian Society for Data Protection, OU=GLOBALTRUST Certification Service, CN=GLOBALTRUST, [email protected] |
| 4caee38931d19ae73b31aa75ca33d621290fa75e | C=AT, O=A-Trust Ges. f. Sicherheitssysteme im elektr. Datenverkehr GmbH, OU=A-Trust-nQual-03, CN=A-Trust-nQual-03 |
| cd787a3d5cba8207082848365e9acde9683364d8 | C=AT, O=A-Trust Ges. f. Sicherheitssysteme im elektr. Datenverkehr GmbH, OU=A-Trust-Qual-02, CN=A-Trust-Qual-02 |
| 2e66c9841181c08fb1dfabd4ff8d5cc72be08f02 | C=AT, O=A-Trust Ges. f. Sicherheitssysteme im elektr. Datenverkehr GmbH, OU=A-Trust-Root-05, CN=A-Trust-Root-05 |
| 84429d9fe2e73a0dc8aa0ae0a902f2749933fe02 | C=AU, O=GOV, OU=DoD, OU=PKI, OU=CAs, CN=ADOCA02 |
| 51cca0710af7733d34acdc1945099f435c7fc59f | C=BE, CN=Belgium Root CA2 |
| a59c9b10ec7357515abb660c4d94f73b9e6e9272 | C=BE, O=Certipost s.a., n.v., CN=Certipost E-Trust Primary Normalised CA |
| 742cdf1594049cbf17a2046cc639bb3888e02e33 | C=BE, O=Certipost s.a., n.v., CN=Certipost E-Trust Primary Qualified CA |

Cisco Systems, Inc. www.cisco.com

Practical Issues with TLS Client Certificate Authentication

Practical Issues with TLS Client Certificate Authentication Arnis Parsovs Software Technology and Applications Competence Center, Estonia University of Tartu, Estonia [email protected] Abstract—The most widely used secure Internet communication standard TLS (Transport Layer Security) has an optional client certificate authentication feature that in theory has significant security advantages over HTML form-based password authentication. In this paper we discuss practical security and usability issues related to TLS client certificate authentication stemming from the server-side and browser implementations. In particular, we analyze Apache’s mod_ssl implementation on the server side and the most popular browsers – Mozilla Firefox, Google Chrome and Microsoft Internet Explorer on the client side. We complement our paper with a measurement study performed in Estonia where TLS client certificate authentication is widely used. We present our recommendations to improve the security and usability of TLS client certificate authentication. [...] Active security research is being conducted to improve password security, educate users on how to resist phishing attacks, and to fix CA trust issues [1], [2]. However, the attacks mentioned above can be prevented or their impact can be greatly reduced by using TLS client certificate authentication (CCA), since the TLS CCA on the TLS protocol level protects the client’s account on a legitimate server from a MITM attacker even in the case of a very powerful attacker who has obtained a valid certificate signed by a trusted CA and who thus is able to impersonate the legitimate server. We believe that TLS CCA has great potential for improving Internet security, and therefore in this paper we discuss current issues -

Create Decryption Policies to Control HTTPS Traffic in AsyncOS 10.0 for Cisco Web Security Appliance

Cisco Web Security Appliance How-To Guide Create Decryption Policies to Control HTTPS Traffic Cisco Public Create Decryption Policies to Control HTTPS Traffic in AsyncOS 10.0 for Cisco Web Security Appliance © 2017 Cisco and/or its affiliates. All rights reserved. Contents: About This Document; Introduction to HTTPS Decryption; Enabling HTTPS Detection on the WSA; HTTPS Detection on the WSA in Action; WSA Certificate Use for HTTPS Decryption; Introduction; Certificate Overview; Root Certificates; Server Certificates. About This Document This document is for Cisco engineers and customers who will deploy HTTPS decryption on the Cisco® Web Security Appliance (WSA) using AsyncOS® 10.0. This document covers: • Introduction to HTTPS decryption • Enabling HTTPS detection on the Web Security Appliance • HTTPS detection on the Web Security Appliance in action • Web Security Appliance certificate use for HTTPS decryption Introduction to HTTPS Decryption Decryption policies can handle HTTPS traffic in the following ways. They can: • Pass through encrypted traffic • Decrypt traffic and apply content-based access policies defined for HTTP traffic. This process also makes malware scanning possible • Drop the HTTPS connection • Monitor the request (taking no final action) as the web proxy continues to evaluate the request against policies that may lead to a final drop, pass through, or decryption Enabling HTTPS Detection on the WSA Step 1. -

Create and Manage Certificates

Create and Manage Certificates For Releases 16.1R2 and later, except as noted in the “Software Release Information” section. You create and manage certificates for the FlexVNF devices in the network on SteelConnect EX Director. When you first install the Director software, it includes a complete set of self-signed trusted certificates and a certificate repository that enable secure data transfer between the SteelConnect EX Director server and the client using secure socket layer (SSL) encryption. The self-signed certificates contain the common name (CN), which is the fully qualified domain name (FQDN) of the host. The certificates aid in authenticating Director servers and clients before they connect with each other. They also contain keys to establish an encrypted and secure connection. It is recommended that you use a self-signed certificate for proof-of-concept (POC) and lab testing. For production environments, you can import an existing private key and a certificate signed by an external Certification Authority (CA). Alternatively, you can generate certificates and a certificate repository using a different CN or domain. For self-signed certificates, the private key is 2048 bits and the hashing algorithm is SHA-256. Director nodes support X.509 certificates in the following formats: • PEM-encoded certificate—PEM encoding uses Base64-encoded ASCII files. It does not store the certification path or private key information. The Director server uses the versa_director_web_client.crt certificate based on PEM encoding. • DER-encoded certificate—DER encoding is the binary form of the certificate. This format supports encoding of private keys. Because the files are in binary format, you cannot view the certificate using a text editor. -
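The relationship between the two formats described above can be sketched briefly: a PEM certificate is the Base64 text form of the same bytes that a DER file stores in binary. The example below is a minimal illustration using Python's standard `ssl` module, not the Director tooling; the function names `pem_to_der` and `der_to_pem` are hypothetical, and the sample bytes are a placeholder, not a real certificate.

```python
import ssl


def der_to_pem(der_bytes: bytes) -> str:
    """Wrap binary DER bytes in Base64 text with BEGIN/END CERTIFICATE lines."""
    return ssl.DER_cert_to_PEM_cert(der_bytes)


def pem_to_der(pem_text: str) -> bytes:
    """Strip the PEM armor and decode the Base64 body back to binary DER."""
    return ssl.PEM_cert_to_DER_cert(pem_text)


# Placeholder payload (assumption for illustration): NOT a valid X.509 certificate.
sample_der = bytes(range(48))

sample_pem = der_to_pem(sample_der)   # readable in a text editor
round_trip = pem_to_der(sample_pem)   # identical binary bytes again

print(sample_pem)
```

These two standard-library helpers only add or remove the textual armor and do not parse the certificate, which is why the sketch works on placeholder bytes. Validating a real certificate would need an X.509 parser.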

Reflection for Secure IT Client for Windows PKI Tab (Secure Shell Settings)

User's Guide Reflection for Secure IT Version 7.2 Copyrights and Notices © 2016 Attachmate Corporation, a Micro Focus company. All rights reserved. No part of the documentation materials accompanying this Attachmate software product may be reproduced, transmitted, transcribed, or translated into any language, in any form by any means, without the written permission of Attachmate Corporation. Attachmate, the Attachmate logo, and Reflection are registered trademarks of Attachmate Corporation in the USA. All other trademarks, trade names, or company names referenced in this product are used for identification only and are the property of their respective owners. Contents: 1 Introduction. 2 Installation: Install Your Attachmate Product on a Workstation; Features Selection Tab; Upgrading from Previous Versions. 3 Getting Started: Start a New Terminal Session; Display the Configuration Toolbar; Transfer Files Using the FTP Client; Understanding Secure Shell. 4 Configuration: Settings Files; Secure Shell Client Configuration Files. 5 Encryption: Supported Cryptographic Algorithms; Federal Information Processing Standard (FIPS). 6 Authentication: Server Authentication using Public Keys; Server Authentication using Certificates; Client Authentication Methods; Connection Reuse in Secure Shell Sessions. 7 Public Key Authentication: Managing User Keys; Configure Public Key Authentication; Add Keys to Your User Keys List; Upload Client Public Keys to the Server; Change a User Key Passphrase; Export a User Key; Managing Host Keys; Configure Host Key Checking; Configure the Preferred Host Key Type; The Known Hosts File; Host Key Authenticity Dialog Box -

Exploring CA Certificate Control

What’s in a Name? Exploring CA Certificate Control Zane Ma† Joshua Mason† Manos Antonakakis‡ Zakir Durumeric§ Michael Bailey† ‡Georgia Institute of Technology §Stanford University †University of Illinois at Urbana-Champaign Abstract TLS clients rely on a supporting PKI in which certificate authorities (CAs)—trusted organizations—validate and cryptographically attest to the identities of web servers. A client’s confidence that it is connecting to the right server depends entirely on the set of CAs that it trusts. However, as we demonstrate in this work, the identity specified in CA certificates is frequently inaccurate due to lax naming requirements, ownership changes, and long-lived certificates. This not only muddles client selection of trusted CAs, but also prevents PKI operators and researchers from correctly attributing CA certificate issues to CA organizations. To help Web PKI participants understand the organizations that control each CA [...] other transgressions, prompted root store operators to begin discussions about removing trust in WoSign certificates. In July 2016, a new discovery revealed that StartCom, a seemingly unaffiliated CA in Israel, was able to issue certificates signed by WoSign (a Chinese company). A deeper investigation of the incident eventually revealed that “the transaction which completed the chain to give WoSign 100% ownership of StartCom completed on November 1st 2015” [59]. Further evidence emerged that StartCom’s CA certificates had likely been integrated with WoSign operations as early as December 2015 [56], when the removal of WoSign certificates from root stores appeared imminent. WoSign’s stealthy acquisition of StartCom emphasizes the importance of transparency around operational CA control for a secure web. -

Analysis of the HTTPS Certificate Ecosystem

Analysis of the HTTPS Certificate Ecosystem∗ Zakir Durumeric, James Kasten, Michael Bailey, J. Alex Halderman Department of Electrical Engineering and Computer Science University of Michigan, Ann Arbor, MI 48109, USA {zakir, jdkasten, mibailey, jhalderm}@umich.edu ABSTRACT We report the results of a large-scale measurement study of the HTTPS certificate ecosystem—the public-key infrastructure that underlies nearly all secure web communications. Using data collected by performing 110 Internet-wide scans over 14 months, we gain detailed and temporally fine-grained visibility into this otherwise opaque area of security-critical infrastructure. We investigate the trust relationships among root authorities, intermediate authorities, and the leaf certificates used by web servers, ultimately identifying and classifying more than 1,800 entities that are able to issue certificates vouching for the identity of any website. We uncover practices that may put the security of the ecosystem at risk, and we [...] supporting public key infrastructure (PKI) composed of thousands of certificate authorities (CAs)—entities that are trusted by users’ browsers to vouch for the identity of web servers. CAs do this by signing digital certificates that associate a site’s public key with its domain name. We place our full trust in each of these CAs—in general, every CA has the ability to sign trusted certificates for any domain, and so the entire PKI is only as secure as the weakest CA. Nevertheless, this complex distributed infrastructure is strikingly opaque. There is no published list of signed website certificates or even of the organizations that have trusted signing ability. In this work, we attempt to rectify this and shed light on the HTTPS certificate ecosystem.