Evaluating Search Engines and Defining a Consensus Implementation

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Microsoft Advertising Client Brochure

EXPAND YOUR CUSTOMER AUDIENCE WITH Microsoft Advertising. Microsoft Advertising. Intelligent connections. Thank you for considering Microsoft Advertising. Though you may already advertise through other platforms such as Google Ads, the Microsoft Search Network can boost traffic by offering an additional customer audience and increase diversity, growth and profits for your business. Globally, the Microsoft Search Network is continually expanding its reach: 11 billion monthly searches¹ in 37 markets. The Microsoft Search Network powers millions of searches in Canada:² 14 million unique searchers who represent 296 million monthly searches and 22% of the PC search market. Reach a diverse audience in Canada:³ 34% have a household income of $80K+ CAD; 56% are under the age of 45 (16-44 years old); 43% have graduated college (university/postgraduate degree).
High-quality partnerships and integration add value to the Microsoft Search Network:
• Bing powers AOL web, mobile and tablet search, providing paid search ads to AOL properties worldwide.
• Windows 10 drives more engagement and delivers more volume to the Microsoft Search Network.
• Our partnerships with carefully vetted search partners, like The Wall Street
The Microsoft Search Network reaches people across multiple devices and platforms:⁴
• Bing search is built into Windows 10, which is now on over 800 million devices.
• Bing powers Microsoft search, which is a unified search experience for enterprises including Office, SharePoint and Microsoft Edge.
• Bing is on phones, tablets, PCs and across many

Evaluating Exploratory Search Engines : Designing a Set of User-Centered Methods Based on a Modeling of the Exploratory Search Process Emilie Palagi

Evaluating exploratory search engines: designing a set of user-centered methods based on a modeling of the exploratory search process. Emilie Palagi. To cite this version: Emilie Palagi. Evaluating exploratory search engines: designing a set of user-centered methods based on a modeling of the exploratory search process. Human-Computer Interaction [cs.HC]. Université Côte d’Azur, 2018. English. NNT: 2018AZUR4116. tel-01976017v3. HAL Id: tel-01976017, https://tel.archives-ouvertes.fr/tel-01976017v3. Submitted on 20 Feb 2019. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. DOCTORAL THESIS. Evaluation of exploratory search engines: designing a set of user-centered methods based on a modeling of the exploratory search process. Emilie PALAGI, Wimmics (Inria, I3S) and Data Science (EURECOM). Submitted for the degree of Doctor of Computer Science of Université Côte d’Azur. Supervised by: Fabien Gandon. Before the jury, composed of: Christian Bastien, Professor, Université de Lorraine; Pierre De Loor, Professor, ENIB

Ecosia Launches First Ever Out-Of-Home and TV Advertising Campaign to Let New Users Know They Can Plant Trees with Their Searches - No Matter How Weird

Ecosia launches first ever Out-of-Home and TV advertising campaign to let new users know they can plant trees with their searches - no matter how weird. The green search engine is launching its first international campaign on April 5th across 12 European cities with JCDecaux and Sky Media ● Ecosia’s ‘Weird Search Requests’ campaign will be live in France, Germany, the Netherlands and the UK ● Its concept expands on an award-winning brand video created by students at Germany’s Filmakademie Baden-Wuerttemberg ● The campaign has been developed together with JCDecaux’s Nurture Programme, which helps start-ups and scale-ups across Europe to scale their brand, and Sky Media in the UK ● With more than 120 million trees already funded by users’ searches globally, Ecosia expects the campaign across 12 European cities to generate significant growth for tree planting. Paris, France, 31st March, 2021: Green search engine Ecosia is launching its first major international brand campaign in cities across Europe, to let millions of potential new users know that they can turn their searches, no matter how weird, into trees. The user-generated campaign speaks directly to users in each city, by displaying searches they have shared via social media. It has been developed together with JCDecaux, the world’s leading out-of-home advertising company, and Sky Media in the UK. The concept stems from an award-winning brand video created by students at Germany’s Filmakademie Baden-Wuerttemberg, originally the students’ own initiative for a class assignment. It shows Ecosia users making unusual searches on the app, which leads to a tree sprouting up wherever the search was made, ranging from a bus to a lecture hall, and ends with the words: “No matter how weird your search request, we’ll plant the trees anyway.” The film won a number of awards, including silver in the prestigious Young Director Award at Cannes.

PRESS RELEASE for IMMEDIATE RELEASE Media Contact Name: Kendall Garifo Email: [email protected]

PRESS RELEASE FOR IMMEDIATE RELEASE. Media Contact Name: Kendall Garifo. Email: [email protected] Ecosia and Trees for the Future announce partnership to plant trees in West Africa. SILVER SPRING, MARCH 2, 2018: Trees for the Future (TREES) and Ecosia join forces to plant trees to combat climate change, revitalize degraded lands and improve livelihoods. TREES fully supports Ecosia in its ambitious journey to plant one billion trees by 2020. Ecosia has committed to fund a new Forest Garden site in Kaffrine, Senegal and plant 1.2 million trees over a 46-month period. An estimated 300 farming families will benefit from this project. About Trees for the Future: Trees for the Future (TREES) is an international development nonprofit that meets a triple bottom line: poverty alleviation, hunger eradication, and healing the environment. Through its Forest Garden Approach, TREES trains farmers to plant and manage Forest Gardens that sustainably feed families and raise their incomes by 400 percent. TREES receives donations to implement its work in areas where it can have the greatest impact. TREES currently works across five countries in Sub-Saharan Africa: Cameroon, Kenya, Senegal, Tanzania, and Uganda. Since 1989, TREES has planted nearly 150 million trees. Learn more at trees.org. About Ecosia: Ecosia is the search engine that plants trees with its ad revenue. It is committed to donating at least 80 percent of its sponsored links income to tree planting projects around the world. It is Ecosia’s mission to plant 1 billion trees by 2020. Ecosia looks to support poor agricultural communities by planting trees.

The Ecosia on Campus Movement Tree Planting Quiz Q&A

Baden-Württemberg! ● What is Ecosia? ● The Ecosia on Campus movement ● Tree planting quiz ● Q&A
Fred Henderson, Project coordinator, Business development team ● Ecosia on Campus co-founder ○ Supporting students at 250 universities ● Organizational support ○ Corporate companies switching to Ecosia ● User support ○ Automating and answering user questions
Where does Ecosia plant trees? ● 60 projects across 30 countries ● Focus on biodiversity hotspots ● New contracts signed in India, Australia + Malawi
Why trees? ● Ecosia’s 100 million trees are sequestering between 1 and 3.5 million tons of carbon dioxide each year ● Trees benefit both people and planet ● Each project serves a unique purpose
Your trees in Ethiopia ● Project with environmental and humanitarian NGO Green Ethiopia ● Over 9 million trees planted
Your trees in Australia ● In response to the bushfires in 2020, Ecosia dedicates search revenue from a day to finance a project with ReforestNOW ● 26,000 trees planted in a subtropical area
Your trees in South America
How does Ecosia make money? ● Ecosia generates revenue from users clicking on ads ● Ecosia is completely transparent with its finances ● 80% of Ecosia’s profits each month go towards financing tree-planting projects ● 20% is put into a reserve to ensure Ecosia is always sustainable
“Ecosia is not just producing enough solar energy to power all Ecosia searches with renewables - we are producing twice as much.” Wolfgang Oels, Ecosia’s COO
Which Universities have switched?
What is Ecosia on Campus? ● A global movement of students campaigning to make Ecosia the default search engine at their university ● More than 250 active campaigns worldwide ● Over 200,000 trees financed by students’ searches

Final Study Report on CEF Automated Translation Value Proposition in the Context of the European LT Market/Ecosystem

Final study report on CEF Automated Translation value proposition in the context of the European LT market/ecosystem. FINAL REPORT. A study prepared for the European Commission DG Communications Networks, Content & Technology. Digital Single Market. This study was carried out for the European Commission by Luc MEERTENS, Khalid CHOUKRI, Stefania AGUZZI and Andrejs VASILJEVS. Internal identification: Contract number: 2017/S 108-216374; SMART number: 2016/0103. DISCLAIMER: By the European Commission, Directorate-General of Communications Networks, Content & Technology. The information and views set out in this publication are those of the author(s) and do not necessarily reflect the official opinion of the Commission. The Commission does not guarantee the accuracy of the data included in this study. Neither the Commission nor any person acting on the Commission’s behalf may be held responsible for the use which may be made of the information contained therein. ISBN 978-92-76-00783-8, doi: 10.2759/142151. © European Union, 2019. All rights reserved. Certain parts are licensed under conditions to the EU. Reproduction is authorised provided the source is acknowledged. CONTENTS: Table of figures; List of tables

Ecosia at Your Faculty

Ecosia at your Faculty. Imagine your faculty could contribute to reforestation around the world with every internet search made at the faculty. Ecosia makes this easy. Ecosia is a search engine, just like Google, and was founded in 2009 in Berlin. Like most search engines, it makes its money from clicks on advertisements. However, in contrast to other search engines, Ecosia uses 80% or more of its profit to plant trees. This way Ecosia is effectively fighting deforestation worldwide. Ecosia calculated that, on average, 45 searches are sufficient to plant one tree. So far, it has been able to plant over 120 million trees (February 2021). Ecosia is very transparent about its usage and storage of data, about privacy and about financial reporting. Ecosia on Campus is an initiative led by students around the world to implement Ecosia as the standard search engine at their universities. Over 175,000 trees have already been financed by students' searches! With your help as faculty we’ll be able to plant thousands more! In January 2021, Maastricht University (as the first university in the Netherlands) set the sustainable search engine Ecosia as the default search engine on 800 Student Desktops (SDT) in both library locations and the Randwyck Computer Facilities. To make UM even more sustainable we are planning to extend this project across all faculties. The sustainable search engine is easy to implement and it is possible to install it on every other UM workstation. For more information about the change at UM please refer to: https://www.maastrichtuniversity.nl/news/ecosia-now-default-800-student-desktops How to switch your standard search engine to Ecosia? The switch is very easy.

Theme: The Web. Search Engines. Search Engine

Theme: The Web. Search engines. Expected skills: - Understand how search engines work - Critically analyse the results of a search engine - Understand the issues involved in publishing information. Search engine: a software application for finding a resource (web page, image, video, file…) from a query expressed as keywords. 1. How search engines work * On scratch paper, try to sketch how a search engine works. Vocabulary to remember: - crawlers: indexing robots that automatically explore the Web by following the links between pages to collect resources. - indexing: keywords are listed, sorted and recorded on servers that store the data. - relevance: a result is relevant if it meets the need at the moment that need is expressed. The Web is a huge graph: each page is a node connected to other nodes by hyperlinks. It can be drawn in a very simplified way, as shown opposite. Mme Suaudeau SNT 2019-2020 * Starting from node E, traverse the preceding graph, listing the pages visited and the pages still to visit at each step:

| Action | Pages visited | Pages to visit |
|---|---|---|
| Visit E, linked to A and H | E | A H |
| Visit A, linked to B | E A | H B |
| Visit H, linked to J | E A H | B J |
| Visit B, linked to A and C | E A H B | J C (A already visited) |
| Visit J, linked to F | E A H B J | C F |
| Visit C, linked to A | E A H B J C | F (A already visited) |
| Visit F, linked to C, D, G and H | E A H B J C F | D G (C and H already visited) |
| Visit D, no outgoing links | E A H B J C F D | G |
| Visit G, no outgoing links | E A H B J C F D G | |

* What happens if the exploration starts at node A? The visit ends in 3 steps, but a large part of the graph has been "missed".
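The traversal in the exercise above is a breadth-first exploration: pages are visited in the order they were discovered, and a page already seen is never queued again. A minimal Python sketch of that procedure follows. The `LINKS` dictionary and the `crawl` function are illustrative names, not part of the worksheet, and the links are read off the traversal table because the worksheet's figure of the graph is not reproduced here.

```python
from collections import deque

# Outgoing links for each page, as read off the exercise's traversal table
# (an assumption: the worksheet's drawing of the graph is not available).
LINKS = {
    "E": ["A", "H"],
    "A": ["B"],
    "H": ["J"],
    "B": ["A", "C"],
    "J": ["F"],
    "C": ["A"],
    "F": ["C", "D", "G", "H"],
    "D": [],
    "G": [],
}


def crawl(start, links=LINKS):
    """Return the pages reachable from `start`, in breadth-first visit order."""
    visited = []                 # the "pages visited" column
    to_visit = deque([start])    # the "pages to visit" column
    seen = {start}               # pages already visited or already queued
    while to_visit:
        page = to_visit.popleft()
        visited.append(page)
        for target in links.get(page, []):
            if target not in seen:   # skip pages already visited or queued
                seen.add(target)
                to_visit.append(target)
    return visited


if __name__ == "__main__":
    print(crawl("E"))  # explores the whole graph
    print(crawl("A"))  # stops early: only A, B and C are reachable from A
```

Starting from A, the queue empties after three pages because none of A, B or C links out to the rest of the graph, which is why the choice of starting page matters to a crawler.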

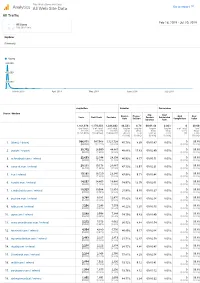

Thiswaifudoesnotexist.Net: Google Analytics: All Traffic 20190218-20190720

This Waifu Does Not Exist. Analytics: All Web Site Data. All Traffic, Feb 18, 2019 - Jul 20, 2019. All Users: 100.00% Users. Explorer: Summary.

[Chart: Users per month, March 2019 to July 2019]

| Source / Medium | Users | New Users | Sessions | Bounce Rate | Pages / Session | Avg. Session Duration | Goal Conversion Rate | Goal Completions | Goal Value |
|---|---|---|---|---|---|---|---|---|---|
| Total | 1,161,978 (100.00% of total) | 1,170,333 (100.07% of total: 1,169,566) | 1,384,602 (100.00% of total) | 46.58% | 8.74 | 00:01:48 | 0.00% | 0 | $0.00 |
| 1. (direct) / (none) | 966,071 (82.06%) | 967,968 (82.71%) | 1,121,738 (81.02%) | 45.78% | 8.39 | 00:01:47 | 0.00% | 0 (0.00%) | $0.00 (0.00%) |
| 2. google / organic | 30,702 (2.61%) | 28,990 (2.48%) | 44,887 (3.24%) | 48.86% | 15.63 | 00:02:49 | 0.00% | 0 (0.00%) | $0.00 (0.00%) |
| 3. m.facebook.com / referral | 22,693 (1.93%) | 22,544 (1.93%) | 24,304 (1.76%) | 46.93% | 4.77 | 00:00:51 | 0.00% | 0 (0.00%) | $0.00 (0.00%) |
| 4. away.vk.com / referral | 20,111 (1.71%) | 19,178 (1.64%) | 26,667 (1.93%) | 47.13% | 13.97 | 00:02:31 | 0.00% | 0 (0.00%) | $0.00 (0.00%) |

Ecosia Brief

CSSD Persuasive Brief Number B144723, 19th April 2019. EVALUATING Ecosia as a default search engine for UoE.
Key points: Ecosia is an alternative search engine that uses ad revenue from searches to fund tree planting projects that contribute to the storage of approx. 2.5 million tonnes of CO2e. The UoE could: • Replace Bing with Ecosia as the default search engine on the browser, Edge • Trial Ecosia as the default search engine on all browsers • Offset current search engine emissions with an independent tree planting project
What is Ecosia? Ecosia.org is known as a ‘sustainable’ search engine. The profits generated from every search using Ecosia go towards funding 20 tree planting projects across 15 countries. Use of Ecosia has dramatically increased since 2017, with 7 million active users currently. Since they launched in 2009, Ecosia has been responsible for the planting of over 55,000,000 trees, storing approximately 2.5 million tonnes of CO2e [1]. The University of Edinburgh (UoE) has set targets for carbon neutrality by 2040 (‘Zero by 2040’), which may be aided by switching to a carbon negative search engine as its default choice.
Why is it different? UoE currently has 2 search engine defaults across all internet browsers: Google and Bing. Every search on a search engine can generate revenue from displaying adverts on search results pages. Search engines use this revenue to fund the parent company and their projects.
… Sahel region; limit the lowering of the water table in Kenya, Uganda, Ghana and Spain; reduce rural poverty in local communities; increase biodiversity by allowing passage of animals along tree corridors through human territories in Madagascar, Brazil and Uganda, and; help

Doug Elliott, City Manager RE: Friday Letter DATE: April 9, 2021

MEMORANDUM TO: Mayor Smith and Members of Council FROM: Doug Elliott, City Manager RE: Friday Letter DATE: April 9, 2021. POTENTIAL INCOME TAX REVENUE LOSS: In March 2020, Governor DeWine signed HB197 into law to respond to the public health emergency and economic crisis precipitated by the COVID-19 pandemic. This legislation included Section 29, which, in effect, states that income taxes should be collected by the municipality where employers are located and not the city or village where remotely working employees reside. Section 29 will expire 30 days after the Governor ends his declaration of a state of emergency. Legislators have introduced HB157 and SB97 (134th General Assembly), which seek to immediately repeal the Section 29 provision. This repeal would allow jurisdictions of residence to collect income tax from employees who are working from home, rather than the jurisdiction where they worked pre-pandemic. Also, there are now four lawsuits challenging the legality of collecting city income taxes from people based on where they worked ahead of the pandemic, even if they have since been working elsewhere remotely. The Regional Income Tax Agency (RITA), which administers the City’s income tax, has amended its current refund form to allow people to submit claims for refunds if they have been working at home during the coronavirus pandemic. But RITA is making no promises to pay those refunds, instead cautioning on the form: “RITA will hold your request for refund in a suspended status until this litigation is concluded.” I have estimated the City of Oxford’s loss in income tax revenue at $1.5 million (or more).

Appendix I: Search Quality and Economies of Scale

Appendix I: search quality and economies of scale
Introduction
1. This appendix presents some detailed evidence concerning how general search engines compete on quality. It also examines network effects, scale economies and other barriers to search engines competing effectively on quality and other dimensions of competition.
2. This appendix draws on academic literature, submissions and internal documents from market participants.
Comparing quality across search engines
3. As set out in Chapter 3, the main way that search engines compete for consumers is through various dimensions of quality, including relevance of results, privacy and trust, and social purpose or rewards.¹ Of these dimensions, the relevance of search results is generally considered to be the most important.
4. In this section, we present consumer research and other evidence regarding how search engines compare on the quality and relevance of search results.
Evidence on quality from consumer research
5. We reviewed a range of consumer research produced or commissioned by Google or Bing, to understand how consumers perceive the quality of these and other search engines. The following evidence concerns consumers’ overall quality judgements and preferences for particular search engines:
6. Google submitted the results of its Information Satisfaction tests. Google’s Information Satisfaction tests are quality comparisons that it carries out in the normal course of its business. In these tests, human raters score unbranded search results from mobile devices for a random set of queries from Google’s search logs.
• Google submitted the results of 98 tests from the US between 2017 and 2020. Google outscored Bing in each test; the gap stood at 8.1 percentage points in the most recent test and 7.3 percentage points on average over the period.