Challenge the Hawk-Eye!

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

WTT . . . at a Glance

WTT . At a glance World TeamTennis Pro League presented by Advanta Dates: July 5-25, 2007 (regular season) Finals: July 27-29, 2007 – WTT Championship Weekend in Roseville, Calif. July 27 & 28 – Conference Championship matches July 29 – WTT Finals What: 11 co-ed teams comprised of professional tennis players and a coach. Where: Boston Lobsters................ Boston, Mass. Delaware Smash.............. Wilmington, Del. Houston Wranglers ........... Houston, Texas Kansas City Explorers....... Kansas City, Mo. Newport Beach Breakers.. Newport Beach, Calif. New York Buzz ................. Schenectady, N.Y. New York Sportimes ......... Mamaroneck, N.Y. Philadelphia Freedoms ..... Radnor, Pa. Sacramento Capitals.........Roseville, Calif. St. Louis Aces................... St. Louis, Mo. Springfield Lasers............. Springfield, Mo. Defending Champions: The Philadelphia Freedoms outlasted the Newport Beach Breakers 21-14 to win the King Trophy at the 2006 WTT Finals in Newport Beach, Calif. Format: Each team is comprised of two men, two women and a coach. Team matches consist of five events, with one set each of men's singles, women's singles, men's doubles, women's doubles and mixed doubles. The first team to reach five games wins each set. A nine-point tiebreaker is played if a set reaches four all. One point is awarded for each game won. If necessary, Overtime and a Supertiebreaker are played to determine the outright winner of the match. Live scoring: Live scoring from all WTT matches featured on WTT.com. Sponsors: Advanta is the presenting sponsor of the WTT Pro League and the official business credit card of WTT. Official sponsors of the WTT Pro League also include Bälle de Mätch, FirmGreen, Gatorade, Geico and Wilson Racquet Sports. -

Laykold® Gel Product Brochure

APT PROUDLYINTRODUCES GEL The revolutionary new cushion court system Asphalt/Concrete Primer .1 Laykold Gel .2 Wearcoat .3 Bond Coat .4 Laykold Filler .5 Laykold Topcoat .6 Line Primer and White Line Paint .7 6 7 5 4 3 2 1 SPORTS AND LEISURE SYNTHETIC SURFACE SPECIALISTS www.aptasiapacific.com.au • 1800 652 548 James Sheahan College, Orange, NSW GEL Laykold® Gel is a revolutionary, technologically advanced, seamless cushioned court system manufactured utilising 60%+ renewable resources. The all-weather court surface offers East Fremantle Lawn Tennis Club, WA a wide variety of benefits to all ages and ability. From recreational to professional athlete, Laykold Gel provides up to 17% force reduction enhancing player performance by reducing joint impact and body fatigue. The chemistry is complex but the result is simple. The evolution of sports equipment over the last The fact is most of the world’s most prominent 30 years has been remarkable. Tennis racquets tennis tournaments are STILL being played on surface have become lighter, with far less vibration systems that were developed during the tennis boom of the 1970’s, which lack the advancement of transfer to the forearm and elbow. Even tennis today’s modern technology. Fortunately, Advanced racquet string holes have been adjusted for Polymer Technology (APT) has been working hard to increased racquet speed and power. Athletic bring modern chemistry and innovation to the tennis apparel is utilising lighter weight, moisture surface. wicking fabrics, some of which even provide UV protection. Modern materials are being used to APT is using its chemical expertise to develop modern construct balls with more consistent bounce and tennis surfaces and is helping the game of tennis catch improved aerodynamics. -

Settling Disputes

SETTLING DISPUTES Disagreements between players on line calling and scoring are common within competitive tennis. When disputes occur within official competitions it’s important that they are handled in a fair, consistent and efficient manner. The following steps detail how to settle disputes. LINE CALLS This involves a player making a subjective decision which ultimately could have a big effect on the outcome of the match. This a hot issue particularly for Juniors who for many reasons, often do not accurately see the ball in relation to lines. Witnessed Line Calls If an referee or court supervisor witnesses a blatantly incorrect call, they can go on-court and tell the player that the incorrect call, was an unintentional hindrance to his/her opponent and the point will be replayed, unless it was a point-ending shot and therefore the point will be awarded to the opponent. In the case of matches played on a clay court, the point is awarded to the opponent regardless of whether it is a point-ending shot or not. Unwitnessed Line Call Dispute (non-clay courts): • Ask the player whose call it was, if they are sure of their call. o If yes, the call stands. o If no, then the point should be replayed. • If you think it would be beneficial to have the match supervised (and this is possible), have the Supervisor stay on the court for the remainder of the match. Advise players that he/she will correct any clearly incorrect calls. • If it is not possible to allocate an adult to supervise the match, you should watch the next few points from off the court and then keep a discreet but watchful eye on the rest of the match. -

2019 World Teamtennis Media Information

2019 World TeamTennis Media Information FACTS & FIGURES ..................................................................................................... 2 BROADCAST OUTLETS & HAWK-EYE LIVE ................................................................. 4 TEAM ROSTERS ......................................................................................................... 6 2019 WTT SCHEDULE ............................................................................................... 8 TEAM VENUES ........................................................................................................ 10 MORE ABOUT WORLD TEAMTENNIS ...................................................................... 11 Important Things To Know .................................................................................. 12 Innovations & Firsts ............................................................................................ 14 Milestones .......................................................................................................... 15 WTT FINALS & CHAMPIONS ................................................................................... 17 FACTS & FIGURES What: World TeamTennis showcases the best in professional tennis with the innovative team format co-founded by Billie Jean King in the 1970s. Recognized as the leader in professional team tennis competition, WTT features many of the world’s best players competing annually for the King Trophy, the league’s championship trophy named after King. 2019 Teams: New York -

The Official Rulebook

THE OFFICIAL RULEBOOK 2020-2021 Comments and suggestions regarding this rulebook are welcome. If you have any feedback, please submit in writing to our address below. Editors: Bob Booth, Cory Brooks, Christopher Cetrone, Kevin Foster, Rebel Good, Lee Ann Haury, Steve Laro, Steven Menoff, Anthony Montero, Courtney Potkey, Darren Potkey, Dean Richardville, Chris Rodger, Andrew Rogers, Richard Rogers, Timothy Russell, Reliford Sanders, Isabelle Snyder, Brad Taylor, Chris Wilson and Sharon Wright. Intercollegiate Tennis Association 1130 E University Drive Suite 115 Tempe, AZ 85281 www.ITATennis.com [email protected] © 2020 by the Intercollegiate Tennis Association. All rights reserved. No part of this book may be reproduced in any form without the written consent of the Intercollegiate Tennis Association. Printed in the United States of America Cover designed by Jacob Dye. Produced for the Intercollegiate Tennis Association by Courtney Potkey i ii FOREWORD Dear Colleagues: As COVID-19 changes our lives, all of us working in the arena of college athletics have been reminded of the lessons learned on and off the field and courts of competition — persistence, getting up from setbacks and moving forward to compete again. The ITA and college tennis continue to be leaders in the sports world at this critical moment, working hard to demonstrate how tennis can return as a safe sport. In recent years striving together has been an overarching theme of the ITA… players, coaches and officials committing to fashion an environment whereby exceptional tennis technique, strategy, tactics and sportsmanship are combined with well-considered rules to create contests of “worthy rivals.” As the 2020-2021 competitive season gets under way, once again we want to ensure that tennis matches are conducted in a safe and fair manner and that attention to the health and welfare of all involved is coupled with qualities of character such as integrity, honesty and trustworthiness. -

TENNIS TENNIS SPORTIME’S 10 and Under Tennis Program

U10SPORTIME SPORTIME U10 TENNIS TENNIS SPORTIME’s 10 and Under Tennis Program PROGRAM INFORMATION SPORTIME WORLD TOUR SPORTIME’s U10 Pathway for our youngest, newest players aims to The SPORTIME World Tour is our “Grand Slam” develop the whole player, physically, mentally, tactically and technically, competition, held at multiple SPORTIME sites each from his or her very first lesson. In the SPORTIME U10 Tennis Pathway, year, which allows students to compete in an age junior players work through clearly defined stages of development that and level appropriate format. With four separate follow an internationally accepted progression of court sizes (red 36’, events held during each indoor season, each orange 60’, green/yellow 78’), ball types (red, orange, green, yellow) SPORTIME World Tour event is based on a and net heights that make it possible for kids to actually play tennis Grand Slam theme, assuring a festive and social from the moment they step on a tennis court. experience for kids and parents. Partcipation in SPORTIME’s U10 Tennis combines the best principles of child learning World Tour is included with all Red, Orange and with world-class tennis instruction to create a truly innovative and Green level programs. engaging program. Our tennis kids don’t just take tennis lessons, SPORTIME World Tour events use a Flexi-Team they get sent on missions, acquire skills, collect points and achieve format, which means that teams are formed on the milestones. Our “gamification” approach is a part of SPORTIME’s fun days of the events and that new friends and partners will be found. -

Leg Before Wicket Douglas Miller Starts to Look at the Most Controversial Form of Dismissal

Leg Before Wicket Douglas Miller starts to look at the most controversial form of dismissal Of the 40 wickets that fell in the match between Gloucestershire and Glamorgan at Cheltenham that ended on 1st August 2010 as many as 18 of the victims were dismissed lbw. Was this, I wondered, a possible world record? Asking Philip Bailey to interrogate the files of Cricket Archive, I discovered that it was not: back in 1953/54 a match between Patiala and Delhi had seen 19 batsmen lose their wickets in this way. However, until the start of the 2010 season the record in English first-class cricket had stood at 17, but, barely credibly, Cheltenham had provided the third instance of a match with 18 lbws in the course of the summer. Gloucestershire had already been involved in one of these, against Sussex at Bristol, while the third occasion was the Sussex-Middlesex match at Hove. Was this startling statistic for 2010 an indication that leg before decisions are more freely given nowadays? It seemed to correlate with an impression that modern technology has given umpires a better feel for when a ball is likely to hit the wicket and that the days when batsmen could push forward and feel safe were now over. I determined to dig deeper and examine trends over time. This article confines itself to matches played in the County Championship since World War I. I propose looking at Tests in a future issue. The table below shows how the incidence of lbw dismissals has fluctuated over time. -

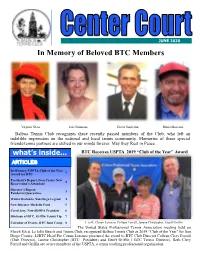

In Memory of Beloved BTC Members

JUNE 2020 In Memory of Beloved BTC Members Virginia Glass Lois Simmons David Sanderlin Mario Moreano Balboa Tennis Club recognizes these recently passed members of the Club, who left an indelible impression on the national and local tennis community. Memories of these special friends/tennis partners are etched in our minds forever. May they Rest in Peace. what ’s inside... BTC Receives USPTA 2019 “Club of the Year” Award ARTICLES In Memory, USPTA Club of the Year 1 Award for BTC President’s Report, Ivan Carter New 2 Reservation’s Attendant Director’s Report, 3 Pandemic/Quarantine Walter Redondo, San Diego Legend 4 New Director Michelle Ford 5 Carol Jory, New SDDTA President 6 Birdman of BTC, Griffin Tennis Tip 7 Calendar of Events, BTC Boot Camp 8 L to R: Conan Lorenzo, Colleen Ferrell, Janene Christopher, Geoff Griffin The United States Professional Tennis Association meeting held on March 8th at La Jolla Beach and Tennis Club, recognized Balboa Tennis Club as 2019 “Club of the Year" for San Diego County. LJBTC Head Pro Conan Lorenzo presented the award to BTC Club Director Colleen Clery Ferrell (Club Director), Janene Christopher (BTC President) and Geoff Griffin ( BTC Tennis Director). Both Clery Ferrell and Griffin are active members of the USPTA, a tennis teaching professional organization. PAGE 2 The President Serves It Up By BTC President Janene Christopher The Club reopened and we are welcoming everyone back!!! You returned to being greet- ed by our smiling, helpful staff, new LED lights throughout the facility and some awe- some wall graphics in the locker rooms. -

The World Teamtennis Pro League Today Announced the League Has Added a Franchise in Washington, D.C

World TeamTennis adds team, Washington Kastles, in Washington D.C. NEW YORK, N.Y. (February 14, 2008) - The World TeamTennis Pro League today announced the League has added a franchise in Washington, D.C. The Washington Kastles, owned by a group led by venture capitalist and entrepreneur Mark Ein, will play their home matches at a Washington, D.C., location soon to be announced. The 2008 WTT regular season runs July 3-23 with the WTT Championship Weekend set for July 24-27 in Roseville, Calif. World TeamTennis, co-founded in 1974 by tennis legend Billie Jean King, features several generations of tennis pros competing in a co-ed pro league in communities throughout the U.S. each summer. This is the first Washington, D.C.-based team in the League’s 33-year history. “Washington D.C. is a world-class sports market that will be a showcase for World TeamTennis,” said WTT CEO/Commissioner Ilana Kloss. “We’ve looked at this market for several years as a great location for expansion. Thanks to the leadership and vision of Mark Ein, everything came together for it to happen this year.” “Area fans have supported tennis for many years and we think they will really embrace our unique brand of tennis,” added Kloss. The Kastles will select their lineup when they draft sixth out of 11 teams at the WTT Player Draft on April 1 in Miami. Washington will play seven home matches and seven matches on the road during the month-long season. The team is currently looking at several potential locations to build a temporary stadium for the season. -

Tennis Rules and Etiquette Guidelines Our Spectator Policy, This Will Not Be Tolerated

Tennis Rules and Etiquette Guidelines our spectator policy, this will not be tolerated. If someone does question you on a line call or a ruling Tennis you have the right to remove them/ask them to leave the playing area. Should they refuse to leave the playing area, let them and the player know that the cheat sheet player will now receive a code violation related to this action and will continue to do so until either one of two things occur – Rules and a) The parent/spectator leaves the area b) The player reaches his or her 3rd code violation and is defaulted from match play. Etiquette Parent/Spectator Continues to be Unruly: These are grounds for immediate removal from the playing area and facility. Should they refuse to leave the premises and having gone through Code Violations, then do Guidelines not hesitate to contact security or the police to have them removed. Safety of the players, officials, tournament staff and facility staff always comes first. Should an Incident Occur: If something does take place, please contact USTA Northern: http://www.northern.usta.com. Tennis Rules and Etiquette Guidelines Tennis Rules and Etiquette Guidelines Etiquette (for parent or spectators) 1. Any attempt by a spectator (which includes the above mentioned) to question an official, tournament staff or 1. Use primarily your “inside voice” when watching player regarding a line call or other ruling during match matches. play will be cause for immediate removal from the playing area and, if warranted, from the premises. 2. Don’t cheer, shout encouragement, or applaud during a point or serve. -

Week 1: Lesson Plans -- Contact Point/Forehand 6Th Grade Program -- AAACTA

Week 1: Lesson Plans -- Contact Point/Forehand 6th Grade Program -- AAACTA -Show LISTENING POSITION -- hug racquet while they listen to coach and watch demo -Explain drill verbally in less than 20 seconds or less and DEMONSTRATE (visually). -Ask players to “show you” they can do the skill, or ask “Can you do that?” After you demonstrate skill -USE players’ names constantly -Show WHOLE SHAPE of Forehand Time Activity Activity Decription/Notes (approx) Name/Purpose 3 min Intros Names! Overview, Start learning player names and using them right away! excited they are here. 3 min Dynamic Circle Jog/Ball Toss: Players and coaches get in BIG circle using whole side of court. Warm Up Gets heart rate up, legs Depending on size of group, have about 1 ball for every 3-4 players. Jog slowly in circle warmed up and watching and GENTLY toss ball over your should to the person behind you. She gently tosses it incoming ball. behind her,to next player etc. Switch direction. 3 min Athletic Ball Drop Partner 1 holds 2 balls (1 in each hand) with arms outstretched to the sides of body at shoulder Warm up height. Partner 2 stands about 5 feet away. Partner 1 opens fingers and lets one ball drop. Partner 2 tries to catch the ball in 1 or 2 bounces (partner 1 calls 1 or 2 bounces). Increase/decrease distance from partner to make harder/easier. Anticipation/First step quickness 1 min Racquet Anatomy of Racquet Racquet Face, Butt, Grip, Handle, Tip, Strings, Sweet Spot, Throat, etc. Warm up 3 min Racquet Racquet Handling Roll ball around racquet face, try popping ball up on strings with palm up, then palm Warm up down (no higher than eyebows/forehead); try dribbling -- no higher than waist. -

Technical Interpretations

TECHNICAL INTERPRETATIONS LAW 36: LEG BEFORE WICKET There are several points that an umpire must consider when an appeal is made for LBW. The final and most important question is “would the ball have hit the stumps?” but more about that later. (1) Firstly, the delivery must not be a “no ball”. (2) The ball MUST pitch in line with the stumps or outside the off stump. Any ball pitching outside leg stump CANNOT BE OUT LBW. (3) The ball must not come off the bat or the hand holding the bat before it hits the batsman on the pads or body. (4) Where does the ball hit striker? – only the first impact is considered. Quite often the ball will hit the front pad and deflect onto the back pad. (5) If the striker was attempting to play the ball the ball must hit him in line between wicket and wicket, even if the impact is above the level of the bails. (6) If the striker was making no genuine attempt to play the ball, then the impact can be outside off stump. (7) Where would the ball have gone if not interrupted by impact on striker? (8) The ball does not always pitch before hitting the batsman. If it is a full toss the umpire MUST assume the path at impact will continue after impact. (9) The “Off” side of wicket is now clearly defined as when the batsman takes his stance and the ball comes into play – ie when the bowler starts his run up or if he has no run up, his bowling action The term “LBW” whilst meaning Leg Before Wicket also allows the batsman to be out if the ball strikes any other part of his body, even his shoulder or head! All of the above points must all be satisfied for the batsman to be out under this Law.