Using a Machine Translation Tool to Counter Cross-Language Plagiarism

Recommended publications

TANIZE MOCELLIN FERREIRA Narratology and Translation Studies

TANIZE MOCELLIN FERREIRA Narratology and Translation Studies: an analysis of potential tools in narrative translation PORTO ALEGRE 2019 UNIVERSIDADE FEDERAL DO RIO GRANDE DO SUL INSTITUTO DE LETRAS PROGRAMA DE PÓS-GRADUAÇÃO EM LETRAS MESTRADO EM LITERATURAS DE LÍNGUA INGLESA LINHA DE PESQUISA: SOCIEDADE, (INTER)TEXTOS LITERÁRIOS E TRADUÇÃO NAS LITERATURAS ESTRANGEIRAS MODERNAS Narratology and Translation Studies: an analysis of potential tools in narrative translation Tanize Mocellin Ferreira Dissertação de Mestrado submetida ao Programa de Pós-graduação em Letras da Universidade Federal do Rio Grande do Sul como requisito parcial para a obtenção do título de Mestre em Letras. Orientadora: Elaine Barros Indrusiak PORTO ALEGRE Agosto de 2019 2 CIP - Catalogação na Publicação Ferreira, Tanize Mocellin Narratology and Translation Studies: an analysis of potential tools in narrative translation / Tanize Mocellin Ferreira. -- 2019. 98 f. Orientadora: Elaine Barros Indrusiak. Dissertação (Mestrado) -- Universidade Federal do Rio Grande do Sul, Instituto de Letras, Programa de Pós-Graduação em Letras, Porto Alegre, BR-RS, 2019. 1. tradução literária. 2. narratologia. 3. Katherine Mansfield. I. Indrusiak, Elaine Barros, orient. II. Título. Elaborada pelo Sistema de Geração Automática de Ficha Catalográfica da UFRGS com os dados fornecidos pelo(a) autor(a). Tanize Mocellin Ferreira Narratology and Translation Studies: an analysis of potential tools in narrative translation Dissertação de Mestrado submetida ao Programa de Pós-graduação em Letras -

Defining and Avoiding Plagiarism: The WPA Statement on Best Practices (Council of Writing Program Administrators, January 2003)

Defining and Avoiding Plagiarism: The WPA Statement on Best Practices Council of Writing Program Administrators (http://www.wpacouncil.org), January 2003. Plagiarism has always concerned teachers and administrators, who want students’ work to represent their own efforts and to reflect the outcomes of their learning. However, with the advent of the Internet and easy access to almost limitless written material on every conceivable topic, suspicion of student plagiarism has begun to affect teachers at all levels, at times diverting them from the work of developing students’ writing, reading, and critical thinking abilities. This statement responds to the growing educational concerns about plagiarism in four ways: by defining plagiarism; by suggesting some of the causes of plagiarism; by proposing a set of responsibilities (for students, teachers, and administrators) to address the problem of plagiarism; and by recommending a set of practices for teaching and learning that can significantly reduce the likelihood of plagiarism. The statement is intended to provide helpful suggestions and clarifications so that instructors, administrators, and students can work together more effectively in support of excellence in teaching and learning. What Is Plagiarism? In instructional settings, plagiarism is a multifaceted and ethically complex problem. However, if any definition of plagiarism is to be helpful to administrators, faculty, and students, it needs to be as simple and direct as possible within the context for which it is intended. Definition: In an instructional setting, plagiarism occurs when a writer deliberately uses someone else’s language, ideas, or other original (not common-knowledge) material without acknowledging its source. This definition applies to texts published in print or on-line, to manuscripts, and to the work of other student writers. -

LIT 3003: the Forms of Narrative - Narrating the Ineffable Section 025F

LIT 3003: The Forms of Narrative - Narrating the Ineffable Section 025F Instructor: Cristina Ruiz-Poveda Email: [email protected] Meetings: MTWRF Period 5 (2-3:15pm) at TUR 2350 Office hours: MW 3:15-4 pm, and by appointment at TUR 4415 "Whereof one cannot speak, thereof one must be silent." From Ludwig Wittgenstein’s Tractatus The Tao that can be spoken is not the eternal Tao The name that can be named is not the eternal name From Lao Tzu’s Tao Te Ching Course description: Storytelling is such an inherent part of human experience that we often overlook how narratives, even those which may be foundational to our world views, are articulated. This course serves as an introduction to narrative forms, as well as a critical exploration of the potential of narratology (the theory of narrative) to analyze stories of ineffable experiences. How do narratives convey elusive existential and spiritual concepts? Are there shared formal and procedural traits of such narratives? In this class, we will learn the foundations of narratology, so as to critically analyze how different narrative media (literature, visual culture, film, etc.) represent intense but seemingly incommunicable human experiences. These stories will serve as a vehicle to explore the limits of storytelling and to analyze narrative strategies at those limits. Students will also experiment with different forms of narrative through creative activities. Please note: this is not a course in religion or religious art history. This course is a survey of narratology: it offers theoretical tools to analytically understand narratives about profound existential concerns and transcendental experiences. Course goals and outcomes: by the end of the semester you should be able to..

Loan and Calque Found in Translation from English to Indonesian

Journal of Literature, Languages and Linguistics www.iiste.org ISSN 2422-8435 An International Peer-reviewed Journal DOI: 10.7176/JLLL Vol.54, 2019 Loan and Calque Found in Translation from English to Indonesian Marlina Adi Fakhrani Batubara A Postgraduate student of Translation Studies in University of Gunadarma, Depok, Indonesia Abstract The aim of this article is to find out the cause of using loan and calque found in translation from English to Indonesian, find out which strategy is mostly used in translating some of the words and phrases found in translation from English to Indonesian and what form that is usually use loan and calque in the translation. Data of this article is obtained from the English novel namely Murder in the Orient Express by Agatha Christie and its Indonesian translation. This article concluded that out of 100 data, 57 data uses loan and 47 data uses calque. Moreover, it shows that 70 data are in the form of words that uses loan or calque and 30 data are in the form of phrases that uses loan or calque. Keywords: Translation, Strategy, Loan and Calque. DOI: 10.7176/JLLL/54-03 Publication date: March 31st 2019 1. INTRODUCTION Every country has their own languages in order to express their intentions or to communicate. Language plays a great tool for humans to interact with each other. Goldstein (2008) believed that “We can define language as a system of communication using sounds or symbols that enables us to express our feelings, thoughts, ideas and experiences.” (p.

Rethinking Pauline Hopkins

Rethinking Pauline Hopkins: Plagiarism, Appropriation, and African American Cultural Production Downloaded from https://academic.oup.com/alh/article/30/4/e3/5099108 by guest on 29 September 2021 Introduction, pp. e4–e8 By Richard Yarborough The Practice of Plagiarism in a Changing Context, pp. e9–e13 By JoAnn Pavletich Black Livingstone: Pauline Hopkins, Of One Blood, and the Archives of Colonialism, pp. e14–e20 By Ira Dworkin Appropriating Tropes of Womanhood and Literary Passing in Pauline Hopkins’s Hagar’s Daughter, pp. e21–e27 By Lauren Dembowitz Introduction Richard Yarborough* I recall first encountering Pauline Hopkins in graduate school in the mid-1970s. Conducting research on her work entailed tolerating the eyestrain brought on by microfilm and barely legible photocopies of the Colored American Magazine. I also vividly remember the appearance of the 1978 reprint edition of her novel Contending Forces (1900) in Southern Illinois University Press’s Lost American Fiction series. I experienced both gratification at the long-overdue attention the novel was garnering and also no little distress upon reading Gwendolyn Brooks’s afterword to the text. While acknowledging our “inevitable indebtedness” to Hopkins, Brooks renders this brutal judgment: “Often doth the brainwashed slave revere the modes and idolatries of the master. And Pauline Hopkins consistently proves herself a continuing slave, despite little bursts of righteous heat, throughout Contending Forces” (409, 404–405). This view of the novel as a limited, compromised achievement reflects the all-too-common lack at the time of a nuanced, informed engagement with much post-Reconstruction African American literature broadly and with that produced by African American women in particular.

Untranslating the Neo-Avant-Gardes Luke Skrebowski

INTRODUCTION Untranslating the Neo-Avant-Gardes Luke Skrebowski © 2018 ARTMargins and the Massachusetts Institute of Technology doi:10.1162/ARTM_e_00206 Downloaded from http://www.mitpressjournals.org/doi/pdf/10.1162/artm_e_00206 by guest on 26 September 2021 This guest-edited issue aims to trouble assumptions about the translatability of various global neo-avant-gardes into canonical Anglo-American terms and categories (including Pop, Minimalism, Conceptualism), however problematized and expanded they may be in the process. The assumptions I have in mind tend to prop up the cultural hegemony of Western institutions by means of a logic of inclusion that serves to reinforce rather than destabilize the status quo. To this end, this issue foregrounds the problem of translation, and specifically the figure of “the untranslatable,” to address the mediation of global neo-avant-gardes in a more reflexive way, going beyond the often nebulous and frequently one-sided notions of “influence,” “interaction,” or “contact” that continue to characterize much of the discourse on the global neo-avant-gardes. The notion of the untranslatable is borrowed from Barbara Cassin, via Emily Apter and Jacques Lezra, and is developed here for comparative work in, but also in a certain sense against, global art history. Cassin’s multilingual philosophical lexicon Vocabulaire européen des philosophies: Dictionnaire des intraduisibles (2004) consists of a select number of terms drawn from particular national and linguistic philosophical traditions which are chosen precisely for their “untranslatability,” a term that, as Cassin insists, “n’implique nullement que les termes en question ... ne soient pas traduits et ne puissent pas l’être—l’intraduisible c’est plutôt ce qu’on ne cesse pas de (ne pas) traduire.” (“In no way implies that the terms in question ...

Chapter One: Narrative Point of View and Translation

Chapter One: Narrative Point of View and Translation 1. Introduction In ‘The Russian Point of View’ Virginia Woolf explains that most of us have to depend “blindly and implicitly” on the works of translators in order to read a Russian novel (1925a: 174). According to Woolf, the effect of translation resembles the impact of “some terrible catastrophe”. Translation is a ‘mutilating’ process: When you have changed every word in a sentence from Russian to English, have thereby altered the sense a little, the sound, weight, and accent of the words in relation to each other completely, nothing remains except a crude and coarsened version of the sense. Thus treated, the great Russian writers are like men deprived by an earthquake or a railway accident not only of all their clothes, but also of something subtler and more important – their manner, the idiosyncrasies of their character. What remains is, as the English have proved by the fanaticism of their admiration, something very powerful and impressive, but it is difficult to feel sure, in view of these mutilations, how far we can trust ourselves not to impute, to distort, to read into them an emphasis which is false (1925a: 174). Woolf was thus aware of the transformations brought about by translation. However, although she spoke French, she seemed uninterested in what French translators did to and with her texts. As a matter of fact Woolf, who recorded most of her life in her diary, never mentions having read a translation of her works. The only evidence of a meeting with one of her translators is to be found in her diary and a letter written in 1937, which describes receiving Marguerite Yourcenar, who had questions regarding her translation of The Waves. -

Genre Analysis and Translation

GENRE ANALYSIS AND TRANSLATION - AN INVESTIGATION OF ABSTRACTS OF RESEARCH ARTICLES IN TWO LANGUAGES Ornella Inês Pezzini Universidade Federal de Santa Catarina [email protected] Abstract This study presents an analysis of abstracts from research articles found in Linguistics and Translation Studies journals. It first presents some theoretical background on discourse community and genre analysis, then it shows the analysis carried out on 18 abstracts, 6 written in English, 6 in Portuguese and 6 being their translations into English. The analysis aims at verifying whether the rhetorical patterns of organizations and the moves found in abstracts coincide with those proposed by Swales (1993) in his study of research articles and introductions. Besides, it intends to identify the verb tenses and voice preferably used in this kind of text as well as mechanisms used to indicate presence or absence of the writer in the text. The analysis reveals that the rhetorical patterns and some moves proposed by Swales are found in abstracts, though not in the same order. It also shows a high occurrence of present simple tense and active voice in all moves and passive voice only occasionally. It argues that the absence of the writer is a distinctive feature of scientific discourse and it is obtained by means of passive voice and typical statements used as resources to avoid the use of personal pronouns. Keywords: genre analysis, scientific discourse, abstracts, rhetorical patterns. Introduction The scientific community is growing considerably and no matter what country the research is developed in, English is THE language used in scientific discourse, especially in research articles published in journals through which the work becomes accessible for the international scientific community.

Rethinking Mimesis

Rethinking Mimesis: Concepts and Practices of Literary Representation Edited by Saija Isomaa, Sari Kivistö, Pirjo Lyytikäinen, Sanna Nyqvist, Merja Polvinen and Riikka Rossi Layout: Jari Käkelä This book first published 2012 Cambridge Scholars Publishing 12 Back Chapman Street, Newcastle upon Tyne, NE6 2XX, UK British Library Cataloguing in Publication Data A catalogue record for this book is available from the British Library Copyright © 2012 by Saija Isomaa, Sari Kivistö, Pirjo Lyytikäinen, Sanna Nyqvist, Merja Polvinen and Riikka Rossi and contributors All rights for this book reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior permission of the copyright owner. ISBN (10): 1-4438-3901-9, ISBN (13): 978-1-4438-3901-3 Table of Contents Introduction: Rethinking Mimesis (The Editors) I Concepts of Mimesis Aristotelian Mimesis between Theory and Practice (Stephen Halliwell) Rethinking Aristotle’s poiêtikê technê (Humberto Brito) Paul Ricœur and

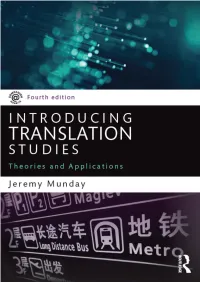

Introducing Translation Studies: Theories and Applications

Introducing Translation Studies Introducing Translation Studies remains the definitive guide to the theories and concepts that make up the field of translation studies. Providing an accessible and up-to-date overview, it has long been the essential textbook on courses worldwide. This fourth edition has been fully revised and continues to provide a balanced and detailed guide to the theoretical landscape. Each theory is applied to a wide range of languages, including Bengali, Chinese, English, French, German, Italian, Punjabi, Portuguese and Spanish. A broad spectrum of texts is analysed, including the Bible, Buddhist sutras, Beowulf, the fiction of García Márquez and Proust, European Union and UNESCO documents, a range of contemporary films, a travel brochure, a children’s cookery book and the translations of Harry Potter. Each chapter comprises an introduction outlining the translation theory or theories, illustrative texts with translations, case studies, a chapter summary and discussion points and exercises. New features in this fourth edition include: new material to keep up with developments in research and practice, including the sociology of translation, multilingual cities, translation in the digital age and specialized, audiovisual and machine translation; revised discussion points and updated figures and tables; new, in-chapter activities with links to online materials and articles to encourage independent research; an extensive updated companion website with video introductions and journal articles to accompany each chapter, online exercises, an interactive timeline, weblinks, and PowerPoint slides for teacher support. This is a practical, user-friendly textbook ideal for students and researchers on courses in Translation and Translation Studies.

Linguaculture 1, 2015

LINGUACULTURE 1, 2015 THE MANY CONTEXTS OF TRANSLATION (STUDIES) RODICA DIMITRIU Alexandru Ioan Cuza University of Iaşi Abstract This article examines the ways in which, in just a couple of decades, and in view of the interdisciplinary nature of Translation Studies, the key notion of context has become increasingly broader and diversified within this area of research, allowing for complex analyses of the translators’ activities and decisions, of translation processes and, ultimately, of what accounts for the meaning(s) of a translated text. Consequently, some (brief) incursions are made into a number of (main) directions of the discipline and the related kinds of contexts they prioritized in investigating translation both as process and product. In the second section of this introductory article, the issue of context is particularized through references to the contributions in this special volume, which add new layers of meaning to context, touching upon further perspectives from which this complex notion could be approached. Keywords: context, co-text, linguistic directions in Translation Studies, pragmatic directions, socio-cultural directions In the last couple of decades, “context” has become an increasingly important parameter according to which research is carried out in almost every field of knowledge. In the linguistic and pragmatic as well as in the cultural and literary studies it has turned into a main area of investigation, which is tightly related to the creation and interpretation of meaning(s). However, this complex notion has been taken into account even by the more traditional (linguistic and literary) philosophical and philological discourse, whenever processes of retrieving meaning at various levels and/or of providing various forms of textual interpretation were involved.

Translation Issues in Modern Chinese Literature: Viewpoint, Fate and Metaphor in Xia Shang's "The Finger-Guessing Game"

University of Massachusetts Amherst ScholarWorks@UMass Amherst Masters Theses Dissertations and Theses October 2019 Translation Issues in Modern Chinese Literature: Viewpoint, Fate and Metaphor in Xia Shang's "The Finger-Guessing Game" Jonathan Heinrichs University of Massachusetts Amherst Follow this and additional works at: https://scholarworks.umass.edu/masters_theses_2 Part of the Chinese Studies Commons Recommended Citation Heinrichs, Jonathan, "Translation Issues in Modern Chinese Literature: Viewpoint, Fate and Metaphor in Xia Shang's "The Finger-Guessing Game"" (2019). Masters Theses. 834. https://doi.org/10.7275/15178098 https://scholarworks.umass.edu/masters_theses_2/834 This Open Access Thesis is brought to you for free and open access by the Dissertations and Theses at ScholarWorks@UMass Amherst. It has been accepted for inclusion in Masters Theses by an authorized administrator of ScholarWorks@UMass Amherst. For more information, please contact [email protected]. Translation Issues in Modern Chinese Fiction: Viewpoint, Fate and Metaphor in Xia Shang’s The Finger-Guessing Game A Thesis Presented by JONATHAN M. HEINRICHS Submitted to the Graduate School of the University of Massachusetts in partial fulfillment of the requirements for the degree of Master of Arts September 2019 Chinese © Copyright by Jonathan M. Heinrichs 2019 All rights reserved Translation Issues in Modern Chinese Fiction: Viewpoint, Fate and Metaphor in Xia Shang’s The Finger-Guessing Game A Thesis Presented by JONATHAN M. HEINRICHS Approved as