Sparse Discriminant Analysis

Recommended publications

AMSTATNEWS: The Membership Magazine of the American Statistical Association

May 2021 • Issue #527. AMSTATNEWS, The Membership Magazine of the American Statistical Association, http://magazine.amstat.org. 2021 COPSS Award Winners. Also: ASA, International Community Continue to Decry Georgiou Persecution; Birth of an ASA Outreach Group: The Origins of JEDI.

Executive Director: Ron Wasserstein; Associate Executive Director and Director of Operations: Stephen Porzio; Senior Advisor for Statistics Communication and Media Innovation: Regina Nuzzo; Director of Science Policy: Steve Pierson; Director of Strategic Initiatives and Outreach: Donna LaLonde; Director of Education: Rebecca Nichols; Managing Editor: Megan Murphy; Editor and Content Strategist: Val Nirala; Advertising Manager: Joyce Narine; Production Coordinators/Graphic Designers: Olivia Brown, Megan Ruyle; Contributing Staff Members: Kim Gilliam.

Features: President’s Corner; ASA, International Community Continue to Decry Georgiou Persecution; What a Year! Practical Significance Celebrates Resilient Class of 2021; Significance Launches Data Economy Series with April Issue; CHANCE Highlights: Spring Issue Features Economic Impact of COVID-19, Kullback’s Career, Sharing Data; Forget March Madness! Students Test Probability Skills with March Randomness; My ASA Story: James Cochran; StatFest Back for 21st Year in 2021; Birth of an ASA Outreach Group: The Origins of JEDI; 2021 COPSS Award Winners; A Story of COVID-19, Mentoring, and West Virginia.

Amstat News welcomes news items and letters from readers on matters of interest to the association and the profession.

Thesis Is in Two Parts

Statistical Learning with Applications in Biology. Nielsen, Agnes Martine. Publication date: 2019. Document version: Publisher's PDF, also known as Version of record. Downloaded from orbit.dtu.dk on Oct 03, 2021. Citation (APA): Nielsen, A. M. (2019). Statistical Learning with Applications in Biology. Technical University of Denmark. DTU Compute PHD-2018 Vol. 488.

Agnes Martine Nielsen, Kongens Lyngby 2018, PhD-2018-488. Technical University of Denmark, Department of Applied Mathematics and Computer Science, Richard Petersens Plads, building 324, 2800 Kongens Lyngby, Denmark. Phone +45 4525 3031. www.compute.dtu.dk

Summary (English): Statistical methods are often motivated by real problems. We consider methods inspired by problems in biology and medicine. The thesis is in two parts.

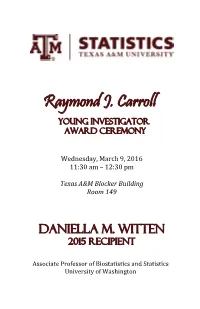

Raymond J. Carroll

Raymond J. Carroll Young Investigator Award Ceremony, Wednesday, March 9, 2016, 11:30 am – 12:30 pm, Texas A&M Blocker Building, Room 149. 2015 Recipient: Daniela M. Witten, Associate Professor of Biostatistics and Statistics, University of Washington.

The Raymond J. Carroll Young Investigator Award was established to honor Dr. Raymond J. Carroll, Distinguished Professor of Statistics, Nutrition and Toxicology, for his fundamental contributions to many areas of statistical methodology and practice, such as measurement error models, nonparametric and semiparametric regression, and nutritional and genetic epidemiology. Carroll has been instrumental in mentoring and helping young researchers, including his own students and postdoctoral trainees, as well as others in the statistical community. Dr. Carroll is highly regarded as one of the world’s foremost experts on problems of measurement error, functional data analysis, semiparametric methods and, more generally, statistical regression modeling. His work, characterized by a combination of deep theoretical effort, innovative methodological development and close contact with science, has had an impact on a broad variety of fields, including marine biology, laboratory assay methods, econometrics, epidemiology and molecular biology.

In 2005, Raymond Carroll became the first statistician ever to receive the prestigious National Cancer Institute Method to Extend Research in Time (MERIT) Award for his pioneering efforts in nutritional epidemiology and biology and the resulting advances in human health. Fewer than five percent of all National Institutes of Health-funded investigators are selected for this highly selective award, which includes up to 10 years of grant support. The Carroll Young Investigator Award is given biennially, in odd-numbered years, to a statistician who has made important contributions to the area of statistics.

Trevor John Hastie 1040 Campus Drive Stanford, CA 94305 Home Phone&FAX: (650) 326-0854

Department of Statistics, Sequoia Hall, Stanford University, Stanford, CA 94305. Phone: (650) 725-2231; Fax: 650/725-8977. Born: June 27, 1953, South Africa. Married, two children. U.S. citizen, S.A. citizen. Updated: June 22, 2021.

Present Position: 2013– John A. Overdeck Professor of Mathematical Sciences, Stanford University. 2006–2009 Chair, Department of Statistics, Stanford University. 2005–2006 Associate Chair, Department of Statistics, Stanford University. 1999– Professor, Statistics and Biostatistics Departments, Stanford University; founder and co-director of the Statistics Department industrial affiliates program. 1994–1998 Associate Professor (tenured), Statistics and Biostatistics Departments, Stanford University.

Research interests include nonparametric regression models, computer-intensive data analysis techniques, statistical computing and graphics, and statistical consulting. Currently working on adaptive modeling and prediction procedures, signal and image modeling, and problems in bioinformatics with many more variables than observations.

Education: 1984 Stanford University, Stanford, California – Ph.D., Department of Statistics (Werner Stuetzle, advisor). 1979 University of Cape Town, Cape Town, South Africa – First Class Masters Degree in Statistics (June Juritz, advisor). 1976 Rhodes University, Grahamstown, South Africa – Bachelor of Science Honors Degree in Statistics. 1975 Rhodes University, Grahamstown, South Africa – Bachelor of Science Degree (cum laude) in Statistics, Computer Science and Mathematics.

Awards and Honors: 2020 “Statistician of the year” award, Chicago chapter ASA. 2020 Breiman Award (senior). 2019 Recipient of the Sigillum Magnum, University of Bologna, Italy. 2019 Elected to the Royal Netherlands Academy of Arts and Sciences. 2019 Wald Lecturer, JSM Denver.

Big Data Fazekas 2020.pdf

Using Big Data for social science research. Mihály Fazekas, School of Public Policy, Central European University. Winter semester 2019–20 (2 credits). Class times: Fridays on a biweekly basis (6 blocks). Office hours: by appointment (Quellenstrasse building). Teaching Assistant: Daniel Kovarek. Version: 9/12/2019.

Introduction: The course is an introduction to state-of-the-art methods for using Big Data in social science research. It is a hands-on course that requires students to bring their own research problems and ideas for independent research. The course will review three main topics that make Big Data research unique: 1. new and emerging data sources, such as social media or government administrative data; 2. innovative data collection techniques, such as web scraping; and 3. data analysis techniques typical of Big Data analysis, such as machine learning. Big Data means that the speed and frequency with which data are created are increasing at an accelerating pace, covering virtually the full spectrum of social life in ever greater detail. Moreover, much of these data are increasingly readily available, making real-time data analysis feasible. During the course, students will become acquainted with different concepts, methodological approaches, and empirical results revolving around the use of Big Data in the social sciences. As this domain of knowledge is rapidly evolving and already vast, the course can only impart basic literacy for understanding Big Data and its novel uses. Students will be encouraged to use the acquired skills in their own research throughout the course and to continue engaging with new methods.

Learning outcomes: Students will be acquainted with basic concepts and methods of Big Data and their use for social science research.

Ali Shojaie, Ph.D.

University of Washington, Department of Biostatistics, 334 Hans Rosling Center, 3980 15th Avenue NE, Box 351617, Seattle, WA 98195. Phone: (206) 616-5323. Homepage: faculty.washington.edu/ashojaie/

Education:
• Iran University of Science & Technology, Tehran, Iran, B.Sc. in Industrial and Systems Engineering, 09/1993-02/1998
• Amirkabir University of Technology (Tehran Polytechnic), Tehran, Iran, M.Sc. in Industrial Engineering, 09/1998-02/2001
• Michigan State University, East Lansing, MI, M.S. in Statistics, 01/2003-05/2005
• University of Michigan, Ann Arbor, MI, M.S. in Human Genetics, 09/2006-12/2009
• University of Michigan, Ann Arbor, MI, M.S. in Applied Mathematics, 09/2007-04/2010
• University of Michigan, Ann Arbor, MI, Ph.D. in Statistics, 09/2005-04/2010

Professional Positions:
• Postdoctoral Research Fellow, Dept. of Statistics, University of Michigan, 05/2010-06/2011
• Visiting Scholar, Statistical & Applied Mathematical Sciences Institute (SAMSI), 09/2010-05/2010
• Professor of Biostatistics and Adjunct Professor of Statistics, University of Washington, 07/2020-present
  – Associate Professor of Biostatistics and Adjunct Associate Professor of Statistics, University of Washington, 07/2016-06/2020
  – Assistant Professor of Biostatistics and Adjunct Assistant Professor of Statistics, University of Washington, 07/2011-06/2016
• Associate Chair for Strategic Research Affairs, Department of Biostatistics, University of Washington, 09/2020-present
• Founding Director, Summer Institute for Statistics in Big Data (SISBID), 05/2014-present
• Affiliate Member, Center for Statistics in Social Sciences (CSSS), University of Washington, 03/2012-present
• Affiliate Member, Biostatistics and Biomathematics Program, Fred Hutchinson Cancer Research Center (FHCRC), 09/2012-present
• Affiliate Faculty, eSciences Institute, University of Washington, 03/2014-present
• Affiliate Member, Algorithmic Foundations of Data Science (ADSI) Institute, University of Washington, 2018-present

Selective Inference for Hierarchical Clustering (arXiv:2012.02936v1)

Lucy L. Gao (Department of Statistics and Actuarial Science, University of Waterloo; corresponding author), Jacob Bien (Department of Data Sciences and Operations, University of Southern California), and Daniela Witten (Departments of Statistics and Biostatistics, University of Washington). September 23, 2021. arXiv:2012.02936v2 [stat.ME], 22 Sep 2021.

Abstract: Classical tests for a difference in means control the type I error rate when the groups are defined a priori. However, when the groups are instead defined via clustering, then applying a classical test yields an extremely inflated type I error rate. Notably, this problem persists even if two separate and independent data sets are used to define the groups and to test for a difference in their means. To address this problem, in this paper, we propose a selective inference approach to test for a difference in means between two clusters. Our procedure controls the selective type I error rate by accounting for the fact that the choice of null hypothesis was made based on the data. We describe how to efficiently compute exact p-values for clusters obtained using agglomerative hierarchical clustering with many commonly used linkages. We apply our method to simulated data and to single-cell RNA-sequencing data.

Keywords: post-selection inference, hypothesis testing, difference in means, type I error

1 Introduction

Testing for a difference in means between groups is fundamental to answering research questions across virtually every scientific area. Classical tests control the type I error rate when the groups are defined a priori.
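
A small simulation makes the abstract's central claim concrete. Testing for a difference in means between groups that were found by clustering the same data rejects a true null far more often than the nominal level. The sketch below is a minimal illustration under stated assumptions. It is not the authors' selective inference procedure, because it does not condition on the clustering event. It assumes one-dimensional data drawn from a single N(0, 1) population, so the two groups never differ in their true mean. It forms two clusters by agglomerative clustering with Ward's linkage and compares them with a two-sample z-test that treats σ = 1 as known. The function names `ward_two_clusters`, `z_test_pvalue`, and `type_i_error` are hypothetical, as are the settings n = 20, 400 replications, α = 0.05, and seed 0.

```python
import random
from statistics import NormalDist, fmean


def ward_two_clusters(x):
    """Agglomeratively cluster 1-D points with Ward's linkage until two clusters remain."""
    # Each cluster is stored as [size, centroid, member values].
    clusters = [[1, v, [v]] for v in x]
    while len(clusters) > 2:
        best_cost, best_i, best_j = float("inf"), -1, -1
        for i in range(len(clusters)):
            ni, ci, _ = clusters[i]
            for j in range(i + 1, len(clusters)):
                nj, cj, _ = clusters[j]
                # Ward's criterion: increase in within-cluster sum of squares after merging.
                cost = ni * nj / (ni + nj) * (ci - cj) ** 2
                if cost < best_cost:
                    best_cost, best_i, best_j = cost, i, j
        ni, ci, mi = clusters[best_i]
        nj, cj, mj = clusters[best_j]
        clusters[best_i] = [ni + nj, (ni * ci + nj * cj) / (ni + nj), mi + mj]
        del clusters[best_j]  # best_j > best_i, so best_i's position is unaffected
    return clusters[0][2], clusters[1][2]


def z_test_pvalue(a, b, sigma=1.0):
    """Two-sided p-value for H0: equal means, assuming a known common sd sigma."""
    se = sigma * (1 / len(a) + 1 / len(b)) ** 0.5
    z = (fmean(a) - fmean(b)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def type_i_error(n=20, reps=400, alpha=0.05, seed=0):
    """Empirical rejection rates under a global null for two ways of forming groups."""
    rng = random.Random(seed)
    clustered = 0
    fixed = 0
    for _ in range(reps):
        # All points share one mean, so the null of equal group means is always true.
        x = [rng.gauss(0.0, 1.0) for _ in range(n)]
        # Groups chosen by clustering x, then tested on the same x ("double dipping").
        g1, g2 = ward_two_clusters(x)
        clustered += z_test_pvalue(g1, g2) < alpha
        # Groups fixed a priori (first half vs. second half), independent of the values.
        fixed += z_test_pvalue(x[: n // 2], x[n // 2 :]) < alpha
    return clustered / reps, fixed / reps


if __name__ == "__main__":
    clustered_rate, fixed_rate = type_i_error()
    print(f"clustered groups: {clustered_rate:.3f}  a priori groups: {fixed_rate:.3f}")
```

Running the script prints the two empirical rejection rates. Under these assumptions, the clustering-defined comparison should reject far more often than 5% of the time. The a priori split should stay near 0.05, up to Monte Carlo error. Ward's linkage is used here only because it is easy to implement without external libraries. The abstract's claim concerns clustering-defined groups in general, not one particular linkage.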

Enhancement of Multimodal Traffic Safety in High-Quality Transit Areas

Project 1920, February 2021. Enhancement of Multimodal Traffic Safety in High-Quality Transit Areas. Yongping Zhang, PhD, P.E.; Wen Cheng, PhD, P.E.; Xudong Jia, PhD, P.E. CSU Transportation Consortium, transweb.sjsu.edu/csutc.

Mineta Transportation Institute. MTI Founder: Hon. Norman Y. Mineta. MTI Board of Trustees: Founder, Honorable Norman Mineta*, Secretary (ret.), US Department of Transportation; Grace Crunican**, Owner, Crunican LLC; Diane Woodend Jones, Principal & Chair of Board, Lea + Elliott, Inc.; Takayoshi Oshima, Chairman & CEO, Allied Telesis, Inc.; Chair, Abbas Mohaddes, President & COO; Donna DeMartino, Managing Director, Los Angeles-San Diego-San Luis Obispo Rail Corridor Agency; David S. Kim*, Secretary, California State Transportation Agency (CALSTA); Paul Skoutelas*, President & CEO, American Public Transportation Association.

Founded in 1991, the Mineta Transportation Institute (MTI), an organized research and training unit in partnership with the Lucas College and Graduate School of Business at San José State University (SJSU), increases mobility for all by improving the safety, efficiency, accessibility, and convenience of our nation’s transportation system. Through research, education, workforce development, and technology transfer, we help create a connected world. MTI leads the Mineta Consortium for Transportation Mobility (MCTM), funded by the U.S. Department of Transportation, and the California State University Transportation Consortium (CSUTC), funded by the State of California through Senate Bill 1. MTI focuses on three primary responsibilities:

An Introduction to Statistical Learning

1 Introduction. CS 189/289A [Spring 2017] Machine Learning. Jonathan Shewchuk. http://www.cs.berkeley.edu/~jrs/189. TAs: Daylen Yang, Ting-Chun Wang, Moulos Vrettos, Mostafa Rohaninejad, Michael Zhang, Anurag Ajay, Alvin Wan, Soroush Nasiriany, Garrett Thomas, Noah Golmant, Adam Villaflor, Raul Puri, Alex Francis.

Questions: Please use Piazza, not email. [Piazza has an option for private questions, but please use public for most questions so other people can benefit.] For personal matters only, use email.

Discussion sections: 12 now; more will be added. Attend any section. If the room is too full, please go to another one. [However, to get into the course, you have to pick some section with space. Doesn’t matter which one!] No sections this week.

[Enrollment: We’re trying to raise it to 540. After enough students drop, it’s possible that everyone might get in. Concurrent enrollment students have the lowest priority; non-CS grad students the second-lowest.]

[Textbooks: Available free online. Linked from class web page.] An Introduction to Statistical Learning with Applications in R, by Gareth James, Daniela Witten, Trevor Hastie and Robert Tibshirani (Springer Texts in Statistics), and The Elements of Statistical Learning, by Hastie, Tibshirani and Friedman (Springer Series in Statistics). An Introduction to Statistical Learning provides an accessible overview of the field of statistical learning, an essential toolset for making sense of the vast and complex data sets that have emerged in fields ranging from biology to finance to marketing to astrophysics in the past twenty years.

STAT 639 Data Mining and Analysis

Course Description: This course is an introduction to concepts, methods, and practices in statistical data mining. We will provide a broad overview of topics related to supervised and unsupervised learning. See the tentative schedule on the last page for details. Emphasis will be placed on applied data analysis.

Learning Objectives: Students will learn how and when to apply statistical learning techniques, their comparative strengths and weaknesses, and how to critically evaluate the performance of learning algorithms. Students who successfully complete this course should be able to apply basic statistical learning methods to build predictive models or perform exploratory analysis, and make sense of their findings.

Prerequisites/Corequisites: Familiarity with the R programming language and knowledge of basic multivariate calculus, statistical inference, and linear algebra are expected. Students should be comfortable with the following concepts: probability distribution functions, expectations, conditional distributions, likelihood functions, random samples, estimators, and linear regression models.

Suggested Textbooks:
• (ISLR) An Introduction to Statistical Learning with Applications in R by Gareth James, Daniela Witten, Trevor Hastie and Robert Tibshirani. Available online: http://www-bcf.usc.edu/~gareth/ISL/index.html
• (PRML) Pattern Recognition and Machine Learning by Christopher M. Bishop. Available online: https://www.microsoft.com/en-us/research/uploads/prod/2006/01/Bishop-Pattern-Recognition-and-Machine-Learning-2006.pdf
• (DMA) Data Mining and Analysis: Fundamental Concepts and Algorithms by Mohammed J. Zaki and Wagner Meira, Jr. Available online: http://www.dataminingbook.info/pmwiki.php
• (ESL) The Elements of Statistical Learning: Data Mining, Inference, and Prediction by Trevor Hastie, Robert Tibshirani and Jerome Friedman.

AMSTATNEWS: The Membership Magazine of the American Statistical Association

October 2020 • Issue #520. AMSTATNEWS, The Membership Magazine of the American Statistical Association, http://magazine.amstat.org. JSM 2020: The Highlights. Also: ASA Fellows Analysis; To Get a PhD or Not to Get a PhD?

National Center for Science and Engineering Statistics (NCSES): 1 of 13 federal statistical agencies. Measures U.S. science and technological performance; identify knowledge gaps; inform policy; sustain and improve U.S. statistical data. NCSES is the nation’s premier source of data on the science and engineering workforce; research and development; U.S. competitiveness in science, engineering, technology, and research and development; and the condition and progress of U.S. STEM education. As a principal federal statistical agency, NCSES serves as a clearinghouse for the collection, interpretation, analysis, and dissemination of objective data on the science and engineering enterprise. www.ncses.nsf.gov, 2415 Eisenhower Avenue, W14200, Alexandria, VA 22314 | 703.292.8780

Executive Director: Ron Wasserstein; Associate Executive Director and Director of Operations: Stephen Porzio; Senior Advisor for Statistics Communication and Media Innovation: Regina Nuzzo; Director of Science Policy: Steve Pierson; Director of Strategic Initiatives and Outreach

Features: President’s Corner; Highlights of the July 28–30, 2020, ASA Board of Directors Meeting; Technometrics Calls for Editor Applications and Nominations; Multi-Stakeholder Alliances: A Must for Equity in Higher

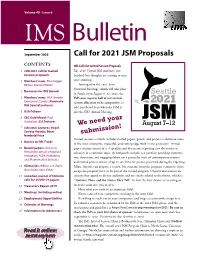

Call for 2021 JSM Proposals

Volume 49 • Issue 6, IMS Bulletin, September 2020.

Contents: JSM 2021 call for Invited Session proposals; Members’ news: Rina Foygel Barber, Daniela Witten; Nominate for IMS Awards; Members’ news: ASA Awards, Emmanuel Candès; Nominate IMS Special Lecturers; ASA Fellows; SSC Gold Medal: Paul Gustafson; SSC lectures; Schramm Lectures; Royal Society Awards; Ethel Newbold Prize; Donors to IMS Funds; Recent papers: Annals of Probability, Annals of Applied Probability, ALEA, Probability and Mathematical Statistics; Obituaries: Willem van Zwet, Don Gaver, Yossi Yahav.

IMS Calls for Invited Session Proposals. The 2020 Virtual JSM may have just finished, but thoughts are turning to next year’s meeting. Starting with the 2021 Joint Statistical Meetings, which will take place in Seattle from August 7–12, 2021, the IMS now requires half of our invited session allocation to be competitive in odd-numbered years, when the JSM is also the IMS Annual Meeting. We need your submission! Invited sessions (which include invited papers, panels, and posters) showcase some of the most innovative, impactful, and cutting-edge work in our profession. Invited paper sessions consist of 2–6 speakers and discussants reporting new discoveries or advances in a common topic; invited panels include 3–6 panelists providing commentary, discussion, and engaging debate on a particular topic of contemporary interest; and invited posters consist of up to 40 electronic posters presented during the Opening Mixer. Anyone can propose a session, but someone from the program committee must accept the proposal for it to be part of the invited program.