Iterative Goal-Based Voting

Recommended publications

Critical Strategies Under Approval Voting: Who Gets Ruled in and Ruled Out

Steven J. Brams (Department of Politics, New York University, New York, NY 10003, USA) and M. Remzi Sanver (Department of Economics, Istanbul Bilgi University, 80310, Kustepe, Istanbul, Turkey). January 2005. Abstract: We introduce the notion of a “critical strategy profile” under approval voting (AV), which facilitates the identification of all possible outcomes that can occur under AV. Included among AV outcomes are those given by scoring rules, single transferable vote, the majoritarian compromise, Condorcet systems, and others as well. Under each of these systems, a Condorcet winner may be upset through manipulation by individual voters or coalitions of voters, whereas AV ensures the election of a Condorcet winner as a strong Nash equilibrium wherein voters use sincere strategies. To be sure, AV may also elect Condorcet losers and other lesser candidates, sometimes in equilibrium. This multiplicity of (equilibrium) outcomes is the product of a social-choice framework that is more general than the standard preference-based one. From a normative perspective, we argue that voter judgments about candidate acceptability should take precedence over the usual social-choice criteria, such as electing a Condorcet or Borda winner. Keywords: approval voting; elections; Condorcet winner/loser; voting games; Nash equilibrium. Acknowledgments: We thank Eyal Baharad, Dan S. Felsenthal, Peter C. Fishburn, Shmuel Nitzan, Richard F. Potthoff, and Ismail Saglam for valuable suggestions. 1. Introduction. Our thesis in this paper is that several outcomes of single-winner elections may be socially acceptable, depending on voters’ individual views on the acceptability of the candidates.

Brake & Branscomb

Colorado Secretary of State 8/3/2021 Election Rulemaking. These proposed edits to the SOS draft are contributed by Emily Brake (R) and Harvie Branscomb (D), and approved by Frank Atwood, Chair of the Approval Voting Party. This document may be found on http://electionquality.com (version 1.3, 8/10/2021 15:50). Only portions of the recent draft rule text are included here, with inline edits and comments highlighted as follows: comments are highlighted in yellow; INSERTS ARE IN GREEN AND LARGE FONT; deletions are in red and include strikeout. Other indications of strikeout are from the original tabulation process. 2.13.2 In accordance with section 1-2-605(7), C.R.S., no later than 90 days following a General Election, the county clerk in each county must SECRETARY OF STATE WILL PROPOSE cancelLATION OF the registrations of electors TO EACH COUNTY CLERK: [Comment: SOS taking responsibility from counties will lead to less verification and less resilience. The SOS office has not been subject to watcher access but will be as various steps of the conduct of election are taken over.] (a) Whose records have been marked “Inactive – returned mail”, “Inactive – undeliverable ballot”, or “Inactive – NCOA”; AND (b) Who have been mailed a confirmation card; and (c) Who have since THEREAFTER failed to vote in two consecutive general elections. New Rule 2.13.3, amendments to current Rule 2.13.3, repeal of 2.13.5, and necessary renumbering: VOTERS WHO REQUEST AN EMERGENCY BALLOT BE SENT TO THEM ELECTRONICALLY MUST BE DIRECTED BY THE COUNTY CLERK TO THE ONLINE BALLOT DELIVERY SYSTEM MAINTAINED BY THE SECRETARY OF STATE TO RECEIVE THEIR BALLOT ELECTRONICALLY.

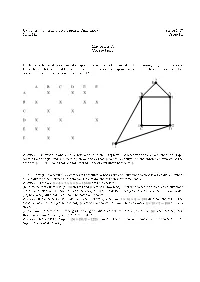

MGF 1107 FINAL EXAM REVIEW CHAPTER 9 1. Amy (A), Betsy

MGF 1107 FINAL EXAM REVIEW CHAPTER 9

1. Amy (A), Betsy (B), Carla (C), Doris (D), and Emilia (E) are candidates for an open Student Government seat. There are 110 voters with the preference lists below.

| Number of voters | 36 | 24 | 20 | 18 | 8 | 4 |
|---|---|---|---|---|---|---|
| First choice | A | E | D | B | C | C |
| Second choice | B | C | E | D | E | D |
| Third choice | C | B | C | C | B | B |
| Fourth choice | D | D | B | E | D | E |
| Fifth choice | E | A | A | A | A | A |

Who wins the election if the method used is: a) plurality? b) plurality with runoff? c) sequential pairwise voting with agenda ABEDC? d) Hare system? e) Borda count?

2. What is the minimum number of votes needed for a majority if the number of votes cast is: a) 120? b) 141?

3. Consider the following preference lists:

| Number of voters | 1 | 1 | 1 |
|---|---|---|---|
| First choice | A | C | B |
| Second choice | B | A | D |
| Third choice | D | B | C |
| Fourth choice | C | D | A |

If sequential pairwise voting and the agenda BACD is used, then D wins the election. Suppose the middle voter changes his mind and reverses his ranking of A and B. If the other two voters have unchanged preferences, B now wins using the same agenda BACD. This example shows that sequential pairwise voting fails to satisfy what desirable property of a voting system?

4. Consider the following preference lists held by 5 voters:

| Number of voters | 2 | 2 | 1 |
|---|---|---|---|
| First choice | A | B | C |
| Second choice | C | C | B |
| Third choice | B | A | A |

First, note that if the plurality with runoff method is used, B wins. Next, note that C defeats both A and B in head-to-head matchups. This example shows that the plurality with runoff method fails to satisfy which desirable property of a voting system?
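As a cross-check on questions 1 and 2, the preference lists in question 1 can be read as six groups of 36, 24, 20, 18, 8 and 4 voters (110 in total), each ranking the five candidates from first to last. The Python sketch below tallies plurality and Borda scores under that reading and computes the majority threshold. The column reading, the 4-3-2-1-0 Borda weights, and all names in the code are assumptions made for illustration; they are not part of the exam.

```python
from collections import Counter

# Assumed reading of the table: (number of voters, ranking from first to last).
PROFILE = [
    (36, "ABCDE"),
    (24, "ECBDA"),
    (20, "DECBA"),
    (18, "BDCEA"),
    (8, "CEBDA"),
    (4, "CDBEA"),
]


def plurality_scores(profile):
    """Count first-place votes only."""
    scores = Counter()
    for count, ranking in profile:
        scores[ranking[0]] += count
    return scores


def borda_scores(profile):
    """Give m-1 points for first place down to 0 for last (assumed weights)."""
    scores = Counter()
    for count, ranking in profile:
        top = len(ranking) - 1
        for position, candidate in enumerate(ranking):
            scores[candidate] += count * (top - position)
    return scores


def majority_threshold(votes_cast):
    """Smallest whole number of votes strictly greater than half."""
    return votes_cast // 2 + 1


if __name__ == "__main__":
    print(dict(plurality_scores(PROFILE)))
    print(dict(borda_scores(PROFILE)))
    print(majority_threshold(120), majority_threshold(141))
```

Under these assumptions A leads on first-place votes with 36, the Borda totals are A 144, B 272, C 268, D 214 and E 202, and the majority thresholds are 61 and 71.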

On the Distortion of Voting with Multiple Representative Candidates

The Thirty-Second AAAI Conference on Artificial Intelligence (AAAI-18). Yu Cheng (Duke University), Shaddin Dughmi (University of Southern California), David Kempe (University of Southern California). Abstract: We study positional voting rules when candidates and voters are embedded in a common metric space, and cardinal preferences are naturally given by distances in the metric space. In a positional voting rule, each candidate receives a score from each ballot based on the ballot’s rank order; the candidate with the highest total score wins the election. The cost of a candidate is his sum of distances to all voters, and the distortion of an election is the ratio between the cost of the elected candidate and the cost of the optimum candidate. We consider the case when candidates are representative of the population, in the sense that they are drawn i.i.d. from the population of the voters, and analyze the expected distortion of positional voting rules. From the introduction: ... voters and the chosen candidate in a suitable metric space (Anshelevich 2016; Anshelevich, Bhardwaj, and Postl 2015; Anshelevich and Postl 2016; Goel, Krishnaswamy, and Munagala 2017). The underlying assumption is that the closer a candidate is to a voter, the more similar their positions on key questions are. Because proximity implies that the voter would benefit from the candidate’s election, voters will rank candidates by increasing distance, a model known as single-peaked preferences (Black 1948; Downs 1957; Black 1958; Moulin 1980; Merrill and Grofman 1999; Barberà, Gul, and Stacchetti 1993; Richards, Richards, and McKay 1998; Barberà 2001). Even in the absence of strategic voting, voting systems ...
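The definitions of positional scoring, cost, and distortion in the abstract can be illustrated with a small Python sketch. It assumes the simplest metric space, points on a line with absolute difference as distance, and a made-up electorate; the function names, the tie-breaking rule (lowest candidate index wins), and the example positions are all hypothetical and are not taken from the paper.

```python
def rank_by_distance(voter, candidates):
    """Return candidate indices ordered from nearest to farthest (line metric)."""
    return sorted(range(len(candidates)), key=lambda c: abs(candidates[c] - voter))


def positional_winner(voters, candidates, weights):
    """Score each ballot position with weights[position]; highest total wins.

    Ties are broken in favour of the lowest candidate index (an assumption).
    """
    scores = [0] * len(candidates)
    for voter in voters:
        for position, c in enumerate(rank_by_distance(voter, candidates)):
            scores[c] += weights[position]
    return max(range(len(candidates)), key=lambda c: scores[c])


def cost(c, voters, candidates):
    """Sum of distances from candidate c to all voters."""
    return sum(abs(candidates[c] - voter) for voter in voters)


def distortion(voters, candidates, weights):
    """Cost of the elected candidate divided by the cost of the best one.

    Assumes the best candidate has nonzero cost.
    """
    winner = positional_winner(voters, candidates, weights)
    best = min(cost(c, voters, candidates) for c in range(len(candidates)))
    return cost(winner, voters, candidates) / best


# Hypothetical electorate on a line: three voters at 0, two at 4, two at 5.
VOTERS = [0, 0, 0, 4, 4, 5, 5]
CANDIDATES = [0, 4, 5]

if __name__ == "__main__":
    print(distortion(VOTERS, CANDIDATES, [1, 0, 0]))  # plurality weights
    print(distortion(VOTERS, CANDIDATES, [2, 1, 0]))  # Borda weights
```

In this toy instance plurality elects the candidate at 0, whose cost is 18 against an optimum of 14, so the distortion is 18/14; Borda elects the candidate at 4, which is the optimum, so the distortion is 1.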

Approval Voting Under Dichotomous Preferences: a Catalogue of Characterizations

Draft, June 25, 2021. Florian Brandl (University of Bonn) and Dominik Peters (Harvard University). Approval voting allows every voter to cast a ballot of approved alternatives and chooses the alternatives with the largest number of approvals. Due to its simplicity and superior theoretical properties it is a serious contender for use in real-world elections. We support this claim by giving eight characterizations of approval voting. All our results involve the reinforcement axiom, which requires choices to be consistent across different electorates. In addition, we consider strategyproofness, consistency with majority opinions, consistency under cloning alternatives, and invariance under removing inferior alternatives. We prove our results by reducing them to a single base theorem, for which we give a simple and intuitive proof. 1 Introduction. Around the world, when electing a leader or a representative, plurality is by far the most common voting system: each voter casts a vote for a single candidate, and the candidate with the most votes is elected. In pioneering work, Brams and Fishburn (1983) proposed an alternative system: approval voting. Here, each voter may cast votes for an arbitrary number of candidates, and can thus choose whether to approve or disapprove of each candidate. The election is won by the candidate who is approved by the highest number of voters. Approval voting allows voters to be more expressive of their preferences, and it can avoid problems such as vote splitting, which are endemic to plurality voting. Together with its elegance and simplicity, this has made approval voting a favorite among voting theorists (Laslier, 2011), and has led to extensive research literature (Laslier and Sanver, 2010).
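The contrast between plurality and approval voting drawn in this excerpt, including the vote-splitting problem, can be sketched in a few lines of Python. The electorate below is invented for illustration, and the function names are hypothetical; both functions return every candidate tied for the top score, matching the excerpt's wording that approval voting "chooses the alternatives with the largest number of approvals".

```python
from collections import Counter

# Hypothetical electorate: (number of voters, favourite, set of approved candidates).
ELECTORATE = [
    (3, "A", {"A", "B"}),
    (2, "B", {"B"}),
    (4, "C", {"C"}),
]


def plurality_winners(electorate):
    """Each voter votes only for their favourite; return all top scorers."""
    tally = Counter()
    for count, favourite, _approved in electorate:
        tally[favourite] += count
    best = max(tally.values())
    return sorted(c for c, n in tally.items() if n == best)


def approval_winners(electorate):
    """Each voter approves a set of candidates; return all top scorers."""
    tally = Counter()
    for count, _favourite, approved in electorate:
        for candidate in approved:
            tally[candidate] += count
    best = max(tally.values())
    return sorted(c for c, n in tally.items() if n == best)


if __name__ == "__main__":
    print(plurality_winners(ELECTORATE))
    print(approval_winners(ELECTORATE))
```

Here A and B split five first-choice votes, so plurality elects C with four, while B collects five approvals and wins under approval voting.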

2016 US Presidential Election Herrade Igersheim, François Durand, Aaron Hamlin, Jean-François Laslier

Herrade Igersheim, François Durand, Aaron Hamlin, Jean-François Laslier. Comparing Voting Methods: 2016 US Presidential Election. 2018. HAL Id: halshs-01972097, https://halshs.archives-ouvertes.fr/halshs-01972097. Preprint submitted on 7 Jan 2019. Working Paper N° 2018 – 55, Paris-Jourdan Sciences Economiques. JEL Codes: D72, C93. Keywords: approval voting, range voting, instant runoff, strategic voting, US Presidential election. November 20, 2018. Abstract: Before the 2016 US presidential elections, more than 2,000 participants took part in a survey in which they were asked their opinions about the candidates, and were also asked to vote according to different alternative voting rules, in addition to plurality: approval voting, range voting, and instant runoff voting.

The Expanding Approvals Rule: Improving Proportional Representation and Monotonicity

Working Paper. Haris Aziz · Barton E. Lee (Data61, CSIRO and UNSW, Sydney 2052, Australia; Tel.: +61-2-8306 0490, Fax: +61-2-8306 0405). arXiv:1708.07580v2 [cs.GT] 4 Jun 2018. Abstract: Proportional representation (PR) is often discussed in voting settings as a major desideratum. For the past century or so, it has been common both in practice and in the academic literature to jump to single transferable vote (STV) as the solution for achieving PR. Some of the most prominent electoral reform movements around the globe are pushing for the adoption of STV. It has been termed a major open problem to design a voting rule that satisfies the same PR properties as STV and better monotonicity properties. In this paper, we first present a taxonomy of proportional representation axioms for general weak order preferences, some of which generalise and strengthen previously introduced concepts. We then present a rule called Expanding Approvals Rule (EAR) that satisfies properties stronger than the central PR axiom satisfied by STV, can handle indifferences in a convenient and computationally efficient manner, and also satisfies better candidate monotonicity properties. In view of this, our proposed rule seems to be a compelling solution for achieving proportional representation in voting settings. Keywords: committee selection · multiwinner voting · proportional representation · single transferable vote. JEL Classification: C70 · D61 · D71. 1 Introduction. "Of all modes in which a national representation can possibly be constituted, this one [STV] affords the best security for the intellectual qualifications desirable in the representatives." (John Stuart Mill, Considerations on Representative Government, 1861)

Selecting the Runoff Pair

James Green-Armytage (New Jersey State Treasury, Trenton, NJ 08625) and T. Nicolaus Tideman (Department of Economics, Virginia Polytechnic Institute and State University, Blacksburg, VA 24061). This version: May 9, 2019. Accepted for publication in Public Choice on May 27, 2019. Abstract: Although two-round voting procedures are common, the theoretical voting literature rarely discusses any such rules beyond the traditional plurality runoff rule. Therefore, using four criteria in conjunction with two data-generating processes, we define and evaluate nine “runoff pair selection rules” that comprise two rounds of voting, two candidates in the second round, and a single final winner. The four criteria are: social utility from the expected runoff winner (UEW), social utility from the expected runoff loser (UEL), representativeness of the runoff pair (Rep), and resistance to strategy (RS). We examine three rules from each of three categories: plurality rules, utilitarian rules and Condorcet rules. We find that the utilitarian rules provide relatively high UEW and UEL, but low Rep and RS. Conversely, the plurality rules provide high Rep and RS, but low UEW and UEL. Finally, the Condorcet rules provide high UEW, high RS, and a combination of UEL and Rep that depends on which Condorcet rule is used. JEL Classification Codes: D71, D72. Keywords: Runoff election, two-round system, Condorcet, Hare, Borda, approval voting, single transferable vote, CPO-STV. We are grateful to those who commented on an earlier draft of this paper at the 2018 Public Choice Society conference. 1. Introduction. Voting theory is concerned primarily with evaluating rules for choosing a single winner, based on a single round of voting.

Midterm 2. Correction

University of Illinois at Urbana-Champaign, Spring 2007, Math 181, Group F1. Midterm 2. Correction. 1. The table below shows chemical compounds which cannot be mixed without causing dangerous reactions. Draw the graph that would be used to facilitate the choice of disposal containers for these compounds; what is the minimal number of containers needed? [Table of incompatible pairs among compounds A to F, and the accompanying colored graph, not recoverable from the extracted text.] Answer. The graph is above; any vertex coloring of this graph uses at least three colors since the graph contains a triangle, and the coloring above shows that three colors are enough. So the chromatic number of the graph is 3, which means that the minimal number of containers needed is 3. 2. (a) In designing a security system for its accounts, a bank asks each customer to choose a five-digit number, all the digits to be distinct and nonzero. How many choices can a customer make? Answer. There are 9 × 8 × 7 × 6 × 5 = 15120 possible choices. (b) A restaurant offers 4 soups, 10 entrees and 8 desserts. How many different choices for a meal can a customer make if one selection is made from each category? If 3 of the desserts are pie and the customer will never order pie, how many different meals can the customer choose? Answer. If one selection is made from each category, there are 4 × 10 × 8 = 320 different choices; if the customer never orders pie, he has only 5 desserts to choose from and thus can make 4 × 10 × 5 = 200 different choices.
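The counting answers in question 2 can be checked mechanically. The Python sketch below uses `math.perm` (available from Python 3.8) for the ordered selection of distinct nonzero digits and a plain product for the meal choices; the function names are hypothetical and chosen only for this illustration.

```python
import math


def distinct_nonzero_codes(length=5, digits=9):
    """Ordered selections of `length` distinct digits from 1..9."""
    return math.perm(digits, length)


def meal_choices(soups, entrees, desserts):
    """One independent selection from each category (multiplication principle)."""
    return soups * entrees * desserts


if __name__ == "__main__":
    print(distinct_nonzero_codes())   # five-digit codes
    print(meal_choices(4, 10, 8))     # all desserts allowed
    print(meal_choices(4, 10, 8 - 3)) # pie excluded
```

The three printed values agree with the answers given in the correction: 15120, 320 and 200.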

Committee Reports to the 2018 Kansas Legislature

Committee Reports to the 2018 Kansas Legislature: Supplement. Kansas Legislative Research Department, February 2018. 2017 Legislative Coordinating Council: Chairperson Senator Susan Wagle, President of the Senate; Vice-chairperson Representative Ron Ryckman, Speaker of the House; Jim Denning, Senate Majority Leader; Anthony Hensley, Senate Minority Leader; Don Hineman, House Majority Leader; Scott Schwab, Speaker Pro Tem; Jim Ward, House Minority Leader. Kansas Legislative Research Department, 300 SW 10th Avenue, Room 68-West, Statehouse, Topeka, Kansas 66612-1504. Telephone: (785) 296-3181. FAX: (785) 296-3824. http://www.kslegislature.org/klrd. Supplement: Special Committee on Commerce, Special Committee on Elections, Special Committee on Natural Resources. Foreword: This publication is the supplement to the Committee Reports to the 2018 Legislature. It contains the reports of the following committees: Special Committee on Commerce, Special Committee on Elections, and Special Committee on Natural Resources. This publication is available in electronic format at http://www.kslegresearch.org/KLRD-web/Publications.html. Table of Contents: Special Committee on Commerce Report; Special Committee on Elections

Approval Voting

Approval Voting. 21-301, 2018, 3 May. 1 Approval Voting. All of the voting methods we have discussed so far are known as "preferential voting" methods. In a preferential voting system, voters are asked for their specific preferences regarding candidates: whom do you prefer most, whom do you place second, etc. Nonpreferential voting is a variant in which voters do not rank candidates, but instead divide them into categories of "acceptable" and "unacceptable." The following schemes include some elements of nonpreferential voting, although some combine preferential and nonpreferential ideas. 1.1 Approval Voting: Basics. In an approval voting system, each voter indicates, for each candidate, whether they approve of that choice or not. There is no ranking necessary. The candidate with the highest number of approvals wins the election. Approval voting has gotten some recent support in political circles as a way of preventing a so-called "spoiler" candidate from taking support away from an otherwise viable first-choice candidate. If voters are permitted to approve multiple candidates, then they can vote both for the major candidate and the minor "spoiler." In addition, proponents of approval voting say that such systems reduce negative campaigning, as your goal is not to become a "lesser of two evils" but to maximize the number of voters who approve of you (this claim is hotly debated, but we've veered now into the crossover between mathematics and behavioral science). Criticisms of this approach center around the tendency of voters to engage in "bullet" voting, that is, voting only for their first-choice candidate rather than all those they approve; and the tendency of voters to approve of a candidate that they may not actually approve in order to prevent a different candidate from winning.

Election Auditing

ELECTION AUDITING: KEY ISSUES AND PERSPECTIVES. SUMMARY REPORT, ELECTION AUDIT SUMMIT, DECEMBER 7-8, 2018. ACKNOWLEDGMENTS: The coordinators of this summary report extend their deepest appreciation to each of the panelists who participated in the Election Audit Summit, without whom this report could not have taken form, and to all those involved in making the event and this report possible, especially Claire DeSoi, Daniel Guth, and Kathryn Treder. They also gratefully acknowledge the many attendees of that summit, who continue to advance the conversation on election administration and auditing in the United States. DISCLAIMER: The findings, interpretations, and conclusions expressed in this report do not necessarily represent the views or opinions of the California Institute of Technology or the Massachusetts Institute of Technology. The Caltech/MIT Voting Technology Project and the MIT Election Data & Science Lab encourage dissemination of knowledge and research; this report may be reproduced in whole or in part for non-commercial or educational purposes so long as full attribution to its authors is given. PREFACE: On December 7 and 8, 2018, the Caltech/MIT Voting Technology Project (VTP) hosted the Multidisciplinary Conference on Election Auditing, or “Election Audit Summit” for short, at the Massachusetts Institute of Technology in Cambridge, Massachusetts. The conference was organized by a small group of academics and practitioners from across the United States: R. Michael Alvarez (Caltech); Jennifer Morrell (Democracy Fund, Election Validation Project); Ronald Rivest (MIT); Philip Stark (UC Berkeley); Charles Stewart III (MIT). Inspired by the groundswell of interest in risk-limiting audits and other rigorous methods to ensure that elections are properly administered, the conference assembled an eclectic mix of academics, election officials, and members of the public to explore these issues.