Connecting the Last.fm Dataset to LyricWiki and MusicBrainz. Lyrics-Based Experiments in Genre Classification

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Festival Fever Sequel Season Fashion Heats Up

arts and entertainment weekly. THE SUMMER SEASON IS FOR MOVIES, MUSIC, FASHION AND LIFE ON THE BEACH. sequel season: movie multiples mean more hits. festival fever: the endless summer and even more music. fashion heats up: the hemlines rise with the temps. daily.titan BUZZ 05.14.07. TELEVISION VISIONS pg.3: One cancelled in record time and another with a bleak future, Buzz reviewers take a look at TV show’s future and short-lived past. SUMMER MOVIE PREVIEW pg.4: With sequels in the wings, this summer’s movie line-up is a force to be reckoned with. SUIT SEASON pg.6: Bathing suit season is upon us. Review runway trends and how you can rock the boardwalk on summer break. FESTIVAL FEVER pg.6: Summer music festivals are popping up like the summer temps. Take a look at what’s on the horizon during the summer months. WHAT’S THE BUZZ p.7. ENTERTAINMENT EDITOR Jickie Torres; EXECUTIVE EDITOR Adam Levy; DIRECTOR OF ADVERTISING Emily Alford; ASSISTANT DIRECTOR OF ADVERTISING Beth Stirnaman; PRODUCTION Jickie Torres; ACCOUNT EXECUTIVES Sarah Oak, Ailin Buigis. The Daily Titan 714.278.3373; The Buzz Editorial 714.278.5426 [email protected]; Editorial Fax 714.278.4473; The Buzz Advertising 714.278.3373 [email protected]; Advertising Fax 714.278.2702. The Buzz, a student publication, is a supplemental insert for the Cal State Fullerton Daily Titan. It is printed every Thursday. The Daily Titan operates independently of Associated Students, College of Communications, CSUF administration and the CSU system. The Daily Titan has functioned as a public forum since inception. -

Thepowerpopshow's

thepowerpopshow’s greatest hits vol. 21
the power pop show, fridays 5-7pm, kscu 103.3fm, santa clara, ca, FACEBOOK.COM/POWERPO…
1. army navy – the long goodbye (from the album the last place c. 2011)
2. fountains of wayne – someone’s gonna break your heart (from the album sky full of holes c. 2011)
3. the whiskey saints – always in my heart (from the album 24 hours c. 2011)
4. the smithereens – sorry (from the album 2011 c. 2011)
5. the wellingtons – baby’s got a secret (from the album in transit c. 2011)
6. bill schulz – future butterfly (from the album bill schulz c. 2011)
7. pugwash – there you are (from the album the olympus sound c. 2011)
8. the hazey janes – girl in the night (from the album the winter that was c. 2011)
9. cut copy – where i’m going (from the album zonoscope c. 2011)
10. ryan allen and his extra arms – oh yeah (from the album ryan allen and his extra arms c. 2011)
11. queen electric – gonna let you down (from the album queen electric c. 2011)
12. urge overkill – rock n’ roll submarine (from the album rock n’ roll submarine c. 2011)
13. the evil eye – vicious circle (digital single c. 2011)
14. boston spaceships – come on baby grace (from the album our cubehouse still rocks c. 2010)
15. sloan – follow the leader (from the album the double cross c. 2011)
16. beady eye – the beat goes on (from the album different gear, still speeding c. -

Banner of Light V25 N10 22 May 1869

I eti UL an. its ten ‘JU y. «u, od LIGH T at ter. 'ay ura Bar and ick, Old Ited •cry d In Ina. .dd; K. avid Ella eak> Cor* tion »0ay and. mlt- • f WM. WHITE & CO., 1 $3,00 PER ANNUM, VOL. XXV. (.Publishers and Proprietors.! BOSTON, SATURDAY, MAY 22, 1869. In Advance. NO. 10. iglve enn- a at Wll- would show thorn how knowledge may go hand too young to lie his mother, and tho total dissim iday, .doned the workshop altogether, put Ids trowel on. graphs appended of > the great men of the present Hoa 11 £ J il 1 JJ £ U HI I 111 £ Il !♦; the hook, and buried himself among his papers. day—without counting those which I was obliged in hand with industry; I would teach them to ilarity between them rendered this st ill moro Im ■■■■ ■ ■ y .. ■■■— My wife had often blamed my.patience, declar- to sell to get bread—a note from the minister of ilnd in mental enjoyment a recompense for physl- probable. Her countenance combined greet sweet itnral cal fatigue; I would assist them ns much as lay rbu, DFirTMranPMnTa inti T'VDt'DTPAinFQ InR tbftt the wm going to ruin; she soon public instruction informing me of a bounty of ness and intelligence; her speaking, gray eyes, ■ REMINISCENCES AND EXPERIENCES Z.XS Xf-S. fifty francs 1 accorded to; my literary merit!’ in my power; I would try to elevate them aud to though bright, woro an air of sadness; her cheeks a and '■•I.'’ ><UUUII Utvi l ' times, to warn»anfl advise James in a friendly, Those were the very words; It is at pnee a proof inspire them with a love for the ideal; I would were pale, but beautifully rounded, nnd around r way, and, at first, he gave some heed to my words, of my indigence, and a certificate of my glory. -

5-Stepcoordination Challenge Pat Travers’ Sandy Gennaro Lessons Learned Mike Johnston Redefining “Drum Hero”

WIN A WILD ZEBRA BLACK FADE DRUMKIT FROM DIXON VALUED OVER $9,250 • HAIM • WARPAINT • MIKE BORDIN THE WORLD’S #1 DRUM MAGAZINE APRIL 2014 DARKEST HOUR’S TRAVIS ORBIN LOVES A GOOD CHALLENGE BONUS! MIKE’S 5-STEP COORDINATION CHALLENGE PAT TRAVERS’ SANDY GENNARO LESSONS LEARNED MIKE JOHNSTON REDEFINING “DRUM HERO” MODERNDRUMMER.com + SABIAN CYMBAL VOTE WINNERS REVIEWED + VISTA CHINO’S BRANT BJORK TELLS IT LIKE IT IS + OLSSON AND MAHON GEAR UP FOR ELTON JOHN + BLUE NOTE MASTER MICKEY ROKER STYLE AND ANALYSIS NICK AUGUSTO TRIVIUM LEGENDARY ONLY BEGINS TO DESCRIBE THEM. IT STARTS HERE. “The excitement of getting my first kit was like no other, a Wine Red 5 piece Pearl Export. I couldn’t stop playing it. Export was the beginning of what made me the drummer I am today. I may play Reference Series now but for me, it all started with Export.” - Nick Augusto Join the Export family at pearldrum.com. ® CONTENTS Cover and contents photos by Elle Jaye Volume 38 • Number 4 EDUCATION 60 ROCK ’N’ JAZZ CLINIC Practical Independence Challenge A 5-Step Workout for Building Coordination Over a Pulse by Mike Johnston 66 AROUND THE WORLD Implied Brazilian Rhythms on Drumset Part 3: Cô co by Uka Gameiro 68 STRICTLY TECHNIQUE Rhythm and Timing Part 2: Two-Note 16th Groupings by Bill Bachman 72 JAZZ DRUMMER’S WORKSHOP Mickey Roker Style and Analysis by Steve Fidyk EQUIPMENT 20 PRODUCT CLOSE-UP • DW Collector’s Series Cherry Drumset • Sabian 2014 Cymbal Vote Winners • Rich Sticks Stock Series Drumsticks • TnR Products Booty Shakers and Little Booty Shakers • Magnus Opus FiBro-Tone Snare Drums 26 ELECTRONIC REVIEW Lewitt Audio DTP Beat Kit Pro 7 Drum Microphone Pack and LCT 240 Condensers On the Cover 50 MIKE JOHNSTON by Miguel Monroy Back in the day, you know, like five years ago, you had to be doing world tours or making platinum records to influence as many drummers as this month’s cover star does with his groundbreaking educational website. -

Mustang, August 26, 1971

Here Sunday. Jesse Fuller: One man band. It doesn’t seem too difficult to combine the sounds of a guitar, a harmonica, a kazoo and a bass fiddle, does it? All it takes is four performers and the instruments, right? Well, somebody forgot to tell Jesse Fuller that he needed three other people to perform with. Fuller has become a skilled musician with all four instruments and plays them equally well, at the same time. The Southern-born folk singer one-man band is performing this Sunday night at 8 in the [...] It takes a little finagling with the instruments to make it possible to play all four simultaneously. How is it done? Well, first of all figure out what to do with the harmonica and kazoo. Since both require wind they have to be rigged up in the area of the mouth. Fuller managed to attach them to a wire and hang it around his neck. Secondly, the bass fiddle and the guitar obviously can’t be played at the same time. [...] a toe, they strike a string. Now that the bass fiddle is basically changed why not rename it? How about fotdella. That's what Fuller named it. The song list that the 76 year-old Fuller plays is mainly blues, in the style of the late Leadbelly, a close friend of his. One of Fuller’s more popular songs is his own composition "San Francisco Bay Blues." Other songs are just everyday life blues. "The Lone Cat": 76 year-old Jesse Fuller, master of folk, [...] -

Enjoy Your Reading Adventure!

HAPPY LEARNERS NURSERY BOOK CORNER Enjoy your reading adventure! "You can find magic wherever you look... All you need is a book." - Dr. Seuss OLR's Book of Stories 2020 WRITING COMPETITION FINALISTS Ella K – 1 BLUE One day a little girl went for a walk and the girl saw a boat. She hopped in the boat and she went out to sea. She did almost get eaten by a shark and she wanted to go fishing. The girl did catch a lot of fish. She did bring the fish home and cooked them, and she said, “those fish were so so yum!”. The girls name was Elle. Ciara K-S - 1 BLUE Once upon a time there was a boy named Jack. He really loved going to the fun park. One day he went to the fun park and he found a green creek. He went over to the green creek and saw a scary snake. He got bitten by the scary snake and had to go to the hospital. “Why can’t it go away now?” he said. Kobe R - 1 GOLD There was a little girl named Madison and she went to the river to get some water. There she found a boat and it led her to a time machine and it took her back in time and it crashed in the sand. She lost the cable and she was trapped in time. -

Winter's Better with Freeze Over!

HAVE A BITE AT THE NEST. THE NUGGET: YOUR STUDENT NEWSPAPER. Thursday, Jan. 17, 2008, Volume 45, Issue 17, EDMONTON, ALBERTA, CANADA. Please recycle this newspaper when you are finished with it. Winter’s better with Freeze Over! NAITSA’s winter extravaganza on Feb. 1 features a survivor challenge, a tailgate party and a hockey game in the NAIT arena with bands and prizes. Story, Page 10. INSIDE: Arts and Culture: Page 10; Ask a Counsellor: Page 18; Editorial: Page 5; Grapevines: Page 17; Horoscope: Page 15; Hot Single: Page 16; Mouthing Off: Page 20; News: Page 2, 3, 4; Sports: Pages 6, 7, 8. AT THE NET: The NAIT women go for a block against a Lakeland College player on Saturday, Jan. 12 during a volleyball game at the NAIT gym. The Ooks won 3-1. Photo by Brendan Abbott. The Nugget, Thursday, January 17, 2008. NEWS&FEATURES. Life after president leaves NAITSA office moves on. GABRIELLE HAY-BYERS, Student Issues Editor. Photo by Dorothy Carter. With NAITSA Executive Council elections looming just around the corner on Feb. [...] “When you work so closely with people, to the point that you are eating, breathing and sleeping next to the person (when on conferences, for example), it is very hard to separate your work life from your personal life. I know that this is something every executive struggles with, and will continue to struggle with for years to come.” VP Academic Lisi Monro agrees. “We attend conferences together, we see each other 10-12 hours a day in the office. Personal life and professional life are melded together when [...] -

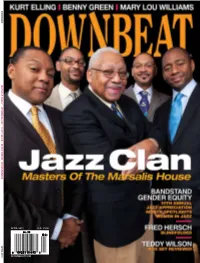

Downbeat.Com April 2011 U.K. £3.50

DOWNBEAT.COM APRIL 2011 U.K. £3.50 DOWNBEAT MARSALIS FAMILY // WOMEN IN JAZZ // KURT ELLING // BENNY GREEN // BRASS SCHOOL APRIL 2011 Volume 78 – Number 4 President Kevin Maher Publisher Frank Alkyer Editor Ed Enright Associate Editor Aaron Cohen Art Director Ara Tirado Production Associate Andy Williams Bookkeeper Margaret Stevens Circulation Manager Sue Mahal Circulation Associate Maureen Flaherty ADVERTISING SALES Record Companies & Schools Jennifer Ruban-Gentile 630-941-2030 [email protected] Musical Instruments & East Coast Schools Ritche Deraney 201-445-6260 [email protected] Classified Advertising Sales Sue Mahal 630-941-2030 [email protected] OFFICES 102 N. Haven Road Elmhurst, IL 60126–2970 630-941-2030 Fax: 630-941-3210 http://downbeat.com [email protected] CUSTOMER SERVICE 877-904-5299 [email protected] CONTRIBUTORS Senior Contributors: Michael Bourne, John McDonough, Howard Mandel Atlanta: Jon Ross; Austin: Michael Point, Kevin Whitehead; Boston: Fred Bouchard, Frank-John Hadley; Chicago: John Corbett, Alain Drouot, Michael Jackson, Peter Margasak, Bill Meyer, Mitch Myers, Paul Natkin, Howard Reich; Denver: Norman Provizer; Indiana: Mark Sheldon; Iowa: Will Smith; Los Angeles: Earl Gibson, Todd Jenkins, Kirk Silsbee, Chris Walker, Joe Woodard; Michigan: John Ephland; Minneapolis: Robin James; Nashville: Robert Doerschuk; New Orleans: Erika Goldring, David Kunian, Jennifer Odell; New York: Alan Bergman, Herb Boyd, Bill Douthart, Ira Gitler, Eugene Gologursky, Norm Harris, D.D. Jackson, Jimmy Katz, -

Sloan Parallel Play Mp3, Flac, Wma

Sloan Parallel Play mp3, flac, wma DOWNLOAD LINKS (Clickable) Genre: Rock Album: Parallel Play Country: Canada Released: 2008 MP3 version RAR size: 1959 mb FLAC version RAR size: 1842 mb WMA version RAR size: 1607 mb Rating: 4.1 Votes: 435 Other Formats: WMA VOX MOD VQF MMF XM APE Tracklist 1 Believe In Me 3:18 2 Cheap Champagne 2:46 3 All I Am Is All You're Not 3:03 4 Emergency 911 1:50 5 Burn For It 2:38 6 Witch's Wand 2:50 7 The Dogs 3:54 8 Living The Dream 2:53 9 The Other Side 2:54 10 Down In The Basement 2:59 11 If I Could Change Your Mind 2:08 12 I'm Not A Kid Anymore 2:26 13 Too Many 3:43 Credits Mastered By – Joao Carvalho Mixed By – Nick Detoro, Sloan Performer – Andrew Scott , Chris Murphy , Jay Ferguson , Patrick Pentland Performer [Additional] – Dick Pentland, Gregory Macdonald, Kevin Hilliard, Nick Detoro Producer – Nick Detoro, Sloan Recorded By – Nick Detoro Written-By – Sloan Notes The packaging is a four panel digipak. Barcode and Other Identifiers Barcode: 6 6674-400047 Other versions Category Artist Title (Format) Label Category Country Year YEP 2180 Sloan Parallel Play (CD, Album) Yep Roc Records YEP 2180 US 2008 Bittersweet Recordings, BS 055 CD Sloan Parallel Play (CD, Album) BS 055 CD Spain 2008 Murderecords Parallel Play (LP, Album, Yep-2180 Sloan Yep Roc Records Yep-2180 US 2008 180) Parallel Play (CD, Album, YEP-2180 Sloan Yep Roc Records YEP-2180 US 2008 Promo) HSR-002 Sloan Parallel Play (CD, Album) High Spot Records HSR-002 Australia 2008 Related Music albums to Parallel Play by Sloan P.F. -

Beets Documentation Release 1.5.1

beets Documentation Release 1.5.1 Adrian Sampson Oct 01, 2021 Contents: 1.1 Guides; 1.2 Reference; 1.3 Plugins; 1.4 FAQ; 1.5 Contributing; 1.6 For Developers; 1.7 Changelog; Index. Welcome to the documentation for beets, the media library management system for obsessive music geeks. If you’re new to beets, begin with the Getting Started guide. That guide walks you through installing beets, setting it up how you like it, and starting to build your music library. Then you can get a more detailed look at beets’ features in the Command-Line Interface and Configuration references. You might also be interested in exploring the plugins. If you still need help, you can drop by the #beets IRC channel on Libera.Chat, drop by the discussion board, send email to the mailing list, or file a bug in the issue tracker. Please let us know where you think this documentation can be improved. 1.1 Guides This section contains a couple of walkthroughs that will help you get familiar with beets. If you’re new to beets, you’ll want to begin with the Getting Started guide. 1.1.1 Getting Started Welcome to beets! This guide will help you begin using it to make your music collection better. Installing You will need Python. Beets works on Python 3.6 or later. • macOS 11 (Big Sur) includes Python 3.8 out of the box. -

Billy Joel and the Practice of Law: Melodies to Which a Lawyer Might Work

Touro Law Review Volume 32 Number 1 Symposium: Billy Joel & the Law Article 10 April 2016 Billy Joel and the Practice of Law: Melodies to Which a Lawyer Might Work Randy Lee Follow this and additional works at: https://digitalcommons.tourolaw.edu/lawreview Part of the Legal Profession Commons Recommended Citation Lee, Randy (2016) "Billy Joel and the Practice of Law: Melodies to Which a Lawyer Might Work," Touro Law Review: Vol. 32 : No. 1 , Article 10. Available at: https://digitalcommons.tourolaw.edu/lawreview/vol32/iss1/10 This Article is brought to you for free and open access by Digital Commons @ Touro Law Center. It has been accepted for inclusion in Touro Law Review by an authorized editor of Digital Commons @ Touro Law Center. For more information, please contact [email protected]. Lee: Billy Joel and the Practice of Law BILLY JOEL AND THE PRACTICE OF LAW: MELODIES TO WHICH A LAWYER MIGHT WORK Randy Lee* Piano Man has ten tracks.1 The Stranger has nine.2 This work has seven melodies to which a lawyer might work. I. TRACK 1: FROM PIANO BARS TO FIRES (WHY WE HAVE LAWYERS) Fulton Sheen once observed, “[t]he more you look at the clock, the less happy you are.”3 Piano Man4 begins by looking at the clock. “It’s nine o’clock on a Saturday.”5 As “[t]he regular crowd shuffles in,” there’s “an old man” chasing a memory, “sad” and “sweet” but elusive and misremembered.6 There are people who prefer “loneliness” to “being alone,” people in the wrong place, people out of time, no matter how much time they might have.7 They all show up at the Piano Man’s bar hoping “to forget about life for a while”8 because, as the song reminds us, sometimes people can find themselves in a place where their life is hard to live with. -

Whats That Sound 06-09 5.0 1/23/06 9:56 AM Page 325

Whats That Sound 06-09_5.0 1/23/06 9:56 AM Page 324 The Growth of FM Rock Radio. FM is a type of radio broadcast developed in 1933 by Edwin Howard Armstrong. It uses the same radio waves as AM but in a different way: information is transmitted by varying the frequency of the carrier wave, instead of the amplitude of the wave. FM broadcasts have less static and a shorter range than AM, so they are a purely local but higher-quality broadcast. The development of FM was bitterly contested by the radio industry, despite its obvious virtues. Because broadcasting corporations like RCA had millions of dollars invested in AM radio equipment, programming, and marketing, they had no desire to invest in an expensive new medium just so they could compete with themselves. But the tide for FM turned in the 1950s with the improvements in home audio equipment, the development of stereo recording and the LP, and the Federal Communications Commission’s 1967 order that dual-license owners had to provide original FM programming for at least 50 percent of the broadcast day. Beginning that year, stations moved quickly to differentiate themselves from AM. “Free-form” or “Progressive” radio was the format that worked best. Free-form radio was probably first developed at New York’s WOR-FM, but Tom Donahue became the most influential proponent of the format when he adopted it at KMPX-FM in San Francisco in the spring of 1967. Thanks to Donahue’s success in the Bay Area and subsequently in Los Angeles, the format spread nationwide by 1968. It also was the main format for student-run college stations. [...] developed a method of string- [...] result, progressive radio [...]