FFT-Based Spectrum Analysis Using a Digital Signal Processor

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

A Secure, Low Cost Synchophasor Measurement Device

University of Tennessee, Knoxville TRACE: Tennessee Research and Creative Exchange Doctoral Dissertations Graduate School 8-2015 A 3rd Generation Frequency Disturbance Recorder: A Secure, Low Cost Synchophasor Measurement Device Jerel Alan Culliss University of Tennessee - Knoxville, [email protected] Follow this and additional works at: https://trace.tennessee.edu/utk_graddiss Part of the Power and Energy Commons, Systems and Communications Commons, and the VLSI and Circuits, Embedded and Hardware Systems Commons Recommended Citation Culliss, Jerel Alan, "A 3rd Generation Frequency Disturbance Recorder: A Secure, Low Cost Synchophasor Measurement Device. " PhD diss., University of Tennessee, 2015. https://trace.tennessee.edu/utk_graddiss/3495 This Dissertation is brought to you for free and open access by the Graduate School at TRACE: Tennessee Research and Creative Exchange. It has been accepted for inclusion in Doctoral Dissertations by an authorized administrator of TRACE: Tennessee Research and Creative Exchange. For more information, please contact [email protected]. To the Graduate Council: I am submitting herewith a dissertation written by Jerel Alan Culliss entitled "A 3rd Generation Frequency Disturbance Recorder: A Secure, Low Cost Synchophasor Measurement Device." I have examined the final electronic copy of this dissertation for form and content and recommend that it be accepted in partial fulfillment of the equirr ements for the degree of Doctor of Philosophy, with a major in Electrical Engineering. Yilu Liu, Major Professor We have read this dissertation and recommend its acceptance: Leon M. Tolbert, Wei Gao, Lee L. Riedinger Accepted for the Council: Carolyn R. Hodges Vice Provost and Dean of the Graduate School (Original signatures are on file with official studentecor r ds.) A 3rd Generation Frequency Disturbance Recorder: A Secure, Low Cost Synchrophasor Measurement Device A Dissertation Presented for the Doctor of Philosophy Degree The University of Tennessee, Knoxville Jerel Alan Culliss August 2015 Copyright © 2015 by Jerel A. -

A Continuación Se Realizará Una Breve Descripción De Los Objetivos De Los Cuales Estará Formado El Trabajo Perteneciente

UNIVERSIDAD POLITÉCNICA DE MADRID Escuela Universitaria de Ingeniera Técnica de Telecomunicación INTEGRACIÓN MPLAYER – OPENSVC EN EL PROCESADOR MULTINÚCLEO OMAP3530 TRABAJO FIN DE MÁSTER Autor: Óscar Herranz Alonso Ingeniero Técnico de Telecomunicación Tutor: Fernando Pescador del Oso Doctor Ingeniero de Telecomunicación Julio 2012 2 4 AGRADECIMIENTOS Un año y pocos meses después de la defensa de mi Proyecto Fin de Carrera me vuelvo a encontrar en la misma situación: escribiendo estás líneas de mi Trabajo Fin de Máster para agradecer a aquellas personas que me han apoyado y ayudado de alguna forma durante esta etapa de mi vida. Después de un año en el que he hecho demasiadas cosas importantes en mi vida (y sorprendentemente todas bien), ha llegado la hora de dar por finalizado el Máster, el último paso antes de cerrar mi carrera de estudiante. Por ello, me gustaría agradecer en primer lugar al Grupo de Investigación GDEM por darme la oportunidad de formar parte de su equipo y en especial a Fernando, persona ocupada donde las haya, pero que una vez más me ha aconsejado en numerosas ocasiones el camino a seguir para solventar los problemas. Gracias Fernando por tu tiempo y dedicación. Agradecer a mis padres, Fidel y Victoria, y a mi hermano, Víctor, el apoyo y las fuerzas recibidas en todo momento. Sé que no todos podréis estar presentes en mi defensa de este Trabajo Fin de Máster pero da igual. Ya me habéis demostrado con creces lo maravillosos que sois. Gracias por vuestro apoyo incondicional y por recibirme siempre con una sonrisa dibujada en vuestro rostro. -

A Hardware Monitor Using Tms320c40 Analysis Module

Disclaimer: This document was part of the DSP Solution Challenge 1995 European Team Papers. It may have been written by someone whose native language is not English. TI assumes no liability for the quality of writing and/or the accuracy of the information contained herein. Implementing a Hardware Monitor Using the TMS320C40 Analysis Module and JTAG Interface for Performance Measurements in a Multi-DSP System Authors: R. Ginthor-Kalcsics, H. Eder, G. Straub, Dr. R. Weiss EFRIE, France December 1995 SPRA308 IMPORTANT NOTICE Texas Instruments (TI™) reserves the right to make changes to its products or to discontinue any semiconductor product or service without notice, and advises its customers to obtain the latest version of relevant information to verify, before placing orders, that the information being relied on is current. TI warrants performance of its semiconductor products and related software to the specifications applicable at the time of sale in accordance with TI’s standard warranty. Testing and other quality control techniques are utilized to the extent TI deems necessary to support this warranty. Specific testing of all parameters of each device is not necessarily performed, except those mandated by government requirements. Certain application using semiconductor products may involve potential risks of death, personal injury, or severe property or environmental damage (“Critical Applications”). TI SEMICONDUCTOR PRODUCTS ARE NOT DESIGNED, INTENDED, AUTHORIZED, OR WARRANTED TO BE SUITABLE FOR USE IN LIFE-SUPPORT APPLICATIONS, DEVICES OR SYSTEMS OR OTHER CRITICAL APPLICATIONS. Inclusion of TI products in such applications is understood to be fully at the risk of the customer. Use of TI products in such applications requires the written approval of an appropriate TI officer. -

Designing an Embedded Operating System with the TMS320 Family of Dsps

Designing an Embedded Operating System with the TMS320 Family of DSPs APPLICATION BRIEF: SPRA296A Astro Wu DSP Applications – TI Asia Digital Signal Processing Solutions December 1998 IMPORTANT NOTICE Texas Instruments and its subsidiaries (TI) reserve the right to make changes to their products or to discontinue any product or service without notice, and advise customers to obtain the latest version of relevant information to verify, before placing orders, that information being relied on is current and complete. All products are sold subject to the terms and conditions of sale supplied at the time of order acknowledgement, including those pertaining to warranty, patent infringement, and limitation of liability. TI warrants performance of its semiconductor products to the specifications applicable at the time of sale in accordance with TI's standard warranty. Testing and other quality control techniques are utilized to the extent TI deems necessary to support this warranty. Specific testing of all parameters of each device is not necessarily performed, except those mandated by government requirements. CERTAIN APPLICATIONS USING SEMICONDUCTOR PRODUCTS MAY INVOLVE POTENTIAL RISKS OF DEATH, PERSONAL INJURY, OR SEVERE PROPERTY OR ENVIRONMENTAL DAMAGE ('CRITICAL APPLICATIONS"). TI SEMICONDUCTOR PRODUCTS ARE NOT DESIGNED, AUTHORIZED, OR WARRANTED TO BE SUITABLE FOR USE IN LIFE-SUPPORT DEVICES OR SYSTEMS OR OTHER CRITICAL APPLICATIONS. INCLUSION OF TI PRODUCTS IN SUCH APPLICATIONS IS UNDERSTOOD TO BE FULLY AT THE CUSTOMER'S RISK. In order to minimize risks associated with the customer's applications, adequate design and operating safeguards must be provided by the customer to minimize inherent or procedural hazards. TI assumes no liability for applications assistance or customer product design. -

(HIL) Simulator for Simulation and Analysis of Embedded Systems with Non-Real-Time Applications

Louisiana State University LSU Digital Commons LSU Master's Theses Graduate School March 2021 Low-cost Hardware-In-the-Loop (HIL) Simulator for Simulation and Analysis of Embedded Systems with non-real-time Applications Chanuka S. Elvitigala Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_theses Part of the Hardware Systems Commons Recommended Citation Elvitigala, Chanuka S., "Low-cost Hardware-In-the-Loop (HIL) Simulator for Simulation and Analysis of Embedded Systems with non-real-time Applications" (2021). LSU Master's Theses. 5262. https://digitalcommons.lsu.edu/gradschool_theses/5262 This Thesis is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Master's Theses by an authorized graduate school editor of LSU Digital Commons. For more information, please contact [email protected]. LOW-COST HARDWARE-IN-THE-LOOP (HIL) SIMULATOR FOR SIMULATION AND ANALYSIS OF EMBEDDED SYSTEMS WITH NON-REAL-TIME APPLICATIONS A Thesis Submitted to the Graduate Faculty of the Louisiana State University and Agriculture and Mechanical College in partial fulfillment of the requirements for the degree of Master of Science in The Department of Electrical and Computer Engineering by Chanuka Sandaru Elvitigala B.Sc. Information Technology (Hons.), Universtiy of Moratuwa, Sri Lanka 2018 May 2021 To my family and teachers who believed that I can do good and friends who helped in different ways. ii Acknowledgment I take pleasure in submitting herewith the report on \Low-cost Hardware-In-the-Loop (HIL) Simulator for Simulation and Analysis of Embedded Systems with non-real Time Appli- cations" in partial fulfillment of the requirements for the degree of Master of Science in Electrical and Computer Engineering. -

Blackhawk Emulators

BlackhawkBlackhawk™™ USB510USB510 JTAGJTAG EmulatorEmulator forfor TITI DSP’s TI DSP platforms: C6000, C5000, C2000, OMAP, VC33 Compatible Operating Systems: Windows® 98, ME, 2000, XP The Blackhawk™ USB510 JTAG Emulator is the latest addi- tion to our high-performance JTAG Emulator lineup for Features Texas Instruments TMS320, TMS470 (ARM) and OMAP families. The credit card sized USB510 JTAG Emulator is the ▪ Ultra compact designed pod with status LED most compact and lightweight JTAG emulator available. High-speed USB 2.0 (480 Mbits/sec) port The USB bus powered design, which requires no external ▪ power source, is the ultimate in portability resulting in an ▪ Bus powered, no external power required ultra-compact unit that is very affordable. Blackhawk is the ▪ Windows® Plug n’ Play Installation first company to introduce a USB JTAG emulator for Texas Instruments DSP’s and the new USB510 set’s the standard ▪ Auto-sensing low I/O voltage support for portability, reliability and ease of installation. ▪ Windows® 98, ME, 2000, XP Drivers The high-speed USB 2.0 port operates at 480 Mbits/second ▪ High-speed micro coax cable assembly and is Plug n’ Play compatible with Windows® 98/ ME/2000/XP. Software compatibility with all versions of ▪ Free technical support and web downloads Code Composer and Code Composer Studio including v2.2 ▪ Standard 14-pin JTAG Header Connector and above, and a driver independent DLL developed by Affordable price Blackhawk, assures existing and future device compatibility ▪ for legacy or new DSP’s right out of the box with no up- grades required. System Requirements The Blackhawk USB510 includes legacy support for Code Composer v4.1x and CCStudio v1.2 gives users access and compatibility to VC33, C20x and C24x. -

Title: Digital Signal Processors and Development Tools

CEEE/CEU Course Title: Digital Signal Processors and Development Tools Speaker: Boris Gramatikov, PhD Assistant Professor, Ophthalmic Optics and Electronics Lab Wilmer Eye Institute, Johns Hopkins University School of Medicine, Baltimore, MD Abstract: Digital Signal Processors (DSPs) are used in a wide range of applications. Low-priced versions have made them very attractive in many applications that were considered too costly up until recently. With the introduction of the Texas Instruments TM320C6x processor architecture, DSPs started supporting features that facilitate the development of efficient high-level language compilers. Powerful programming tools like the Code Composer Studio (CCS) have enhanced programming productivity, while the addition of new hardware modules have improved connectivity, real-time operation, and execution speed. This, along with the introduction of a Real-Time Operating System (RTOS), the DSP-BIOS, and combination with Field-Programmable- Gate-Array (FPGA) technology has led to unprecedented expansion of DSP-based systems. This free four-hour mini-course will examine the origins of DSPs, their stages of development, and will cover the basics of getting started as a DSP developer. We will examine details of the most basic designs, analyze applications that include basic functions such as Fast Fourier Transform (FFT), digital filter design and implementation, signal synthesis (wave generation), compare using C- vs. Assembly language, Floating Point (FP) vs. Fixed point applications and much more. The course will focus on the C6713 processor and two development platforms – Traquair’s c6713Compact and TI’s DSP starter kit (DSK), both using the Code Composer Studio’s Integrated Development Environment (IDE). The course is appropriate for EE engineers wishing to acquire new knowledge and skills in the DSP area, system designers, embedded system programmers, senior undergraduate and first year graduate students, and anybody interested in computer engineering in general. -

Second-Generation Digital Signal Processors

TMS320 SECOND GENERATION DIGITAL SIGNAL PROCESSORS SPRS010B — MAY 1987 — REVISED NOVEMBER 1990 68-Pin GB Package† 80-ns Instruction Cycle Time (Top View) 544 Words of On-Chip Data RAM 1234567891011 4K Words of On-Chip Secure Program A EPROM (TMS320E25) B 4K Words of On-Chip Program ROM C (TMS320C25) D E 128K Words of Data/Program Space F 32-Bit ALU/Accumulator G 16 16-Bit Multiplier With a 32-Bit Product H J Block Moves for Data/Program K Management L Repeat Instructions for Efficient Use of Program Space Serial Port for Direct Codec Interface 68-Pin FN and FZ Packages† (Top View) Synchronization Input for Synchronous Multiprocessor Configurations CC CC D8 D9 D10 D11 D12 D13 D14 D15 READY CLKR CLKX V V Wait States for Communication to Slow 9876543216867666564636261 Off-Chip Memories/Peripherals VSS 10 60 IACK D7 11 59 MSC On-Chip Timer for Control Operations D6 12 58 CLKOUT1 D5 13 57 CLKOUT2 Single 5-V Supply D4 14 56 XF D3 15 55 HOLDA D2 16 54 DX Packaging: 68-Pin PGA, PLCC, and D1 17 53 FSX CER-QUAD D0 18 52 X2 CLKIN SYNC 19 51 X1 68-to-28 Pin Conversion Adapter Socket for INT0 20 50 BR INT1 21 49 STRB EPROM Programming INT2 22 48 R/W VCC 23 47 PS Commercial and Military Versions Available DR 24 46 IS FSR 25 45 DS NMOS Technology: A0 26 44 VSS ADVANCE INFORMATION — TMS32020......... 200-ns cycle time 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 A1 A2 A3 A4 A5 A6 A7 A8 A9 SS CC A11 A10 A12 A13 A14 A15 V CMOS Technology: V — TMS320C25....... -

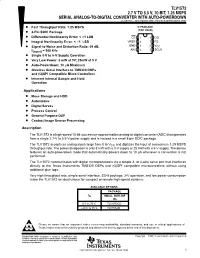

Texas Instruments TMS320 Dsps and (Q)SPI Compatible Microcontrollers Without Using Additional Glue Logic

TLV1572 2.7 V TO 5.5 V, 10-BIT, 1.25 MSPS SERIAL ANALOG-TO-DIGITAL CONVERTER WITH AUTO-POWERDOWN SLAS171A – DECEMBER 1997– REVISED SEPTEMBER 1998 D Fast Throughput Rate: 1.25 MSPS D PACKAGE (TOP VIEW) D 8-Pin SOIC Package D Differential Nonlinearity Error: < ±1 LSB CS 1 8 DO D Integral Nonlinearity Error: < ±1 LSB VREF 2 7 FS D Signal-to-Noise and Distortion Ratio: 59 dB, GND 3 6 VCC AIN 4 5 SCLK f(input) = 500 kHz D Single 3-V to 5-V Supply Operation D Very Low Power: 8 mW at 3V; 25mW at 5 V D Auto-Powerdown: 10 µA Maximum D Glueless Serial Interface to TMS320 DSPs and (Q)SPI Compatible Micro-Controllers D Inherent Internal Sample and Hold Operation Applications D Mass Storage and HDD D Automotive D Digital Servos D Process Control D General Purpose DSP D Contact Image Sensor Processing description The TLV1572 is a high-speed 10-bit successive-approximation analog-to-digital converter (ADC) that operates from a single 2.7-V to 5.5-V power supply and is housed in a small 8-pin SOIC package. The TLV1572 accepts an analog input range from 0 to VCC and digitizes the input at a maximum 1.25 MSPS throughput rate. The power dissipation is only 8 mW with a 3-V supply or 25 mW with a 5-V supply. The device features an auto-powerdown mode that automatically powers down to 10 µA whenever a conversion is not performed. The TLV1572 communicates with digital microprocessors via a simple 3- or 4-wire serial port that interfaces directly to the Texas Instruments TMS320 DSPs and (Q)SPI compatible microcontrollers without using additional glue logic. -

The Blackhawk™ USB-JTAG Emulator Is Now Shipping for the Texas Instruments TMS320Ô Family of Digital Signal Processors

The Blackhawk™ USB-JTAG Emulator is Now Shipping for the Texas Instruments TMS320Ô Family of Digital Signal Processors MARLTON, N.J. (March 15, 2001) – Enabling developers to link a USB- enabled Windows-based PC to a target DSP system, EWA, a leader in the development of Digital Signal Processing (DSP) Enabling Technologies™, today announced availability of the Blackhawk™ USB-JTAG Emulator . The Blackhawk™ USB-JTAG Emulator is fully compatible with the Texas Instruments (TI) TMS320Ô family of DSPs as well as TI's Code Composer StudioÔ integrated development environment (IDE). The Blackhawk™ USB-JTAG Emulator is a very-small footprint (5.5" x 2.6" x 1.1") device that allows a developer to link a USB-enabled Windows-based PC or laptop computer to a target DSP system via the target DSP system's JTAG interface connector. The developer can then use in-system scan-based emulation to perform system-level integration and debug. The target DSP can then be accessed, programmed and tested by making use of TI Code Composer Studio IDE running on the developer's host PC. The Blackhawk™ USB-JTAG Emulator provides a fast, flexible, simple connection, with no boards to install, and no jumpers to set. The drivers supplied with the emulator support Windows 98/2000 and Code Composer Studio, for both the TMS320C5000Ô and TMS320C6000Ô DSP platforms , an important added-value benefit. “In addition to being compact, the BlackhawkÔUSB-JTAG Emulator is a USB bus-powered peripheral that requires no external power, which allows it to be used just about anywhere,” said Bill Novak, Emulation Manager, TI . -

TMS320C6000 Optimizing Compiler V 7.0

TMS320C6000 Optimizing Compiler v 7.0 User's Guide Literature Number: SPRU187Q February 2010 2 SPRU187Q–February 2010 Submit Documentation Feedback Copyright © 2010, Texas Instruments Incorporated Contents Preface ...................................................................................................................................... 13 1 Introduction to the Software Development Tools ................................................................... 17 1.1 Software Development Tools Overview ................................................................................ 18 1.2 C/C++ Compiler Overview ................................................................................................ 19 1.2.1 ANSI/ISO Standard ............................................................................................... 19 1.2.2 Output Files ....................................................................................................... 20 1.2.3 Compiler Interface ................................................................................................ 20 1.2.4 Utilities ............................................................................................................. 20 2 Using the C/C++ Compiler .................................................................................................. 21 2.1 About the Compiler ........................................................................................................ 22 2.2 Invoking the C/C++ Compiler ........................................................................................... -

TMS320C6000 Optimizing Compiler V7.4 User's Guide

TMS320C6000 Optimizing Compiler v7.4 User's Guide Literature Number: SPRU187U July 2012 Contents Preface ...................................................................................................................................... 11 1 Introduction to the Software Development Tools ................................................................... 14 1.1 Software Development Tools Overview ................................................................................ 15 1.2 C/C++ Compiler Overview ................................................................................................ 16 1.2.1 ANSI/ISO Standard ............................................................................................... 16 1.2.2 Output Files ....................................................................................................... 17 1.2.3 Compiler Interface ................................................................................................ 17 1.2.4 Utilities ............................................................................................................. 17 2 Using the C/C++ Compiler .................................................................................................. 18 2.1 About the Compiler ........................................................................................................ 19 2.2 Invoking the C/C++ Compiler ............................................................................................ 19 2.3 Changing the Compiler's Behavior With Options .....................................................................