Best Practices and Metrics for Virtual Reality User Interfaces

Recommended publications

Albere Albe 1

ALBERE ELECTRONICS GmbH, Product List 2020. All products, excluding shipping fees. Price per unit (or otherwise explained), in euro.

Columns: ID, Category, Title. Category GAMEPADS:

- Lanjue USB GamePad 13001-S (PC)
- Tracer Gamepad Warrior PC
- VR Bluetooth Gamepad White
- Esperanza Vibration Gamepad USB Warrior PC/PS3
- Gembird JPD-UDV-01
- Competition PRO Powershock Controller (PS3/PC)
- PDP Rock Candy Red
- PC Joystick USB U-706
- Konix Drakkar Blood Axe
- Gembird USB Gamepad JPD-UB-01
- Element GM-300 Gamepad
- Intex DM-0216
- Esperanza Corsair Red
- Havit HV-G69
- Nunchuck Controller Wii/Wii U White
- Esperanza Fighter Black
- Esperanza Fighter Red
- VR Bluetooth Gamepad 383346582
- 744
- CO-100
- Shinecon SC-B01
- Gamepad T066
- Media-Tech MT1506 AdVenturer II
- Scene It? Buzzers XBOX 360 Red
- Media-Tech MT1507 Corsair II Black
- Esperanza EGG107R Black/Red
- Esperanza Wireless Gladiator Black
- 239
- PowerWay USB
- Nunchuck Controller Wii/Wii U Red
- Powertech BO-23

Using Your Smartphone As a Game Controller to Your PC

Bachelor Thesis, Computer Science, June 2013. Using your Smartphone as a Game Controller to your PC. Marcus Löwegren, Rikard Johansson. School of Computing, BTH-Blekinge Institute of Technology. Address: 371 79 Karlskrona, Telephone: +46 455 38 50 00.

This thesis is submitted to the School of Computing at Blekinge Institute of Technology in partial fulfillment of the requirements for the degree of Bachelor in Computer Science. The thesis is equivalent to 10 weeks of full time studies.

Abstract: Many people in the world today own a smartphone. Smartphones of today usually have an advanced array of inputs in forms of tilting, touching and speaking, and outputs in forms of visual representation on the screen, vibration of the smartphone and speakers for sound. They also usually have different kinds of connectivity in forms of WLAN, Bluetooth and USB. Despite this we are still not seeing a lot of interaction between computers and smartphones, especially within games. We believe that the high presence of smartphones amongst people combined with the advanced inputs and outputs of the smartphone and the connectivity possibilities makes the smartphone a very viable option to be used as a game controller for the PC. We experimented with this by developing the underlying architecture for the smartphone to communicate with the PC. Three different games were developed that users tested to see if the smartphone’s inputs are good enough to make it suitable for such purpose. We also attempted to find out if doing this made the gaming experience better, or in other words increased the enjoyment, of a PC game.

The Ergonomic Development of Video Game Controllers Raghav Bhardwaj* Department of Design and Technology, United World College of South East Asia, Singapore

Bhardwaj, J Ergonomics 2017, 7:4. Journal of Ergonomics. DOI: 10.4172/2165-7556.1000209. ISSN: 2165-7556. Research Article, Open Access.

The Ergonomic Development of Video Game Controllers. Raghav Bhardwaj*, Department of Design and Technology, United World College of South East Asia, Singapore.

Abstract: Video game controllers are often the primary input devices when playing video games on a myriad of consoles and systems. Many games are sometimes entirely shaped around a controller which makes the controllers paramount to a user’s gameplay experience. Due to the growth of the gaming industry and, by consequence, an increase in the variety of consumers, there has been an increased emphasis on the development of the ergonomics of modern video game controllers. These controllers now have to cater to a wider range of user anthropometrics and therefore manufacturers have to design their controllers in a manner that meets the anthropometric requirements for most of their potential users. This study aimed to analyse the evolution of video game controller ergonomics due to increased focus on user anthropometric data and to validate the hypothesis that these ergonomics have improved with successive generations of video game hardware. It has analysed and compared the key ergonomic features of the SEGA Genesis, Xbox, Xbox 360, and PS4 controllers to observe trends in their development, covering a range of 25 years of controller development. This study compared the dimensions of the key ergonomic features of the four controllers to ideal anthropometric values that have been standardised for use in other handheld devices such as TV remotes or machinery controls.

Download the User Manual and Keymander PC Software from Package Contents 1

Quick Start Guide. KeyMander™ - Keyboard & Mouse Adapter for Game Consoles. GE1337P. PART NO. Q1257-d

This Quick Start Guide is intended to cover basic setup and key functions to get you up and running quickly. For a complete explanation of features and full access to all KeyMander functions, please download the User Manual and KeyMander PC Software from www.IOGEAR.com/product/GE1337P

Package Contents

• 1 x GE1337P KeyMander Controller Emulator
• 2 x USB A to USB Mini B Cables
• 1 x 3.5mm Data Cable (reserved for future use)
• 1 x Quick Start Guide
• 1 x Warranty Card

System Requirements

Hardware

Game Consoles:
• PlayStation® 4
• PlayStation® 3
• Xbox® One S
• Xbox® One
• Xbox® 360

Console Controller:
• PlayStation® 4 Controller
• PlayStation® 3 Dual Shock 3 Controller (REQUIRED)*
• Xbox® 360 Wired Controller (REQUIRED)*
• Xbox® One Controller with Micro USB cable (REQUIRED)*
• USB keyboard & USB mouse**

Computer with available USB 2.0 port

OS
• Windows Vista®, Windows® 7, Windows® 8 and Windows® 8.1

*Some aftermarket wired controllers do not function correctly with KeyMander. It is strongly recommended to use official PlayStation/Xbox wired controllers.
**Compatible with select wireless keyboard/mouse devices.

Overview

1. Gamepad port
2. Keyboard port
3. Mouse port
4. Turbo/Keyboard Mode LED Indicator:
   a. Lights solid ORANGE when Turbo Mode is ON
   b. Flashes ORANGE when Keyboard Mode is ON
5. Setting LED indicator:
   a. Lights solid BLUE when PC port is connected to a computer.
   b. Flashes (Fast) BLUE when uploading a profile from a computer to the KeyMander.

About Your SHIELD Controller SHIELD Controller Overview

About Your SHIELD controller

Your NVIDIA® SHIELD™ controller works with your SHIELD tablet and SHIELD portable for exceptional responsiveness and immersion in today’s hottest games. It is the first-ever precision controller designed for both Android™ and PC gaming. Your controller includes the following features:

- Console-grade controls
- Ultra-responsive Wi-Fi performance
- Stereo headset jack with chat support
- Microphone for voice search
- Touch pad for easy navigation
- Rechargeable battery and USB charging cable

Your controller is compatible with the SHIELD portable and the SHIELD tablet. It can also be used as a wired controller for a Windows 7 or Windows 8 PC running GeForce Experience. Your controller cannot be used with other Android devices at this time.

SHIELD controller Overview

The SHIELD controller box includes one SHIELD controller, one USB charging cable, one Support Guide, and one Quick Start Guide.

SHIELD controller parts:

- D-pad
- NVIDIA button
- Android navigation and Start buttons
- A B X Y buttons
- Left thumbstick
- Right thumbstick
- Touchpad
- Volume control

Turn On or Turn Off your controller

How to Turn On the Controller: Tap the NVIDIA button.

How to Turn Off the Controller: Hold the NVIDIA button for 6 seconds. The controller automatically turns off when you turn off the SHIELD device the controller is connected to. The controller also automatically turns off after 10 minutes of idle time.

NOTE: The controller stays on during video and music playback on the SHIELD device. This allows the controller to be active to control media playback.

How to Connect Your Controller to a SHIELD Device

Your controller is compatible with the SHIELD portable and the SHIELD tablet.

English Sharkps3 Manual.Pdf

FRAGFX SHARK CLASSIC™ - WIRELESS CONTROLLER. ENGLISH

HAPPY FRAGGING!

Welcome and thank you for purchasing your new FragFX SHARK Classic for the Sony PlayStation 3, PC and MAC.

The FragFX SHARK classic is specifically designed for the Sony PlayStation 3, PC / MAC and compatible with most games, however you may find it's best suited to shooting, action and sport games. To use your FragFX SHARK classic, you are expected to have a working Sony PlayStation 3 console system.

For more information visit our web site at www.splitfish.com. Please read the entire instruction - you get most out of your FragFX SHARK classic.

Happy Fragging!

GET YOUR DONGLE READY

Select Platform Switch Position on the Dongle

PS3
- ‘Gamepad mode’ for function as a game controller
- ‘Keyboard Mode’ for chat and browser only

REPAIR CONNECTION BETWEEN DONGLE AND MOUSE/CHUCK

Should your dongle light up, but not connect to either mouse or chuck or both, the unit needs unpairing and pairing. This process should only have to be done once.

1) unpair the mouse (switch OFF the Chuck):
- Switch on the mouse
- Press R1, R2, mousewheel, start, G, A at the same time
- Switch the mouse off, and on again. The blue LED should now be blinking
- The mouse is now unpaired, and ready to be paired again

2) pair the mouse
- (Switch on the mouse)
- Insert the dongle into the PC or PS3
- Hold the mouse close (~10cm/~4inch) to the dongle, and press either F, R, A or G button
- The LED on the mouse should dim out and the green LED on the dongle should light
- If not, repeat the procedure

3) unpair the chuck (switch OFF the mouse):
- Switch on the chuck
- Press F, L1, L2, select, FX, L3 (press stick) at the same time
- Switch the chuck off, and on again.

Recommendations for Integrating a P300-Based Brain–Computer Interface in Virtual Reality Environments for Gaming: an Update

Computers, Review

Recommendations for Integrating a P300-Based Brain–Computer Interface in Virtual Reality Environments for Gaming: An Update

Grégoire Cattan (1,*), Anton Andreev (2) and Etienne Visinoni (3)

1 IBM, Cloud and Cognitive Software, Department of SaferPayment, 30-150 Krakow, Poland
2 GIPSA-lab, CNRS, Department of Platforms and Project, 38402 Saint Martin d’Hères, France; [email protected]
3 SputySoft, 75004 Paris, France; [email protected]
* Correspondence: [email protected]

Received: 19 September 2020; Accepted: 12 November 2020; Published: 14 November 2020

Abstract: The integration of a P300-based brain–computer interface (BCI) into virtual reality (VR) environments is promising for the video games industry. However, it faces several limitations, mainly due to hardware constraints and limitations engendered by the stimulation needed by the BCI. The main restriction is still the low transfer rate that can be achieved by current BCI technology, preventing movement while using VR. The goal of this paper is to review current limitations and to provide application creators with design recommendations to overcome them, thus significantly reducing the development time and making the domain of BCI more accessible to developers. We review the design of video games from the perspective of BCI and VR with the objective of enhancing the user experience. An essential recommendation is to use the BCI only for non-complex and non-critical tasks in the game. Also, the BCI should be used to control actions that are naturally integrated into the virtual world. Finally, adventure and simulation games, especially if cooperative (multi-user), appear to be the best candidates for designing an effective VR game enriched by BCI technology.

Q4-15 Earnings

January 27th, 2016. Facebook Q4-15 and Full Year 2015 Earnings Conference Call

Operator: Good afternoon. My name is Chris and I will be your conference operator today. At this time I would like to welcome everyone to the Facebook Fourth Quarter and Full Year 2015 Earnings Call. All lines have been placed on mute to prevent any background noise. After the speakers' remarks, there will be a question and answer session. If you would like to ask a question during that time, please press star then the number 1 on your telephone keypad. This call will be recorded. Thank you very much.

Deborah Crawford, VP Investor Relations: Thank you. Good afternoon and welcome to Facebook’s fourth quarter and full year 2015 earnings conference call. Joining me today to discuss our results are Mark Zuckerberg, CEO; Sheryl Sandberg, COO; and Dave Wehner, CFO. Before we get started, I would like to take this opportunity to remind you that our remarks today will include forward-looking statements. Actual results may differ materially from those contemplated by these forward-looking statements. Factors that could cause these results to differ materially are set forth in today’s press release, our annual report on Form 10-K and our most recent quarterly report on Form 10-Q filed with the SEC. Any forward-looking statements that we make on this call are based on assumptions as of today and we undertake no obligation to update these statements as a result of new information or future events. During this call we may present both GAAP and non-GAAP financial measures.

Virtual Reality Tremor Reduction in Parkinson's Disease

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 29 February 2020 | doi:10.20944/preprints202002.0452.v1

Virtual Reality tremor reduction in Parkinson's disease

John Cornacchioli, Alec Galambos, Stamatina Rentouli, Robert Canciello, Roberta Marongiu, Daniel Cabrera, eMalick G. Njie*

NeuroStorm, Inc, New York City, New York, United States of America
stoPD, Long Island City, New York, United States of America

*Corresponding author at: Dr. eMalick G. Njie, NeuroStorm, Inc, 1412 Broadway, Fl21, New York, NY 10018. Tel.: +1 347 651 1267; [email protected], [email protected]

Abstract: Multidisciplinary neurotechnology holds the promise of understanding and non-invasively treating neurodegenerative diseases. In this preclinical trial on Parkinson's disease (PD), we combined neuroscience together with the nascent field of medical virtual reality and generated several important observations. First, we established the Oculus Rift virtual reality system as a potent measurement device for parkinsonian involuntary hand tremors (IHT). Interestingly, we determined changes in rotation were the most sensitive marker of PD IHT. Secondly, we determined parkinsonian tremors can be abolished in VR with algorithms that remove tremors from patients' digital hands. We also found that PD patients were interested in and were readily able to use VR hardware and software. Together these data suggest PD patients can enter VR and be asymptomatic of PD IHT. Importantly, VR is an open medium where patients can perform actions, activities, and functions that positively impact their real lives - for instance, one can sign tax return documents in VR and have them printed on real paper or directly e-sign via internet to government tax agencies.

Video Game Control Dimensionality Analysis

http://www.diva-portal.org

Postprint. This is the accepted version of a paper presented at IE2014, 2-3 December 2014, Newcastle, Australia.

Citation for the original published paper: Mustaquim, M., Nyström, T. (2014) Video Game Control Dimensionality Analysis. In: Blackmore, K., Nesbitt, K., and Smith, S.P. (ed.), Proceedings of the 2014 Conference on Interactive Entertainment (IE2014). New York: Association for Computing Machinery (ACM). http://dx.doi.org/10.1145/2677758.2677784

N.B. When citing this work, cite the original published paper. Permanent link to this version: http://urn.kb.se/resolve?urn=urn:nbn:se:uu:diva-234183

Video Game Control Dimensionality Analysis

Moyen M. Mustaquim, Uppsala University, Uppsala, Sweden, +46 (0) 70 333 51 46, [email protected]
Tobias Nyström, Uppsala University, Uppsala, Sweden, +46 18 471 51 49, [email protected]

ABSTRACT

In this paper we have studied the video games control dimensionality and its effects on the traditional way of interpreting difficulty and familiarity in games. This paper presents the findings in which we have studied the Xbox 360 console’s games control dimensionality. Multivariate statistical operations were performed on the collected data from 83 different games of Xbox 360.

[Excerpt from the introduction:] notice that very few studies have concretely examined the effect of game controllers on game enjoyment [25]. A successfully designed controller can contribute in identifying different player experiences by defining various types of games that have been effortlessly played with a controller because of the controller’s design [18]. One example is the Microsoft Xbox controller that became a favorite among players when playing “first person-

Off-The-Shelf Stylus: Using XR Devices for Handwriting and Sketching on Physically Aligned Virtual Surfaces

TECHNOLOGY AND CODE. Published: 04 June 2021. doi: 10.3389/frvir.2021.684498

Off-The-Shelf Stylus: Using XR Devices for Handwriting and Sketching on Physically Aligned Virtual Surfaces

Florian Kern*, Peter Kullmann, Elisabeth Ganal, Kristof Korwisi, René Stingl, Florian Niebling and Marc Erich Latoschik

Human-Computer Interaction (HCI) Group, Informatik, University of Würzburg, Würzburg, Germany

Edited by: Daniel Zielasko, University of Trier, Germany
Reviewed by: Wolfgang Stuerzlinger, Simon Fraser University, Canada; Thammathip Piumsomboon, University of Canterbury, New Zealand
*Correspondence: Florian Kern fl[email protected]

This article introduces the Off-The-Shelf Stylus (OTSS), a framework for 2D interaction (in 3D) as well as for handwriting and sketching with digital pen, ink, and paper on physically aligned virtual surfaces in Virtual, Augmented, and Mixed Reality (VR, AR, MR: XR for short). OTSS supports self-made XR styluses based on consumer-grade six-degrees-of-freedom XR controllers and commercially available styluses. The framework provides separate modules for three basic but vital features: 1) The stylus module provides stylus construction and calibration features. 2) The surface module provides surface calibration and visual feedback features for virtual-physical 2D surface alignment using our so-called 3ViSuAl procedure, and surface interaction features. 3) The evaluation suite provides a comprehensive test bed combining technical measurements for precision, accuracy, and latency with extensive usability evaluations including handwriting and sketching tasks based on established visuomotor, graphomotor, and handwriting research. The framework’s development is accompanied by an extensive open source reference implementation targeting the Unity game engine using an Oculus Rift S headset and Oculus Touch controllers. The development compares three low-cost and low-tech options to equip controllers with a tip and includes a web browser-based surface providing support for interacting, handwriting, and sketching.

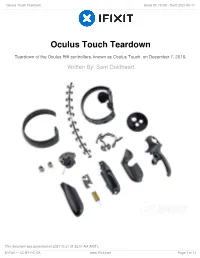

Oculus Touch Teardown Guide ID: 75109 - Draft: 2021-05-17

Oculus Touch Teardown

Teardown of the Oculus Rift controllers, known as Oculus Touch, on December 7, 2016. Written By: Sam Goldheart

This document was generated on 2021-05-21 01:32:07 AM (MST). © iFixit, CC BY-NC-SA, www.iFixit.com

INTRODUCTION

Oculus finally catches up with the big boys with the release of their ultra-responsive Oculus Touch controllers. Requiring a second IR camera and featuring a whole mess of tactile and capacitive input options, these controllers are bound to be chock full of IR LEDs and tons of exciting tech, but we'll only know for sure if we tear them down! Want to keep in touch? Follow us on Instagram, Twitter, and Facebook for all the latest gadget news.

TOOLS:

- T5 Torx Screwdriver (1)
- T6 Torx Screwdriver (1)
- iOpener (1)
- Soldering Iron (1)
- iFixit Opening Tools (1)
- Spudger (1)
- Tweezers (1)

Step 1: Oculus Touch Teardown

Before we tear down, we take the opportunity to ogle the Oculus Touch system, which includes:

- An additional Oculus Sensor
- A Rockband adapter
- Two IR-emitting Touch controllers with finger tracking and a mess of buttons

Step 2

Using our spectrespecs fancy IR-viewing technology, we get an Oculus Sensor eye's view of the Touch controllers.