An Analysis of Sexual Dimorphism in the Human Face

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Recognizing When a Child's Injury Or Illness Is Caused by Abuse

U.S. Department of Justice, Office of Justice Programs, Office of Juvenile Justice and Delinquency Prevention. Recognizing When a Child’s Injury or Illness Is Caused by Abuse. PORTABLE GUIDE TO INVESTIGATING CHILD ABUSE. U.S. Department of Justice, Office of Justice Programs, 810 Seventh Street NW., Washington, DC 20531. Eric H. Holder, Jr., Attorney General. Karol V. Mason, Assistant Attorney General. Robert L. Listenbee, Administrator, Office of Juvenile Justice and Delinquency Prevention. Office of Justice Programs: Innovation • Partnerships • Safer Neighborhoods, www.ojp.usdoj.gov. Office of Juvenile Justice and Delinquency Prevention: www.ojjdp.gov. The Office of Juvenile Justice and Delinquency Prevention is a component of the Office of Justice Programs, which also includes the Bureau of Justice Assistance; the Bureau of Justice Statistics; the National Institute of Justice; the Office for Victims of Crime; and the Office of Sex Offender Sentencing, Monitoring, Apprehending, Registering, and Tracking. NCJ 243908, July 2014. Contents: Could This Be Child Abuse?; Caretaker Assessment; Injury Assessment; Ruling Out a Natural Phenomenon or Medical Conditions

Evolutionary Trajectories Explain the Diversified Evolution of Isogamy And

Evolutionary trajectories explain the diversified evolution of isogamy and anisogamy in marine green algae. Tatsuya Togashi (a, b, c, 1), John L. Bartelt (c, d), Jin Yoshimura (a, e, f), Kei-ichi Tainaka (e), and Paul Alan Cox (c). (a) Marine Biosystems Research Center, Chiba University, Kamogawa 299-5502, Japan; (b) Precursory Research for Embryonic Science and Technology, Japan Science and Technology Agency, Kawaguchi 332-0012, Japan; (c) Institute for Ethnomedicine, Jackson Hole, WY 83001; (d) Evolutionary Programming, San Clemente, CA 92673; (e) Department of Systems Engineering, Shizuoka University, Hamamatsu 432-8561, Japan; and (f) Department of Environmental and Forest Biology, State University of New York College of Environmental Science and Forestry, Syracuse, NY 13210. Edited by Geoff A. Parker, University of Liverpool, Liverpool, United Kingdom, and accepted by the Editorial Board July 12, 2012 (received for review March 1, 2012). The evolution of anisogamy (the production of gametes of different size) is the first step in the establishment of sexual dimorphism, and it is a fundamental phenomenon underlying sexual selection. It is believed that anisogamy originated from isogamy (production of gametes of equal size), which is considered by most theorists to be the ancestral condition. Although nearly all plant and animal species are anisogamous, extant species of marine green algae [...] (11) suggested that the “gamete size model” (Parker, Baker, and Smith’s model) is the most applicable to fungi (11). However, none of these models successfully account for the evolution of all known forms of isogamy and anisogamy in extant marine green algae (12). Marine green algae are characterized by a variety of mating systems linked to their habitats (Fig.

Nasolabial and Forehead Flap Reconstruction of Contiguous Alar

Journal of Plastic, Reconstructive & Aesthetic Surgery (2017) 70, 330–335. Nasolabial and forehead flap reconstruction of contiguous alar–upper lip defects. Jonathan A. Zelken a,b, Sashank K. Reddy c, Chun-Shin Chang a, Shiow-Shuh Chuang a, Cheng-Jen Chang a, Hung-Chang Chen a, Yen-Chang Hsiao a,*. a Department of Plastic and Reconstructive Surgery, Chang Gung Memorial Hospital, College of Medicine, Chang Gung University, Taipei, Taiwan; b Department of Plastic and Reconstructive Surgery, Breastlink Medical Group, Laguna Hills, CA, USA; c Department of Plastic and Reconstructive Surgery, Johns Hopkins Hospital, Baltimore, MD, USA. Received 4 May 2016; accepted 31 October 2016. KEYWORDS: Nasal reconstruction; Nasolabial flap; Rhinoplasty; Forehead flap. Summary. Background: Defects of the nasal ala and upper lip aesthetic subunits can be challenging to reconstruct when they occur in isolation. When defects incorporate both the subunits, the challenge is compounded as subunit boundaries also require reconstruction, and local soft tissue reservoirs alone may provide inadequate coverage. In such cases, we used nasolabial flaps for upper lip reconstruction and a forehead flap for alar reconstruction. Methods: Three men and three women aged 21–79 years (average, 55 years) were treated for defects of the nasal ala and upper lip that resulted from cancer (n = 4) and trauma (n = 2). Unaffected contralateral subunits dictated the flap design. The upper lip subunit was excised and replaced with a nasolabial flap. The flap, depending on the contralateral reference, determined accurate alar base position. A forehead flap resurfaced or replaced the nasal ala. Autologous cartilage was used in every case to fortify the forehead flap reconstruction. Results: Patients were followed for 25.6 months (range, 1–4 years).

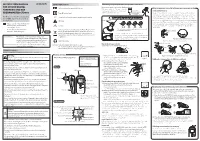

Instruction Manual for Citizen Digital Forehead And

INSTRUCTION MANUAL FOR CITIZEN DIGITAL FOREHEAD AND EAR THERMOMETER CTD710. Thank you very much for purchasing the CITIZEN digital forehead and ear thermometer. • Please read all of the information in this instruction manual before operating the device. • Be sure to have this instruction manual to hand during use. 1902LA. Symbol Explanations: Refer to instruction manual before use. Type BF applied part. IP22: Classification for water ingress and particulate matter. Warning. Caution. Indicates this device is subject to the Waste Electrical and Electronic Equipment Directive in the European Union. Measuring body temperature (temperature detection): Remove the probe cap and check the probe tip. * When using it for the first time, open the battery cap and remove the insulation sheet under the battery cap. (Figure: Open the battery cap to remove the insulation sheet.) Keeping the probe window clean: Dirt in the probe window will impact the accuracy of temperature detection. How to measure correctly in the ear measurement mode. Temperature basics: All objects radiate heat. This device consists of a probe with a built-in infrared sensor that measures body temperature by detecting the heat radiated by the eardrum and surrounding tissue. Figure 4 shows the tortuous anatomy of a normal ear canal. As shown in Figure 5, hold the ear and gently pull it back at an angle or pull it straight back to straighten out the ear canal. The shape of the ear canal differs between individuals; check before measurement. For accurate temperature measurements it is essential to straighten the ear canal so that the probe tip directly faces the eardrum. External auditory

Ear and Forehead Thermometer

Ear and Forehead Thermometer INSTRUCTION MANUAL Item No. 91807 19.PJN174-14_GA-USA_HHD-Ohrthermometer_DSO364_28.07.14 Monday, 28 July 2014 14:37:38 TABLE OF CONTENTS: 1.0 Definition of symbols; 2.0 Application and functionality; 2.1 Intended use; 2.2 Field of application; 3.0 Safety instructions; 3.1 General safety instructions; 3.3 Environment for which the DSO 364 device is not suited; 3.4 Usage by children and adolescents; 3.5 Information on the application of the device; 4.0 Questions concerning body temperature; 4.1 What is body temperature?; 4.2 Advantages of measuring the body temperature in the ear; 4.3 Information on measuring the body temperature in the ear; 5.0 Scope of delivery / contents; 6.0 Designation of device parts; 7.0 LCD display; 8.0 Basic functions; 8.1 Commissioning of the device; 8.2 Warning indicator if the body temperature is too high; 8.3 Backlighting / torch function; 8.4 Energy saving mode; 8.5 Setting °Celsius / °Fahrenheit; 9.0 Display / setting time and date; 9.1 Display of time and date; 9.2 Setting time and date; 10.0 Memory mode; 11.0 Measuring the temperature in the ear; 12.0 Measuring the temperature on the forehead; 13.0 Object temperature measurement; 14.0 Disposal of the device; 15.0 Battery change and information concerning batteries; 16.0 Cleaning and care; 17.0 “Cleaning” warning indicator; 18.0 Calibration; 19.0 Malfunctions; 20.0 Technical specification; 21.0 Warranty

Core Strength Exercises

CORE STRENGTH EXERCISES • The main muscles involved with core strength include muscles of the abdomen, hip flexors and low back postural muscles. • It is important to maintain proper strength of these muscles to prevent and rehabilitate from low back / pelvic dysfunction and pain. • All of these exercises should be comfortable and not produce pain or discomfort. Bracing: The key to preventing low back injuries. Abdominal Bracing – Lying down • Lie down, face up, with your knees bent and feet on the floor. • Contract your stomach muscles so that you imagine pushing your low back to the floor or pulling your belly button to your spine. Abdominal Bracing – Standing • Stand up straight and place one hand on the small of the back and one hand on your abdomen. • Bend forward at the waist and feel the lower back muscles contract (extensors). • Come back up to neutral to relax the low back muscles. • You will feel the low back muscles contract when you contract your abdominal and gluteal muscles. Bracing technique and curling • Lie on the ground on your back. • One leg is bent and the other leg remains flat on the floor. Your hands can be on your chest or at your side. • Fix your eyes on a spot on the ceiling directly above you. • Tighten your stomach muscles. • Holding the stomach contraction, lift your shoulder blades and head off the floor while looking at that spot on the ceiling. Hold for 3-10 seconds and repeat three times. • Switch leg positions and repeat the technique. Beginner to Intermediate position • Position yourself on your hands and knees with your pelvis and thighs at 90 degrees and upper back and arms at 90 degrees.

Facial Image Comparison Feature List for Morphological Analysis

Disclaimer: As a condition to the use of this document and the information contained herein, the Facial Identification Scientific Working Group (FISWG) requests notification by e-mail before or contemporaneously to the introduction of this document, or any portion thereof, as a marked exhibit offered for or moved into evidence in any judicial, administrative, legislative, or adjudicatory hearing or other proceeding (including discovery proceedings) in the United States or any foreign country. Such notification shall include: 1) the formal name of the proceeding, including docket number or similar identifier; 2) the name and location of the body conducting the hearing or proceeding; and 3) the name, mailing address (if available) and contact information of the party offering or moving the document into evidence. Subsequent to the use of this document in a formal proceeding, it is requested that FISWG be notified as to its use and the outcome of the proceeding. Notifications should be sent to: Redistribution Policy: FISWG grants permission for redistribution and use of all publicly posted documents created by FISWG, provided the following conditions are met: Redistributions of documents, or parts of documents, must retain the FISWG cover page containing the disclaimer. Neither the name of FISWG, nor the names of its contributors, may be used to endorse or promote products derived from its documents. Any reference or quote from a FISWG document must include the version number (or creation date) of the document and mention if the document is in a draft status. Version 2.0 2018.09.11 Facial Image Comparison Feature List for Morphological Analysis 1. -

Establishing Sexual Dimorphism in Humans

Coll. Antropol. 30 (2006) 3: 653–658. Review. Establishing Sexual Dimorphism in Humans. Roxani Angelopoulou, Giagkos Lavranos and Panagiota Manolakou, Department of Histology and Embryology, School of Medicine, University of Athens, Athens, Greece. ABSTRACT: Sexual dimorphism, i.e. the distinct recognition of only two sexes per species, is the phenotypic expression of a multistage procedure at chromosomal, gonadal, hormonal and behavioral level. Chromosomal – genetic sexual dimorphism refers to the presence of two identical (XX) or two different (XY) gonosomes in females and males, respectively. This is due to the distinct content of the X and Y-chromosomes in both genes and regulatory sequences, SRY being the key regulator. Hormones (AMH, testosterone, Insl3) secreted by the foetal testis (gonadal sexual dimorphism), impede Müller duct development, masculinize Wolff duct derivatives and are involved in testicular descent (hormonal sexual dimorphism). Steroid hormone receptors detected in the nervous system, link androgens with behavioral sexual dimorphism. Furthermore, sex chromosome genes directly affect brain sexual dimorphism and this may precede gonadal differentiation. Key words: SRY, Insl3, testis differentiation, gonads, androgens, AMH, Müller / Wolff ducts, aromatase, brain, behavioral sex. Introduction: Sex is a set model of anatomy and behavior, characterized by the ability to contribute to the process of reproduction. Although the latter is possible in the absence of sex or in its multiple presences, the most typical pattern and the one corresponding to humans is that of sexual di- [...] latter referring to the two identical gonosomes in each diploid cell. The basis of sexual dimorphism in mammals derives from the evolution of the sex chromosomes [2].

Sex-Specific Spawning Behavior and Its Consequences in an External Fertilizer

vol. 165, no. 6, The American Naturalist, June 2005. Sex-Specific Spawning Behavior and Its Consequences in an External Fertilizer. Don R. Levitan*, Department of Biological Science, Florida State University, Tallahassee, Florida 32306-1100. Submitted October 29, 2004; Accepted February 11, 2005; Electronically published April 4, 2005. Abstract: Identifying the target of sexual selection in externally fertilizing taxa has been problematic because species in these taxa often lack sexual dimorphism. However, these species often show sex differences in spawning behavior; males spawn before females. I investigated the consequences of spawning order and time intervals between male and female spawning in two field experiments. The first involved releasing one female sea urchin’s eggs and one or two males’ sperm in discrete puffs from syringes; the second involved inducing males to spawn at different intervals in situ within a population of spawning females. [...] a very simple way: the timing of gamete release (Levitan 1998b). This allows for an investigation of how mating behavior can influence mating success without the complications imposed by variation in adult morphological features, interactions within the female reproductive system, or post-mating (or pollination) investments that can all influence paternal and maternal success (Arnqvist and Rowe 1995; Havens and Delph 1996; Eberhard 1998). It also provides an avenue for exploring how the evolution of sexual dimorphism in adult traits may be related to the evolutionary transition to internal fertilization. One of the most striking patterns among animals and in particular invertebrate taxa is that, generally, species that copulate or pseudocopulate exhibit sexual dimorphism whereas species that broadcast gametes do not

Sexual Dimorphism in Four Species of Rockfish Genus Sebastes (Scorpaenidae)

Sexual dimorphism in four species of rockfish genus Sebastes (Scorpaenidae). Tina Wyllie Echeverria, Southwest Fisheries Center Tiburon Laboratory, National Marine Fisheries Service, NOAA, 3150 Paradise Drive, Tiburon, CA 94920, U.S.A. Keywords: Morphology, Sexual selection, Natural selection, Parental care, Discriminant analysis. Synopsis: Sexual dimorphisms, and factors influencing the evolution of these differences, have been investigated for four species of rockfish: Sebastes melanops, S. flavidus, S. mystinus, and S. serranoides. These four species, which have similar ecology, tend to aggregate by species with males and females staying together throughout the year. In all four species adult females reach larger sizes than males, which probably relates to their role in reproduction. The number of eggs produced increases with size, so that natural selection has favored larger females. It appears males were subjected to different selective pressures than females. It was more advantageous for males to mature quickly, to become reproductive, than to expend energy on growth. Other sexually dimorphic features include larger eyes in males of all four species and longer pectoral fin rays in males of the three piscivorous species: S. melanops, S. flavidus, and S. serranoides. The larger pectoral fins may permit smaller males to coexist with females by increasing acceleration and, together with the proportionately larger eye, enable the male to compete successfully with the female to capture elusive prey (the latter not necessarily useful for the planktivore S. mystinus). Since the size of the eye is equivalent in both sexes of the same age, visual perception should be comparable for both sexes. Introduction: Natural selection is a mechanism of evolution which can influence physical characteristics of a [...] tails (Xiphophorus sp.) and gobies (Gobius sp.) as fin variations, in guppies (Poecilia sp.) and wrasses (Labrus sp.) as color variations, in eels (Anguilla sp.) and mullets (Mugil sp.) as size differences.

Surgical Anatomy of the Ligamentous Attachments in the Temple and Periorbital Regions

Cosmetic. Surgical Anatomy of the Ligamentous Attachments in the Temple and Periorbital Regions. Christopher J. Moss, M.B., B.S., F.R.A.C.S., Dip.Anat., Bryan C. Mendelson, F.R.C.S.(E), F.R.A.C.S., F.A.C.S., and G. Ian Taylor, F.R.C.S., F.R.A.C.S., M.D. Melbourne, Australia. This study documents the anatomy of the deep attachments of the superficial fasciae within the temporal and periorbital regions. A highly organized and consistent three-dimensional connective tissue framework supports the overlying skin and soft tissues in these areas. The regional nerves and vessels display constant and predictable relationships with both the fascial planes and their ligamentous attachments. Knowledge of these relationships allows the surgeon to use the tissue planes and soft-tissue ligaments as intraoperative landmarks for the vital neurovascular structures. This results in improved efficiency and safety for aesthetic procedures in these regions. (Plast. Reconstr. Surg. 105: 1475, 2000.) The patterns of arrangement of the layers of superficial fascia in the cheek (1–9), forehead (10–13), scalp (14), and temple (15,16) have been well described. [...] complex system of deep attachments that arise from the underlying deep fascia/periosteum. The subSMAS plane that contains these attachments is therefore not always a simple cleavage plane. This explains why surgical dissection is considerably more complicated in the midfacial, temporal, and periorbital regions than in the scalp. In the cheek, these deep attachments have been defined as the zygomatic, masseteric, and mandibular-cutaneous ligaments (21,22). These ligaments provide a lateral line of fixation for the mobile tissues of the medial cheek. Release of these retaining ligaments is fundamental to the extended SMAS technique of “deep-plane” sur- (18,19,23,24)

Human Anatomy and Physiology

LECTURE NOTES For Nursing Students. Human Anatomy and Physiology. Nega Assefa, Alemaya University; Yosief Tsige, Jimma University. In collaboration with the Ethiopia Public Health Training Initiative, The Carter Center, the Ethiopia Ministry of Health, and the Ethiopia Ministry of Education. 2003. Funded under USAID Cooperative Agreement No. 663-A-00-00-0358-00. Produced in collaboration with the Ethiopia Public Health Training Initiative, The Carter Center, the Ethiopia Ministry of Health, and the Ethiopia Ministry of Education. Important Guidelines for Printing and Photocopying: Limited permission is granted free of charge to print or photocopy all pages of this publication for educational, not-for-profit use by health care workers, students or faculty. All copies must retain all author credits and copyright notices included in the original document. Under no circumstances is it permissible to sell or distribute on a commercial basis, or to claim authorship of, copies of material reproduced from this publication. ©2003 by Nega Assefa and Yosief Tsige. All rights reserved. Except as expressly provided above, no part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage and retrieval system, without written permission of the author or authors. This material is intended for educational use only by practicing health care workers or students and faculty in a health care field. Human Anatomy and Physiology. Preface: There is a shortage in Ethiopia of teaching / learning material in the area of anatomy and physiology for nurses. The Carter Center EPHTI, appreciating the problem, promoted the development of this lecture note, which could help both teachers and students.