Natural Language Processing and Text Mining with Graph-Structured Representations

Recommended publications

Construction of English-Bodo Parallel Text

International Journal on Natural Language Computing (IJNLC) Vol.7, No.5, October 2018. CONSTRUCTION OF ENGLISH-BODO PARALLEL TEXT CORPUS FOR STATISTICAL MACHINE TRANSLATION. Saiful Islam 1, Abhijit Paul 2, Bipul Syam Purkayastha 3 and Ismail Hussain 4. 1,2,3 Department of Computer Science, Assam University, Silchar, India. 4 Department of Bodo, Gauhati University, Guwahati, India. ABSTRACT: A corpus is a large collection of homogeneous and authentic written texts (or speech) of a particular natural language which exists in machine-readable form. The scope of the corpus is endless in Computational Linguistics and Natural Language Processing (NLP). A parallel corpus is a very useful resource for most applications of NLP, especially for Statistical Machine Translation (SMT). SMT is the most popular approach to Machine Translation (MT) nowadays, and it can produce high-quality translation results based on a huge amount of aligned parallel text corpora in both the source and target languages. Although Bodo is a recognized natural language of India and a co-official language of Assam, machine-readable information in the Bodo language is still very scarce. Therefore, to expand the computerized information of the language, an English to Bodo SMT system has been developed. This paper mainly focuses on building English-Bodo parallel text corpora to implement the English to Bodo SMT system using the Phrase-Based SMT approach. We have designed an E-BPTC (English-Bodo Parallel Text Corpus) creator tool and have constructed General and Newspaper domain English-Bodo parallel text corpora. Finally, the quality of the constructed parallel text corpora has been tested using two evaluation techniques in the SMT system.

Usage of IT Terminology in Corpus Linguistics Mavlonova Mavluda Davurovna

International Journal of Innovative Technology and Exploring Engineering (IJITEE) ISSN: 2278-3075, Volume-8, Issue-7S, May 2019. Usage of IT Terminology in Corpus Linguistics. Mavlonova Mavluda Davurovna.

Abstract: Corpus linguistic will be the one of latest and fast method for teaching and learning of different languages. Proposed article can provide the corpus linguistic introduction and give the general overview about the linguistic team of corpus. Article described about the corpus, novelties and corpus are associated in the following field. Proposed paper can focus on the using the corpus linguistic in IT field. As from research it is obvious that corpora will be used as internet and primary data in the field of IT. The method of text corpus is the digestive approach which can drive the different set of rules to govern the natural language from the text in the particular language, it can explore about languages that how it can inter-related with other. Hence the corpus linguistic will be automatically drive from the text sources.

At this period corpora were used in semantics and related field. At this period corpus linguistics was not commonly used, no computers were used. Corpus linguistic history were opposed by Noam Chomsky. Now a day's modern corpus linguistic was formed from English work context and now it is used in many other languages. Alongside was corpus linguistic history and this is considered as methodology [Abdumanapovna, 2018]. A landmark is the modern corpus linguistic which was publish by Henry Kucera. Corpus linguistic introduce new methods and techniques for more complete description of modern languages and these also provide opportunities to obtain new result with

ForeScout CounterACT® Endpoint Support Compatibility Matrix Updated: October 2018

ForeScout CounterACT® Endpoint Support Compatibility Matrix. Updated: October 2018. Table of Contents: About Endpoint Support Compatibility; Operating Systems; Microsoft Windows (32 & 64 BIT Versions); MAC OS X / MACOS; Linux; Web Browsers; Microsoft Windows Applications; Antivirus; Peer-to-Peer; Instant Messaging; Anti-Spyware; Personal Firewall; Hard Drive Encryption; Cloud Sync

Enriching an Explanatory Dictionary with FrameNet and PropBank Corpus Examples

Proceedings of eLex 2019. Enriching an Explanatory Dictionary with FrameNet and PropBank Corpus Examples. Pēteris Paikens 1, Normunds Grūzītis 2, Laura Rituma 2, Gunta Nešpore 2, Viktors Lipskis 2, Lauma Pretkalniņa 2, Andrejs Spektors 2. 1 Latvian Information Agency LETA, Marijas street 2, Riga, Latvia. 2 Institute of Mathematics and Computer Science, University of Latvia, Raina blvd. 29, Riga, Latvia. Abstract: This paper describes ongoing work to extend an online dictionary of Latvian – Tezaurs.lv – with representative semantically annotated corpus examples according to the FrameNet and PropBank methodologies and word sense inventories. Tezaurs.lv is one of the largest open lexical resources for Latvian, combining information from more than 300 legacy dictionaries and other sources. The corpus examples are extracted from Latvian FrameNet and PropBank corpora, which are manually annotated parallel subsets of a balanced text corpus of contemporary Latvian. The proposed approach augments traditional lexicographic information with modern cross-lingually interpretable information and enables analysis of word senses from the perspective of frame semantics, which is substantially different from (complementary to) the traditional approach applied in Latvian lexicography. In cases where FrameNet and PropBank corpus evidence aligns well with the word sense split in legacy dictionaries, the frame-semantically annotated corpus examples supplement the word sense information with clarifying usage examples and commonly used semantic valence patterns. However, the annotated corpus examples often provide evidence of a different sense split, which is often more coarse-grained and, thus, may help dictionary users to cluster and comprehend a fine-grained sense split suggested by the legacy sources.

Toward a Sustainable Handling of Interlinear-Glossed Text in Language Documentation

Toward a Sustainable Handling of Interlinear-Glossed Text in Language Documentation. JOHANN-MATTIS LIST, Max Planck Institute for the Science of Human History, Germany; NATHANIEL A. SIMS, The University of California, Santa Barbara, America; ROBERT FORKEL, Max Planck Institute for the Science of Human History, Germany. While the amount of digitally available data on the world's languages is steadily increasing, with more and more languages being documented, only a small proportion of the language resources produced are sustainable. Data reuse is often difficult due to idiosyncratic formats and a neglect of standards that could help to increase the comparability of linguistic data. The sustainability problem is nicely reflected in the current practice of handling interlinear-glossed text, one of the crucial resources produced in language documentation. Although large collections of glossed texts have been produced so far, the current practice of data handling makes data reuse difficult. In order to address this problem, we propose a first framework for the computer-assisted, sustainable handling of interlinear-glossed text resources. Building on recent standardization proposals for word lists and structural datasets, combined with state-of-the-art methods for automated sequence comparison in historical linguistics, we show how our workflow can be used to lift a collection of interlinear-glossed Qiang texts (an endangered language spoken in Sichuan, China), and how the lifted data can assist linguists in their research. CCS Concepts: • Applied computing → Language translation; Additional Key Words and Phrases: Sino-Tibetan, interlinear-glossed text, computer-assisted language comparison, standardization, Qiang. ACM Reference format: Johann-Mattis List, Nathaniel A.

Linguistic Annotation of the Digital Papyrological Corpus: Sematia

Marja Vierros. Linguistic Annotation of the Digital Papyrological Corpus: Sematia. 1 Introduction: Why to annotate papyri linguistically? Linguists who study historical languages usually find the methods of corpus linguistics exceptionally helpful. When the intuitions of native speakers are lacking, as is the case for historical languages, the corpora provide researchers with materials that replace the intuitions on which the researchers of modern languages can rely. Using large corpora and computers to count and retrieve information also provides empirical back-up from actual language usage. In the case of ancient Greek, the corpus of literary texts (e.g. Thesaurus Linguae Graecae or the Greek and Roman Collection in the Perseus Digital Library) gives information on the Greek language as it was used in lyric poetry, epic, drama, and prose writing; all these literary genres had some artistic aims and therefore do not always describe language as it was used in normal communication. Ancient written texts rarely reflect everyday language use, let alone speech. However, the corpus of documentary papyri gets close. The writers of the papyri vary between professionally trained scribes and some individuals who had only rudimentary writing skills. The text types also vary from official decrees and orders to small notes and receipts. What they have in common, though, is that they have been written for a specific, current need instead of trying to impress a specific audience. Documentary papyri represent everyday texts, utilitarian prose, and in that respect, they provide us a very valuable source of language actually used by common people in everyday circumstances.

Formal Concept Analysis in Knowledge Processing: A Survey on Applications

Expert Systems with Applications 40 (2013) 6538–6560. Journal homepage: www.elsevier.com/locate/eswa. Review. Formal concept analysis in knowledge processing: A survey on applications. Jonas Poelmans a,c, Dmitry I. Ignatov c, Sergei O. Kuznetsov c, Guido Dedene a,b. a KU Leuven, Faculty of Business and Economics, Naamsestraat 69, 3000 Leuven, Belgium. b Universiteit van Amsterdam Business School, Roetersstraat 11, 1018 WB Amsterdam, The Netherlands. c National Research University Higher School of Economics, Pokrovsky boulevard, 11, 109028 Moscow, Russia.

Keywords: Formal concept analysis (FCA); Knowledge discovery in databases; Text mining; Applications; Systematic literature overview.

Abstract: This is the second part of a large survey paper in which we analyze recent literature on Formal Concept Analysis (FCA) and some closely related disciplines using FCA. We collected 1072 papers published between 2003 and 2011 mentioning terms related to Formal Concept Analysis in the title, abstract and keywords. We developed a knowledge browsing environment to support our literature analysis process. We use the visualization capabilities of FCA to explore the literature, to discover and conceptually represent the main research topics in the FCA community. In this second part, we zoom in on and give an extensive overview of the papers published between 2003 and 2011 which applied FCA-based methods for knowledge discovery and ontology engineering in various application domains. These domains include software mining, web analytics, medicine, biology and chemistry data. © 2013 Elsevier Ltd. All rights reserved.

1. Introduction

mining, i.e. gaining insight into source code with FCA.
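For readers unfamiliar with FCA, the following is a minimal sketch of how formal concepts can be derived from a small object-attribute table. It is not taken from the survey: the toy context (`paper1` to `paper3` and their topic tags) and all function names are hypothetical, and the brute-force enumeration is only practical for very small attribute sets.

```python
from itertools import combinations

# Hypothetical toy context: papers (objects) tagged with topics (attributes).
CONTEXT = {
    "paper1": {"text mining", "ontology"},
    "paper2": {"text mining", "web analytics"},
    "paper3": {"ontology"},
}


def common_attributes(objects, context):
    """Attributes shared by every object in `objects` (all attributes if empty)."""
    shared = set().union(*context.values())
    for obj in objects:
        shared &= context[obj]
    return shared


def objects_with(attributes, context):
    """Objects that carry every attribute in `attributes`."""
    return {obj for obj, attrs in context.items() if attributes <= attrs}


def formal_concepts(context):
    """Enumerate all (extent, intent) pairs by closing every attribute subset."""
    all_attrs = sorted(set().union(*context.values()))
    concepts = set()
    for size in range(len(all_attrs) + 1):
        for subset in combinations(all_attrs, size):
            extent = objects_with(set(subset), context)
            intent = common_attributes(extent, context)
            concepts.add((frozenset(extent), frozenset(intent)))
    return concepts


if __name__ == "__main__":
    for extent, intent in sorted(formal_concepts(CONTEXT), key=lambda c: len(c[0])):
        print(sorted(extent), sorted(intent))
```

Each resulting pair is closed in both directions: the intent is exactly the set of attributes shared by the extent, and the extent is exactly the set of objects carrying the intent. Ordering these pairs by extent inclusion gives the concept lattice that FCA tools visualize.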

Tracking Users Across the Web Via TLS Session Resumption

Tracking Users across the Web via TLS Session Resumption. Erik Sy, Christian Burkert, Hannes Federrath, Mathias Fischer (University of Hamburg).

ABSTRACT: User tracking on the Internet can come in various forms, e.g., via cookies or by fingerprinting web browsers. A technique that got less attention so far is user tracking based on TLS and specifically based on the TLS session resumption mechanism. To the best of our knowledge, we are the first that investigate the applicability of TLS session resumption for user tracking. For that, we evaluated the configuration of 48 popular browsers and one million of the most popular websites. Moreover, we present a so-called prolongation attack, which allows extending the tracking period beyond the lifetime of the session resumption mechanism. To show that under the observed browser configurations tracking via TLS session resumptions is feasible, we also looked into DNS data to understand

modes, and browser extensions to restrict tracking practices such as HTTP cookies. Browser fingerprinting got more difficult, as trackers can hardly distinguish the fingerprints of mobile browsers. They are often not as unique as their counterparts on desktop systems [4, 12]. Tracking based on IP addresses is restricted because of NAT that causes users to share public IP addresses and it cannot track devices across different networks. As a result, trackers have an increased interest in additional methods for regaining the visibility on the browsing habits of users. The result is a race of arms between trackers as well as privacy-aware users and browser vendors. One novel tracking technique could be based on TLS session resumption, which allows abbreviating TLS handshakes by leveraging key material exchanged in an earlier TLS session.
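The tracking idea can be illustrated with a toy simulation. This sketch does not perform real TLS and is not the authors' implementation: `ToyServer`, `TICKET_LIFETIME`, and the abstract time units are hypothetical, and it assumes that prolongation works by the server handing out a fresh resumption ticket on every resumed visit, which is one plausible reading of the abstract.

```python
import secrets

TICKET_LIFETIME = 10  # hypothetical ticket lifetime, in abstract time units


class ToyServer:
    """Toy model of a server that links visits via resumption tickets (not real TLS)."""

    def __init__(self, reissue_on_resumption):
        self.reissue_on_resumption = reissue_on_resumption
        self.tickets = {}    # ticket -> (user_label, expiry_time)
        self.next_label = 0  # label assigned to the next unrecognized visitor

    def _issue(self, label, now):
        ticket = secrets.token_hex(8)
        self.tickets[ticket] = (label, now + TICKET_LIFETIME)
        return ticket

    def connect(self, now, ticket=None):
        """Return (user_label, ticket_to_present_on_the_next_visit)."""
        entry = self.tickets.get(ticket)
        if entry is not None and now <= entry[1]:
            label = entry[0]  # resumed session: the visitor is recognized
            if self.reissue_on_resumption:
                # Assumed prolongation step: a fresh ticket restarts the clock.
                return label, self._issue(label, now)
            return label, ticket
        # Full handshake: the visitor looks new to the server.
        label = self.next_label
        self.next_label += 1
        return label, self._issue(label, now)


def labels_seen(server, visit_times):
    """Labels the server assigns to one client visiting at the given times."""
    ticket = None
    labels = []
    for now in visit_times:
        label, ticket = server.connect(now, ticket)
        labels.append(label)
    return labels


if __name__ == "__main__":
    visits = [0, 8, 16, 24]
    print(labels_seen(ToyServer(reissue_on_resumption=False), visits))
    print(labels_seen(ToyServer(reissue_on_resumption=True), visits))
```

With visits every 8 time units and a lifetime of 10, the server without reissuing loses the client once the first ticket expires, while the reissuing server keeps the same label across all four visits.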

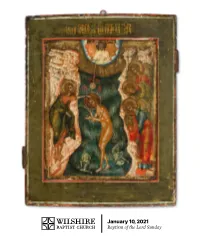

January 10, 2021 Baptism of the Lord Sunday

January 10, 2021. Baptism of the Lord Sunday. Preparing for worship.

If I had to guess, I would estimate that I have been baptized no less than 50 times in my life. Growing up as a pastor’s kid, playing baptism was one of the favorite pastimes in our household. My brother and I often baptized our stuffed animals, or if they were very unlucky, our live animals. Baby dolls and Barbies didn’t escape my young baptismal zealotry. Most swimming pools flip-flopped between staging grounds for Marco Polo and baptism services of cousins and neighborhood kids. Then, of course, there was the baptism where I stood in the baptistery of my beloved church as my father baptized me. There has been much light-hearted debate as to which one of these baptisms “took,” which one really counted.

something of me. Each baptism required that I get in the water. I had to remember to hold my breath; that one was sometimes tricky. I had to be ready for something, even if I wasn’t sure what that something was. Today, we recognize in worship the Baptism of the Lord Sunday. We recognize the step that Jesus took to get into the water, to come nearer to us. Some of you might remember your own baptism. Some of you might be wondering what baptism might be like for you one day. Wherever you are in your journey, baptism requires getting into the water and being ready for something. Today, as you prepare for worship, you might take a few deep cleansing breaths.

Concept Maps Mining for Text Summarization

UNIVERSIDADE FEDERAL DO ESPÍRITO SANTO, CENTRO TECNOLÓGICO, DEPARTAMENTO DE INFORMÁTICA, PROGRAMA DE PÓS-GRADUAÇÃO EM INFORMÁTICA. Camila Zacché de Aguiar. Concept Maps Mining for Text Summarization. VITÓRIA-ES, BRAZIL, March 2017. Master's dissertation presented to the Programa de Pós-Graduação em Informática of the Universidade Federal do Espírito Santo, in partial fulfillment of the requirements for the degree of Master in Informatics. Advisor: Davidson Cury. Co-advisor: Amal Zouaq. International Cataloging-in-Publication Data (CIP) (Biblioteca Setorial Tecnológica, Universidade Federal do Espírito Santo, ES, Brazil): Aguiar, Camila Zacché de, 1987-. A282c. Concept maps mining for text summarization / Camila Zacché de Aguiar. 2017. 149 leaves, illustrated. Dissertation (Master in Informatics), Universidade Federal do Espírito Santo, Centro Tecnológico. 1. Informatics in education. 2. Natural language processing (Computing). 3. Information retrieval. 4. Concept maps. 5. Text summarization. 6. Data mining (Computing). I. Cury, Davidson. II. Zouaq, Amal. III. Universidade Federal do Espírito Santo. Centro Tecnológico. IV. Title. CDU: 004. “Most of the fundamental ideas of science are essentially simple, and may, as a rule, be expressed in a language comprehensible to everyone.” Albert Einstein. Acknowledgments: I would like to thank the many people who have been with me over the years and who have contributed in one way or another to the completion of this master’s degree. Especially my advisor, Davidson Cury, who in a constructivist way disoriented me several times as a stimulus to the search for new answers.

Empowering Video Storytellers: Concept Discovery and Annotation for Large Audio-Video-Text Archives

Empowering Video Storytellers: Concept Discovery and Annotation for Large Audio-Video-Text Archives. A large amount of video content is available and stored by broadcasters, libraries and other enterprises. However, the lack of effective and efficient tools for searching and retrieving has prevented video becoming a major value asset. Much work has been done for retrieving video information from constrained structured domains, such as news and sports, but general solutions for unstructured audio-video-language intensive content are missing. Our goal is to transform the multimedia archive into an organized and searchable asset so that human efforts can focus on a more important aspect - storytelling. This project involves a cross-disciplinary effort towards development of new techniques for organizing, summarizing, and question answering over audio-video-language intensive content, such as raw documentary footage. Such domains offer the full range of real-world situations and events, manifested with interaction of rich multimedia information. The team includes investigators from Schools of Engineering and Applied Science, Journalism, and Arts. It has extensive experience and deep expertise in analysis of multimedia information - video, audio, and language - as well as broad experience with professional documentary film production and interactive media designs. The project includes two major content partners, WITNESS and WNET, both providing unique video archives, well-defined application domains and user communities. In particular, WITNESS collects and manages a documentary video archive from activists all over the world to support the promotion of human rights. This archive has several important qualities: it is diverse (including interviews, documentation of events, and ‘hidden camera’ reporting), it is relatively ‘raw’ (shot by semi-professional operators), it has been shot for specific purposes (e.g.

Technical Phrase Extraction for Patent Mining: a Multi-Level Approach

2020 IEEE International Conference on Data Mining (ICDM). Technical Phrase Extraction for Patent Mining: A Multi-level Approach. Ye Liu, Han Wu, Zhenya Huang, Hao Wang, Jianhui Ma, Qi Liu, Enhong Chen*, Hanqing Tao, Ke Rui. Anhui Province Key Laboratory of Big Data Analysis and Application, School of Data Science & School of Computer Science and Technology, University of Science and Technology of China. {liuyer, wuhanhan, wanghao3, hqtao, kerui}@mail.ustc.edu.cn, {huangzhy, jianhui, qiliuql, cheneh}@ustc.edu.cn

Abstract: Recent years have witnessed a booming increase of patent applications, which provides an open chance for revealing the inner law of innovation, but in the meantime, puts forward higher requirements on patent mining techniques. Considering that patent mining highly relies on patent document analysis, this paper makes a focused study on constructing a technology portrait for each patent, i.e., to recognize technical phrases concerned in it, which can summarize and represent patents from a technology angle. To this end, we first give a clear and detailed description about technical phrases in patents based on various prior works and analyses. Then, combining characteristics of technical phrases and multi-level structures of patent documents, we develop an Unsupervised Multi-level Technical Phrase Extraction (UMTPE) model.

TABLE I: Technical Phrases vs. Non-technical Phrases

| Domain | Technical phrase | Non-technical phrase |
|---|---|---|
| Electricity | wireless communication, netcentric computer service | wire and cable, TV signal, power plug |
| Mechanical Engineering | fluid leak detection, power transmission | building materials, steer column, seat back |

relevant works have been explored on phrase extraction. According to the extraction target, they can be divided into Key Phrase Extraction [8], Named Entity Recognition (NER) [9] and Concept Extraction [10]. Key Phrase Extraction aims to extract phrases that provide a concise summary of a document, which prefers those both frequently-occurring and closed to