Autonomic Programming Paradigm for High Performance Computing

Recommended publications

Personal Autonomic Computing Reflex Reactions and Self-Healing

304 IEEE TRANSACTIONS ON SYSTEMS, MAN, AND CYBERNETICS—PART C: APPLICATIONS AND REVIEWS, VOL. 36, NO. 3, MAY 2006

Personal Autonomic Computing Reflex Reactions and Self-Healing

Roy Sterritt, Member, IEEE, and David F. Bantz, Member, IEEE

Abstract: The overall goal of this research is to improve the self-awareness and environment-awareness aspect of personal autonomic computing (PAC) to facilitate self-managing capabilities such as self-healing. Personal computing offers unique challenges for self-management due to its multiequipment, multisituation, and multiuser nature. The aim is to develop a support architecture for multiplatform working, based on autonomic computing concepts and techniques. Of particular interest is collaboration among personal systems to take a shared responsibility for self-awareness and environment awareness. Concepts mirroring human mechanisms, such as reflex reactions and the use of vital signs to assess operational health, are used in designing and implementing the PAC architecture. As proof of concept, this was implemented as a self-healing tool utilizing a pulse monitor and a vital signs health moni-

Personal computing is an area that can benefit substantially from autonomic principles. Examples of current difficult experiences that can be overcome by such an approach include [2]: 1) trouble connecting to a wired or a wireless network at a conference, hotel, or other work location; 2) switching between home and work; 3) losing a working connection (and shouting across the office to see if anyone else has had the same problem!); 4) going into the IP settings area in Windows and being unsure about the correct values to use; 5) having a PC which stops booting and needs major repair or reinstallation of the operating system; 6) recovering from a hard-disk crash; and 7) migrating efficiently to a new PC.

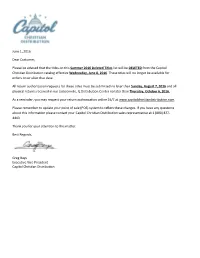

June 1, 2016 Dear Customer, Please Be Advised That the Titles On

June 1, 2016

Dear Customer,

Please be advised that the titles on this Summer 2016 Deleted Titles list will be DELETED from the Capitol Christian Distribution catalog effective Wednesday, June 8, 2016. These titles will no longer be available for orders on or after that date. All return authorization requests for these titles must be submitted no later than Sunday, August 7, 2016 and all physical returns received in our Jacksonville, IL Distribution Center no later than Thursday, October 6, 2016. As a reminder, you may request your return authorization online 24/7 at www.capitolchristiandistribution.com. Please remember to update your point of sale (POS) system to reflect these changes. If you have any questions about this information please contact your Capitol Christian Distribution sales representative at 1 (800) 877-4443. Thank you for your attention to this matter.

Best Regards,
Greg Bays
Executive Vice President
Capitol Christian Distribution

CAPITOL CHRISTIAN DISTRIBUTION SUMMER 2016 DELETED TITLES LIST

Return Authorization Due Date August 7, 2016 • Physical Returns Due Date October 6, 2016

RECORDED MUSIC

| ARTIST | TITLE | UPC | LABEL | CONFIG |
| --- | --- | --- | --- | --- |
| Amy Grant | Amy Grant | 094639678525 | Amy Grant Productions | CD |
| Amy Grant | My Father's Eyes | 094639678624 | Amy Grant Productions | CD |
| Amy Grant | Never Alone | 094639678723 | Amy Grant Productions | CD |
| Amy Grant | Straight Ahead | 094639679225 | Amy Grant Productions | CD |
| Amy Grant | Unguarded | 094639679324 | Amy Grant Productions | CD |
| Amy Grant | House Of Love | 094639679829 | Amy Grant Productions | CD |
| Amy Grant | Behind The Eyes | 094639680023 | Amy Grant Productions | CD |
| Amy Grant | A Christmas To Remember | 094639680122 | Amy Grant Productions | CD |
| Amy Grant | Simple Things | 094639735723 | Amy Grant Productions | CD |
| Amy Grant | Icon | 5099973589624 | Amy Grant Productions | CD |
| Seventh Day Slumber | Finally Awake | 094635270525 | BEC Recordings | CD |
| Manafest | Glory | 094637094129 | BEC Recordings | CD |
| KJ-52 | The Yearbook | 094637829523 | BEC Recordings | CD |
| Hawk Nelson | Hawk Nelson Is My Friend | 094639418527 | BEC Recordings | CD |
| The O.C. | | | | |

CWIC: Using Images to Passively Browse the Web

CWIC: Using Images to Passively Browse the Web

Quasedra Y. Brown, Computer Information Systems, Clark Atlanta University, Atlanta, GA 30314
D. Scott McCrickard, College of Computing and GVU Center, Georgia Institute of Technology, Atlanta, GA 30332
Advisor: John Stasko

Abstract

The World Wide Web has emerged as one of the most widely available and diverse information sources of all time, yet the options for accessing this information are limited. This paper introduces CWIC, the Continuous Web Image Collector, a system that automatically traverses selected Web sites collecting and analyzing images, then presents them to the user using one of a variety of available display mechanisms and layouts. The CWIC mechanisms were chosen because they present the images in a non-intrusive manner: the goal is to allow users to stay abreast of Web information while continuing with more important tasks. The various display layouts allow users to select one that interrupts them as little as possible while remaining aesthetically pleasing.

1. Introduction

The World Wide Web has emerged as one of the most widely available and diverse information sources of all time. The Web is essentially a multimedia database containing text, graphics, and more on an endless variety of topics. Yet the options for accessing this information are somewhat limited. Browsers are fine for surfing, and search engines and Web starting points can guide users to interesting sites, but these are all active tasks that demand the full attention of the user. This paper introduces CWIC, a passive-browsing tool that presents Web information in a non-intrusive and aesthetically pleasing manner.

Concept-Oriented Programming: References, Classes and Inheritance Revisited

Concept-Oriented Programming: References, Classes and Inheritance Revisited

Alexandr Savinov, Database Technology Group, Technische Universität Dresden, Germany
http://conceptoriented.org

Abstract

The main goal of concept-oriented programming (COP) is describing how objects are represented and accessed. It makes references (object locations) first-class elements of the program responsible for many important functions which are difficult to model via objects. COP rethinks and generalizes such primary notions of object-orientation as class and inheritance by introducing a novel construct, concept, and a new relation, inclusion. An advantage is that using only a few basic notions we are able to describe many general patterns of thoughts currently belonging to different programming paradigms: modeling object hierarchies (prototype-based programming), precedence of parent methods over child methods (inner methods in Beta), modularizing cross-cutting concerns (aspect-oriented programming), value-orientation (functional pro-

[...] representation and access is supposed to be provided by the translator. Any object is guaranteed to get some kind of primitive reference and a built-in access procedure without a possibility to change them. Thus there is a strong asymmetry between the role of objects and references in OOP: objects are intended to implement domain-specific structure and behavior while references have a primitive form and are not modeled by the programmer. Programming means describing objects but not references.

One reason for this asymmetry is that there is a very old and very strong belief that it is entity that should be in the focus of modeling (including programming) while identities simply serve entities. And even though object identity has been considered “a pillar of object orientation” [Ken91] their support has always been much weaker in comparison to that of entities.

February 2021 Official Publication of Alamitos Bay Yacht Club Volume 94 • Number 2 January Raft Up

February 2021 Official Publication of Alamitos Bay Yacht Club Volume 94 • Number 2

January Raft Up

Gads, how does one figure out a way to spend a Saturday evening when we’re not supposed to travel or get within six feet of anyone other than who you live with and when there are no bars or restaurants or the Club open? Simple, just email or text a few of your ABYC friends (little advance warning is needed) and suggest a sunset rafting. The Taughers, Heavrin/Reeds, Clantons, Bells and Ott-Conns had a perfectly delightful time one January evening...wish you all were there!

Dana Bell

Inside: Manager’s Corner; Commodore’s Comments; Vice Verses; Rear View; Fleet Captains Log; Rules Quiz #75; Membership Report; Juniors; Eight Bells; Hails From the Fleets.

Save the date: Friday Night Patio, Feb 5, 12, 19, 26; Super Bowl, February 7; Zoom Membership Meeting, February 19.

sou’wester • february 2021

Manager’s Corner

At a recent General Membership meeting, members asked about how the ABYC employees are doing and how they are getting along through this pandemic. The short answer is that our regular staff is doing just fine. But rather than have me write about it, I have asked them to express their thoughts to you in their own words.

Sheila Mattox - “I am doing well. I feel very lucky that I haven’t had any symptoms of the COVID-19 virus and haven’t felt the need to be tested.

Self-Adaptive Systems

Self-Adaptive Systems: A Systematic Literature Review Across Categories and Domains

Terence Wong, Markus Wagner, and Christoph Treude

Abstract: Championed by IBM’s vision of autonomic computing paper in 2003, the autonomic computing research field has seen increased research activity over the last 20 years. Several conferences (SEAMS, SASO, ICAC) and workshops (SISSY) have been established and have contributed to the autonomic computing knowledge base in search of a new kind of system: a self-adaptive system (SAS). These systems are characterized by being context-aware and can act on that awareness. The actions carried out could be on the system or on the context (or environment). The underlying goal of a SAS is the sustained achievement of its goals despite changes in its environment. Despite a number of literature reviews on specific aspects of SASs ranging from their requirements to quality attributes, we lack a systematic understanding of the current state of the art. This paper contributes a systematic literature review into self-adaptive systems using the dblp computer science bibliography as a database. We filtered the records systematically in successive steps to arrive at 293 relevant papers. Each paper was critically analyzed and categorized into an attribute matrix. This matrix consisted of five categories, with each category having multiple attributes. The attributes of each paper, along with the summary of its contents formed the basis of the literature review that spanned 30 years (1990-2020). We characterize the maturation process of the research area from theoretical papers over practical implementations to more holistic and generic approaches, frameworks, and exemplars, applied to areas such as networking, web services, and robotics, with much of the recent work focusing on IoT and IaaS.

Tolono Library CD List

Tolono Library CD List

| CD# | Title of CD | Artist | Category |
| --- | --- | --- | --- |
| 1 | MUCH AFRAID | JARS OF CLAY | CG CHRISTIAN/GOSPEL |
| 2 | FRESH HORSES | GARTH BROOKS | CO COUNTRY |
| 3 | MI REFLEJO | CHRISTINA AGUILERA | PO POP |
| 4 | CONGRATULATIONS I'M SORRY | GIN BLOSSOMS | RO ROCK |
| 5 | PRIMARY COLORS | SOUNDTRACK | SO SOUNDTRACK |
| 6 | CHILDREN'S FAVORITES 3 | DISNEY RECORDS | CH CHILDREN |
| 7 | AUTOMATIC FOR THE PEOPLE | R.E.M. | AL ALTERNATIVE |
| 8 | LIVE AT THE ACROPOLIS | YANNI | IN INSTRUMENTAL |
| 9 | ROOTS AND WINGS | JAMES BONAMY | CO |
| 10 | NOTORIOUS | CONFEDERATE RAILROAD | CO |
| 11 | IV | DIAMOND RIO | CO |
| 12 | ALONE IN HIS PRESENCE | CECE WINANS | CG |
| 13 | BROWN SUGAR | D'ANGELO | RA RAP |
| 14 | WILD ANGELS | MARTINA MCBRIDE | CO |
| 15 | CMT PRESENTS MOST WANTED VOLUME 1 | VARIOUS | CO |
| 16 | LOUIS ARMSTRONG | LOUIS ARMSTRONG | JB JAZZ/BIG BAND |
| 17 | LOUIS ARMSTRONG & HIS HOT 5 & HOT 7 | LOUIS ARMSTRONG | JB |
| 18 | MARTINA | MARTINA MCBRIDE | CO |
| 19 | FREE AT LAST | DC TALK | CG |
| 20 | PLACIDO DOMINGO | PLACIDO DOMINGO | CL CLASSICAL |
| 21 | 1979 | SMASHING PUMPKINS | RO ROCK |
| 22 | STEADY ON | POINT OF GRACE | CG |
| 23 | NEON BALLROOM | SILVERCHAIR | RO |
| 24 | LOVE LESSONS | TRACY BYRD | CO |
| 26 | YOU GOTTA LOVE THAT | NEAL MCCOY | CO |
| 27 | SHELTER | GARY CHAPMAN | CG |
| 28 | HAVE YOU FORGOTTEN | WORLEY, DARRYL | CO |
| 29 | A THOUSAND MEMORIES | RHETT AKINS | CO |
| 30 | HUNTER | JENNIFER WARNES | PO |
| 31 | UPFRONT | DAVID SANBORN | IN |
| 32 | TWO ROOMS | ELTON JOHN & BERNIE TAUPIN | RO |
| 33 | SEAL | SEAL | PO |
| 34 | FULL MOON FEVER | TOM PETTY | RO |
| 35 | JARS OF CLAY | JARS OF CLAY | CG |
| 36 | FAIRWEATHER JOHNSON | HOOTIE AND THE BLOWFISH | RO |
| 37 | A DAY IN THE LIFE | ERIC BENET | PO |
| 38 | IN THE MOOD FOR X-MAS | MULTIPLE MUSICIANS | HO HOLIDAY |
| 39 | GRUMPIER OLD MEN | SOUNDTRACK | SO |
| 40 | TO THE FAITHFUL DEPARTED | CRANBERRIES | PO |
| 41 | OLIVER AND COMPANY | SOUNDTRACK | SO |
| 42 | DOWN ON THE UPSIDE | SOUND GARDEN | RO |
| 43 | SONGS FOR THE ARISTOCATS | DISNEY RECORDS | CH |
| 44 | WHATCHA LOOKIN 4 | KIRK FRANKLIN & THE FAMILY | CG |
| 45 | PURE ATTRACTION | KATHY TROCCOLI | CG |
| 46 | | | |
| 47 | BOBBY | BOBBY BROWN | RO |
| 48 | UNFORGETTABLE | NATALIE COLE | PO |
| 49 | HOMEBASE | D.J. | |

Technical Manual Version 8

HANDLE.NET (Ver. 8) Technical Manual

HANDLE.NET (version 8) Technical Manual Version 8
Corporation for National Research Initiatives
June 2015
hdl:4263537/5043

HANDLE.NET 8 software is subject to the terms of the Handle System Public License (version 2). Please read the license: http://hdl.handle.net/4263537/5030. A Handle System Service Agreement is required in order to provide identifier/resolution services using the Handle System technology. Please read the Service Agreement: http://hdl.handle.net/4263537/5029. © Corporation for National Research Initiatives, 2015, All Rights Reserved.

Credits

CNRI wishes to thank the prototyping team of the Los Alamos National Laboratory Research Library for their collaboration in the deployment and testing of a very large Handle System implementation, leading to new designs for high performance handle administration, and handle users at the Max Planck Institute for Psycholinguistics and Lund University Libraries NetLab for their instructions for using PostgreSQL as custom handle storage.

Version Note

Handle System Version 8, released in June 2015, constituted a major upgrade to the Handle System. Major improvements include a RESTful JSON-based HTTP API, a browser-based admin client, an extension framework allowing Java Servlet apps, authentication using handle identities without specific indexes, multi-primary replication, security improvements, and a variety of tool and configuration improvements. See the Version 8 Release Notes for details. Please send questions or comments to the Handle System Administrator.

Table of Contents

Credits
Version Note
1 Introduction
1.1 Handle Syntax
1.2 Architecture
1.2.1 Scalability
1.2.2 Storage
1.2.3 Performance
1.3 Authentication
1.3.1 Types of Authentication
1.3.2 Certification
1.3.3 Sessions
1.3.4 Algorithms TODO

Introduction to Autonomic Concepts Applied to Future Self-Managed Networks

Chapter 1: Introduction to Autonomic Concepts Applied to Future Self-Managed Networks

Nazim Agoulmine

DEFINITION AND SCOPE

The networking field has seen tremendous changes during the last decade, arriving in several waves. The first wave was the Internet, which completely changed the way network services are provided and enabled the worldwide use of networked applications. The second wave came with the cellular and wireless technologies that have allowed the provisioning of telephony and data services anyplace, anytime. Cellular technologies have also drastically changed our behavior, allowing any person to make phone calls from anywhere. They have also helped poor countries to develop efficient and cost-effective telephony infrastructure quickly, which was not possible with wired technologies. The third wave has surprisingly come from the POTS (Plain Old Telephony System) last mile access. Indeed, development of DSL (Digital Subscriber Line) technologies has enabled operators to provide high-speed IP access through a telephone line without having to pay for optical fiber installation and by taking advantage of existing telephone lines. This has allowed the emergence of the so-called Triple Play Services to the home (IP data access, Telephony over IP, and TV over IP). The fourth wave is probably under way with the convergence of the Triple Play services plus Mobile (sometimes called Quadruple Play), along with All-in-One emerging services such as P2P, social networking, and presence.

In this ideal picture of technology development, there are many behind-the-scenes issues. Indeed, slowly but steadily, the users’ focus has changed from the high-speed network to value-added services.

Pointers Getting a Handle on Data

Pointers: Getting a Handle on Data

Content provided in partnership with Prentice Hall PTR, from the book C++ Without Fear: A Beginner’s Guide That Makes You Feel Smart, 1/e by Brian Overland

Perhaps more than anything else, the C-based languages (of which C++ is a proud member) are characterized by the use of pointers: variables that store memory addresses. The subject sometimes gets a reputation for being difficult for beginners. It seems to some people that pointers are part of a plot by experienced programmers to wreak vengeance on the rest of us (because they didn’t get chosen for basketball, invited to the prom, or whatever). But a pointer is just another way of referring to data. There’s nothing mysterious about pointers; you will always succeed in using them if you follow simple, specific steps. Of course, you may find yourself baffled (as I once did) that you need to go through these steps at all. Why not just use simple variable names in all cases? True enough, the use of simple variables to refer to data is often sufficient. But sometimes, programs have special needs. What I hope to show in this chapter is why the extra steps involved in pointer use are often justified.

The Concept of Pointer

The simplest programs consist of one function (main) that doesn’t interact with the network or operating system. With such programs, you can probably go the rest of your life without pointers. But when you write programs with multiple functions, you may need one function to hand off a data address to another.

KTH Introduction to OpenCL

Introduction to OpenCL

David Black-Schaffer

Disclaimer

I worked for Apple developing OpenCL. I’m biased. Please point out my biases. They help me get a better perspective and may reveal something.

What is OpenCL?

Low-level language for high-performance heterogeneous data-parallel computation. Access to all compute devices in your system: CPUs, GPUs, accelerators (e.g., CELL… unless IBM cancels Cell). Based on C99. Portable across devices. Vector intrinsics and math libraries. Guaranteed precision for operations. Open standard.

Low-level -- doesn’t try to do everything for you, but…

High-performance -- you can control all the details to get the maximum performance. This is essential to be successful as a performance-oriented standard. (Things like Java have succeeded here as standards for reasons other than performance.)

Heterogeneous -- runs across all your devices; same code runs on any device.

Data-parallel -- this is the only model that supports good performance today. OpenCL has task-parallelism, but it is largely an after-thought and will not get you good performance on today’s hardware.

Vector intrinsics will map to the correct instructions automatically. This means you don’t have to write SSE code anymore and you’ll still get good performance on scalar devices.

The precision is important as historically GPUs have not cared about accuracy as long as the images looked “good”. These requirements are forcing them to take accuracy seriously.

Open Standard?

Huge industry support. Driving hardware requirements.

This is a big deal. Note that the big three hardware companies are here (Intel, AMD, and Nvidia), but that there are also a lot of embedded companies (Nokia, Ericsson, ARM, TI).

A Concise Introduction to Autonomic Computing

Advanced Engineering Informatics 19 (2005) 181–187
www.elsevier.com/locate/aei

A concise introduction to autonomic computing

Roy Sterritt (School of Computing and Mathematics, Faculty of Engineering, University of Ulster at Jordanstown, Shore Road, Newtownabbey, County Antrim BT37 0QB, Northern Ireland, UK)
Manish Parashar (The Applied Software Systems Laboratory (TASSL), Electrical and Computer Engineering, Rutgers, The State University of New Jersey, 94 Brett Road, Piscataway, NJ 08854-8058, USA)
Huaglory Tianfield (School of Computing and Mathematical Sciences, The SRIF/SHEFC Centre for Virtual Organization Technology Enabling Research (VOTER), Glasgow Caledonian University, 70 Cowcaddens Road, Glasgow G4 0BA, UK)
Rainer Unland (Institute for Computer Science and Business Information Systems (ICB), University of Duisburg-Essen, Schützenbahn 70, 45117 Essen, Germany)

1. Introduction

The advances in computing and communication technologies and software have resulted in an explosive growth in computing systems and applications that impact all aspects of our life. However, as the scale and complexity of these systems and applications grow, their development, configuration and management challenges are beginning to break current paradigms, overwhelm the capabilities of existing tools and methodologies, and rapidly render the systems and applications brittle, unmanageable and insecure.

[...] specifically, an autonomic system is a self-managing, autonomous and ubiquitous computing environment that completely hides its complexity, thus providing the user with an interface that exactly meets her/his needs. The system will always decide on its own, using high-level guidance from humans, what needs to be done to keep it stable. It will constantly check and optimize its status, and automatically adapt itself to changing conditions. Self-management is achieved through key aspects such as self-governing, self-adaptation, self-organization, self-optimization, self-configuration, self-diagnosis of faults, [...]