A Vehicle Human-Machine Interface Implementation Based on Google Android Automotive OS

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Linux Automotive Security “Safer and More Secure”

Linux Automotive Security: “Safer and more secure”

Authors: Fulup Ar Foll, José Bollo

Abstract: Cars are expensive pieces of equipment, yet they represent a huge mass market. Adding Internet connectivity to these elements creates perfect conditions for the growth of a viable business model based on attacking “Connected Cars”. It is already well understood that cars will be connected and that connected cars will be attacked. While it is still too early to predict with certainty how “Connected Cars” will be impacted by security flaws, the assumption that a car should be at least as secure as a TV, a set-top box or a smartphone should make sense to everyone. This white paper focuses on Linux best-practice security mechanisms that could be used today and within the next couple of years to make connected cars safer and more secure.

Version 1.0, January 2016

Table of contents
1. Introduction
2. Make Sure You Run the Right Code
2.1. Before Booting
2.2. When Booting
2.3. After Booting
3. Keeping Secrets Secret

EB GUIDE: and Engineering Services All-In-One Automotive HMI UI and Application Development Services Development Toolchain, Voice Assistants (E.G

How can software suppliers create future car user experiences? Elektrobit, the visionary supplier of embedded software products and services, is your answer.

Deliver stunning, next-generation automotive user interfaces

Disruption is affecting every industry. In the automotive industry, this is most visible in the transformation of the classic vehicle into a software-driven Internet of Things (IoT) device. Personalization, connectivity, and mobility-as-a-service are becoming increasingly important for drivers and passengers.

In order to stand out in a marketplace that is growing more competitive by the day, automotive manufacturers are seizing the opportunity to showcase their distinct advantages in providing their drivers and passengers user interfaces that are sleek, intuitive, and above all, seamlessly integrated.

Elektrobit has created a big move from simple driver information centers, to in-vehicle infotainment systems, to connected cockpits that integrate with multiple technologies simultaneously.

At EB, we believe that an optimal interaction between human and machine is characterized by three aspects:
• EXCELLENT USABILITY, saving you operator time
• HIGH EFFICIENCY, saving both time and money
• MAXIMUM PRODUCTIVITY, ultimately giving your company a competitive advantage

The secret to our success is simple. We're passionate about promoting the interactions between humans and machines through exceptionally designed software tools and solutions and our skilled engineering teams.

Our mission is to deliver HMIs optimized for your target platform: HMIs that meet your expectations for high quality, that perfectly integrate multimodal input and output devices, and that can be easily maintained over multiple variants and even multiple life cycles of your product.

Vehicle Embedded Data Stream Processing Platform for Android Devices

(IJACSA) International Journal of Advanced Computer Science and Applications, Vol. 6, No. 2, 2015

Vehicle Embedded Data Stream Processing Platform for Android Devices

Shingo Akiyama∗, Yukikazu Nakamoto∗, Akihiro Yamaguchi†, Kenya Sato‡, Hiroaki Takada§
∗Graduate School of Applied Informatics, University of Hyogo, Japan
†Center for Embedded Computing System, Nagoya University, Japan
‡Mobility Research Center, Doshisha University, Japan
§Graduate School of Information Science, Nagoya University, Japan

Abstract: Automotive information services utilizing vehicle data are rapidly expanding. However, there is currently no data-centric software architecture that takes into account the scale and complexity of data involving numerous sensors. To address this issue, the authors have developed an in-vehicle data-stream management system for automotive embedded systems (eDSMS) as a data-centric software architecture. Providing the data stream functionalities to drivers and passengers is highly beneficial. This paper describes a vehicle embedded data stream processing platform for Android devices. The platform enables flexible query processing with a dataflow query language and extensible operator functions in the query language on the [...]

[...] and make decisions on the basis of this data to control the vehicle on behalf of the driver. Jones used information obtained from multiple on-board sensors to perform evasive steering and, when collision is unavoidable, to activate brake intervention to dampen the impact, thus decreasing damage [5]. Such intelligent control systems acquire data from many sensors, such as cameras and millimeter-wave radar. Google and Urban Challenge, which is a competition funded by the Defense Advanced Research Projects Agency, revealed that self-driving in urban areas is both feasible and safe in terms of autonomous driving [6], [7].
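The excerpt above mentions dataflow queries built from operator functions over sensor streams. As a rough illustration only, the idea can be sketched in Python with generators. This is not the eDSMS query language or its API: the names `Reading`, `select`, `sliding_mean`, `run_query` and the sample data are all hypothetical.

```python
from collections import deque
from typing import Callable, Iterable, Iterator, List, NamedTuple


class Reading(NamedTuple):
    """One tuple in the stream: a timestamped sample from a named sensor."""
    t_ms: int
    sensor: str
    value: float


def select(stream: Iterable[Reading],
           pred: Callable[[Reading], bool]) -> Iterator[Reading]:
    """Selection operator: pass through only tuples that satisfy pred."""
    return (r for r in stream if pred(r))


def sliding_mean(stream: Iterable[Reading], size: int) -> Iterator[Reading]:
    """Window operator: for each input tuple, emit the mean of the
    most recent `size` values seen so far."""
    window = deque(maxlen=size)
    for r in stream:
        window.append(r.value)
        yield Reading(r.t_ms, r.sensor + "_mean", sum(window) / len(window))


def run_query(stream: Iterable[Reading]) -> List[Reading]:
    """Hypothetical query: smooth the speed signal with a 2-sample window."""
    speed_only = select(stream, lambda r: r.sensor == "speed")
    return list(sliding_mean(speed_only, size=2))


# Made-up sample data mixing two sensors.
SAMPLES = [
    Reading(0, "speed", 10.0),
    Reading(10, "rpm", 1500.0),
    Reading(20, "speed", 20.0),
    Reading(40, "speed", 40.0),
]

if __name__ == "__main__":
    for out in run_query(SAMPLES):
        print(out)
```

Operators are chained lazily, so each reading flows through the whole pipeline before the next one is pulled, which is the usual shape of a dataflow query over an unbounded stream.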

<Document Title>

OSS Disclosure Document, CM_CI1, Date: 11th Feb 2021

OSS Licenses used in RN_AIVI Project

This document is provided as part of the fulfillment of OSS license conditions and does not require users to take any action before or while using the product.

© This is the exclusive property of ROBERT BOSCH ENGINEERING AND BUSINESS SOLUTIONS PRIVATE LIMITED. Without their consent, it may not be reproduced or given to third parties.

Table of Contents
1 List of used Open Source Components
2 Appendix - License Text
2.1 BSD-4-Clause (University of California-Specific)
2.2 Academic Free License v. 2.1
2.3 Academic Free License v1.1
2.4 Apache License 1.1
2.5 Apache License 2.0
2.6 Apple Public Source License 1.1
2.7 [...]

Strong Momentum Continues by Egil Juliussen The

GENIVI: Strong Momentum Continues
By Egil Juliussen

The GENIVI Alliance had its membership meeting in Shanghai in mid-October. IHS Automotive was invited as part of their analyst track and was asked to give perspectives on HMI and apps for auto infotainment. This is the fourth time we have attended and given a presentation at the GENIVI Alliance meeting. We have previously been asked to do presentations on Software Security and HTML5 Infotainment Impact. Does this mean that GENIVI is now focusing on HMI technologies and/or an HMI framework? This impression will update a previous version that was done in November 2011 and will focus on the following topics:
• What is new with GENIVI?
• How does GENIVI compete with other OS platforms?
• What is the outlook for GENIVI success?

What is new with GENIVI?

The GENIVI Alliance is a non-profit organization that is developing a software platform for in-vehicle infotainment (IVI) systems that uses an Open Source Software (OSS) business model. The software platform is based on the Linux operating system (Version 2.6 Kernel) and additional middleware needed for running automotive infotainment applications in head-units, telematics and navigation systems. The GENIVI platform can be based on any Linux distribution, but a major goal of GENIVI is to avoid fragmentation of Linux in automotive systems, hence the compliance program. To assure a high level of compatibility between Linux versions, GENIVI released the first edition of its compliance testing program in August 2011 after 14 months of development. The second version of the compliance testing program was announced this spring and the third compliance version was announced in Shanghai this October.

Press Release

Press Release. For immediate release.

GENIVI Alliance introduces compliance program for members
First five GENIVI-compliant solutions recognized

SAN RAMON, California, USA - August 2, 2011 - The GENIVI Alliance, an automotive and consumer electronics industry association committed to the development and adoption of an open reference platform for in-vehicle infotainment (IVI), today introduced its newly developed compliance program for its member companies. The program is the result of 14 months of meticulous research and investigation by GENIVI "expert groups", with broad participation from all members, to establish a range of detailed technical requirements. GENIVI members Canonical, Mentor Graphics, MontaVista and Wind River, as well as the Linux Foundation, are the first with offerings recognized as "GENIVI compliant", which qualifies them for OEM requests for proposal that call for GENIVI-compliant products.

"This program embodies the natural progression within the GENIVI Alliance from targeted requirements, through identified components that meet those requirements, to a compact, reusable platform," said Steve Crumb, Executive Director of the GENIVI Alliance. "The program clarifies what is expected of members who want to offer compliant software solutions."

"Jaguar Land Rover and other automotive OEMs and GENIVI members are now [...] GENIVI compliance in their requests for proposal [...]

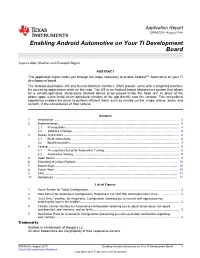

Enabling Android Automotive on Your TI Development Board

Application Report SPRACO0–August 2019

Enabling Android Automotive on Your TI Development Board
Joshua Allen Shafran and Praneeth Bajjuri

ABSTRACT

This application report walks you through the steps necessary to enable Android™ Automotive on your TI development board. The Android Automotive OS and Human-Machine Interface (HMI) provide users with a simplified interface for accessing applications while on the road. The OS is an Android-based infotainment system that allows for a vehicle-optimized, stand-alone Android device to be placed inside the head unit. In place of the phone apps, users install driver-optimized versions of the app directly onto the console. This streamlined experience enables the driver to perform efficient tasks, such as climate control, maps, phone, audio, and so forth, in the convenience of their vehicle.

Contents
1 Introduction
2 Implementation
2.1 Prerequisites
2.2 Software Changes
3 Deploy Instructions
3.1 Build Instructions

Hypervisors in the Vehicle: a Standards-Based Approach to Virtualization

Hypervisors in the vehicle: A standards-based approach to virtualization

A report based on the webinar by Automotive World and GENIVI Alliance.

The webinar, which was broadcast in February of [...], is still available for download, see [...]. It however had some poor audio quality in some parts. A better recording is now available at [...] but some may still prefer to get this information in written form. The document is not an exact transcript of the webinar, but it is close. The content and message is the same, and the information is presented here together with the corresponding presentation slides from the webinar, with the following adjustments:
• Common spoken expressions have been exchanged for more typical written language to reach more typical written quality.
• Minor clarifications and some lost details have been added.
• A few chapters are extended and elaborate on details that did not fit within the time of the webinar.
• For the Q&A session, more thoughtful and complete answers to given questions can be given, now that there is time to formulate the answers.

Also, the section presented by Tero Salminen from OpenSynergy has a good quality audio in the recording, and is not included in text form in this document. The first part of the presentation was done by Gunnar Andersson (GENIVI Technical Lead), who is also the author of this text.

We promised to address a question in this webinar: "Is there a need for a more standards-based approach for deploying hypervisors in the vehicle?"
• to ensure that automotive requirements are met,
• to reduce risk and concerns among adopters of virtualization,
• to promote portability,
• and to minimize system integration effort.

Vehicle Data Models – Overview & Gap Analysis

DECEMBER 2019
Category: Cloud & Connected Services

Vehicle Data Models – Overview & Gap Analysis

Introduction

With the proliferation of connected cars making them the predominant form of automotive transportation in a few years, a number of parties are working at breaking down the barriers to adoption for mobility services based on automotive data. At the core of such initiatives lie several vehicle data models. Models describing data produced by vehicles are highly heterogeneous and non-interoperable to the extent that many OEMs use proprietary specifications to define thousands of vehicle signals, different unit systems, modelling patterns and formats. The competition between proprietary solutions and overlapping collaborative efforts results in a fragmented ecosystem of vehicle data models.

This fragmented ecosystem is a major motivation for the work of the GENIVI Cloud and Connected Services (CCS) Project. The main goal of this project is to join forces and harmonize activities when designing and implementing the full data-oriented connected vehicle architecture (in-vehicle and back-end). In our charter, we present a list of benefits that could be enabled by this project:
• Enable easy interoperability of building blocks, flexibility and choice
• Develop common solutions and software
• Enable access to all data we want to exchange
• Control access to data
• Enable user privacy and data security
• Clarify responsibilities
• Agree on terminology for improving the shared work around data: names, roles, responsibilities
• Facilitate business opportunities and contractual agreements

Aligning the data model(s) is crucial for our industry and required to take the next planned step for this project, which is to propose a reference architecture for the vehicle data oriented environment.
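To make the interoperability problem concrete, the sketch below maps two proprietary signal definitions with different names and unit systems onto one shared model. It is a minimal Python illustration under stated assumptions: the OEM signal names, the mapping tables and the common name `Vehicle.Speed` are all hypothetical and are not taken from the GENIVI CCS project or any real OEM specification.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Signal:
    """A signal expressed in the shared (hypothetical) data model."""
    name: str
    value: float
    unit: str


# Hypothetical per-OEM tables: proprietary name -> (common name, source unit).
OEM_A: Dict[str, Tuple[str, str]] = {"VehSpd_mph": ("Vehicle.Speed", "mph")}
OEM_B: Dict[str, Tuple[str, str]] = {"speed_kmh": ("Vehicle.Speed", "km/h")}

# Conversion factors into the unit chosen for the common model (km/h).
TO_KMH: Dict[str, float] = {"km/h": 1.0, "mph": 1.609344}


def normalize(raw_name: str, raw_value: float,
              mapping: Dict[str, Tuple[str, str]]) -> Signal:
    """Translate one proprietary sample into the common model."""
    name, unit = mapping[raw_name]
    return Signal(name, raw_value * TO_KMH[unit], "km/h")


if __name__ == "__main__":
    print(normalize("VehSpd_mph", 60.0, OEM_A))
    print(normalize("speed_kmh", 100.0, OEM_B))
```

Once both sources resolve to the same name and unit, a consumer can compare or aggregate them without knowing which OEM produced the sample. Without an agreed model, every consumer has to maintain a table like these for every data source.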

NativeX: Native Executioner Freezes Android

NativeX: Native Executioner Freezes Android

Qinsheng Hou, QI-ANXIN Technology Research Institute; Legendsec Information Technology (Beijing) Inc., Beijing, China
Yao Cheng, Huawei International, Singapore, Singapore
Lingyun Ying∗, QI-ANXIN Technology Research Institute; University of Chinese Academy of Sciences, Beijing, China

ABSTRACT

Android is a Linux-based multi-thread open-source operating system that dominates 85% of the worldwide smartphone market share. Though Android has its established management for its framework layer processes, we discovered for the first time that the weak management of native processes is posing tangible threats to Android systems from version 4.2 to 9.0. As a consequence, any third-party application without any permission can freeze the system or force the system to go through a reboot by starving or significantly delaying the critical system services using Android commands in its native processes. We design NativeX to systematically analyze the Android source code to identify the risky Android commands.

[...] Taipei, Taiwan. ACM, New York, NY, USA, 13 pages. https://doi.org/10.1145/3320269.3384713

1 INTRODUCTION

Android is a Linux-based multi-thread open-source operating system for mobile devices. It not only has gained tremendous popularity among mobile users in recent years, but also has been used in IoT devices and various mission-critical tasks, such as point-of-sale devices [11], medical devices [30], on-vehicle systems [5][6], and even aircraft and satellite devices [1][10]. One of the reasons that Android has been widely adopted is that Android provides compatible development support for programming in both [...]

Android™ Release Notes (ARN): a Document That Introduces Key Updates and Known Issues in This Release

NXP Semiconductors
Document identifier: ARN
Release Notes, Rev. automotive-10.0.0_1.1.0, 24 March 2020

Android™ Release Notes

Contents
1 Release Description
2 Supported Hardware SoC/Boards
3 Release Package Contents
4 Features
5 Multimedia Codecs
6 Change Log
7 Known Issues and Limitations
8 Revision History

1 Release Description

The i.MX Android™ automotive-10.0.0_1.1.0 release is an Android Automotive GA (RFP) release on NXP's i.MX 8QuadXPlus/8QuadMax MEK board and platform, which is based on Android 10. It supports the device type In-vehicle infotainment defined in https://source.android.com/devices/automotive/.

i.MX Android automotive-10.0.0_1.1.0 release includes all necessary code, documents, and tools to assist users in building and running Android Automotive on the i.MX 8QuadXPlus/8QuadMax MEK board from scratch. Pre-built images are also included for a quick trial on the following platforms:
• i.MX 8QuadXPlus/8QuadMax MEK Board and Platform

This release includes all porting and enhancements based on the Android open source code. Most of the deliveries in this release are provided in source code with the exception of some proprietary modules/libraries from third parties.

2 Supported Hardware SoC/Boards

The supported hardware system-on-chip (SoCs)/boards are listed as follows:
• i.MX 8QuadXPlus/8QuadMax (Silicon Revision B0) MEK Board and Platform

3 Release Package Contents

The automotive-10.0.0_1.1.0 release package includes the following software and documents.

Table 1. Release package contents
i.MX Android proprietary source code package:
• imx-automotive-10.0.0_1.1.0.tar.gz: i.MX Android Automotive proprietary source code package to enable Android Automotive on i.MX boards.

Android Automotive Audio

Android Automotive Audio: Introduction
Bartosz Bialek, Senior Software Engineer, Tieto, PDS BU Automotive & Smart Devices
Public, © Tieto Corporation

Table of contents
• Android Automotive Architecture
• Audio Framework
• Android sounds and streams
• Multi-Zone Audio
• Audio Signal Arbitration
• Audio HAL interfaces

Audio Framework: Architecture overview

Android Automotive Architecture
[Diagram, layers from top to bottom: Applications; Android Framework API and Car API; Android System Services and Car Services; HAL and Automotive HAL; Linux Kernel]

Audio Framework
[Diagram, components from top to bottom: Application; Car Audio Manager, Audio Effect, Audio Track, Audio Manager; Car Audio Service, Audio Service; Audio Track, Audio Effect, Audio System; Audio Policy Manager, Effects Factory, Audio Flinger; Audio Control HAL, Audio HAL; TinyALSA; ASoC / Drivers]

Android sounds and streams (1/2)
[Diagram. Audio sources: Music Player, Voice Assistant, Navigation, Calendar. Audio Flinger: Mixer 0, Mixer 1, Mixer 2. Audio contexts: MUSIC, NAVIGATION, VOICE_COMMAND, CALL_RING, CALL, ALARM, NOTIFICATION, SYSTEM_SOUND. Audio devices: Bus 0, Bus 1, Bus 2. Audio HAL. Chime Unit, External Mixer, Tuner, Car Amplifier(s)]

Android sounds and streams (2/2)
Android 10: New player HwAudioSource
[Diagram: Radio, Navigation; Tuner; Audio Flinger: Mixer 0, Mixer 1; Bus 0, Bus 1; Audio HAL; External Mixer; Car Amplifier(s)]

Multi-Zone Audio (Android 10)
[Diagram: Primary zone; Media Player; Media playback]
• Application can be played in zone
• Zone contains audio devices