Split and Rephrase: Better Evaluation and Stronger Baselines

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Thijs Slegers Zijn Visie Over De Huidige Sportmedia

VOETBAL & MEDIA IN NEDERLAND Thijs Slegers Zijn visie over de huidige sportmedia PSV - Willem II Door de ogen van de pers CP-talentendag Iedereen een kans in het Nederlands elftal Ben jij er volgend jaar wel weer bij? Mis niets van het volgende seizoen en zorg dat je een plek in het stadion hebt. De seizoenskaarten voor seizoen 2017/2018 kunnen besteld worden op de website van de clubs in de Eredivisie. Ik stel voor dat ze tv-kijken snel olympisch maken COLUMN THIJS ZONNEVELD, OLYMPISCHE SPELEN 2016 Van handbal. Naar volleybal. Naar openwaterzwemmen. Naar atletiek. Naar baanwielrennen. Naar paardrijden. Via badminton en pingpong en de voorbeschouwing van het turnen weer naar handbal. Naar volleybal. Naar openwaterzwemmen. Naar atletiek. Naar baanwielrennen. Naar paardrijden. Via badminton en pingpong weer naar handbal. Ik zat voor de tv en zapte mezelf een duimverrekking en een muisarm. Tijd om na te denken was er niet. Tijd om even te gaan pissen was er al helemaal niet: één moment niet opletten en je miste een goal, een punt, een beslissende demarrage of een zwemmer die over een koelkast moest klimmen in de baai van Rio. Het was teveel. Het was nondeju veel te veel. Het is élke dag teveel. In de eerste week trok ik het nog net, maar sinds Yuri van Gelder naar huis is en al die Nederlandse sporters aan hun nachtrust toekomen is het hek van de dam. Ze winnen alleen nog maar goud; het is alsof de andere kleuren er niet meer toe doen. En tijd om ervan te genieten is er niet: in een paar dagen “Sinds Yuri van jagen we er voor járen waard aan sportsuccessen doorheen. -

Ajaxjaar Cover Ajaxjaar Cover 08-11-12 18:47 Pagina 1

Ajaxjaar cover_Ajaxjaar cover 08-11-12 18:47 Pagina 1 Voorzitter Hennie Henrichs: ‘Dit verenigingsjaar was vergelijkbaar met een rit in de achtbaan...’ JAARVERSLAG VAN DE VERENIGING AFC AJAX, SPORTPARK DE TOEKOMST Borchlandweg 16-18, NL 1099 CT Amsterdam Zuidoost Tel.: +31 20 677 74 00 Fax: +31 20 311 91 12 E-mail: [email protected] www.ajax.nl SEIZOEN 2011-2012 1 Ajaxjaar deel1_Ajax 08-11-12 21:25 Pagina 1 Ajax Jaarverslag van de vereniging 1 juli 2011 - 30 juni 2012 Ajaxjaar deel1_Ajax 08-11-12 21:26 Pagina 2 Colofon Samenstelling: Tijn Middendorp - eindredacteur Ed Lefeber Met bijdragen van: Rob Been Jr. Ger Boer Hans Bijvank Jan Buskermolen Theo van Duivenbode Yuri van Eijden Hennie Henrichs Ronald Jonges Thijs Lindeman Marjon van Nielen Rob Stol René Zegerius Correctie: Henk Buhre, Arjan Duijnker Fotografie: Louis van de Vuurst en Gerard van Hees, tenzij anders vermeld Foto voorzijde omslag: Gerard van Hees Vormgeving: Vriedesign, John de Vries Druk: Stadsdrukkerij Amsterdam Adres AFC Ajax, Sportpark de Toekomst Borchlandweg 16-18 1099 CT Amsterdam Zuidoost Telefoon: +31 20 677 74 00 Fax: +31 20 311 91 12 AFC Ajax NV Arena Boulevard 29 1101 AX Amsterdam Zuidoost Postbus 12522 1100 AM Amsterdam Zuidoost Telefoon: +31 900 232 25 29 Fax: +31 20 311 14 80 E-mail: [email protected] Kick off Ajax Life Ajax kids clubblad www.ajax.nl @AFCAjax facebook.com/AFCAjax ajax.hyves.nl youtube.com/ajax 2 Ajaxjaar deel1_Ajax 08-11-12 21:26 Pagina 3 Inhoud Deel 1 5 Voorwoord 6 Clubleven 10 Erelijst 11 Betaald voetbal Start Ongeloof Historische slotfase 14 ‘Je -

Clubs Without Resilience Summary of the NBA Open Letter for Professional Football Organisations

Clubs without resilience Summary of the NBA open letter for professional football organisations May 2019 Royal Netherlands Institute of Chartered Accountants The NBA’s membership comprises a broad, diverse occupational group of over 21,000 professionals working in public accountancy practice, at government agencies, as internal accountants or in organisational manage- ment. Integrity, objectivity, professional competence and due care, confidentiality and professional behaviour are fundamental principles for every accountant. The NBA assists accountants to fulfil their crucial role in society, now and in the future. Royal Netherlands Institute of Chartered Accountants 2 Introduction Football and professional football organisations (hereinafter: clubs) are always in the public eye. Even though the Professional Football sector’s share of gross domestic product only amounts to half a percent, it remains at the forefront of society from a social perspective. The Netherlands has a relatively large league for men’s professio- nal football. 34 clubs - and a total of 38 teams - take part in the Eredivisie and the Eerste Divisie (division one and division two). From a business economics point of view, the sector is diverse and features clubs ranging from listed companies to typical SME’s. Clubs are financially vulnerable organisations, partly because they are under pressure to achieve sporting success. For several years, many clubs have been showing negative operating results before transfer revenues are taken into account, with such revenues fluctuating each year. This means net results can vary greatly, leading to very little or even negative equity capital. In many cases, clubs have zero financial resilience. At the end of the 2017-18 season, this was the case for a quarter of all Eredivisie clubs and two thirds of all clubs in the Eerste Divisie. -

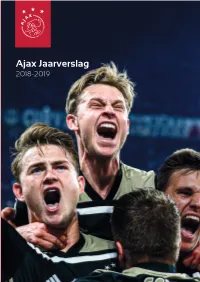

Ajax Jaarverslag 2018-2019 Jaarverslag 2018-2019

Ajax Jaarverslag 2018-2019 Jaarverslag 2018-2019 AFC Ajax Arena Boulevard 29 1101 AX Amsterdam Postbus 12522 1100 AM Amsterdam Kerncijfers € 199,5 mln. € 51,9 mln. Omzet Netto resultaat € 72,6 mln. € 95,3 mln. Resultaat transfers Voetbal opbrengsten Europese toernooien € 18,50 52,3 k Koers per 30/06/2019 Gemiddeld aantal plaatsen per competitiewedstrijd 374 58 Aantal fte’s Amsterdam Aantal gespeelde wedstrijden AFC AJAX NV JAARVERSLAG 2018-2019 AFC AJAX NV JAARVERSLAG 2018-2019 1 AFC AJAX NV JAARVERSLAG 2018-2019 Play video Matthijs de Ligt the golden boy GOALS PASS GAMES GOLD ACCURACY 2 AFC AJAX NV JAARVERSLAG 2018-2019 Play video Frenkie de Jong the gifted GAMES PASS BALLS ANKLES ACCURACY RECOVERED BROKEN 3 AFC AJAX NV JAARVERSLAG 2018-2019 Play video Dusan Tadic the machine GOALS ASSISTS GAMES ON FIRE 4 AFC AJAX NV JAARVERSLAG 2018-2019 Inhoud 6 Algemeen 109 Overige gegevens 110 Controleverklaring van de onafhankelijke accountant 13 Verslag van de directie 113 Statutaire bepalingen omtrent winstbestemming 114 Meerjarenoverzicht 2009/2010 tot en met 2018/2019 37 Corporate Governance Verklaring 56 Verslag van de Raad van Commissarissen 61 Jaarrekening 2018/2019 62 Geconsolideerde balans 64 Geconsolideerde winst- en verliesrekening 65 Geconsolideerd overzicht van gerealiseerde en niet- gerealiseerde resultaten 66 Geconsolideerd kasstroomoverzicht 67 Geconsolideerd mutatieoverzicht eigen vermogen 68 Grondslagen voor waardering en resultaatbepaling 78 Toelichting op de geconsolideerde balans 92 Toelichting op de geconsolideerde winst- en verliesrekening 100 Enkelvoudige balans 102 Enkelvoudige winst- en verliesrekening 103 Toelichting op de enkelvoudige balans 5 AFC AJAX NV JAARVERSLAG 2018-2019 Algemeen Voorwoord Voor Ajax is het seizoen 2018-2019 het beste seizoen van deze wedstrijd in de Johan Cruijff ArenA. -

Ajax Jaarverslag 2019-2020

Ajax Jaarverslag 2019-2020 Jaarverslag 2018-2019 Jaarverslag AFC Ajax Johan Cruijff Boulevard 29 1101 AX Amsterdam Postbus 12522 1100 AM Amsterdam Kerncijfers €162,3 mln Omzet €20,7 mln Netto resultaat €84,5 mln Resultaat transfers €55,6 mln Voetbal opbrengsten Europese toernooien €15,22 Koers per 30.06.2020 52,300 Gemiddeld aantal plaatsen per competitiewedstrijd 41 Aantal gespeelde wedstrijden 409 Aantal fte’s Amsterdam Inhoud 6. Voorwoord 8. Wij zijn Ajax 9. Onze voetbalfilosofie 10. Missie: For the Fans 11. Visie: For the Future 13. Historie 14. Het seizoen 16. Voetbal 28. Ajax als wereldspeler 36. Personeel en organisatie 41. Financiële balans 46. Organisatie 46. Samenstelling van onze organisatie 49. Ons Governance systeem 50. Samenstelling van het bestuur 51. Governance 51. Corporate Governance 56. Risico's en risicomanagement 63. Verklaring van de directie 64. Samenstelling van het bestuur 67. Artikel 10 van de EU overnamerichtlijn 71. Verslag RvC 71. Het verslagjaar 2019-2020 74. Bezoldiging bestuurders Pagina 4 77. Jaarrekening 2019/2020 78. Geconsolideerde balans 80. Geconsolideerde winst- en verliesrekening 81. Geconsolideerd overzicht van gerealiseerde en niet-gerealiseerde resultaten 82. Geconsolideerd kasstroomoverzicht 83. Geconsolideerd mutatieoverzicht eigen vermogen 84. Grondslagen voor waardering en resultaatbepaling 99. Toelichting op de geconsolideerde balans 116. Toelichting op de geconsolideerde winst- en verliesrekening 126. Enkelvoudige balans 128. Enkelvoudige winst- en verliesrekening 129. Toelichting op de enkelvoudige balans 135. Overig 136. Controleverklaring van de onafhankelijke accountant 142. Statutaire bepalingen omtrent winstbestemming 144. Meerjarenoverzicht 2010/2011 tot en met 2019/2020 Inhoud Wij zijn Ajax Het seizoen Organisatie Governance Verslag RvC Jaarrekening Overig Voorwoord Waar het seizoen 2018-2019 als het beste seizoen van deze eeuw de boeken in ging, kan het afgelopen seizoen als kortste worden omschreven. -

Number #9 in the TDF Top 50

From being a small boy, I have always been a huge fan of both football and professional wrestling and at around the age of 10-years-old, I came across a monthly magazine brought to newsagents from the makers of the world famous Sports Illustrated all about pro wrestling. It was simply named Pro Wrestling Illustrated. It was a publication I regularly read as well as Shoot and Match weekly magazines to feed my football addiction. In 1991, PWI began this new craze that instantly grabbed my attention more than no other magazine had before. They revealed the 'PWI 500'. The 'PWI 500' section in the magazine listed (in rank order) the top 500 professional wrestlers in the world that year. Counting down from 500, this revealed to me many, many talents that I have not discovered as of yet. This was released every year, and still to this day is the best selling edition of the magazine each year. I studied and read and read over and over again that edition in 1991 and I came up with an idea. What if Match or Shoot revealed the top 500 footballers in the world? Thinking back to 1991, I used to love to tune into the 'BBC Radio 1 Top 40' show every Sunday afternoon too... maybe I'm a tad obsessed with countdowns. Anyways, reflecting on the idea, I thought about it each year the 'PWI 500' was released. As years went on, I felt that this would have grown into a hugely successful plan, gaining great recognition with people in the game, possibly gained its own award ceremony on TV with the emergence and exposure SKY Television in the United Kingdom was just beginning to give to the game back then. -

Dutch Pro Academy Training Sessions Vol 1

This ebook has been licensed to: Bob Hansen ([email protected]) If you are not Bob Hansen please destroy this copy and contact WORLD CLASS COACHING. This ebook has been licensed to: Bob Hansen ([email protected]) Dutch Pro Academy Training Sessions Vol 1 By Dan Brown Published by WORLD CLASS COACHING If you are not Bob Hansen please destroy this copy and contact WORLD CLASS COACHING. This ebook has been licensed to: Bob Hansen ([email protected]) First published September, 2019 by WORLD CLASS COACHING 3404 W. 122nd Leawood, KS 66209 (913) 402-0030 Copyright © WORLD CLASS COACHING 2019 All rights reserved. No parts of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without prior written permission of the publisher. Author – Dan Brown Edited by Tom Mura Front Cover Design – Barrie Bee Dutch Pro Academy Training Sessions I 1 ©WORLD CLASS COACHING If you are not Bob Hansen please destroy this copy and contact WORLD CLASS COACHING. This ebook has been licensed to: Bob Hansen ([email protected]) Table of Contents 3 Overview and Itinerary 8 Opening Presentation with Harry Jensen, KNVB 10 Notes from Visit to ESA Arnhem Rijkerswoerd Youth Club 12 Schalke ’04 Stadium and Training Complex Tour 13 Ajax Background 16 Training Session with Harry Jensen, KNVB 19 Training Session with Joost van Elden, Trekvogels FC 22 Ajax Youth Team Sessions 31 Ajax U13 Goalkeeper Training 34 Ajax Reserve Game & Attacking Tendencies Dutch Pro Academy Training Sessions I 2 ©WORLD CLASS COACHING If you are not Bob Hansen please destroy this copy and contact WORLD CLASS COACHING. -

Amsterdamsche Football Club

AMSTERDAMSCHE FOOTBALL CLUB Opgericht 18 januari 1895 ¿" Ê/Ê-Ê17Ê ¶' Ê-/ , \Ê6"",Ê-*",/ 6 Ê/"**, -// -Ê"*Ê Ê ]ÊÃÌi«iÊ£]ÊÃÌiÀ`>]ÊÌi°ÊäÓäxÈÓä£ää Deukje? Wilt u dat het snel verholpen is? Ga dan naar Wilt u gratis vervangend vervoer? ASN Autoschade Service Wilt u kwaliteit? Fritzsche Amsterdam En.. ook nog eens vriendelijk? (onderdeel van de ASN Groep) Van der Madeweg 25 1099 BS Amsterdam t 020 69 37 399 f 020 66 85 789 e [email protected] 19 maart 2014 92e jaargang nr. 8 e 19 maart 2014 92 e jaargang nr. 8 19 maart 2014 92 jaargange nr. 8 19 maart 2014 9 2 jaargang nr. 8 De volgendeDe volgende wedstrijden wedstrijden van van AFCAFC 11 (en(en beker beker AFC AFC 2) 2) De zondag volgende zondag 23 maart 23 maart wedstrijden zondag zondag 30 30 maart vanmaart AFC 1 zondag (enzondag 6beker april 6 april AFC 2) speelronde speelronde 25 2 5 beker bekerprogrammaprogramma speelronde speelronde 26 26 14.30 uur 14.00 uur 14.30 uur zondag 14.30 23 uur maart zondag 14.00 uur30 maart 14.30 zondag uur 6 april HSC'21 - AFC AFC 2 - JOS/W'meer AFC - WKE speelrondeHSC'21 - AFC 25 AFC beker 2 - JOS/W'meerprogramma AFC speelronde - WKE 26 14.30 uur zondag 13 april 14.00 uur zaterdag 19 april !!! 14.30 uur HSC'21 - AFC zondag speelronde 13 AFC april 2 27 - JOS/W'meer zaterdag speelronde 19 2 8april !!!AFC - WKE speelronde 27 speelronde 28 14.30 uur 14.30 uur 14.3 EVV0 uur - AFC AFC 14.30 - HBS uur zondag 13 april zaterdag 19 april !!! EVV - AFC AFC - HBS speelronde 27 speelronde 28 14.30 uur 14.30 uur EVV - AFC AFC - HBS De volgende Schakel verschijnt 16 april 2014 DeEn volgende . -

Ei Ndrapport Auditteam Voetbalvandalisme Seizoen 2003'2004

OETBALVANDALTçME ea Ei ndrapport Auditteam Voetbalvandalisme seizoen 2003'2004 Den Haag,8 augustus 2004 Auditteom Voetbolvondolisme I +3 1 l}l20 625 7 5 37 (0]120 627 47 s9 DSP - groep BV f +31 Von Diemenstrool 374 e [email protected] 10'l 3 CR Amsterdom w www.dsp-groeP.nl lnhoudsopgave Samenvatting 3 1 lnleiding I 11 2 Werkwijze en activiteiten auditteam 11 2.1 Selectie audits 12 2.2 Uitvoering audits 13 2.3 Wedstrijdbezoeken 15 3 Audits en wedstrijdbezoeken 15 3.1 Audit AFC Ajax - FC Brugge d.d' 1 oktober 2003 20 3.2 Audit ADO Den Haag - AFC Ajax d.d. 9 november 2003 26 3.3 Audit Jong Ajax - Jong Feyenoord d.d. 15 april 2004 35 3.4 Audit 4-tal incidenten vrij reizende supporters 47 3.5 wedstrijdbezoek FC Twente en FC Utrecht d.d. 26 oktober 2003 50 3.6 Wedstrijdbezoek N.E.C. - Vitesse d.d.14 december 2003 52 3.7 Wedstrijdbezoek PSV - Feyenoord d.d. 14 maart 2004 55 3.8 Wedstrijdbezoek HFC Haarlem - Stormvogels Telstar d'd'28 maart2004 59 4 Conctusies en aanbevelingen eindrapportage 59 4.1 Algemene conclusies 60 4.2 Samenwerkingketenpartners,convenantenenbetrekkensupporters 62 4.3 Vermindering Politie-inzet 62 4.4 Combiregeling en vervoer 64 4.5 Dadergerichte aanPak 65 4.6 Opleggen en handhaven stadionverboden 67 4.7 Tolerantiegrenzen beleidskader 69 4.8 Categoriserin g risicowedstrijden Bijlagen 72 Bijlage 1 Samenstelling auditteam Pagina 2 Eindrapport Auditteam Voetbalvandalisme seizoen 2003-2004 Samenvatting Zoals aangekondigd in het Beleidskader bestrijding voetbalvandalisme en geweld is op 13 augustus 2003 door de minister van BZK het Auditteam Voetbalvandalisme ingesteld. -

![Arxiv:1805.01035V1 [Cs.CL] 2 May 2018 Et Al., 2014; Koehn and Knowles, 2017)](https://docslib.b-cdn.net/cover/4940/arxiv-1805-01035v1-cs-cl-2-may-2018-et-al-2014-koehn-and-knowles-2017-1714940.webp)

Arxiv:1805.01035V1 [Cs.CL] 2 May 2018 Et Al., 2014; Koehn and Knowles, 2017)

Accepted as a short paper in ACL 2018 Split and Rephrase: Better Evaluation and a Stronger Baseline Roee Aharoni & Yoav Goldberg Computer Science Department Bar-Ilan University Ramat-Gan, Israel froee.aharoni,[email protected] Abstract companying RDF information, and a semantics- augmented setup that does. They report a BLEU Splitting and rephrasing a complex sen- score of 48.9 for their best text-to-text system, and tence into several shorter sentences that of 78.7 for the best RDF-aware one. We focus on convey the same meaning is a chal- the text-to-text setup, which we find to be more lenging problem in NLP. We show that challenging and more natural. while vanilla seq2seq models can reach We begin with vanilla SEQ2SEQ models with high scores on the proposed benchmark attention (Bahdanau et al., 2015) and reach an ac- (Narayan et al., 2017), they suffer from curacy of 77.5 BLEU, substantially outperforming memorization of the training set which the text-to-text baseline of Narayan et al.(2017) contains more than 89% of the unique and approaching their best RDF-aware method. simple sentences from the validation and However, manual inspection reveal many cases test sets. To aid this, we present a of unwanted behaviors in the resulting outputs: new train-development-test data split and (1) many resulting sentences are unsupported by neural models augmented with a copy- the input: they contain correct facts about rele- mechanism, outperforming the best re- vant entities, but these facts were not mentioned ported baseline by 8.68 BLEU and foster- in the input sentence; (2) some facts are re- ing further progress on the task. -

Jaarboek 2013-2014

HET OFFICIËLE AJAX JAARBOEK 2013-2014 Raymond Bouwman Ronald Jonges Michel Sleutelberg De officiële teamfoto 2013-2014 (juli 2013) Eerste rij: Kenneth Vermeer, Daley Blind, Niklas Moisander, Carlo l’Ami, Hennie Spijkerman, Frank de Boer, Dennis Bergkamp, Kolbeinn Sigthórsson, Tobias Sana, Jasper Cillessen Tweede rij: Thulani Serero, Viktor Fischer, Joël Veltman, Toby Alderweireld, Siem de Jong, Christian Eriksen, Danny Hoesen, Christian Poulsen, Mike van der Hoorn Derde rij: Ruben Ligeon, Lasse Schöne, Lucas Andersen, Derk Boerrigter, Nicolai Boilesen, Ricardo van Rhijn, Davy Klaassen, Stefano Denswil, Eyong Enoh, Bojan Krkic. Niet op de foto: Mickey van der Hart De officiële teamfoto 2013-2014 (september 2013) Eerste rij: Daley Blind, Niklas Moisander, Jasper Cillessen, Carlo l’Ami, Hennie Spijkerman, Frank de Boer, Dennis Bergkamp, Mickey van der Hart, Siem de Jong, Tobias Sana Tweede rij: Thulani Serero, Ricardo van Rhijn, Viktor Fischer, Kolbeinn Sigthórsson, Kenneth Vermeer, Danny Hoesen, Nicolai Boilesen, Christian Poulsen, Bojan Krkic Derde rij: Lerin Duarte, Lasse Schöne, Lucas Andersen, Mike van der Hoorn, Stefano Denswil, Joël Veltman, Davy Klaassen, Ruben Ligeon 2 AJAX JAARBOEK 2013-2014 INHOUD KAMPIOEN 4 VAN DAG TOT DAG 22 STATISTIEKEN 158 KRONIEK 12 WEDSTRIJDEN 60 FOTOGRAFIE, AFKORTINGEN 176 WEDSTRIJDEN EERSTE PRIJS NA MENTALE TIK IN SLOTMINUUT SLEUTELROL VOOR VERVANGERS ONVERWACHTE UITPUTTINGSSLAG 02-11-2013 Ajax - Vitesse 94 06-02-2014 Ajax - FC Groningen 124 27-07-2013 AZ - Ajax 60 AJAX DOET WEER VOLOP MEE VERKRAMPING -

26E Nationale G-Voetbaltoernooi ‘Op Deze Dag Zijn Inhoud Michael Van Praag We Allemaal Profvoetballer’

1 augustus 2018 - Sportpark De Bongerd - Barendrecht 26e nationale G-voetbaltoernooi ‘Op deze dag zijn Inhoud Michael van Praag we allemaal profvoetballer’ Inhoud 2 Zeg eens eerlijk, wie van u sliep er vroeger in een Feyenoord-pyjama? Had een Voorwoord KNVB 3 poster van Ajax aan de muur? Of lag ‘s nachts te dromen onder een PSV-dekbed? Prof worden bij een geweldige club; het is de wens van alle voetballende jongens Welkomstwoord CBV en BSBV 4 en meisjes over de gehele wereld. KNVB Marcel Geestman 5 Helaas is dat niet voor iedereen weggelegd. Behalve vandaag natuurlijk, op dit altijd weer Programma 6 bijzondere G-Voetbaltoernooi! Bijna 400 G-voetballers zijn te gast bij BVV Barendrecht en G-toernooi, informatie 7 nemen het tegen elkaar op, in de tenues van onze betaald voetbalclubs. Alle BVO’s zijn, zoals altijd, op sportpark De Bongerd vertegenwoordigd, inclusief trainers en Fonds Gehandicaptensport 8 coaches. Net als diverse scheidsrechters uit het betaald voetbal. Als bondsvoorzitter ben ik Kleedkamerindeling 9 ongelooflijk trots op alles en iedereen die deze dag elk jaar weer mogelijk maakt. De eerste woensdag van augustus is al voor de 26e keer vaste prik op de voetbalkalender en daarvoor Fotoverslag 25 jaar G-toernooi 10-13 wil ik iedereen die dit mogelijk maakt een enorm compliment geven. Het is geweldig wat u Poule indeling senioren 14 doet! Penaltybokaal 15 Iedereen aan de bal 2 Met een warm gevoel denk ik terug aan de jubileumeditie van vorig jaar. Een prachtige dag 3 Schema B-Categorie 16 waarop we met elkaar op feestelijke wijze het 25e nationale G-voetbaltoernooi in Nederland Schema C-Categorie 17 konden vieren.