P-127 Umschlag

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

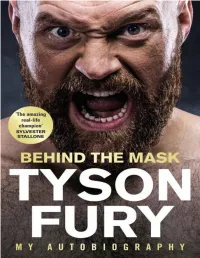

Behind the Mask: My Autobiography

Contents: List of Illustrations; Prologue; Introduction; 1 King for a Day; 2 Destiny’s Child; 3 Paris; 4 Vested Interests; 5 School of Hard Knocks; 6 Rolling with the Punches; 7 Finding Klitschko; 8 The Dark; 9 Into the Light; 10 Fat Chance; 11 Wild Ambition; 12 Drawing Power; 13 Family Values; 14 A New Dawn; 15 Bigger than Boxing; Illustrations; Useful Mental Health Contacts; Professional Boxing Record; Index

About the Author

Tyson Fury is the undefeated lineal heavyweight champion of the world. Born and raised in Manchester, Fury weighed just 1lb at birth after being born three months premature. His father John named him after Mike Tyson. From Irish traveller heritage, the “Gypsy King” is undefeated in 28 professional fights, winning 27 with 19 knockouts, and drawing once. His most famous victory came in 2015, when he stunned longtime champion Wladimir Klitschko to win the WBA, IBF and WBO world heavyweight titles. He was forced to vacate the belts because of issues with drugs, alcohol and mental health, and did not fight again for more than two years. Most thought he was done with boxing forever, until an amazing comeback fight with Deontay Wilder in December 2018. It was an instant classic, ending in a split-decision draw. Outside of the ring, Tyson Fury is a mental health ambassador. He donated his million-dollar purse from the Deontay Wilder fight to the homeless. This book is dedicated to the cause of mental health awareness.

April-2014.Pdf

THE BIBLE OF BOXING ®, APRIL 2014, $8.95

BEST I FACED: MARCO ANTONIO BARRERA
FIRST LOSSES: SOME BOXERS REBOUND FROM THEIR INITIAL SETBACKS, SOME DON’T
MIGHTY MARCOS: MAIDANA GAINS NEW RESPECT
CANELO VS. ANGULO: JUNIOR MIDDLEWEIGHT MATCHUP HAS FAN APPEAL
HALL OF FAME: RICHARD STEELE WAS ONE OF THE BEST REFEREES OF HIS ERA
JOSE SULAIMAN: 1931-2014. THE LONGTIME WBC PRESIDENT WAS CONTROVERSIAL BUT IMPACTFUL
ARMY, NAVY, AIR FORCE: COLLEGIATE BOXING IS ALIVE AND WELL IN THE SERVICE ACADEMIES

CONTENTS | APRIL 2014

FEATURES
DEFINING FIGHT: MARCOS MAIDANA REACHED NEW HEIGHTS BY BEATING ADRIEN BRONER. By Bart Barry
HAPPY FANS: WHY WERE SO MANY PEOPLE PLEASED ABOUT BRONER’S MISFORTUNE? By Tim Smith
MAKE OR BREAK? SOME FIGHTERS BOUNCE BACK FROM THEIR FIRST LOSSES, SOME DON’T. By Norm Frauenheim
ACCIDENTAL CONTENDER: CHRIS ARREOLA WILL FIGHT FOR A TITLE IN SPITE OF HIS INCONSISTENCY. By Keith Idec
JOSE SULAIMAN: 1931-2014. THE CONTROVERSIAL WBC PRESIDENT LEFT HIS MARK ON THE SPORT. By Thomas Hauser
ALVAREZ VS. ANGULO: THE JUNIOR MIDDLEWEIGHT MATCHUP HAS FAN APPEAL. By Doug Fischer
PACQUIAO VS. BRADLEY II: THERE ARE MANY QUESTIONS GOING INTO THE REMATCH. By Michael Rosenthal
HALL OF FAME: REFEREE RICHARD STEELE EARNED HIS INDUCTION INTO THE IBHOF. By Ron Borges
IN TYSON’S WORDS: MIKE TYSON’S AUTOBIOGRAPHY IS FLAWED BUT WORTH THE READ. By Thomas Hauser
AMERICA’S TEAMS: INTERCOLLEGIATE BOXING STILL THRIVES IN THE SERVICE ACADEMIES. By Bernard Fernandez
DOUGIE’S MAILBAG: NEW FEATURE: THE BEST OF DOUG FISCHER’S RINGTV.COM COLUMN. By Doug Fischer

DEPARTMENTS
RINGSIDE
OPENING SHOTS
COME OUT WRITING
ROLL WITH THE PUNCHES

COVER PHOTO BY HOGAN PHOTOS; BRONER: JEFF BOTTARI/GOLDEN BOY/GETTY IMAGES

Adrien Broner learned a lot in his loss to Marcos Maidana about how he’s perceived.

Light heavyweight contender Jean Pascal had a good night on Jan.

Dagstuhl Reports, Vol. 4, Issue 6, Pp

Volume 4, Issue 6, June 2014

Scientific Visualization (Dagstuhl Seminar 14231). Min Chen, Charles D. Hansen, Penny Rheingans, and Gerik Scheuermann
Design and Synthesis from Components (Dagstuhl Seminar 14232). Jakob Rehof and Moshe Y. Vardi
Challenges in Analysing Executables: Scalability, Self-Modifying Code and Synergy (Dagstuhl Seminar 14241). Roberto Giacobazzi, Axel Simon, and Sarah Zennou
Software Development Analytics (Dagstuhl Seminar 14261). Harald Gall, Tim Menzies, Laurie Williams, and Thomas Zimmermann
Scripting Languages and Frameworks: Analysis and Verification (Dagstuhl Seminar 14271). Fritz Henglein, Ranjit Jhala, Shriram Krishnamurthi, and Peter Thiemann
Exploring Interdisciplinary Grand Challenges in ICT Design to Support Proactive Health and Wellbeing (Dagstuhl Perspectives Workshop 14272). m. c. schraefel and Elizabeth F. Churchill

Dagstuhl Reports, Vol. 4, Issue 6. ISSN 2192-5283

Published online and open access by Schloss Dagstuhl – Leibniz-Zentrum für Informatik GmbH, Dagstuhl Publishing, Saarbrücken/Wadern, Germany. Online available at http://www.dagstuhl.de/dagpub/2192-5283

Publication date: March, 2015

Bibliographic information published by the Deutsche Nationalbibliothek: The Deutsche Nationalbibliothek lists this publication in the Deutsche Nationalbibliografie; detailed bibliographic data are available in the Internet at http://dnb.d-nb.de.

Aims and Scope: The periodical Dagstuhl Reports documents the program and the results of Dagstuhl Seminars and Dagstuhl Perspectives Workshops. In principle, for each Dagstuhl Seminar or Dagstuhl Perspectives Workshop a report is published that contains the following: an executive summary of the seminar program and the fundamental results, an overview of the talks given during the seminar (summarized as talk abstracts), and summaries from working groups (if applicable). This basic framework can be extended by suitable

Table of Contents

Scroll to the title and select a blue link to open a paper. After viewing the paper, use the bookmarks to the left to return to the beginning of the Table of Contents.

Theme: AI and Agents

BIO - Bioinformatics Track

Track Co-Chairs: Paola Lecca, University of Trento, Italy; Dan Tulpan, University of Guelph, Canada; Juan Manuel Corchado, University of Salamanca, Spain

Track Editorial

An Insight into Biological Data Mining based on Rarity and Correlation as Constraints. Souad Bouasker, University of Tunis ElManar, Tunisia; Sadok Ben Yahia, Tallinn University of Technology, Estonia; Gayo Diallo, University of Bordeaux, France

Drug Target Discovery using Knowledge Graph Embeddings. Sameh K. Mohamed, National University of Ireland Galway, Ireland; Aayah Nounu, University of Bristol, UK; Vit Nováček, National University of Ireland Galway, Ireland

Ensemble Feature Selection for Biomarker Discovery in Mass Spectrometry-based Metabolomics. AliAsghar ShahrjooiHaghighi, University of Louisville, USA; Hichem Frigui, University of Louisville, USA; Xiang Zhang, University of Louisville, USA; Xiaoli Wei, University of Louisville, USA; Biyun Shi, University of Louisville, USA; Craig J. McClain, University of Louisville, USA

Molecule Specific Normalization for Protein and Metabolite Biomarker

Ranking As of Jan. 10, 2015

| Rank | HEAVYWEIGHT (Over 201 lb / 91.17 kg) | JR. HEAVYWEIGHT (200 lb / 90.72 kg) | LT. HEAVYWEIGHT (175 lb / 79.38 kg) |
| --- | --- | --- | --- |
| CHAMPION | WLADIMIR KLITSCHKO (Sup Champ) UKR | MARCO HUCK (Sup. Champ) GER | SERGEY KOVALEV RUS |
| 1 | Tyson Fury (International) UK | Krzysztof Glowacki POL | Jean Pascal HAI |
| 2 | Andy Ruiz (Int-Cont) USA | Tony Bellew (International) UK | Robin Krasniqi (International) GER |
| 3 | Christian Hammer ROM | Nuri Seferi (WBO Europe) ALB | Nadjib Mohammedi FRA |
| 4 | Bryant Jennings USA | Oleksandr Usyk (Int-Cont.) UKR | Erik Skoglund SWD |
| 5 | Charles Martin (NABO) USA | Vikapita Meroro NAM | Dominic Böesel (Inter-Continental) GER |
| 6 | Francesco Pianeta (WBO Europe) ITA | Thabiso Mchunu SA | Isaac Chilemba MAL |
| 7 | David Price UK | Ilunga Makabu SA | Vasily Lepikhin (Oriental) RUS |
| 8 | Vyacheslav Glazkov UKR | Rakhim Chakhkiev RUS | Andre Dirrell USA |
| 9 | Alexander Povetkin RUS | Mateusz Masternak POL | Isidro Ranoni Prieto (Latino) PAR |
| 10 | Dereck Chisora UK | Noel Gevor (WBO Youth) ARM | Enrico Koelling GER |
| 11 | Joseph Parker (Oriental) NZ | Iago Kiladze UKR | Blake Caparello (Asia-Pacific) AUST |
| 12 | Anthony Joshua UK | BJ Flores USA | Trevor McCumby USA |
| 13 | Arthur Szpilka POL | Mirko Larghetti ITA | Sean Monaghan USA |
| 14 | Steve Cunningham USA | Andrey Knyazev (Oriental) RUS | Umar Salamov (WBO Europe) RUS |
| 15 | Razvan Cojanu (Asia-Pacific) ROM | Anthony McCraken (Asia-Pacific) AUST | Sullivan Barrera CUB |
| ** CHAMPIONS, WBA | WLADIMIR KLITSCHKO | DENNIS LEBEDEV | SERGEY KOVALEV |
| ** CHAMPIONS, IBF | WLADIMIR KLITSCHKO | YOAN PABLO HERNANDEZ | SERGEY KOVALEV |
| ** CHAMPIONS, WBC | BERMANE STIVERNE | GRIGORY DROZD | ADONIS STEVENSON |

SUP.

In Memory Of

THE IRISH BOXING REVIEW: 2015 EDITION

STEVE WELLINGS

Copyright © 2015 Steve Wellings. All rights reserved. The moral right of Steve Wellings to be identified as author of this Work has been asserted by him in accordance with the Copyright, Designs and Patents Act of 1988. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior permission of the copyright owner. You must not circulate this book without the authority to do so.

In memory of Paddy Hyland and Eamonn Magee Jnr

CONTENTS
Introduction
Is Carl Frampton Set to Bring Irish Boxing to Heights Never Reached?
Jones Backs Frampton to Beat Kiko and Earn World Strap – 5th August 2014
Could the Weather KO Frampton’s Open Arena Plans? – 6th August 2014
Frampton Reveals He Was Unhappy with First Martinez Performance – 13th August 2014
Chris Avalos: ‘I Will Beat the Winner of Martinez-Frampton II’ – 27th August 2014
Chris Avalos Says He’s Being Avoided and Calls On Rivals to Face Him – 29th August 2014
Big Time Boxing Returns to Dublin at the Red Cow Hotel Venue – 2nd September 2014
A Tale of Two Trainers: McGuigan vs. Sarmiento – 3rd September 2014
Sergio Martinez: ‘Kiko Will Knock Out Carl Frampton This Time’ – 4th September 2014
Barry McGuigan: ‘Kiko Return Will Be Better Than the First Fight’ – 4th September 2014
Jamie Conlan Boxes Estrella and Closes In On World Top Five Spot – 5th September 2014
The Waiting Is Over: Frampton’s

State of the Game

THE BIBLE OF BOXING ®, JULY 2014, $8.95

STATE OF THE GAME: OUR EXCLUSIVE DIVISION-BY-DIVISION ANALYSIS
MAN OH MANNY: PACQUIAO PROVES HE ISN’T FINISHED
HALL OF FAME 2014: OSCAR DE LA HOYA AT PEACE WITH HIMSELF, CAREER
THE RING REMEMBERS RUBIN CARTER, MICKEY DUFF
25TH ANNIVERSARY: THE HALL’S EVOLUTION
BEST I FACED: FELIX TRINIDAD

CONTENTS | JULY 2014

FEATURES
HALL OF FAME
DE LA HOYA: NEW CHAPTER. THE ‘GOLDEN BOY,’ FINALLY AT PEACE WITH HIMSELF, SAYS HE’S BACK. By Ron Borges
25TH ANNIVERSARY: THE BOXING HALL OF FAME HAS BECOME AN ICONIC DESTINATION. By Bernard Fernandez
STATE OF THE GAME: THE RING MAGAZINE’S ANNUAL DIVISION-BY-DIVISION BREAKDOWN. By David Greisman
PACMAN’S STATEMENT: MANNY PACQUIAO PROVED AGAINST TIM BRADLEY THAT HE ISN’T FINISHED. By Norm Frauenheim
MICKEY DUFF: 1929-2014. THE BRITISH PROMOTER WAS AN UNFORGETTABLE CHARACTER. By Gareth A Davies
RUBIN CARTER: 1937-2014. ‘HURRICANE’ GAINED INTERNATIONAL FAME BY FIGHTING FOR HIS FREEDOM. By Tim Smith

DEPARTMENTS
RINGSIDE
OPENING SHOTS
COME OUT WRITING
ROLL WITH THE PUNCHES: Jabs and Straight Writes by Thomas Hauser
BEST I FACED: FELIX TRINIDAD. By Anson Wainwright
READY TO GRUMBLE

CARTER: FPG/GETTY IMAGES; DE LA HOYA/TRINIDAD: THE RING MAGAZINE; COVER PHOTO / GREG GORMAN

Rubin “Hurricane” Carter, the former middleweight contender who maintained he was wrongly convicted of murder and ultimately won his freedom, died on April 20.

Oscar De La Hoya (left) and Felix Trinidad enter the International Boxing Hall of Fame in June.

Unbeaten junior featherweight Shelly Vincent overcame daunting challenges with the help of boxing.

Yoka-Hammer Undercard – Victor Faust and Volkan Gökcek

Yoka-Hammer Undercard – Victor Faust and Volkan Gökcek in action

Hamburg, 25th of November – This Friday another thrilling boxing event is taking place. In Nantes, France, EC Boxing’s Christian Hammer will take on Olympic Champion Tony Yoka in a 10-round heavyweight bout. On top of that, two other fighters promoted by Erol Ceylan are competing that night. Both Victor Faust and Volkan Gökcek will step into the ring of the H Arena and try to stay unbeaten. Having turned pro early this year, Faust and Gökcek will each have their fifth fight, something you rarely see in the crazy year of 2020.

Heavyweight Faust (4 fights, 4 wins, 4 knockouts) just had his toughest test so far, when he took on the experienced Yakup Saglam on October 31st in Hamburg. After securing yet another stoppage win, the Ukrainian will step up in competition again. In France he will face tough Pole Kamil Sokolowski (31 fights, 10 wins, 4 knockouts), who just went the distance with Faust’s ECB team member Ali Eren Demirezen. The fight is scheduled for 6 rounds.

Quite a few weight classes below Faust, rising star Volkan Gökcek (4 fights, 4 wins, 2 knockouts) from Turkey is making his mark. The 24-year-old also fought in Hamburg last month, beating Octavian Gratii from Moldova on points. Now Gökcek is taking on Aboubeker Bechelaghem (25 fights, 12 wins). The Frenchman has already competed against some known names in the sport, including 2012 Olympic Champion Luke Campbell. This super-lightweight contest is also scheduled for six rounds.

Does ‘Money’ Have Work Cut Out?

JUNE 2013, $8.95

AGELESS WONDER: BERNARD HOPKINS MAKES HISTORY AGAIN
SPECIAL PREVIEW PACKAGE: DOES ‘MONEY’ HAVE WORK CUT OUT?
HALL OF FAME VOICE: INDUCTEE JIMMY LENNON JR. FOLLOWED HIS FATHER’S LEAD
STYLES MAKE FIGHTS: THE RING EXPLAINS EXACTLY WHAT THAT ADAGE MEANS
PRIZEFIGHTER SERIES: EIGHT-MAN, ONE-DAY TOURNAMENTS ARE A HIT IN THE U.K.
LETTERS FROM EUROPE: U.K. PROMOTERS WARREN AND HEARN DO BATTLE

CONTENTS | JUNE 2013

DEPARTMENTS
RINGSIDE
OPENING SHOTS
COME OUT WRITING
READY TO GRUMBLE. By David Greisman
ROLL WITH THE PUNCHES: Jabs and Straight Writes by Thomas Hauser
BEST I’VE FACED: EARNIE SHAVERS
OUTSIDE THE ROPES. By Brian Harty
RING CARD GIRL
RING RATINGS PACKAGE
LETTERS FROM EUROPE. By Gareth A Davies
RINGSIDE REPORTS
WORLDWIDE RESULTS
SIX PACK. By Scot LaFee
WOMEN’S BOXING. By Ryan Songalia
FROM THE ARCHIVE
AT THE FIGHTS

FEATURES
FAMILY TRADITION: HALL OF FAMER JIMMY LENNON JR. FOLLOWED IN DAD’S FOOTSTEPS. By Bernard Fernandez
STEPPING OUT: AMBITIOUS VICTOR ORTIZ TRIES DANCING WITH THE STARS. By Bart Barry
A MATTER OF STYLES: THE RING EXPLAINS WHY STYLES DO INDEED MAKE FIGHTS. By Brian Harty and Doug Fischer
HE DID IT AGAIN: BERNARD HOPKINS MADE HISTORY BY BEATING TAVORIS CLOUD. By Norm Frauenheim

AT RINGTV.COM
VIDEO: HOPKINS POST FIGHT. BERNARD HOPKINS HOLDS COURT WITH THE MEDIA AFTER MAKING HISTORY AGAINST TAVORIS CLOUD.

FLOYD MAYWEATHER JR. VS. ROBERT GUERRERO PACKAGE
LEARNING TO FLY: THE FORMATIVE YEARS OF THE ERA’S GREATEST FIGHTER. By Norm Frauenheim
WHAT MAKES HIM SO GOOD? OPPONENTS EXPLAIN WHY MAYWEATHER IS SO SUCCESSFUL. By Mike Coppinger
LIVE UNDERDOG: GUERRERO BRINGS GUILE AND GRIT TO A STIFF CHALLENGE. By Ron Borges
HEAD TO HEAD

Bienvenue, Tony: French Olympic Gold Medalist Tony Yoka Returns Against Christian Hammer Friday LIVE and Exclusively on ESPN+

(November 24, 2020) - Tony Yoka, the dynamic heavyweight punching Parisian, aims to impress in his ESPN platform debut. Yoka, who won a super heavyweight gold medal for France at the 2016 Rio Olympics, will fight veteran Christian Hammer in a 10-rounder Friday at H Arena in Nantes, France.

Yoka-Hammer will stream live and exclusively this Friday, Nov. 27 in the United States on ESPN+ beginning at 2:55 p.m. ET/11:55 a.m. PT. The ESPN+ stream will also include the return of unbeaten 2016 French Olympic gold medalist Estelle Yoka-Mossely against Pasa Malagic in an eight-round lightweight bout. Yoka and Yoka-Mossely, who have been married since 2018, welcomed their second child in May.

Earlier this year, Yoka inked a promotional agreement with Top Rank, which will co-promote him with Ringstar France. “Tony Yoka’s potential is limitless, and he is a grounded young man who is motivated to be a great professional fighter,” said Top Rank chairman Bob Arum. “France has never had a world heavyweight champion, and I believe Tony is the one to bring the sport’s biggest honor home.”

The 28-year-old Yoka’s stellar amateur run included a berth at the 2012 London Olympics and gold medals at the 2015 World Championships and 2010 Youth Olympic Games. Before his triumph in Rio, he’d already defeated the likes of former heavyweight world champion Joseph Parker and current undefeated prospects Joe Joyce and Ivan Dychko.

Heavyweight Cruiserweight

WBA RATINGS COMMITTEE

FEBRUARY 2015 MOVEMENTS REPORT

Based on results held from February 8th to March 15th, 2015

Miguel Prado Sanchez, Chairman; Gustavo Padilla, Vice Chairman

HEAVYWEIGHT

| DATE | PLACE | BOXER A | RESULT | BOXER B | TITLE | REMARKS |
| --- | --- | --- | --- | --- | --- | --- |
| 02-28-2015 | London, U.K. | Tyson Fury | W TKO 8 | Christian Hammer | WBO/inter. | |
| 03-14-2015 | Quebec, Canada | Vyacheslav Glazkov | W UD 12 | Steve Cunningham | USBA | S.Leblank 116-112; A.Levin 115-113; P.Procopio 116-112 |

MOVEMENTS

OUT: N° 13 Steve Cunningham removed from the rating after losing to N° 12 V. Glazkov. N° 15 Travis Kaufman removed from the rating due to inactivity (395 days).

IN: Francesco Pianeta enters at position N° 13 due to caliber and record (31-1-1, 17 KOs). David Price enters at position N° 15 due to activity, caliber and record (19-2-0, 16 KOs).

PROMOTIONS: N° 12 Vyacheslav Glazkov goes up to position N° 11 after defeating a rated boxer (S. Cunningham).

DEMOTIONS: N° 11 Kubrat Pulev goes down to position N° 12 at the discretion of the ratings committee.

CRUISERWEIGHT

MOVEMENTS

OUT: N° 13 Mark Flanagan removed from the ranking at the discretion of the ratings committee. N° 15 Daniel Baff removed from the rating due to inactivity (202 days).

IN: B.J. Flores enters at position N° 7 due to activity, caliber and record (31-1-1, 20 KOs). Noel Gevor enters at position N° 15 due to activity, caliber and record (16-0-0, 9 KOs).

PROMOTIONS: No changes.

DEMOTIONS: N° 7 Denton Daley, N° 8 Krzysztof Glowacki, N° 9 Tony Bellew, N° 10 Rakhim Chakhkiev, N° 11 Lucasz Janik and N° 12 Anthony McCraken go down one position due to B.J. Flores’s entrance.

LIGHT HEAVYWEIGHT

| DATE | PLACE | BOXER A | RESULT | BOXER B | TITLE | REMARKS |
| --- | --- | --- | --- | --- | --- | --- |
| 02-21-2015 | Berlin, Germany | Mirco Ricci | W UD 12 | Enrico Koelling | WBA I/C | G.Feldman 116-112; J.Morata; G. |

Schattenblick Druckausgabe

Latest daily reports ... interviews ... commentaries ... opinions ... articles ... documents ...

MA-Verlag, Electronic Newspaper Schattenblick, Saturday, 27 December 2014

SPORT / BOXING

Fury puffs out his cheeks: the Briton talks up his next opponent

(SB) As expected, British heavyweight Tyson Fury has picked an easy opponent for his tune-up fight on 28 February at London's O2 Arena. Since his win over compatriot Dereck Chisora at the end of November, Fury has been the WBO's mandatory challenger to Wladimir Klitschko, and he naturally does not want to forfeit that position through a defeat in the meantime. The Briton, unbeaten in 23 professional fights, is also ranked in the top 15 by the other three sanctioning bodies (IBF 3, WBO 4, WBC 12). He faces 27-year-old Christian Hammer, who has won 17 bouts and lost three. The Romanian-born fighter will nevertheless ... (page 9)

Left-wing literati: a press remote from the market, committed books ... 19th Left Literature Fair, Nuremberg

Publishing between commercial constraints, political goals and the joy of making books

(SB) "The first freedom of the press consists in its not being a trade": Karl Marx's famous statement could hardly sound more anachronistic than in the so-called information and knowledge society. In both cases what is at stake is the availability of an immaterial commodity, a means to the end of capital accumulation, to which the cause of political emancipation and liberation could not be more unwelcome. That was also the case 172 years ago, when Karl Marx wrote the series of articles on censorship and freedom of the press in the Rheinische Zeitung from which this quotation comes. At the time he criticized a representative of bourgeois civil liberties who, on the freedom of the press that did not yet exist at the time,

Don't forget ..