Expression Simulation in Virtual Ballet

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

THE ULTIMATE VIRTUAL DANCE Experience Bringing Dance and the World Together WELCOME! REGISTRATION, RATES & REGULATIONS

Information Package. THE ULTIMATE VIRTUAL DANCE Experience: Bringing Dance and the World Together. WELCOME! REGISTRATION, RATES & REGULATIONS

VIRTUAL REGISTRATION: All registrations (for both studios and individuals) for our Virtual Event are to be completed through DanceBug (www.dancebug.com). For individuals and non-Canadian studios, payment must be made via credit card through DanceBug. For Canadian studios, payments can be made via credit card through DanceBug, via E-transfer to [email protected], or via cheque made payable to On The Floor and mailed to: On The Floor, 80 Rustle Woods Ave, Markham, ON L6B 0V2. Additional processing fees apply to payments made via credit card through DanceBug.

REGISTRATION DEADLINE
June Event: Registration Deadline: May 15, 2021; Video Submission Deadline: June 1, 2021; Airing: Week of June 22, 2021 (TBC).
July Event: Registration Deadline: June 15, 2021; Video Submission Deadline: July 1, 2021; Airing: Week of July 22, 2021 (TBC).

VIDEO SUBMISSION FORMAT: Videos must be uploaded to DanceBug in .MP4 format (preferred), but .MOV files are also acceptable. Please ensure that all videos are recorded in landscape orientation only. We will not accept videos shot in portrait orientation. We accept home/studio recordings as well as videos from previous competitions, as long as they were filmed in the 2020 or 2021 season.

REFUND POLICY: Before you book, please make sure you understand that On The Floor operates under a strict policy of no refunds for any reason. If the event is postponed or cancelled, you will receive FULL CREDIT towards another OTF Virtual Event. Payment in FULL is due at registration - we make no exceptions.

“Rokdim-Nirkoda” #99 Is Before You in the Customary Printed Format

Dear Readers, “Rokdim-Nirkoda” #99 is before you in the customary printed format. We are making great strides in our efforts to transition to digital media while simultaneously working to obtain the funding to continue publishing printed issues. With all due respect to the internet age – there is still a cultural and historical value to publishing a printed edition and having the presence of a printed publication in libraries and on your shelves. We are grateful to those individuals who have donated funds to enable the publication of recent printed editions. We encourage the financial support of our readers to help ensure the printing of future issues. This summer there will be two major dance festivals taking place in Israel: the Karmiel Festival and the Ashdod Festival. For both, we wish and hope for their great success, cooperation and mutual enrichment. Thank you Avi Levy and the Ashdod Festival for your cooperation and your use of “Rokdim-Nirkoda” as a platform to reach you – the readers. Thank you very much! Israeli folk dances are danced all over the world; it is important for us to know and read about what is happening in this field in every place and country and we are inviting you, the readers and instructors, to submit articles about the background, past and present, of Israeli folk dance as it is reflected in the city and country in which you are active.

Masthead and contents fragments: Magazine for Folk Dance and Dance. Association of Folk Dance Instructors and Creators. Yaron Meishar. Ruth Goodman. Magazine No. 99 | July 2018 | 30 NIS. YOAV ASHRIEL: Rebellious, Innovative, Breaks New Ground. David Ben-Asher. Translation: Ruth Schoenberg and Shani Karni Aduculesi. The Light Within. DanCE. The “Hora Or” Group. Eti Arieli.

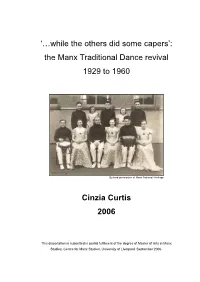

Manx Traditional Dance Revival 1929 to 1960

‘…while the others did some capers’: the Manx Traditional Dance revival 1929 to 1960. By kind permission of Manx National Heritage. Cinzia Curtis, 2006. This dissertation is submitted in partial fulfilment of the degree of Master of Arts in Manx Studies, Centre for Manx Studies, University of Liverpool. September 2006.

The following would not have been possible without the help and support of all of the staff at the Centre for Manx Studies. Special thanks must be extended to the staff at the Manx National Library and Archive for their patience and help with accessing the relevant resources and particularly for permission to use many of the images included in this dissertation. Thanks also go to Claire Corkill, Sue Jaques and David Collister for tolerating my constant verbalised thought processes!

Preliminary Information
0.1 List of Abbreviations
0.2 A Note on referencing
0.3 Names of dances
0.4 List of Illustrations
Chapter 1: Introduction
1.1 Methodology
1.2 Dancing on the Isle of Man in the 19th Century
Chapter 2: The Collection
2.1 Mona Douglas
2.2 Philip Leighton Stowell
2.3 The Collection of Manx Dances
Chapter 3: The Demonstration
3.1 1929 EFDS Vacation School
3.2 Five Manx Folk Dances
3.3 Consolidating the Canon
Chapter 4: The Development
4.1 Douglas and Stowell
4.2 Seven Manx Folk Dances
4.3 The Manx Folk Dance Society
Chapter 5: The Final Figure
5.1 The Manx Revival of the 1970s
5.2 Manx Dance Today
5.3 Conclusions

The Biomechanics of the Tendu in Closing to the Traditional Position, Plié and Relevé

University of South Florida Scholar Commons, Graduate Theses and Dissertations, Graduate School, January 2013. The Biomechanics of the Tendu in Closing to the Traditional Position, Plié and Relevé. Nyssa Catherine Masters, University of South Florida, [email protected]. Follow this and additional works at: http://scholarcommons.usf.edu/etd. Part of the Engineering Commons.

Scholar Commons Citation: Masters, Nyssa Catherine, "The Biomechanics of the Tendu in Closing to the Traditional Position, Plié and Relevé" (2013). Graduate Theses and Dissertations. http://scholarcommons.usf.edu/etd/4825

This Thesis is brought to you for free and open access by the Graduate School at Scholar Commons. It has been accepted for inclusion in Graduate Theses and Dissertations by an authorized administrator of Scholar Commons. For more information, please contact [email protected].

A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Mechanical Engineering, Department of Mechanical Engineering, College of Engineering, University of South Florida. Major Professor: Rajiv Dubey, Ph.D. Stephanie Carey, Ph.D. Merry Lynn Morris, M.F.A. Date of Approval: November 7, 2013. Keywords: Dance, Motion Analysis, Knee Abduction, Knee Adduction, Knee Rotation. Copyright © 2013, Nyssa Catherine Masters.

DEDICATION: This is dedicated to my parents, Michael and Margaret, who have supported me and encouraged

Securing Our Dance Heritage: Issues in the Documentation and Preservation of Dance by Catherine J

Securing Our Dance Heritage: Issues in the Documentation and Preservation of Dance, by Catherine J. Johnson and Allegra Fuller Snyder. July 1999. Council on Library and Information Resources, Washington, D.C.

About the Contributors

Catherine Johnson served as director for the Dance Heritage Coalition’s Access to Resources for the History of Dance in Seven Repositories Project. She holds an M.S. in library science from Columbia University with a specialization in rare books and manuscripts and a B.A. from Bethany College with a major in English literature and theater. Ms. Johnson served as the founding director of the Dance Heritage Coalition from 1992 to 1997. Before that, she was assistant curator at the Harvard Theatre Collection, where she was responsible for access, processing, and exhibitions, among other duties. She has held positions at The New York Public Library and the Folger Shakespeare Library.

Allegra Fuller Snyder, the American Dance Guild’s 1992 Honoree of the Year, is professor emeritus of dance and former director of the Graduate Program in Dance Ethnology at the University of California, Los Angeles. She has also served as chair of the faculty, School of the Arts, and chair of the Department of Dance at UCLA. She was visiting professor of performance studies at New York University and honorary visiting professor at the University of Surrey, Guildford, England. She has written extensively and directed several films about dance and has received grants from NEA and NEH in addition to numerous honors. Since 1993, she has served as executive director, president, and chairwoman of the board of directors of the Buckminster Fuller Institute.

Dance Program Handbook 2020-2021

Bethel College Academy of Performing Arts Dance Program Handbook 2020-2021

DANCE HANDBOOK CONTENTS GUIDE: Contact Information; General Policies; Dance Department Expectations; Dress Code; Class Requirements; Ballet; Pointe; Jazz; Tap; Modern; Irish; Hip Hop; Adult Classes; Company Requirements.

IMPORTANT CONTACTS
Bethel College Academy of Performing Arts: (316) 283-4902, EMAIL: [email protected], 300 E 27th Street, North Newton KS 67117, WEBSITE: www.bcapaks.org
Lisa Riffel, Operations Manager: (316) 283-4902, [email protected]
Haylie Berning, Director of Dance: (316) 619-7580, [email protected]
Molly Flavin, Instructor: (316) 283-4902, [email protected]
Emily Blane, Instructor: (316) 283-4902, [email protected]
Resa Marie Davenport, Instructor: (316) 283-4902, [email protected]
Aviance Battles, Instructor: (316) 283-4902, [email protected]
Cathy Anderson, Instructor: (316) 283-4902, [email protected]

GENERAL INFORMATION AND POLICIES: Please read the current registration packet for a complete list of BCAPA’s policies and safety precautions. Keep in mind that some of the below policies may be affected by COVID policies put in place by BCAPA and Bethel College.

1. Communication Methods and Response Time. The best way to contact BCAPA’s dance director is by phone message at (316) 283-4902 or by email at [email protected]. Current students can find important information on upcoming events, workshops, and other announcements at our website, www.bcapaks.org or our Facebook page.

2. *Drop-Off Expectations. In the interest of safety, students must be accompanied to and from their classes by an adult.

CDS Boston News Spring 2021

CDS Boston News: The Newsletter of the Country Dance Society, Boston Centre. Spring 2021.

Boston Playford VirtuBall – Saturday March 6th

Save the date: Saturday, March 6th at 7:30pm (doors open 7:15pm) for a virtual version of our annual Boston Playford Ball, on the traditional “first Saturday after the first Friday” in March! Last year we celebrated 40 years of the Boston Playford Ball, at what ended up being our last dance together. This year our ball will be a little different since we can’t be together in person, but we can be together online, and geography is no barrier this time for our further away friends. Barbara Finney, Compere*, is preparing a wonderful evening of entertainment for you, with music by members of Bare Necessities – Kate Barnes and Jacqueline Schwab, and Mary Lea … (COVID restrictions permitting). We’ll also have photo memories of previous balls. Watch your email (for CDSBC members), our website (https://www.cds-boston.org/), and our Facebook page (https://www.facebook.com/cds.bostoncentre) for more information and how to register.

* compere (Cambridge English Dictionary): noun [C], UK /ˈkɒm.peər/, US /ˈkɑːm.per/ (US emcee): a person whose job is to introduce performers in a television, radio, or stage show: He started his career as a TV compere.

Photo caption: Participants at the 2020 Boston Playford Ball. Photo by Jeffrey Bary.

Also in this issue: National Folk Organization Hosts Virtual Conference for 2021; About CDS Boston Centre; Philippe Callens Passes; Save the Date: 2021 Annual General Meeting; Virtual Events Listings

Digitalbeing: Visual Aesthetic Expression Through Dance

DigitalBeing – Using the Environment as an Expressive Medium for Dance. Magy Seif El-Nasr (Penn State University, [email protected]) and Thanos Vasilakos (University of Thessaly, [email protected]).

Abstract: Dancers express their feelings and moods through gestures and body movements. We seek to extend this mode of expression by dynamically and automatically adjusting music and lighting in the dance environment to reflect the dancer’s arousal state. Our intention is to offer a space that performance artists can use as a creative tool that extends the grammar of dance. To enable the dynamic manipulation of lighting and music, the performance space will be augmented with several sensors: physiological sensors worn by a dancer to measure her arousal state, as well as pressure sensors installed in a floor mat to track the dancers’ locations and movements. Data from these sensors will be passed to a three-layered architecture. Layer 1 is composed of a sensor analysis system that analyzes and synthesizes physiological and pressure sensor signals. Layer 2 is composed of intelligent systems that adapt lighting and music to portray the dancer’s arousal state. The intelligent on-stage lighting system dynamically adjusts on-stage lighting direction and color. The intelligent virtual lighting system dynamically adapts virtual lighting in the projected imagery. The intelligent music system dynamically and unobtrusively adjusts the music. Layer 3 translates the high-level adjustments made by the intelligent systems in layer 2 to appropriate lighting board, image rendering, and audio box commands. In this paper, we will describe this architecture in detail as well as the equipment and control systems used.
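The three layers named in this abstract can be pictured as a small pipeline. The Python sketch below is only an illustration under stated assumptions, not the authors' system: every name (`SensorFrame`, `analyze_sensors`, `adapt`, `to_commands`), the arousal formula, the numeric ranges, and the command strings are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple


@dataclass
class SensorFrame:
    """One reading from a hypothetical sensor rig (all fields are assumptions)."""
    heart_rate_bpm: float
    skin_conductance: float            # assumed to be normalized to 0..1
    mat_pressures: List[List[float]]   # non-empty floor-mat grid, one value per cell


@dataclass
class DancerState:
    arousal: float                     # 0 = calm, 1 = highly aroused
    position: Tuple[int, int]          # (row, col) of the strongest mat pressure


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def analyze_sensors(frame: SensorFrame) -> DancerState:
    """Layer 1: fuse physiological and pressure signals into one state."""
    # Assumed mapping: 60 bpm -> 0.0 and 180 bpm -> 1.0.
    hr = clamp01((frame.heart_rate_bpm - 60.0) / 120.0)
    arousal = clamp01(0.5 * hr + 0.5 * clamp01(frame.skin_conductance))
    cells = [
        (value, (r, c))
        for r, row in enumerate(frame.mat_pressures)
        for c, value in enumerate(row)
    ]
    _, position = max(cells, key=lambda cell: cell[0])
    return DancerState(arousal, position)


def adapt(state: DancerState) -> Dict[str, Any]:
    """Layer 2: map the state to high-level lighting and music settings."""
    return {
        "stage_light_warmth": state.arousal,
        "stage_light_focus": state.position,
        "virtual_light_intensity": 0.3 + 0.7 * state.arousal,
        "music_tempo_scale": 0.8 + 0.4 * state.arousal,
    }


def to_commands(settings: Dict[str, Any]) -> List[str]:
    """Layer 3: translate settings into made-up device command strings."""
    row, col = settings["stage_light_focus"]
    return [
        f"LIGHTBOARD warmth={settings['stage_light_warmth']:.2f} focus={row},{col}",
        f"RENDER intensity={settings['virtual_light_intensity']:.2f}",
        f"AUDIO tempo_scale={settings['music_tempo_scale']:.2f}",
    ]


def run_pipeline(frame: SensorFrame) -> List[str]:
    return to_commands(adapt(analyze_sensors(frame)))
```

Keeping each layer as a separate function mirrors the separation the abstract describes: the analysis step could be swapped for a different sensor model without touching the code that emits device commands.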

Movement Research: Exploring Liminality of Dance

Lawrence University, Lux. Lawrence University Honors Projects, 6-2020. Movement Research: Exploring Liminality of Dance. Michele D. Haeberlin, Lawrence University. Follow this and additional works at: https://lux.lawrence.edu/luhp. Part of the Arts and Humanities Commons. © Copyright is owned by the author of this document.

Recommended Citation: Haeberlin, Michele D., "Movement Research: Exploring Liminality of Dance" (2020). Lawrence University Honors Projects. 149. https://lux.lawrence.edu/luhp/149

This Honors Project is brought to you for free and open access by Lux. It has been accepted for inclusion in Lawrence University Honors Projects by an authorized administrator of Lux. For more information, please contact [email protected].

Advisor: Professor Paek. Lawrence University, May 2020.

Acknowledgements: I cannot possibly count all the wonderful people who have helped contribute to this in such a small space, but to those who have made space to dance in such a time as this: my sincere gratitude. Every single person who sent in a video has an important role in this project becoming what it is, and it would not be the same without all of your beautiful submissions. To everyone at Movement Research, thank you for allowing me to play and explore dance in an environment as wonderfully dynamic as New York City with you. A heartfelt thank you to George and Marjorie Chandler, of the Chandler Senior Experience Grant, who helped me achieve my dreams. Amongst the many givers to my project’s completion would be my wonderful friends who have bounced ideas for me and kept me sane mentally: Amanda Chin and Rebecca Tibbets.

Vinylnews 03-04-2020 Bis 05-06-2020.Xlsx

| Artist | Title | Units | Media | Genre | Release |
|---|---|---|---|---|---|
| ABOMINATION | FINAL WAR -COLOURED- | 1 | LP | STM | 17.04.2020 |
| ABRAMIS BRAMA | SMAKAR SONDAG -REISSUE- | 2 | LP | HR. | 29.05.2020 |
| ABSYNTHE MINDED | RIDDLE OF THE.. -LP+CD- | 2 | LP | POP | 08.05.2020 |
| ABWARTS | COMPUTERSTAAT / AMOK KOMA | 1 | LP | PUN | 15.05.2020 |
| ABYSMAL DAWN | PHYLOGENESIS -COLOURED- | 1 | LP | STM | 17.04.2020 |
| ABYSMAL DAWN | PHYLOGENESIS -GATEFOLD- | 1 | LP | STM | 17.04.2020 |
| AC/DC | WE SALUTE YOU -COLOURED- | 1 | LP | ROC | 10.04.2020 |
| ACACIA STRAIN | IT COMES IN.. -GATEFOLD- | 1 | LP | HC. | 10.04.2020 |
| ACARASH | DESCEND TO PURITY | 1 | LP | HM. | 29.05.2020 |
| ACCEPT | STALINGRAD-DELUXE/LTD/HQ- | 2 | LP | HM. | 29.05.2020 |
| ACDA & DE MUNNIK | GROETEN UIT.. -COLOURED- | 1 | LP | POP | 05.06.2020 |
| ACHERONTAS | PSYCHICDEATH - SHATTERING | 2 | LP | BLM | 01.05.2020 |
| ACHERONTAS | PSYCHICDEATH.. -COLOURED- | 2 | LP | BLM | 01.05.2020 |
| ACHT EIMER HUHNERHERZEN | ALBUM | 1 | LP | PUN | 27.04.2020 |
| ACID BABY JESUS | 7-SELECTED OUTTAKES -EP- | 1 | 12in | GAR | 15.05.2020 |
| ACID MOTHERS TEMPLE | REVERSE OF.. -LTD- | 1 | LP | ROC | 24.04.2020 |
| ACID MOTHERS TEMPLE & THE | CHOSEN STAR CHILD`S.. | 1 | LP | ROC | 24.04.2020 |
| ACID TONGUE | BULLIES | 1 | LP | GAR | 08.05.2020 |
| ACXDC | SATAN IS KING | 1 | LP | PUN | 15.05.2020 |
| ADDY | ECLIPSE -DOWNLOAD- | 1 | LP | ROC | 01.05.2020 |
| ADULT | PERCEPTION IS/AS/OF.. | 1 | LP | ELE | 10.04.2020 |
| ADULT | PERCEPTION.. -COLOURED- | 1 | LP | ELE | 10.04.2020 |
| AESMAH | WALKING OFF THE HORIZON | 2 | LP | ROC | 24.04.2020 |
| AGATHOCLES & WEEDEOUS HOL | SELF.. -COLOURED- | 1 | LP | M_A | 22.05.2020 |
| AGENT ORANGE | LIVING IN DARKNESS -RSD- | 1 | LP | PUN | 18.04.2020 |
| AGENTS OF TIME | MUSIC MADE PARADISE | 1 | 12in | ELE | 10.04.2020 |
| AIRBORNE TOXIC EVENT | HOLLYWOOD PARK -HQ- | 2 | LP | ROC | 08.05.2020 |
| AIRSTREAM FUTURES | LE FEU ET LE SABLE | 1 | LP | ROC | 24.04.2020 |
| ALBINI, STEVE & ALISON CH | MUSIC FROM THE FILM. | | | | |

No. 22 How Did North Korean Dance Notation Make Its Way to South

School of Oriental and African Studies, University of London. SOAS-AKS Working Papers in Korean Studies, No. 22. How did North Korean dance notation make its way to South Korea’s bastion of traditional arts, the National Gugak Center? Keith Howard (SOAS, University of London). http://www.soas.ac.uk/japankorea/research/soas-aks-papers/ © 2012

In December 2009, the National Gugak Center published a notation for the dance for court sacrificial rites (aak ilmu). As the thirteenth volume in a series of dance notations begun back in 1988 this seems, at first glance, innocuous. The dance had been discussed in relation to the music and dance at the Rite to Confucius (Munmyo cheryeak) in the 1493 treatise, Akhak kwebŏm (Guide to the Study of Music), and had also, as part of Chongmyo cheryeak, been used in the Rite to Royal Ancestors. Revived in 1923 during the Japanese colonial period by members of the court music institute, then known as the Yiwangjik Aakpu (Yi Kings’ Court Music Institute), the memories and practice of former members of that institute ensured that the music and dance to both rites would be recognised as intangible cultural heritage within the post-liberation Republic of Korea (South Korea), with Chongmyo cheryeak appointed Important Intangible Cultural Property (Chungyo muhyŏng munhwajae) 1 in December 1964 and a UNESCO Masterpiece of the Oral and Intangible Heritage in 2001, and the entire Confucian rite (Sŏkchŏn taeje) as Intangible Cultural Property 85 in November 1986. In fact, the director general of the National Gugak Center, Pak Ilhun, in a preface to volume thirteen, notes how Sŏng Kyŏngnin (1911–2008), Kim Kisu (1917–1986) and others who had been members of the former institute, and who in the 1960s were appointed ‘holders’ (poyuja) for Intangible Cultural Property 1, taught the dance for sacrificial rites to students at the National Traditional Music High School in 1980.

ICTM Abstracts Final2

ABSTRACTS FOR THE 45th ICTM WORLD CONFERENCE, BANGKOK, 11–17 JULY 2019

THURSDAY, 11 JULY 2019

IA KEYNOTE ADDRESS

Jarernchai Chonpairot (Mahasarakham University). Transborder Theories and Paradigms in Ethnomusicological Studies of Folk Music: Visions for Mo Lam in Mainland Southeast Asia

This talk explores the nature and identity of traditional music, principally khaen music and lam performing arts in northeastern Thailand (Isan) and Laos. Mo lam refers to an expert of lam singing who is routinely accompanied by a mo khaen, a skilled player of the bamboo panpipe. During 1972 and 1973, Dr. Chonpairot conducted field studies on Mo lam in northeast Thailand and Laos with Dr. Terry E. Miller. For many generations, Laotian and Thai villagers have crossed the national border constituted by the Mekong River to visit relatives and to participate in regular festivals. However, Chonpairot and Miller’s fieldwork took place during the final stages of the Vietnam War which had begun more than a decade earlier. During their fieldwork they collected cassette recordings of lam singing from Laotian radio stations in Vientiane and Savannakhet. Chonpairot also conducted fieldwork among Laotian artists living in Thai refugee camps. After the Vietnam War ended, many more Laotians who had worked for the Americans fled to Thai refugee camps. Chonpairot delineated Mo lam regional melodies coupled to specific identities in each locality of the music’s origin. He chose Lam Khon Savan from southern Laos for his dissertation topic, and also collected data from senior Laotian mo lam tradition-bearers then resident in the United States and France. These became his main informants.