Physical Security Systems Assessment Guide, Dec 2016

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Standard Review Plan 13.6.2 Physical Security

NUREG-0800 U.S. NUCLEAR REGULATORY COMMISSION STANDARD REVIEW PLAN 13.6.2 PHYSICAL SECURITY - DESIGN CERTIFICATION REVIEW RESPONSIBILITIES Primary – Office of Nuclear Security and Incident Response Secondary – None I. AREAS OF REVIEW For design certification (DC) applications, the review involves the evaluation of the physical security program design, to include those physical protection and mitigative measure elements identified to be within the scope of an applicant’s design. The review must include the required physical security elements of a DC application and may also include a review of the voluntarily submitted physical protection and mitigative measure elements. DRAFT - August 2007 USNRC STANDARD REVIEW PLAN This Standard Review Plan, NUREG-0800, has been prepared to establish criteria that the U.S. Nuclear Regulatory Commission staff responsible for the review of applications to construct and operate nuclear power plants intends to use in evaluating whether an applicant/licensee meets the NRC's regulations. The Standard Review Plan is not a substitute for the NRC's regulations, and compliance with it is not required. However, an applicant is required to identify differences between the design features, analytical techniques, and procedural measures proposed for its facility and the SRP acceptance criteria and evaluate how the proposed alternatives to the SRP acceptance criteria provide an acceptable method of complying with the NRC regulations. The standard review plan sections are numbered in accordance with corresponding sections in Regulatory Guide 1.70, "Standard Format and Content of Safety Analysis Reports for Nuclear Power Plants (LWR Edition)." Not all sections of Regulatory Guide 1.70 have a corresponding review plan section. -

Designing Physical Security Monitoring for Water Quality Surveillance and Response Systems

United States Environmental Protection Agency Designing Physical Security Monitoring For Water Quality Surveillance and Response Systems Office of Water (AWBERC, MS 140) EPA 817-B-17-001 September 2017 Disclaimer The Water Security Division of the Office of Ground Water and Drinking Water has reviewed and approved this document for publication. This document does not impose legally binding requirements on any party. The information in this document is intended solely to recommend or suggest and does not imply any requirements. Neither the U.S. Government nor any of its employees, contractors or their employees make any warranty, expressed or implied, or assumes any legal liability or responsibility for any third party’s use of any information, product or process discussed in this document, or represents that its use by such party would not infringe on privately owned rights. Mention of trade names or commercial products does not constitute endorsement or recommendation for use. Version History: The 2019 version is the second release of the document, originally published in September 2017. This release includes updated component names (Enhanced Security Monitoring was changed to Physical Security Monitoring and Consequence Management was changed to Water Contamination Response), an updated version of Figure 1.1 that reflects the component name changes and includes the Advanced Metering Infrastructure component, an updated Glossary, updated target capabilities, and updated links to external resources. “Enhanced” was replaced with “Physical” in this document to avoid any implication of a baseline standard, and better describe the type of security. Questions concerning this document should be addressed to [email protected] or the following contacts: Nelson Mix U.S. -

Standards for Building Materials, Equipment and Systems Used in Detention and Correctional Facilities

NBSIR 87-3687 Standards for Building Materials, Equipment and Systems Used in Detention and Correctional Facilities Robert D. Dikkers Belinda C. Reeder U.S. DEPARTMENT OF COMMERCE National Bureau of Standards National Engineering Laboratory Center for Building Technology Building Environment Division Gaithersburg, MD 20899 November 1987 Prepared for: U.S. Department of Justice National Institute of Corrections Washington, DC 20534 U.S. DEPARTMENT OF COMMERCE, C. William Verity, Secretary NATIONAL BUREAU OF STANDARDS, Ernest Ambler, Director TABLE OF CONTENTS Preface; Acknowledgements; Executive Summary; I. INTRODUCTION: A. Background; B. Objectives and Scope of NBS Study. II. FACILITY DESIGN AND CONSTRUCTION: A. Facility Development Process (1. Needs Assessment; 2. Master Plan; 3. Mission Statement; 4. Architectural Program; 5. Schematic Design and Design Development; 6. Construction); B. Security Levels; C. ACA Standards. III. MATERIALS, EQUIPMENT AND SYSTEMS: A. Introduction; B. Performance Problems; C. Available Standards/Guide Specifications; D. Perimeter Systems

Physical Access Control Systems (PACS) Customer Ordering Guide

Physical Access Control Systems (PACS) Customer Ordering Guide Revised January 2017 Table of Contents: Purpose; Background; Recent Policy Announcements; What is PACS?; As an end-user agency, where do I start and what steps are involved?; Where do I purchase PACS Solutions from GSA?; How do I purchase a PACS Solution using GSA eBuy?; Frequently Asked Questions (FAQs); GSA Points of Contact for PACS; Reference Documents; Sample Statement of Work (SOW). Purpose The purpose of this document is to create a comprehensive ordering guide that assists ordering agencies, particularly contracting officers, to

Security Industry Monitor March 2014

Security Industry Monitor March 2014 For additional information on our Security Team, please contact: John E. Mack III Co-head, Investment Banking Group Head of Mergers and Acquisitions (310) 246-3705 [email protected] Michael McManus Managing Director, Investment Banking Group (310) 246-3702 [email protected] PLEASE SEE IMPORTANT DISCLOSURES ON LAST PAGE About Imperial Capital, LLC Imperial Capital is a full-service investment bank offering a uniquely integrated platform of comprehensive services to institutional investors and middle market companies. We offer sophisticated sales and trading services to institutional investors and a wide range of investment banking advisory, capital markets and restructuring services to middle market corporate clients. We also provide proprietary research across an issuer’s capital structure, including bank debt, debt securities, hybrid securities, preferred and common equity and special situations claims. Our comprehensive and integrated service platform, expertise across the full capital structure, and deep industry sector knowledge enable us to provide clients with superior advisory services, capital markets insight, investment ideas and trade execution. We are quick to identify opportunities under any market conditions and we have a proven track record of offering creative, proprietary solutions to our clients. Imperial Capital’s expertise includes the following sectors: Aerospace, Defense & Government Services, Airlines & Transportation, Business Services, Consumer, Energy (Clean Energy and Traditional Energy), Financial Services, Gaming & Leisure, General Industrials, Healthcare, Homebuilding & Real Estate, Media & Telecommunications, Security & Homeland Security and Technology. Imperial Capital has three principal businesses: Investment Banking, Institutional Sales & Trading and Institutional Research. For additional information, please visit our Web site at www.imperialcapital.com. -

Turkish Journal of Computer and Mathematics Education Vol.12 No

Turkish Journal of Computer and Mathematics Education Vol.12 No. 11 (2021), 6007-6011 Research Article Image Classification For Visitors Home Security 1K.B Sri Sathya, 2Archana V, 1Assistant Professor, Dept. of CSE,[email protected] 2Deevika K, Hariharan A, Final year CSE, [email protected] [email protected] [email protected] KPR Institute of Engineering and Technology. Article History: Received: 11 January 2021; Revised: 12 February 2021; Accepted: 27 March 2021; Published online: 10 May 2021 Abstract-The actual need of the today’s organizations and residential areas is security frameworks. The security methods which were implemented on those areas are now frequently facing major issues like break-in burglar easily. And also, the major obstacle with home security is people who are being incautious about the visitors or intruders before opening the door while security alarm goes off unfortunately. As a result, to attain the desired security, there will be an additional need of particularly skilled personnel to increase the level of safety frameworks. As a human being, those personnel will likely make faults which leads to uncertainty of persons entering into the residential zones. The main motive of the project is to provide the better home security against potential harm made by intruders and unknown visitors by recognizing them with predictable accuracy. This security system consists of hardware part and software part. The image capturing is done by camera which comes under hardware part, whereas face detection, face recognition and voice notification are all will be done based on algorithms under software part. Keywords: Facial Detection and Recognition, Feature extraction, FaceNet, Home Security, SVM 1. -

HIPAA Security Standards: Physical Safeguards

HIPAA Security Series 3 Security Standards: Physical Safeguards What is the Security Series? The security series of papers will provide guidance from the Centers for Medicare & Medicaid Services (CMS) on the rule titled “Security Standards for the Protection of Electronic Protected Health Information,” found at 45 CFR Part 160 and Part 164, Subparts A and C. This rule, commonly known as the Security Rule, was adopted to implement provisions of the Health Insurance Portability and Accountability Act of 1996 (HIPAA). The series will contain seven papers, each focused on a specific topic related to the Security Rule. The papers, which cover the topics listed to the left, are designed to give HIPAA covered entities insight into the Security Rule, and assistance with implementation of the security standards. This series aims to explain specific requirements, the thought process behind those requirements, and possible ways to address the provisions. CMS recommends that covered entities read the first paper in this series, “Security 101 for Covered Entities” before reading the other papers. The first paper clarifies important Security Rule concepts that will help covered entities as they plan for implementation. This third paper in the series is devoted to the standards for Physical Safeguards and their implementation specifications and assumes the reader has a basic understanding of the Security Rule. Compliance Deadlines No later than April 20, 2005 for all covered entities except small health plans which have until no later than April 20, 2006. Topics 1. Security 101 for Covered Entities 2. Security Standards - Administrative Safeguards 3. Security Standards - Physical Safeguards 4. Security Standards - Technical Safeguards 5. Security Standards - Organizational, Policies and

How to Manage Physical Security Risk

How to Manage Physical Security Risk Table of Contents: Introduction; What Is Physical Security Exactly?; Components of Physical Security; The Importance Of Physical Security; How Physical Security Helps To Ensure Digital Safety; The Most Common Physical Security Threats; How To Mitigate Physical Security Threats; Top Tips To Maintain Physical Security At Your Workplace; Final Words. Introduction Digital security has become the watchword of the day. As we step into the new decade, the world is visibly dependent on IT infrastructure for carrying out everyday business operations. Be it monetary transactions or information exchange,

Cyber Security and Cloud Video Surveillance

CYBER SECURITY WHITE PAPER Cyber Security and Cloud Video Surveillance Eagle Eye Networks | 4611 Bee Caves Rd, #200 | Austin, TX 78746 www.een.com | +1-512-473-0500 | [email protected] This paper explains why video surveillance system security can and should be more fully addressed within the industry, so that cyber security is not left as a problem for installers or customers to solve. Eagle Eye Networks is a leader in this respect, mitigating security concerns from the point of product research, development and deployment. Introduction Today’s networked video surveillance systems are vulnerable in many ways, and their cameras have been weaponized by hackers to create massive Distributed Denial of Service (DDoS) attacks on targeted systems. Figure 1 presents a timeline of recent cyber attacks and threats affecting Internet-connected security cameras and digital video recorders (DVRs). [Figure 1. Timeline: escalating cyber attacks on security video cameras and DVRs.] Securing today’s networked video systems can be a complex and difficult technical challenge. However, especially for small and medium size businesses, it doesn’t have to be that way. Video systems and equipment can be purpose-built to constitute a pre-hardened and more easily securable system, in contrast to the current installed base of networked video technology. In September of 2016, a large French web-hosting provider reported a record-breaking 1-terabit-per-second DDoS attack against their web servers, unleashed by a collection of more than 145 thousand hacked Internet-connected video cameras and digital video recorders.

Physical Security Models, Philosophies, and Context

Journal of International Information Management Volume 10 Issue 2 Article 9 2001 Physical security models, philosophies, and context Karen A. Forcht James Madison University S. E. Kruck James Madison University Follow this and additional works at: https://scholarworks.lib.csusb.edu/jiim Part of the Management Information Systems Commons Recommended Citation Forcht, Karen A. and Kruck, S. E. (2001) "Physical security models, philosophies, and context," Journal of International Information Management: Vol. 10 : Iss. 2 , Article 9. Available at: https://scholarworks.lib.csusb.edu/jiim/vol10/iss2/9 This Article is brought to you for free and open access by CSUSB ScholarWorks. It has been accepted for inclusion in Journal of International Information Management by an authorized editor of CSUSB ScholarWorks. For more information, please contact [email protected]. ABSTRACT This paper presents physical security of a computer facility within the context of a corporate environment. The context is established from several different perspectives. It first presents physical security philosophies and illustrates the philosophies via the Onion and Garlic Models. It defines a process for identifying and describing transition strategies between security levels. Once the models are defined, a Macro View of physical security is presented. This view discusses physical security goals and critical factors such as budget, monitoring and redundancy. With this context established, the Micro View is presented. Its focus is on information technology (IT) facilities that protect centralized or clustered IT resources.

AR 190-13 The Army Physical Security Program

Army Regulation 190–13 Military Police The Army Physical Security Program Headquarters Department of the Army Washington, DC 30 September 1993 Unclassified SUMMARY of CHANGE AR 190–13 The Army Physical Security Program This revision- o Consolidates AR 190-13 and AR 15-15, Department of the Army Physical Security Review Board (DAPSRB), and incorporates policy on the purpose, function, composition of the DAPSRB (chap 7). o Addresses command responsibility for a crime prevention program (para 2-2). o Revises DA Form 4261 and DA Form 4261-1 (Physical Security Inspector Identification Card) (paras 3-1 and 3-2). o Redesignates site surveys as security engineering surveys. Modifies Physical Security Equipment Management Program objectives (para 2-14). o Identifies the establishment, purpose, functions, and composition of the Department of the Army (DA) Physical Security Equipment Action Group (PSEAG) (para 4-4). o Redesignates the Product Manager for Physical Security Equipment (PM-PSE) to the Physical Security Equipment Manager, Physical Security Equipment Management Office (PSEMO) (para 4-5). o Adds an outline of the establishment and specific functions of the Physical Security Equipment Working Group (para 4-6). o Addresses intrusion detection systems (IDS) by: revising the priority for installation of IDS based on the level of security needed (para 4-9); discussing planning for IDS (para 4-15); establishing new priorities and priority codes for the IDS (table 4-1). o Outlines security force procedures, inspections, and security patrol plans (chap 8). o Authorizes exact replication of any Department of the Army and Department of Defense forms that are prescribed in this regulation and are generated by the automated Military Police Management Information System in place of the official printed version of the forms (app A). -

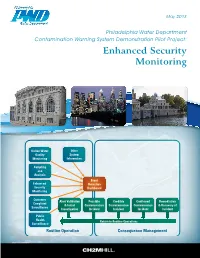

Enhanced Security Monitoring

May 2013 Philadelphia Water Department Contamination Warning System Demonstration Pilot Project: Enhanced Security Monitoring [Figure: contamination warning system overview. Monitoring components (Online Water Quality Monitoring, Sampling and Analysis, Enhanced Security Monitoring, Customer Complaint Surveillance, Public Health Surveillance, Other System Information) feed an Event Detection Dashboard; Consequence Management runs from Alert Validation & Initial Investigation through Possible, Credible, and Confirmed Contamination Incidents to Remediation & Recovery of Incident and Return to Routine Operations.] EPA Disclaimer Although the information in this document has been funded wholly or in part by the United States Environmental Protection Agency under the Water Security Initiative program, it may not necessarily reflect the views of the agency and no official endorsement should be inferred. When referencing this white paper in another document, please use the following citation: Philadelphia Water Department and CH2M HILL. May 2013. Philadelphia Water Department Contamination Warning System Demonstration Pilot Project: Enhanced Security Monitoring. White Paper Submitted to EPA as part of the Water Security Initiative Grant Awarded to Philadelphia Water Department. This paper can also be downloaded from www.ch2mhill.com/iws. Acknowledgments The Philadelphia Water Department would like to recognize the following individuals and organizations for their assistance and contributions in the development of this document: EPA Water Security Division Brian Pickard, PE,