Lecture Notes on Statistical Mechanics & Thermodynamics

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

On Entropy, Information, and Conservation of Information

entropy Article On Entropy, Information, and Conservation of Information Yunus A. Çengel Department of Mechanical Engineering, University of Nevada, Reno, NV 89557, USA; [email protected] Abstract: The term entropy is used in different meanings in different contexts, sometimes in contradic- tory ways, resulting in misunderstandings and confusion. The root cause of the problem is the close resemblance of the defining mathematical expressions of entropy in statistical thermodynamics and information in the communications field, also called entropy, differing only by a constant factor with the unit ‘J/K’ in thermodynamics and ‘bits’ in the information theory. The thermodynamic property entropy is closely associated with the physical quantities of thermal energy and temperature, while the entropy used in the communications field is a mathematical abstraction based on probabilities of messages. The terms information and entropy are often used interchangeably in several branches of sciences. This practice gives rise to the phrase conservation of entropy in the sense of conservation of information, which is in contradiction to the fundamental increase of entropy principle in thermody- namics as an expression of the second law. The aim of this paper is to clarify matters and eliminate confusion by putting things into their rightful places within their domains. The notion of conservation of information is also put into a proper perspective. Keywords: entropy; information; conservation of information; creation of information; destruction of information; Boltzmann relation Citation: Çengel, Y.A. On Entropy, 1. Introduction Information, and Conservation of One needs to be cautious when dealing with information since it is defined differently Information. Entropy 2021, 23, 779. -

Temperature Dependence of Breakdown and Avalanche Multiplication in In0.53Ga0.47As Diodes and Heterojunction Bipolar Transistors

This is a repository copy of Temperature dependence of breakdown and avalanche multiplication in In0.53Ga0.47As diodes and heterojunction bipolar transistors . White Rose Research Online URL for this paper: http://eprints.whiterose.ac.uk/896/ Article: Yee, M., Ng, W.K., David, J.P.R. et al. (3 more authors) (2003) Temperature dependence of breakdown and avalanche multiplication in In0.53Ga0.47As diodes and heterojunction bipolar transistors. IEEE Transactions on Electron Devices, 50 (10). pp. 2021-2026. ISSN 0018-9383 https://doi.org/10.1109/TED.2003.816553 Reuse Unless indicated otherwise, fulltext items are protected by copyright with all rights reserved. The copyright exception in section 29 of the Copyright, Designs and Patents Act 1988 allows the making of a single copy solely for the purpose of non-commercial research or private study within the limits of fair dealing. The publisher or other rights-holder may allow further reproduction and re-use of this version - refer to the White Rose Research Online record for this item. Where records identify the publisher as the copyright holder, users can verify any specific terms of use on the publisher’s website. Takedown If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request. [email protected] https://eprints.whiterose.ac.uk/ IEEE TRANSACTIONS ON ELECTRON DEVICES, VOL. 50, NO. 10, OCTOBER 2003 2021 Temperature Dependence of Breakdown and Avalanche Multiplication in InHXSQGaHXRUAs Diodes and Heterojunction Bipolar Transistors M. -

ENERGY, ENTROPY, and INFORMATION Jean Thoma June

ENERGY, ENTROPY, AND INFORMATION Jean Thoma June 1977 Research Memoranda are interim reports on research being conducted by the International Institute for Applied Systems Analysis, and as such receive only limited scientific review. Views or opinions contained herein do not necessarily represent those of the Institute or of the National Member Organizations supporting the Institute. PREFACE This Research Memorandum contains the work done during the stay of Professor Dr.Sc. Jean Thoma, Zug, Switzerland, at IIASA in November 1976. It is based on extensive discussions with Professor HAfele and other members of the Energy Program. Al- though the content of this report is not yet very uniform because of the different starting points on the subject under consideration, its publication is considered a necessary step in fostering the related discussion at IIASA evolving around th.e problem of energy demand. ABSTRACT Thermodynamical considerations of energy and entropy are being pursued in order to arrive at a general starting point for relating entropy, negentropy, and information. Thus one hopes to ultimately arrive at a common denominator for quanti- ties of a more general nature, including economic parameters. The report closes with the description of various heating appli- cation.~and related efficiencies. Such considerations are important in order to understand in greater depth the nature and composition of energy demand. This may be highlighted by the observation that it is, of course, not the energy that is consumed or demanded for but the informa- tion that goes along with it. TABLE 'OF 'CONTENTS Introduction ..................................... 1 2 . Various Aspects of Entropy ........................2 2.1 i he no me no logical Entropy ........................ -

Lecture 2 the First Law of Thermodynamics (Ch.1)

Lecture 2 The First Law of Thermodynamics (Ch.1) Outline: 1. Internal Energy, Work, Heating 2. Energy Conservation – the First Law 3. Quasi-static processes 4. Enthalpy 5. Heat Capacity Internal Energy The internal energy of a system of particles, U, is the sum of the kinetic energy in the reference frame in which the center of mass is at rest and the potential energy arising from the forces of the particles on each other. system Difference between the total energy and the internal energy? boundary system U = kinetic + potential “environment” B The internal energy is a state function – it depends only on P the values of macroparameters (the state of a system), not on the method of preparation of this state (the “path” in the V macroparameter space is irrelevant). T A In equilibrium [ f (P,V,T)=0 ] : U = U (V, T) U depends on the kinetic energy of particles in a system and an average inter-particle distance (~ V-1/3) – interactions. For an ideal gas (no interactions) : U = U (T) - “pure” kinetic Internal Energy of an Ideal Gas f The internal energy of an ideal gas U = Nk T with f degrees of freedom: 2 B f ⇒ 3 (monatomic), 5 (diatomic), 6 (polyatomic) (here we consider only trans.+rotat. degrees of freedom, and neglect the vibrational ones that can be excited at very high temperatures) How does the internal energy of air in this (not-air-tight) room change with T if the external P = const? f ⎡ PV ⎤ f U =Nin room k= T Bin⎢ N room = ⎥ = PV 2 ⎣ kB T⎦ 2 - does not change at all, an increase of the kinetic energy of individual molecules with T is compensated by a decrease of their number. -

Lecture 4: 09.16.05 Temperature, Heat, and Entropy

3.012 Fundamentals of Materials Science Fall 2005 Lecture 4: 09.16.05 Temperature, heat, and entropy Today: LAST TIME .........................................................................................................................................................................................2� State functions ..............................................................................................................................................................................2� Path dependent variables: heat and work..................................................................................................................................2� DEFINING TEMPERATURE ...................................................................................................................................................................4� The zeroth law of thermodynamics .............................................................................................................................................4� The absolute temperature scale ..................................................................................................................................................5� CONSEQUENCES OF THE RELATION BETWEEN TEMPERATURE, HEAT, AND ENTROPY: HEAT CAPACITY .......................................6� The difference between heat and temperature ...........................................................................................................................6� Defining heat capacity.................................................................................................................................................................6� -

PHYS 596 Team 1

Braun, S., Ronzheimer, J., Schreiber, M., Hodgman, S., Rom, T., Bloch, I. and Schneider, U. (2013). Negative Absolute Temperature for Motional Degrees of Freedom. Science, 339(6115), pp.52-55. PHYS 596 Team 1 Shreya, Nina, Nathan, Faisal What is negative absolute temperature? • An ensemble of particles is said to have negative absolute temperature if higher energy states are more likely to be occupied than lower energy states. −퐸푖/푘푇 푃푖 ∝ 푒 • If high energy states are more populated than low energy states, entropy decreases with energy. 1 휕푆 ≡ 푇 휕퐸 Negative absolute temperature Previous work on negative temperature • The first experiment to measure negative temperature was performed at Harvard by Purcell and Pound in 1951. • By quickly reversing the magnetic field acting on a nuclear spin crystal, they produced a sample where the higher energy states were more occupied. • Since then negative temperature ensembles in spin systems have been produced in other ways. Oja and Lounasmaa (1997) gives a comprehensive review. Negative temperature for motional degrees of freedom • For the probability distribution of a negative temperature ensemble to be normalizable, we need an upper bound in energy. • Since localized spin systems have a finite number of energy states there is a natural upper bound in energy. • This is hard to achieve in systems with motional degrees of freedom since kinetic energy is usually not bounded from above. • Braun et al (2013) achieves exactly this with bosonic cold atoms in an optical lattice. What is the point? • At thermal equilibrium, negative temperature implies negative pressure. • This is relevant to models of dark energy and cosmology based on Bose-Einstein condensation. -

Einstein Solid 1 / 36 the Results 2

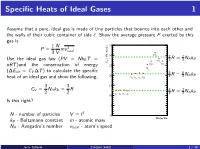

Specific Heats of Ideal Gases 1 Assume that a pure, ideal gas is made of tiny particles that bounce into each other and the walls of their cubic container of side `. Show the average pressure P exerted by this gas is 1 N 35 P = mv 2 3 V total SO 30 2 7 7 (J/K-mole) R = NAkB Use the ideal gas law (PV = NkB T = V 2 2 CO2 C H O CH nRT )and the conservation of energy 25 2 4 Cl2 (∆Eint = CV ∆T ) to calculate the specific 5 5 20 2 R = 2 NAkB heat of an ideal gas and show the following. H2 N2 O2 CO 3 3 15 CV = NAkB = R 3 3 R = NAkB 2 2 He Ar Ne Kr 2 2 10 Is this right? 5 3 N - number of particles V = ` 0 Molecule kB - Boltzmann constant m - atomic mass NA - Avogadro's number vtotal - atom's speed Jerry Gilfoyle Einstein Solid 1 / 36 The Results 2 1 N 2 2 N 7 P = mv = hEkini 2 NAkB 3 V total 3 V 35 30 SO2 3 (J/K-mole) V 5 CO2 C N k hEkini = NkB T H O CH 2 A B 2 25 2 4 Cl2 20 H N O CO 3 3 2 2 2 3 CV = NAkB = R 2 NAkB 2 2 15 He Ar Ne Kr 10 5 0 Molecule Jerry Gilfoyle Einstein Solid 2 / 36 Quantum mechanically 2 E qm = `(` + 1) ~ rot 2I where l is the angular momen- tum quantum number. -

Invention, Intension and the Limits of Computation

Minds & Machines { under review (final version pre-review, Copyright Minds & Machines) Invention, Intension and the Limits of Computation Hajo Greif 30 June, 2020 Abstract This is a critical exploration of the relation between two common as- sumptions in anti-computationalist critiques of Artificial Intelligence: The first assumption is that at least some cognitive abilities are specifically human and non-computational in nature, whereas the second assumption is that there are principled limitations to what machine-based computation can accomplish with respect to simulating or replicating these abilities. Against the view that these putative differences between computation in humans and machines are closely re- lated, this essay argues that the boundaries of the domains of human cognition and machine computation might be independently defined, distinct in extension and variable in relation. The argument rests on the conceptual distinction between intensional and extensional equivalence in the philosophy of computing and on an inquiry into the scope and nature of human invention in mathematics, and their respective bearing on theories of computation. Keywords Artificial Intelligence · Intensionality · Extensionality · Invention in mathematics · Turing computability · Mechanistic theories of computation This paper originated as a rather spontaneous and quickly drafted Computability in Europe conference submission. It grew into something more substantial with the help of the CiE review- ers and the author's colleagues in the Philosophy of Computing Group at Warsaw University of Technology, Paula Quinon and Pawe lStacewicz, mediated by an entry to the \Caf´eAleph" blog co-curated by Pawe l. Philosophy of Computing Group, International Center for Formal Ontology (ICFO), Warsaw University of Technology E-mail: [email protected] https://hajo-greif.net; https://tinyurl.com/philosophy-of-computing 2 H. -

Limits on Efficient Computation in the Physical World

Limits on Efficient Computation in the Physical World by Scott Joel Aaronson Bachelor of Science (Cornell University) 2000 A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the GRADUATE DIVISION of the UNIVERSITY of CALIFORNIA, BERKELEY Committee in charge: Professor Umesh Vazirani, Chair Professor Luca Trevisan Professor K. Birgitta Whaley Fall 2004 The dissertation of Scott Joel Aaronson is approved: Chair Date Date Date University of California, Berkeley Fall 2004 Limits on Efficient Computation in the Physical World Copyright 2004 by Scott Joel Aaronson 1 Abstract Limits on Efficient Computation in the Physical World by Scott Joel Aaronson Doctor of Philosophy in Computer Science University of California, Berkeley Professor Umesh Vazirani, Chair More than a speculative technology, quantum computing seems to challenge our most basic intuitions about how the physical world should behave. In this thesis I show that, while some intuitions from classical computer science must be jettisoned in the light of modern physics, many others emerge nearly unscathed; and I use powerful tools from computational complexity theory to help determine which are which. In the first part of the thesis, I attack the common belief that quantum computing resembles classical exponential parallelism, by showing that quantum computers would face serious limitations on a wider range of problems than was previously known. In partic- ular, any quantum algorithm that solves the collision problem—that of deciding whether a sequence of n integers is one-to-one or two-to-one—must query the sequence Ω n1/5 times. -

Gibbs' Paradox and the Definition of Entropy

Entropy 2008, 10, 15-18 entropy ISSN 1099-4300 °c 2008 by MDPI www.mdpi.org/entropy/ Full Paper Gibbs’ Paradox and the Definition of Entropy Robert H. Swendsen Physics Department, Carnegie Mellon University, Pittsburgh, PA 15213, USA E-Mail: [email protected] Received: 10 December 2007 / Accepted: 14 March 2008 / Published: 20 March 2008 Abstract: Gibbs’ Paradox is shown to arise from an incorrect traditional definition of the entropy that has unfortunately become entrenched in physics textbooks. Among its flaws, the traditional definition predicts a violation of the second law of thermodynamics when applied to colloids. By adopting Boltzmann’s definition of the entropy, the violation of the second law is eliminated, the properties of colloids are correctly predicted, and Gibbs’ Paradox vanishes. Keywords: Gibbs’ Paradox, entropy, extensivity, Boltzmann. 1. Introduction Gibbs’ Paradox [1–3] is based on a traditional definition of the entropy in statistical mechanics found in most textbooks [4–6]. According to this definition, the entropy of a classical system is given by the product of Boltzmann’s constant, k, with the logarithm of a volume in phase space. This is shown in many textbooks to lead to the following equation for the entropy of a classical ideal gas of distinguishable particles, 3 E S (E; V; N) = kN[ ln V + ln + X]; (1) trad 2 N where X is a constant. Most versions of Gibbs’ Paradox, including the one I give in this section, rest on the fact that Eq. 1 is not extensive. There is another version of Gibbs’ Paradox that involves the mixing of two gases, which I will discuss in the third section of this paper. -

![Arxiv:1509.06955V1 [Physics.Optics]](https://docslib.b-cdn.net/cover/4098/arxiv-1509-06955v1-physics-optics-274098.webp)

Arxiv:1509.06955V1 [Physics.Optics]

Statistical mechanics models for multimode lasers and random lasers F. Antenucci 1,2, A. Crisanti2,3, M. Ib´a˜nez Berganza2,4, A. Marruzzo1,2, L. Leuzzi1,2∗ 1 NANOTEC-CNR, Institute of Nanotechnology, Soft and Living Matter Lab, Rome, Piazzale A. Moro 2, I-00185, Roma, Italy 2 Dipartimento di Fisica, Universit`adi Roma “Sapienza,” Piazzale A. Moro 2, I-00185, Roma, Italy 3 ISC-CNR, UOS Sapienza, Piazzale A. Moro 2, I-00185, Roma, Italy 4 INFN, Gruppo Collegato di Parma, via G.P. Usberti, 7/A - 43124, Parma, Italy We review recent statistical mechanical approaches to multimode laser theory. The theory has proved very effective to describe standard lasers. We refer of the mean field theory for passive mode locking and developments based on Monte Carlo simulations and cavity method to study the role of the frequency matching condition. The status for a complete theory of multimode lasing in open and disordered cavities is discussed and the derivation of the general statistical models in this framework is presented. When light is propagating in a disordered medium, the system can be analyzed via the replica method. For high degrees of disorder and nonlinearity, a glassy behavior is expected at the lasing threshold, providing a suggestive link between glasses and photonics. We describe in details the results for the general Hamiltonian model in mean field approximation and mention an available test for replica symmetry breaking from intensity spectra measurements. Finally, we summary some perspectives still opened for such approaches. The idea that the lasing threshold can be understood as a proper thermodynamic transition goes back since the early development of laser theory and non-linear optics in the 1970s, in particular in connection with modulation instability (see, e.g.,1 and the review2). -

![Arxiv:1910.10745V1 [Cond-Mat.Str-El] 23 Oct 2019 2.2 Symmetry-Protected Time Crystals](https://docslib.b-cdn.net/cover/4942/arxiv-1910-10745v1-cond-mat-str-el-23-oct-2019-2-2-symmetry-protected-time-crystals-304942.webp)

Arxiv:1910.10745V1 [Cond-Mat.Str-El] 23 Oct 2019 2.2 Symmetry-Protected Time Crystals

A Brief History of Time Crystals Vedika Khemania,b,∗, Roderich Moessnerc, S. L. Sondhid aDepartment of Physics, Harvard University, Cambridge, Massachusetts 02138, USA bDepartment of Physics, Stanford University, Stanford, California 94305, USA cMax-Planck-Institut f¨urPhysik komplexer Systeme, 01187 Dresden, Germany dDepartment of Physics, Princeton University, Princeton, New Jersey 08544, USA Abstract The idea of breaking time-translation symmetry has fascinated humanity at least since ancient proposals of the per- petuum mobile. Unlike the breaking of other symmetries, such as spatial translation in a crystal or spin rotation in a magnet, time translation symmetry breaking (TTSB) has been tantalisingly elusive. We review this history up to recent developments which have shown that discrete TTSB does takes place in periodically driven (Floquet) systems in the presence of many-body localization (MBL). Such Floquet time-crystals represent a new paradigm in quantum statistical mechanics — that of an intrinsically out-of-equilibrium many-body phase of matter with no equilibrium counterpart. We include a compendium of the necessary background on the statistical mechanics of phase structure in many- body systems, before specializing to a detailed discussion of the nature, and diagnostics, of TTSB. In particular, we provide precise definitions that formalize the notion of a time-crystal as a stable, macroscopic, conservative clock — explaining both the need for a many-body system in the infinite volume limit, and for a lack of net energy absorption or dissipation. Our discussion emphasizes that TTSB in a time-crystal is accompanied by the breaking of a spatial symmetry — so that time-crystals exhibit a novel form of spatiotemporal order.