Combining Confocal Imaging and Descattering

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

What Is Optical Imaging?

Optical imaging uses light to interrogate cellular and molecular function in the living body, as well as in animal and plant tissue. The information is ultimately derived from tissue composition and biomolecular processes. Images are generated by using photons of light in the wavelength range from ultraviolet to near infrared. Contrast is derived through the use of: exogenous agents (e.g., dyes or probes) that provide a signal; endogenous molecules with optical signatures (e.g., NADH, hemoglobin, collagens); and reporter genes. Fluorescence Imaging: Fluorescence protein imaging uses endogenous or exogenous molecules or materials that emit light when activated by an external light source such as a laser. An external light of appropriate wavelength is used to excite a target molecule, which then fluoresces by releasing longer-wavelength, lower-energy light. Fluorescence imaging provides the ability to localize and measure gene expression, including normally expressed and aberrant genes, proteins, and other pathophysiologic processes. Other potential uses include cell trafficking, tagging superficial structures, detecting lesions, and monitoring tumor growth and response to therapy. Bioluminescent Imaging (BLI): Bioluminescent imaging uses a natural light-emitting protein such as luciferase to trace the movement of certain cells or to identify the location of specific chemical reactions within the body. Bioluminescent imaging is being applied to both gene expression and therapeutic monitoring. Optical Imaging Technologies: Near-infrared fluorescence imaging involves imaging fluorescence photons in the near-infrared range (typically 600–900 nm). A fluorochrome is excited by a lower-wavelength light source, and the emitted fluorescence is recorded at a slightly higher wavelength with a high-sensitivity charge-coupled-device (CCD) camera. -
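The excerpt's statement that fluorescence is emitted at a longer wavelength and lower energy follows from the photon energy relation E = hc/λ. A minimal sketch of that arithmetic is given here; the function name and the 650 nm / 700 nm wavelength pair are illustrative assumptions, not values from the excerpt.

```python
# Photon energy E = h * c / wavelength: a longer wavelength means lower energy.
PLANCK_J_S = 6.62607015e-34        # Planck constant in J*s (exact SI value)
LIGHT_SPEED_M_S = 299_792_458.0    # speed of light in vacuum in m/s (exact SI value)
ELECTRON_VOLT_J = 1.602176634e-19  # one electronvolt in joules (exact SI value)


def photon_energy_ev(wavelength_nm: float) -> float:
    """Return the energy of a single photon in electronvolts."""
    if wavelength_nm <= 0:
        raise ValueError("wavelength must be positive")
    wavelength_m = wavelength_nm * 1e-9
    return PLANCK_J_S * LIGHT_SPEED_M_S / wavelength_m / ELECTRON_VOLT_J


# Hypothetical excitation/emission pair, chosen only for illustration.
excitation_ev = photon_energy_ev(650.0)
emission_ev = photon_energy_ev(700.0)
print(f"excitation: {excitation_ev:.3f} eV, emission: {emission_ev:.3f} eV")
```

The emitted photon carries less energy than the exciting one, which is why the camera records the signal at a longer wavelength than the light source.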

Supplement 197: Ophthalmic Optical Coherence Tomography for Angiographic Imaging Storage SOP Classes

Digital Imaging and Communications in Medicine (DICOM) Supplement 197: Ophthalmic Optical Coherence Tomography for Angiographic Imaging Storage SOP Classes. Prepared by: DICOM Standards Committee, 1300 N. 17th Street, Suite 900, Rosslyn, Virginia 22209 USA. Final Text. VERSION: April 5, 2017. Developed pursuant to DICOM Work Item: 2016-04-A. Table of Contents: Scope and Field of Application; Changes to NEMA Standards Publication PS 3.2; Part 2: Conformance; Changes to NEMA Standards Publication PS 3.3; Part 3: Information Object Definitions; A.aa Ophthalmic Optical Coherence Tomography En Face Image Information Object Definition -

Three-Dimensional Diffuse Optical Tomography in The

Three-dimensional diffuse optical tomography in the parallel plane transmission geometry: Evaluation of a hybrid frequency domain/continuous wave clinical system for breast imaging. J. P. Culver, R. Choe, M. J. Holboke, L. Zubkov, T. Durduran, and A. Slemp, Department of Physics and Astronomy, University of Pennsylvania, Philadelphia, Pennsylvania 19104-6396. V. Ntziachristos and B. Chance, Department of Biochemistry and Biophysics, University of Pennsylvania, Philadelphia, Pennsylvania 19104-6396. A. G. Yodh, Department of Physics and Astronomy, University of Pennsylvania, Philadelphia, Pennsylvania 19104-6396. (Received 12 March 2002; accepted for publication 5 November 2002; published 23 January 2003) Three-dimensional diffuse optical tomography (DOT) of breast requires large data sets for even modest resolution (1 cm). We present a hybrid DOT system that combines a limited number of frequency domain (FD) measurements with a large set of continuous wave (cw) measurements. The FD measurements are used to quantitatively determine tissue averaged absorption and scattering coefficients. The larger cw data sets (10^5 measurements), collected with a lens coupled CCD, permit 3D DOT reconstructions of a 1-liter tissue volume. To address the computational complexity of large data sets and 3D volumes we employ finite difference based reconstructions computed in parallel. Tissue phantom measurements evaluate imaging performance. The tests include the following: point spread function measures of resolution, characterization of the size and contrast of single objects, field of view measurements and spectral characterization of constituent concentrations. We also report in vivo measurements. Average tissue optical properties of a healthy breast are used to deduce oxy- and deoxy-hemoglobin concentrations. Differential imaging with a tumor simulating target adhered to the surface of a healthy breast evaluates the influence of physiologic fluctuations on image noise. -

High-Resolution Optical Imaging: Progress and Perspectives

NEWSLINE: Molecular Imaging Summit

imaging techniques. Nonetheless, high effective tissue concentrations can be achieved using "bulk carriers," such as vesicles and macromolecular constructs in which the number of paramagnetic species can be concentrated. Susceptibility agents based on iron oxide particles have greater effective relaxivity but affect transverse relaxation rates (1/T2), which are typically 10–30 times greater than longitudinal (1/T1) rates. An alternative mechanism for affecting water MR signals is by magnetization transfer with labile protons on other species, notably amide groups in proteins or in agents designed to provide a large reservoir of exchangeable protons (such as dendrimers). The chemical exchange by saturation transfer (CEST) effect is interesting, partly because the contrast produced can be switched "on" and "off" in a controlled manner. MR contrast agents proposed to date can be classified into the following categories: (1) Nonspecific contrast agents, such as lanthanide che-

presence of specific enzymes or a change in pH or other environmental variables. (4) Cell labels, which may be either inside or attached to specific cells, that then rely on the trafficking of the cells to be localized. At high resolution in animals, single labeled cells have been detected using this approach.

Topical Issues

The potential applications of these different approaches are intriguing, but the practical success to date of detecting specific molecular targets has been limited. The prospects for achieving sufficiently high levels of contrast material to be seen reliably in clinical applications are poor compared with other modalities. Success will rely on combining improved designs for more effective relaxation agents and carrier vehicles that can deliver relatively large amounts of the agent to a target region. -

Biomedical Optical Imaging

Chapter 17. Biomedical Optical Imaging Biomedical Optical Imaging Academic Staff Professor James G. Fujimoto Research Staff and Visiting Scientists Dr. Yueli Chen, Dr. Iwona Gorczynska, Dr. Ben Potsaid, Dr. Chao Zhou Scientific Collaborators Dr. Jay S. Duker, M.D., Joseph Ho, Varsha Manjunath, (New England Eye Center) Dr. Joel Schuman, M.D. (University of Pittsburgh Medical Center) Dr. Allen Clermont, Dr. Edward Feener (Joslin Diabetes Center) Dr. Hiroshi Mashimo, M.D. (VA Medical Center) Dr. Joseph Schmitt (LightLab Imaging) Dr. David A. Boas, Lana Ruvinskaya, Dr. Anna Devor (MGH) Alex Cable, Dr. James Jiang, Dr. Ben Potsaid (Thorlabs, Inc.) Graduate Students Desmond C. Adler, Aaron D. Aguirre, M.D., Hsiang-Chieh Lee, Jonathan J. Liu, Vivek J. Srinivasan, Tsung-Han Tsai Technical and Support Staff Dorothy A. Fleischer, Donna L. Gale Research Areas and Projects 1. Optical coherence tomography (OCT) technology 1.1 Overview of Optical Coherence Tomography 1.2 Spectral / Fourier domain OCT Imaging 1.3 Swept source / Fourier domain OCT Imaging using Swept Lasers 2. OCT in Ophthalmology 2.1 OCT in Ophthalmology 2.2 Technology Ophthalmic OCT 2.3 Ultrahigh Speed Spectral / Fourier Domain OCT Retinal Imaging 2.4 Swept Source / Fourier domain OCT Imaging at 1050 nm Wavelengths 2.5 Clinical OCT Studies Quantitative OCT measurements of retinal structure En Face projection OCT Interactive Science Publication (ISP) Clinical Studies of Retinal Disease 2.6 Functional OCT Imaging in Human Retina 2.7 Small Animal Retinal Imaging 3. High Speed Three-Dimensional OCT Endoscopic Imaging 3.1 OCT imaging of the Esophagus 3.2 OCT imaging of the Lower GI Tract 3.3 Assessment of Therapies in the GI Tract 3.5 Novel contrast agents for OCT 4. -

Optical Coherence Tomography: an Overview Akshat Singh Department of Biomedical Engineering, Utkal University, Bhubaneswar, Odisha, India

Journal of Biomedical Engineering and Medical Devices, ISSN: 2475-7586. Open Access, freely available online. Perspective. Optical Coherence Tomography: An Overview. Akshat Singh, Department of Biomedical Engineering, Utkal University, Bhubaneswar, Odisha, India.

Optical coherence tomography (OCT) is a technique that uses low-coherence light to capture micrometer-resolution, two- and three-dimensional images from within optical scattering environments (e.g., biological tissue). It is employed in medical imaging and in industrial nondestructive testing (NDT). Optical coherence tomography is based on low-coherence interferometry and commonly uses near-infrared light. The use of light with a relatively long wavelength permits it to pass through the scattering material. Another optical approach, confocal microscopy, often penetrates the sample less deeply but with higher resolution.

Frequency-domain optical coherence tomography, a relatively new version of optical coherence tomography, offers an advantage in signal-to-noise ratio, allowing for faster signal capture. Since Adolf Fercher and colleagues' work in Vienna in the 1980s on low-, partial-, or white-light interferometry for in vivo ocular measurements, imaging of biological tissue, particularly the human eye, has been studied in parallel by several groups across the world. OCT is ideal for ophthalmology and other tissue imaging applications that require micrometre resolution and millimetre penetration depth. In 1993, the first in vivo OCT images of retinal structures were published, followed by the first endoscopic images in 1997. -
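The micrometre resolution mentioned in this excerpt comes from the short coherence length of the light source. As a rough sketch, the textbook estimate for a source with a Gaussian spectrum is Δz = (2 ln 2 / π) · λ₀² / (n · Δλ). That formula is a standard result and is not stated in the excerpt; the function name and the 840 nm / 50 nm source parameters below are hypothetical.

```python
import math


def oct_axial_resolution_um(center_wavelength_nm: float,
                            bandwidth_fwhm_nm: float,
                            refractive_index: float = 1.0) -> float:
    """Estimate OCT axial resolution in micrometres.

    Assumes a Gaussian source spectrum (an idealisation); real sources with
    non-Gaussian spectra will deviate from this estimate.
    """
    if min(center_wavelength_nm, bandwidth_fwhm_nm, refractive_index) <= 0:
        raise ValueError("all parameters must be positive")
    coherence_factor = 2.0 * math.log(2.0) / math.pi
    resolution_nm = (coherence_factor * center_wavelength_nm ** 2
                     / (bandwidth_fwhm_nm * refractive_index))
    return resolution_nm / 1000.0


# Hypothetical near-infrared source: 840 nm centre wavelength, 50 nm bandwidth.
print(f"{oct_axial_resolution_um(840.0, 50.0):.2f} um in air")
```

Doubling the bandwidth halves the estimate, which is why broadband sources are preferred when finer axial resolution is needed.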

Diffuse Optical Tomography Using Bayesian Filtering in the Human Brain

Applied Sciences, Article. Diffuse Optical Tomography Using Bayesian Filtering in the Human Brain. Estefania Hernandez-Martin 1,2,* and Jose Luis Gonzalez-Mora 1. 1 Department of Basic Medical Science, Faculty of Health Science, Medicine Section, Universidad de La Laguna, 38071 San Cristobal de La Laguna, Spain; [email protected] 2 Department of Biomedical Engineering, University of Southern California, Los Angeles, CA 90089, USA. * Correspondence: [email protected] Received: 11 April 2020; Accepted: 12 May 2020; Published: 14 May 2020. Abstract: The present work describes noninvasive diffuse optical tomography (DOT), a technology for measuring hemodynamic changes in the brain. These changes provide relevant information that helps us to understand the basis of neurophysiology in the human brain. Advantages such as portability, direct measurement of hemoglobin state, temporal resolution, and the lack of need to restrict movements, as is necessary in magnetic resonance imaging (MRI) devices, mean that DOT technology can be used both in research and clinically. Here, we describe the use of Bayesian methods to filter raw DOT data as an alternative to the linear filters widely used in signal processing. Common problems, such as filter selection or a false interpretation of the results, which is sometimes caused by the interference of background physiological noise with neural activity, can be avoided with this new method. Keywords: diffuse optical imaging; image reconstruction algorithms; Bayesian filtering. 1. Introduction: Functional brain imaging has provided substantial information regarding how dynamic neural processes are distributed in space and time. Some imaging modalities used to study brain function rely on functional magnetic resonance imaging (fMRI) and, although these measurements can cover the entire brain, they require costly infrastructure. -
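The excerpt does not say which Bayesian filter the authors use. As an illustration of the general idea only, the sketch below applies a scalar Kalman filter, one common Bayesian filter, to a noisy one-channel signal under an assumed random-walk model. The function name, the variances, and the synthetic data are all hypothetical.

```python
def kalman_filter_1d(measurements, process_var, measurement_var,
                     initial_mean=0.0, initial_var=1.0):
    """Filter a scalar time series with a random-walk Kalman filter.

    This is a generic Bayesian filter, not the method of the cited article.
    """
    mean, var = initial_mean, initial_var
    estimates = []
    for z in measurements:
        # Predict: the state is assumed unchanged, but uncertainty grows.
        var += process_var
        # Update: blend prediction and measurement by their uncertainties.
        gain = var / (var + measurement_var)
        mean += gain * (z - mean)
        var *= 1.0 - gain
        estimates.append(mean)
    return estimates


# Synthetic signal: a constant level of 1.0 with alternating +/-0.5 noise.
noisy = [1.5, 0.5] * 50
smoothed = kalman_filter_1d(noisy, process_var=1e-4, measurement_var=0.25)
print(f"last raw sample: {noisy[-1]}, last estimate: {smoothed[-1]:.3f}")
```

Unlike a fixed linear filter, the gain here adapts at every step to the current uncertainty, which is the property the abstract contrasts with conventional filter selection.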

A Feasibility Study

Diffuse optical tomography for breast cancer imaging guided by computed tomography: a feasibility study. Reheman Baikejiang, Wei Zhang, Changqing Li*, School of Engineering, University of California, Merced, 5200 N. Lake Road, Merced, CA, USA, 95343. Abstract. Diffuse optical tomography (DOT) has attracted attention in the last two decades due to its intrinsic sensitivity in imaging chromophores of tissues such as hemoglobin, water, and lipid. However, DOT has not been clinically accepted yet due to its low spatial resolution caused by strong optical scattering in tissues. Structural guidance provided by an anatomical imaging modality enhances the DOT imaging substantially. Here, we propose a computed tomography (CT) guided multispectral DOT imaging system for breast cancer imaging. To validate its feasibility, we have built a prototype DOT imaging system which consists of a laser at the wavelength of 650 nm and an electron multiplying charge coupled device (EMCCD) camera. We have validated the CT guided DOT reconstruction algorithms with numerical simulations and phantom experiments, in which different imaging setup parameters, such as projection number of measurements and width of measurement patch, have been investigated. Our results indicate that an air-cooled EMCCD camera is good enough for the transmission mode DOT imaging. We have also found that measurements at six angular projections are sufficient for DOT to reconstruct the optical targets with 2 and 4 times absorption contrast when the CT guidance is applied. Finally, we have described our future research plan on integration of a multispectral DOT imaging system into a breast CT scanner. Keywords: Diffuse optical tomography, multi-modality imaging, breast cancer imaging. * Changqing Li, [email protected], (209) 228-4777 1. -

Optical Tomographic Imaging for Breast Cancer Detection

Optical Tomographic Imaging for Breast Cancer Detection. Wenxiang Cong, Xavier Intes, Ge Wang, Biomedical Imaging Center, Rensselaer Polytechnic Institute, Troy, New York 12180.

Abstract: Diffuse optical breast imaging utilizes near-infrared (NIR) light propagation through tissues to assess the optical properties of tissue for the identification of abnormal tissue. This optical imaging approach is sensitive, cost-effective, and does not involve any ionizing radiation. However, the image reconstruction of diffuse optical tomography (DOT) is a nonlinear inverse problem and suffers from severe ill-posedness, especially in the cases of strong noise and incomplete data. In this paper, a novel image reconstruction method is proposed for the detection of breast cancer. This method splits the image reconstruction problem into the localization of abnormal tissues and quantification of absorption variations. The localization of abnormal tissues is performed based on a new well-posed optimization model, which can be solved via a differential evolution optimization method to achieve a stable image reconstruction.

delineate cysts, benign and malignant masses. But it is limited by poor soft tissue contrast, inherent speckle noise, and strong operator dependence [2]. The optical molecular imaging modality uses exogenous fluorescent probes as additional contrast agents that target molecules relevant to breast cancer [3]. The use of fluorescent probes has potential in early breast cancer detection, but the effectiveness of fluorescence imaging relies on the functions of the probes [4]. Molecular contrast probes on specific tumor receptors or tumor associated enzymes are also under development. Diffuse optical tomography (DOT) was introduced in the early 1990s. DOT uses near-infrared light transmission and intrinsic -
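The abstract names differential evolution as the solver for its localization step but gives no details. The sketch below is a generic DE/rand/1/bin loop in plain Python, paired with a toy localization misfit; it is not the authors' optimization model. The detector layout, the exponential signal model, and every parameter value are hypothetical and serve only to show the shape of the technique.

```python
import math
import random


def differential_evolution(objective, bounds, pop_size=20, generations=200,
                           weight=0.7, crossover=0.9, seed=0):
    """Minimise `objective` inside box `bounds` with DE/rand/1/bin."""
    rng = random.Random(seed)
    dim = len(bounds)
    population = [[rng.uniform(lo, hi) for lo, hi in bounds]
                  for _ in range(pop_size)]
    scores = [objective(p) for p in population]
    for _ in range(generations):
        for i in range(pop_size):
            # Pick three distinct members other than the current target.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            forced = rng.randrange(dim)  # at least one mutated coordinate
            trial = []
            for d in range(dim):
                if d == forced or rng.random() < crossover:
                    value = population[a][d] + weight * (
                        population[b][d] - population[c][d])
                else:
                    value = population[i][d]
                lo, hi = bounds[d]
                trial.append(min(max(value, lo), hi))  # clip to the box
            trial_score = objective(trial)
            if trial_score <= scores[i]:  # greedy selection
                population[i], scores[i] = trial, trial_score
    best = min(range(pop_size), key=scores.__getitem__)
    return population[best], scores[best]


# Hypothetical toy forward model: four detectors at the unit-square corners,
# signal decaying exponentially with distance to a single absorber.
DETECTORS = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]


def toy_signal(position):
    return [math.exp(-math.dist(position, det)) for det in DETECTORS]


def make_misfit(measured):
    def misfit(position):
        return sum((m - s) ** 2 for m, s in zip(measured, toy_signal(position)))
    return misfit
```

A call such as `differential_evolution(make_misfit(toy_signal((0.3, 0.6))), [(0.0, 1.0), (0.0, 1.0)])` searches for the absorber position that best explains the synthetic data. Because the search needs only objective values and no gradients, it suits misfit functions that are awkward to differentiate.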

Fast 3D Optical Mammography Using ICG Dynamics for Reader-Independent Lesion Differentiation Sophie Piper 1

Fast 3D Optical Mammography using ICG Dynamics for Reader-Independent Lesion Differentiation. Sophie Piper 1, P. Schneider 1, N. Volkwein 1, N. Schreiter 1, C.H. Schmitz 1,2, A. Poellinger 1. 1 Charité University Medicine Berlin; 2 NIRx Medizintechnik GmbH, Berlin. OSA Biomed, 28 April - 2 May 2012, Miami, Florida. Universitätsmedizin Berlin, [email protected]

Introduction
Why alternatives to the gold standard of X-ray mammography?
• Very reader-dependent diagnosis
• Need for a better differentiation between malignant and benign lesions
• Non-invasive (radiation-free) alternative for breast cancer screening and monitoring preferred
General benefits of optical mammography:
• Non-invasive, no radiation
• Tomographic imaging
• Functional information
• Dynamic imaging possible: ability to track changes of internal parameters (Hb, HbO etc.) or extrinsic contrast agents over time

Motivation
Fast 3D Diffuse Optical Imaging (SR > 1 Hz):
• Early bolus kinetics can now be adequately imaged
[Figure: bolus injection]
Measuring early bolus kinetics over the entire breast can help differentiate between malignant and benign or healthy breast tissue.

Fast 3D Diffuse Optical Imaging System
Optical Mammography with 1.9 Hz Temporal Resolution
• DYNOT 232 optical tomography system (NIRx Medizintechnik, Berlin, Germany / NY, USA)
• 31 co-located source/detector fibers: 961 S/D, 760 and 830 nm
• ~2 complete volume scans per second
• Reconstruction (NIRx NAVI Software) of relative absorption changes in each of 2243 FEM nodes / 14000 isometric voxels
Study Design:
• 22 patients: 14 malignant + 8 benign lesions, 3 controls
• 25 mg ICG bolus within 5-10 sec
P. -

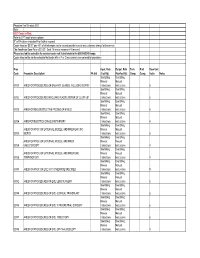

Physician Fee Schedule 2021 Note

Physician Fee Schedule 2021

Note: 2021 codes in red; refer to CPT book for descriptions. "R" in PA column indicates Prior Auth is required. Codes listed as "$0.00" pay 45% of billed amount, not to exceed provider's usual and customary charge for the service. The Anesthesia Base Rate is $15.20. Each 15 minute increment = 1 time unit. Please use lab fee schedule for covered codes not listed below in the 80000-89249 range. Codes listed on the lab fee schedule that begin with a P or Q are currently non-covered for physicians.

| Proc Code | Procedure Description | PA Ind | Inpat. Rate (Facility) | Outpat. Rate (NonFacility) | Tech. Comp. | Prof. Comp. | Base Unit Value | Notes |
|---|---|---|---|---|---|---|---|---|
| 00100 | ANES FOR PROCEDURES ON SALIVARY GLANDS, INCLUDING BIOPSY | | See Billing Manual Instructions | See Billing Manual Instructions | | | 5 | |
| 00102 | ANES FOR PROCEDURES INVOLVING PLASTIC REPAIR OF CLEFT LIP | | See Billing Manual Instructions | See Billing Manual Instructions | | | 6 | |
| 00103 | ANES FOR RECONSTRUCTIVE PROCED OF EYELID | | See Billing Manual Instructions | See Billing Manual Instructions | | | 5 | |
| 00104 | ANES FOR ELECTROCONVULSIVE THERAPY | | See Billing Manual Instructions | See Billing Manual Instructions | | | 4 | |
| 00120 | ANES FOR PROC ON EXTERNAL, MIDDLE, AND INNER EAR, INC BIOPSY | | See Billing Manual Instructions | See Billing Manual Instructions | | | 5 | |
| 00124 | ANES FOR PROC ON EXTERNAL, MIDDLE, AND INNER EAR, OTOSCOPY | | See Billing Manual Instructions | See Billing Manual Instructions | | | 4 | |
| 00126 | ANES FOR PROC ON EXTERNAL, MIDDLE, AND INNER EAR, TYMPANOTOMY | | See Billing Manual Instructions | See Billing Manual Instructions | | | 4 | |

See Billing See Billing Manual -
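The schedule's notes give a base rate of $15.20 and define one time unit as 15 minutes, but the excerpt does not spell out how the two combine; it defers to the billing manual. The sketch below assumes the conventional anesthesia formula, (base units + time units) × rate, and reads the trailing number in each row as the base unit value. Both are assumptions, and the function name is hypothetical. Durations that are not whole 15-minute increments are rejected because no rounding rule appears in the excerpt.

```python
from decimal import Decimal

ANESTHESIA_BASE_RATE = Decimal("15.20")  # dollars per unit, from the schedule note
MINUTES_PER_TIME_UNIT = 15               # "Each 15 minute increment = 1 time unit"

# Base unit values as read from the excerpt's rows (an interpretation).
BASE_UNITS = {"00100": 5, "00102": 6, "00103": 5, "00104": 4}


def anesthesia_allowance(proc_code: str, minutes: int) -> Decimal:
    """Return (base units + time units) * base rate; the formula is assumed."""
    if minutes < 0 or minutes % MINUTES_PER_TIME_UNIT != 0:
        raise ValueError("minutes must be a non-negative multiple of 15")
    time_units = minutes // MINUTES_PER_TIME_UNIT
    return (BASE_UNITS[proc_code] + time_units) * ANESTHESIA_BASE_RATE


# Example: code 00100 (5 base units) with 45 minutes (3 time units).
print(anesthesia_allowance("00100", 45))
```

`Decimal` is used rather than `float` so that currency amounts are not subject to binary rounding error.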

Review Article Optical Coherence Tomography: Basic Concepts and Applications in Neuroscience Research

Hindawi Journal of Medical Engineering Volume 2017, Article ID 3409327, 20 pages https://doi.org/10.1155/2017/3409327 Review Article Optical Coherence Tomography: Basic Concepts and Applications in Neuroscience Research Mobin Ibne Mokbul Notre Dame College, Motijheel Circular Road, Arambagh, Motijheel, Dhaka 1000, Bangladesh Correspondence should be addressed to Mobin Ibne Mokbul; [email protected] Received 26 April 2017; Revised 22 June 2017; Accepted 14 September 2017; Published 29 October 2017 Academic Editor: Nicusor Iftimia Copyright © 2017 Mobin Ibne Mokbul. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. Optical coherence tomography is a micrometer-scale imaging modality that permits label-free, cross-sectional imaging of biological tissue microstructure using tissue backscattering properties. After its invention in the 1990s, OCT is now being widely used in several branches of neuroscience as well as other fields of biomedical science. This review study reports an overview of OCT’s applications in several branches or subbranches of neuroscience such as neuroimaging, neurology, neurosurgery, neuropathology, and neuroembryology. This study has briefly summarized the recent applications of OCT in neuroscience research, including a comparison, and provides a discussion of the remaining challenges and opportunities in addition to future directions. The chief aim of the review study is to draw the attention of a broad neuroscience community in order to maximize the applications of OCT in other branches of neuroscience too, and the study may also serve as a benchmark for future OCT-based neuroscience research.