Unit Testing Best Practices: Tools for Unit Testing Success

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Practical Unit Testing for Embedded Systems Part 1 PARASOFT WHITE PAPER

Practical Unit Testing for Embedded Systems Part 1 PARASOFT WHITE PAPER Table of Contents Introduction 2 Basics of Unit Testing 2 Benefits 2 Critics 3 Practical Unit Testing in Embedded Development 3 The system under test 3 Importing uVision projects 4 Configure the C++test project for Unit Testing 6 Configure uVision project for Unit Testing 8 Configure results transmission 9 Deal with target limitations 10 Prepare test suite and first exemplary test case 12 Deploy it and collect results 12 Page 1 www.parasoft.com Introduction The idea of unit testing has been around for many years. "Test early, test often" is a mantra that concerns unit testing as well. However, in practice, not many software projects have the luxury of a decent and up-to-date unit test suite. This may change, especially for embedded systems, as the demand for delivering quality software continues to grow. International standards, like IEC-61508-3, ISO/DIS-26262, or DO-178B, demand module testing for a given functional safety level. Unit testing at the module level helps to achieve this requirement. Yet, even if functional safety is not a concern, the cost of a recall—both in terms of direct expenses and in lost credibility—justifies spending a little more time and effort to ensure that our released software does not cause any unpleasant surprises. In this article, we will show how to prepare, maintain, and benefit from setting up unit tests for a simplified simulated ASR module. We will use a Keil evaluation board MCBSTM32E with Cortex-M3 MCU, MDK-ARM with the new ULINK Pro debug and trace adapter, and Parasoft C++test Unit Testing Framework. -

Parallel, Cross-Platform Unit Testing for Real-Time Embedded Systems

University of Denver Digital Commons @ DU Electronic Theses and Dissertations Graduate Studies 1-1-2017 Parallel, Cross-Platform Unit Testing for Real-Time Embedded Systems Tosapon Pankumhang University of Denver Follow this and additional works at: https://digitalcommons.du.edu/etd Part of the Computer Sciences Commons Recommended Citation Pankumhang, Tosapon, "Parallel, Cross-Platform Unit Testing for Real-Time Embedded Systems" (2017). Electronic Theses and Dissertations. 1353. https://digitalcommons.du.edu/etd/1353 This Dissertation is brought to you for free and open access by the Graduate Studies at Digital Commons @ DU. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of Digital Commons @ DU. For more information, please contact [email protected],[email protected]. PARALLEL, CROSS-PLATFORM UNIT TESTING FOR REAL-TIME EMBEDDED SYSTEMS __________ A Dissertation Presented to the Faculty of the Daniel Felix Ritchie School of Engineering and Computer Science University of Denver __________ In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy __________ by Tosapon Pankumhang August 2017 Advisor: Matthew J. Rutherford ©Copyright by Tosapon Pankumhang 2017 All Rights Reserved Author: Tosapon Pankumhang Title: PARALLEL, CROSS-PLATFORM UNIT TESTING FOR REAL-TIME EMBEDDED SYSTEMS Advisor: Matthew J. Rutherford Degree Date: August 2017 ABSTRACT Embedded systems are used in a wide variety of applications (e.g., automotive, agricultural, home security, industrial, medical, military, and aerospace) due to their small size, low-energy consumption, and the ability to control real-time peripheral devices precisely. These systems, however, are different from each other in many aspects: processors, memory size, develop applications/OS, hardware interfaces, and software loading methods. -

A Framework and Tool Supports for Generating Test Inputs of Aspectj Programs

A Framework and Tool Supports for Generating Test Inputs of AspectJ Programs Tao Xie Jianjun Zhao Department of Computer Science Department of Computer Science & Engineering North Carolina State University Shanghai Jiao Tong University Raleigh, NC 27695 Shanghai 200240, China [email protected] [email protected] ABSTRACT 1. INTRODUCTION Aspect-oriented software development is gaining popularity with Aspect-oriented software development (AOSD) is a new tech- the wider adoption of languages such as AspectJ. To reduce the nique that improves separation of concerns in software develop- manual effort of testing aspects in AspectJ programs, we have de- ment [9, 18, 22, 30]. AOSD makes it possible to modularize cross- veloped a framework, called Aspectra, that automates generation of cutting concerns of a software system, thus making it easier to test inputs for testing aspectual behavior, i.e., the behavior imple- maintain and evolve. Research in AOSD has focused mostly on mented in pieces of advice or intertype methods defined in aspects. the activities of software system design, problem analysis, and lan- To test aspects, developers construct base classes into which the guage implementation. Although it is well known that testing is a aspects are woven to form woven classes. Our approach leverages labor-intensive process that can account for half the total cost of existing test-generation tools to generate test inputs for the woven software development [8], research on testing of AOSD, especially classes; these test inputs indirectly exercise the aspects. To enable automated testing, has received little attention. aspects to be exercised during test generation, Aspectra automati- Although several approaches have been proposed recently for cally synthesizes appropriate wrapper classes for woven classes. -

Parasoft Dottest REDUCE the RISK of .NET DEVELOPMENT

Parasoft dotTEST REDUCE THE RISK OF .NET DEVELOPMENT TRY IT https://software.parasoft.com/dottest Complement your existing Visual Studio tools with deep static INCREASE analysis and advanced PROGRAMMING EFFICIENCY: coverage. An automated, non-invasive solution that the related code, and distributed to his or her scans the application codebase to iden- IDE with direct links to the problematic code • Identify runtime bugs without tify issues before they become produc- and a description of how to fix it. executing your software tion problems, Parasoft dotTEST inte- grates into the Parasoft portfolio, helping When you send the results of dotTEST’s stat- • Automate unit and component you achieve compliance in safety-critical ic analysis, coverage, and test traceability testing for instant verification and industries. into Parasoft’s reporting and analytics plat- regression testing form (DTP), they integrate with results from Parasoft dotTEST automates a broad Parasoft Jtest and Parasoft C/C++test, allow- • Automate code analysis for range of software quality practices, in- ing you to test your entire codebase and mit- compliance cluding static code analysis, unit testing, igate risks. code review, and coverage analysis, en- abling organizations to reduce risks and boost efficiency. Tests can be run directly from Visual Stu- dio or as part of an automated process. To promote rapid remediation, each problem detected is prioritized based on configur- able severity assignments, automatical- ly assigned to the developer who wrote It snaps right into Visual Studio as though it were part of the product and it greatly reduces errors by enforcing all your favorite rules. We have stuck to the MS Guidelines and we had to do almost no work at all to have dotTEST automate our code analysis and generate the grunt work part of the unit tests so that we could focus our attention on real test-driven development. -

Parasoft Static Application Security Testing (SAST) for .Net - C/C++ - Java Platform

Parasoft Static Application Security Testing (SAST) for .Net - C/C++ - Java Platform Parasoft® dotTEST™ /Jtest (for Java) / C/C++test is an integrated Development Testing solution for automating a broad range of testing best practices proven to improve development team productivity and software quality. dotTEST / Java Test / C/C++ Test also seamlessly integrates with Parasoft SOAtest as an option, which enables end-to-end functional and load testing for complex distributed applications and transactions. Capabilities Overview STATIC ANALYSIS ● Broad support for languages and standards: Security | C/C++ | Java | .NET | FDA | Safety-critical ● Static analysis tool industry leader since 1994 ● Simple out-of-the-box integration into your SDLC ● Prevent and expose defects via multiple analysis techniques ● Find and fix issues rapidly, with minimal disruption ● Integrated with Parasoft's suite of development testing capabilities, including unit testing, code coverage analysis, and code review CODE COVERAGE ANALYSIS ● Track coverage during unit test execution and the data merge with coverage captured during functional and manual testing in Parasoft Development Testing Platform to measure true test coverage. ● Integrate with coverage data with static analysis violations, unit testing results, and other testing practices in Parasoft Development Testing Platform for a complete view of the risk associated with your application ● Achieve test traceability to understand the impact of change, focus testing activities based on risk, and meet compliance -

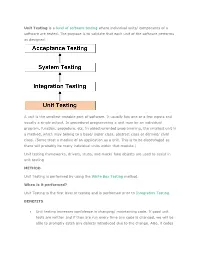

Unit Testing Is a Level of Software Testing Where Individual Units/ Components of a Software Are Tested. the Purpose Is to Valid

Unit Testing is a level of software testing where individual units/ components of a software are tested. The purpose is to validate that each unit of the software performs as designed. A unit is the smallest testable part of software. It usually has one or a few inputs and usually a single output. In procedural programming a unit may be an individual program, function, procedure, etc. In object-oriented programming, the smallest unit is a method, which may belong to a base/ super class, abstract class or derived/ child class. (Some treat a module of an application as a unit. This is to be discouraged as there will probably be many individual units within that module.) Unit testing frameworks, drivers, stubs, and mock/ fake objects are used to assist in unit testing. METHOD Unit Testing is performed by using the White Box Testing method. When is it performed? Unit Testing is the first level of testing and is performed prior to Integration Testing. BENEFITS Unit testing increases confidence in changing/ maintaining code. If good unit tests are written and if they are run every time any code is changed, we will be able to promptly catch any defects introduced due to the change. Also, if codes are already made less interdependent to make unit testing possible, the unintended impact of changes to any code is less. Codes are more reusable. In order to make unit testing possible, codes need to be modular. This means that codes are easier to reuse. Development is faster. How? If you do not have unit testing in place, you write your code and perform that fuzzy ‘developer test’ (You set some breakpoints, fire up the GUI, provide a few inputs that hopefully hit your code and hope that you are all set.) If you have unit testing in place, you write the test, write the code and run the test. -

Including Jquery Is Not an Answer! - Design, Techniques and Tools for Larger JS Apps

Including jQuery is not an answer! - Design, techniques and tools for larger JS apps Trifork A/S, Headquarters Margrethepladsen 4, DK-8000 Århus C, Denmark [email protected] Karl Krukow http://www.trifork.com Trifork Geek Night March 15st, 2011, Trifork, Aarhus What is the question, then? Does your JavaScript look like this? 2 3 What about your server side code? 4 5 Non-functional requirements for the Server-side . Maintainability and extensibility . Technical quality – e.g. modularity, reuse, separation of concerns – automated testing – continuous integration/deployment – Tool support (static analysis, compilers, IDEs) . Productivity . Performant . Appropriate architecture and design . … 6 Why so different? . “Front-end” programming isn't 'real' programming? . JavaScript isn't a 'real' language? – Browsers are impossible... That's just the way it is... The problem is only going to get worse! ● JS apps will get larger and more complex. ● More logic and computation on the client. ● HTML5 and mobile web will require more programming. ● We have to test and maintain these apps. ● We will have harder requirements for performance (e.g. mobile). 7 8 NO Including jQuery is NOT an answer to these problems. (Neither is any other js library) You need to do more. 9 Improving quality on client side code . The goal of this talk is to motivate and help you improve the technical quality of your JavaScript projects . Three main points. To improve non-functional quality: – you need to understand the language and host APIs. – you need design, structure and file-organization as much (or even more) for JavaScript as you do in other languages, e.g. -

Testing and Debugging Tools for Reproducible Research

Testing and debugging Tools for Reproducible Research Karl Broman Biostatistics & Medical Informatics, UW–Madison kbroman.org github.com/kbroman @kwbroman Course web: kbroman.org/Tools4RR We spend a lot of time debugging. We’d spend a lot less time if we tested our code properly. We want to get the right answers. We can’t be sure that we’ve done so without testing our code. Set up a formal testing system, so that you can be confident in your code, and so that problems are identified and corrected early. Even with a careful testing system, you’ll still spend time debugging. Debugging can be frustrating, but the right tools and skills can speed the process. "I tried it, and it worked." 2 This is about the limit of most programmers’ testing efforts. But: Does it still work? Can you reproduce what you did? With what variety of inputs did you try? "It's not that we don't test our code, it's that we don't store our tests so they can be re-run automatically." – Hadley Wickham R Journal 3(1):5–10, 2011 3 This is from Hadley’s paper about his testthat package. Types of tests ▶ Check inputs – Stop if the inputs aren't as expected. ▶ Unit tests – For each small function: does it give the right results in specific cases? ▶ Integration tests – Check that larger multi-function tasks are working. ▶ Regression tests – Compare output to saved results, to check that things that worked continue working. 4 Your first line of defense should be to include checks of the inputs to a function: If they don’t meet your specifications, you should issue an error or warning. -

The Art, Science, and Engineering of Fuzzing: a Survey

1 The Art, Science, and Engineering of Fuzzing: A Survey Valentin J.M. Manes,` HyungSeok Han, Choongwoo Han, Sang Kil Cha, Manuel Egele, Edward J. Schwartz, and Maverick Woo Abstract—Among the many software vulnerability discovery techniques available today, fuzzing has remained highly popular due to its conceptual simplicity, its low barrier to deployment, and its vast amount of empirical evidence in discovering real-world software vulnerabilities. At a high level, fuzzing refers to a process of repeatedly running a program with generated inputs that may be syntactically or semantically malformed. While researchers and practitioners alike have invested a large and diverse effort towards improving fuzzing in recent years, this surge of work has also made it difficult to gain a comprehensive and coherent view of fuzzing. To help preserve and bring coherence to the vast literature of fuzzing, this paper presents a unified, general-purpose model of fuzzing together with a taxonomy of the current fuzzing literature. We methodically explore the design decisions at every stage of our model fuzzer by surveying the related literature and innovations in the art, science, and engineering that make modern-day fuzzers effective. Index Terms—software security, automated software testing, fuzzing. ✦ 1 INTRODUCTION Figure 1 on p. 5) and an increasing number of fuzzing Ever since its introduction in the early 1990s [152], fuzzing studies appear at major security conferences (e.g. [225], has remained one of the most widely-deployed techniques [52], [37], [176], [83], [239]). In addition, the blogosphere is to discover software security vulnerabilities. At a high level, filled with many success stories of fuzzing, some of which fuzzing refers to a process of repeatedly running a program also contain what we consider to be gems that warrant a with generated inputs that may be syntactically or seman- permanent place in the literature. -

Case Study Test the Untestable: Alaska Airlines Solves

CASE STUDY Testing the Untestable Alaska Airlines Solves the Test Environment Dilemma Case Study Testing the Untestable Alaska Airlines Solves the Test Environment Dilemma OVERVIEW Alaska Airlines is primarily a West Coast carrier that services the states of Alaska and Hawaii with mid-continent and destinations in Canada and Mexico. Alaska Airlines received J.D. Powers' “Highest in Customer Satisfaction Among Traditional Carriers” recognition for twelve years in a row even recently winning first in all but one of the seven categories. A large part of the credit belongs to their software testing team. Their industry-leading, proactive approach to disrupting the traditional software testing process ensures that testers can test faster, earlier, and more completely. Learn how Ryan Papineau and his team used advanced automation in concert with service virtualization to rigorously test their complex flight operations manager software. The result: operations that run smoothly— even if they encounter a snowstorm in July. RELIABLE & ON-DEMAND FALSE REPEATABLE TESTS AUTOMATED TEST CASES POSITIVES 100欥 500 ELIMINATED 2 Case Study Testing the Untestable Alaska Airlines Solves the Test Environment Dilemma THE CHALLENGES At Alaska Airlines, the flight operations manager software is ultimately responsible for transporting 46 million customers to 115 global destinations via approximately 440,000 flights per year, safely and efficiently. This software coordinates a highly complex set of inputs from systems around the organization to ensure flights are on time while evaluating and managing fuel, cargo, baggage, and passenger requirements. In addition to the previously mentioned requirements, the system considers many factors including weather, aircraft characteristics, market, and fuel costs. -

Parasoft Named an Omnichannel Functional Test Automation Leader

Parasoft Corp. Headquarters 101 E. Huntington Drive Monrovia, CA 91016 USA www.parasoft.com [email protected] Press Release Parasoft Named an Omnichannel Functional Test Automation Leader, Recognized by major analyst firm for Impressive Roadmap Parasoft shines in evaluation specifically around effective test maintenance, strong CI/CD and application lifecycle management (ALM) platform integration MONROVIA (USA) – July 30, 2018 – Parasoft, the global leader in automated software testing, today announced its position as a leader in The Forrester Wave™: Omnichannel Functional Test Automation Tools, Q3 2018, where it received the highest scores possible in the API Testing and Automation and Product Road Map criteria. The report notes Parasoft’s “impressive and concrete road map to increase test automation from design to execution, pushing autonomous testing.” Parasoft will be showcasing its technology and discussing the future of testing in an upcoming webinar, The Future of Test Automation: Next- Generation Technologies to Use Today on August 23rd. To register, click here. According to the report, conducted by Forrester’s Diego Lo Giudice, “Parasoft shined in our evaluation specifically around effective test maintenance, strong CI/CD and application lifecycle management (ALM) platform integration, as well as reporting through its analytics system PIE. Clients like the recent changes, and all reference customers reported achieving test automation of more than 50% in the past 12 months.” After examining past research, user need assessments, and vendor and expert interviews, Forrester evaluated 15 omnichannel functional test automation tool vendors across a comprehensive 26-criteria to help organizations working on enterprise, mobile, and web applications select the right tool. -

Devsecops DEVELOPMENT & DEVOPS INFRASTRUCTURE

DevSecOps DEVELOPMENT & DEVOPS INFRASTRUCTURE CREATE SECURE APPLICATIONS PARASOFT’S APPROACH - BUILD SECURITY IN WITHOUT DISRUPTING THE Parasoft provides tools that help teams begin their security efforts as DEVELOPMENT PROCESS soon as the code is written, starting with static application security test- ing (SAST) via static code analysis, continuing through testing as part of Parasoft makes DevSecOps possible with API and the CI/CD system via dynamic application security testing (DAST) such functional testing, service virtualization, and the as functional testing, penetration testing, API testing, and supporting in- most complete support for important security stan- frastructure like service virtualization that enables security testing be- dards like CWE, OWASP, and CERT in the industry. fore the complete application is fully available. IMPLEMENT A SECURE CODING LIFECYCLE Relying on security specialists alone prevents the entire DevSecOps team from securing software and systems. Parasoft tooling enables the BENEFIT FROM THE team with security knowledge and training to reduce dependence on PARASOFT APPROACH security specialists alone. With a centralized SAST policy based on in- dustry standards, teams can leverage Parasoft’s comprehensive docs, examples, and embedded training while the code is being developed. ✓ Leverage your existing test efforts for Then, leverage existing functional/API tests to enhance the creation of security security tests – meaning less upfront cost, as well as less maintenance along the way. ✓ Combine quality and security to fully understand your software HARDEN THE CODE (“BUILD SECURITY IN”) Getting ahead of application security means moving beyond just test- ✓ Harden the code – don’t just look for ing into building secure software in the first place.