Active Edge: Designing Squeeze Gestures for the Google Pixel 2

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


RADA Sense Mobile Application End-User Licence Agreement

RADA Sense Mobile Application End-User Licence Agreement PLEASE READ THESE LICENCE TERMS CAREFULLY BY CONTINUING TO USE THIS APP YOU AGREE TO THESE TERMS WHICH WILL BIND YOU. IF YOU DO NOT AGREE TO THESE TERMS, PLEASE IMMEDIATELY DISCONTINUE USING THIS APP. WHO WE ARE AND WHAT THIS AGREEMENT DOES We Kohler Mira Limited of Cromwell Road, Cheltenham, GL52 5EP license you to use: • Rada Sense mobile application software, the data supplied with the software, (App) and any updates or supplements to it. • The service you connect to via the App and the content we provide to you through it (Service). as permitted in these terms. YOUR PRIVACY Under data protection legislation, we are required to provide you with certain information about who we are, how we process your personal data and for what purposes and your rights in relation to your personal data and how to exercise them. This information is provided in https://www.radacontrols.com/en/privacy/ and it is important that you read that information. Please be aware that internet transmissions are never completely private or secure and that any message or information you send using the App or any Service may be read or intercepted by others, even if there is a special notice that a particular transmission is encrypted. APPLE APP STORE’S TERMS ALSO APPLY The ways in which you can use the App and Documentation may also be controlled by the Apple App Store’s rules and policies https://www.apple.com/uk/legal/internet-services/itunes/uk/terms.html and Apple App Store’s rules and policies will apply instead of these terms where there are differences between the two. -

Step 1 (To Be Performed on Your Google Pixel 2 XL)

For a connection between your mobile phone and your Mercedes-Benz hands-free system to be successful, Bluetooth® must be turned on in your mobile phone. Please make sure to also read the operating and pairing instructions of the mobile phone. Please follow the steps below to connect your Google Pixel 2 XL with the mobile phone application of your Mercedes-Benz hands-free system using Bluetooth®.

Step 1 (to be performed on your Google Pixel 2 XL)

Step 2: To get to the telephone screen of your Mercedes-Benz hands-free system, press the Phone icon on the home screen.

Step 3: Select the Phone icon in the lower right corner.

Step 4: Select the “Connect a New Device” application.

Step 5: Select the “Start Search Function”.

Step 6: The system will now search for any Bluetooth-compatible phones. This may take some time depending on how many devices are found by the system.

Step 7: Once the system completes searching, select your mobile phone (example: "My phone") from the list.

Step 8: The pairing process will generate a 6-digit passcode and display it on the screen. Verify that the same 6 digits are shown on the display of your phone.

Step 9 (to be performed on your Google Pixel 2 XL): There will be a pop-up "Bluetooth Request: 'MB Bluetooth' would like to pair with your phone. Confirm that the code '### ###' is shown on 'MB Bluetooth'." Select "Pair" on your phone if the codes match.

Step 10: After the passcode is verified on both the mobile and the COMAND, the phone will begin to be authorized.

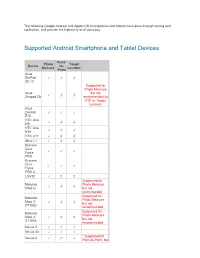

Supported Android Smartphone and Tablet Devices

The following Google Android and Apple iOS smartphones and tablets have gone through testing and calibration, and provide the highest level of accuracy: Supported Android Smartphone and Tablet Devices Point- Photo Target Device to- Measure Location Point Asus ZenPad ✓ ✗ ✗ 3S 10 Supported for Photo Measure Asus but not ✓ ✗ ✗ Zenpad Z8 recommended for P2P or Target Location Asus Zenpad ✓ ✓ ✓ Z10 HTC One ✓ ✗ ✗ M8 HTC One ✓ ✗ ✗ Mini HTC U11 ✓ ✗ ✗ iNew L1 ✓ ✗ ✗ Kyocera Dura ✓ ✓ ✓ Force PRO Kyocera Dura ✓ ✓ ✓ Force PRO 2 LGV20 ✓ ✗ ✗ Supported for Motorola Photo Measure ✓ ✗ ✗ Moto G but not recommended Supported for Motorola Photo Measure Moto X ✓ ✗ ✗ but not XT1052 recommended Supported for Motorola Photo Measure Moto X ✓ ✗ ✗ but not XT1053 recommended Nexus 5 ✓ ✓ ✓ Nexus 5X ✓ ✓ ✓ * Supported for Nexus 6 ✓ ✓* ✓ Point-to-Point, but cannot guarantee +/-3% accuracy * Supported for Point-to-Point, but Nexus 6P ✓ ✓* ✓ cannot guarantee +/-3% accuracy * Supported for Point-to-Point, but Nexus 7 ✓ ✓* ✓ cannot guarantee +/-3% accuracy Samsung Galaxy ✓ ✓ ✓ A20 Samsung Galaxy J7 ✓ ✗ ✓ Prime * Supported for Samsung Point-to-Point, but GALAXY ✓ ✓* ✓ cannot guarantee Note3 +/-3% accuracy Samsung GALAXY ✓ ✓ ✓ Note 4 * Supported for Samsung Point-to-Point, but GALAXY ✓ ✓* ✓ cannot guarantee Note 5 +/-3% accuracy Samsung GALAXY ✓ ✓ ✓ Note 8 Samsung GALAXY ✓ ✓ ✓ Note 9 Samsung GALAXY ✓ ✓ ✓ Note 10 Samsung GALAXY ✓ ✓ ✓ Note 10+ Samsung GALAXY ✓ ✓ ✓ Note 10+ 5G Supported for Samsung Photo Measure GALAXY ✓ ✗ ✗ but not Tab 4 (old) recommended Samsung Supported for -

HR Compatibility Overview

HR-imotion Kompatibilität/Compatibility 2018 / 11 Gerätetyp Telefon 22410001 23010201 22110001 23010001 23010101 22010401 22010501 22010301 22010201 22110101 22010701 22011101 22010101 22210101 22210001 23510101 23010501 23010601 23010701 23510320 22610001 23510420 Smartphone Acer Liquid Zest Plus Smartphone AEG Voxtel M250 Smartphone Alcatel 1X Smartphone Alcatel 3 Smartphone Alcatel 3C Smartphone Alcatel 3V Smartphone Alcatel 3X Smartphone Alcatel 5 Smartphone Alcatel 5v Smartphone Alcatel 7 Smartphone Alcatel A3 Smartphone Alcatel A3 XL Smartphone Alcatel A5 LED Smartphone Alcatel Idol 4S Smartphone Alcatel U5 Smartphone Allview P8 Pro Smartphone Allview Soul X5 Pro Smartphone Allview V3 Viper Smartphone Allview X3 Soul Smartphone Allview X5 Soul Smartphone Apple iPhone Smartphone Apple iPhone 3G / 3GS Smartphone Apple iPhone 4 / 4S Smartphone Apple iPhone 5 / 5S Smartphone Apple iPhone 5C Smartphone Apple iPhone 6 / 6S Smartphone Apple iPhone 6 Plus / 6S Plus Smartphone Apple iPhone 7 Smartphone Apple iPhone 7 Plus Smartphone Apple iPhone 8 Smartphone Apple iPhone 8 Plus Smartphone Apple iPhone SE Smartphone Apple iPhone X Smartphone Apple iPhone XR Smartphone Apple iPhone Xs Smartphone Apple iPhone Xs Max Smartphone Archos 50 Saphir Smartphone Archos Diamond 2 Plus Smartphone Archos Saphir 50x Smartphone Asus ROG Phone Smartphone Asus ZenFone 3 Smartphone Asus ZenFone 3 Deluxe Smartphone Asus ZenFone 3 Zoom Smartphone Asus Zenfone 5 Lite ZC600KL Smartphone Asus Zenfone 5 ZE620KL Smartphone Asus Zenfone 5z ZS620KL Smartphone Asus -

These Phones Will Still Work on Our Network After We Phase out 3G in February 2022

How to use this list

Devices in this list are tested and approved for the AT&T network. Use the exact models in this list to see if your device is supported. See next page to determine how to find your device’s model number. There are many versions of the same phone, and each version has its own model number even when the marketing name is the same.

Example:

▪ Galaxy S20 models G981U and G981U1 will work on the AT&T network

▪ Galaxy S20 models G981F, G981N and G981O will NOT work

Software Update: If you have one of the devices needing a software upgrade (noted by a * and listed on the final page), check to make sure you have the latest device software. Update your phone or device software eSupport Article

Last updated: Sept 3, 2021

How to determine your phone’s model

Some manufacturers make it simple by putting the phone model on the outside of your phone, typically on the back. If your phone is not labeled, you can follow these instructions.

For iPhones®

1. Go to Settings.
2. Tap General.
3. Tap About to view the model name and number.

For Androids®

1. Go to Settings. You may have to go into the System menu next.
2. Tap About Phone or About Device to view the model name and number.

Other phones

1. Go to Settings.
2. Tap About Phone to view the model name and number.

OR

1. Remove the back cover.
2. Remove the battery.
3. Look for the model number on the inside of the phone, usually on a white label.

Device Compatibility

Device compatibility Check if your smartphone is compatible with your Rexton devices Direct streaming to hearing aids via Bluetooth Apple devices: Rexton Mfi (made for iPhone, iPad or iPod touch) hearing aids connect directly to your iPhone, iPad or iPod so you can stream your phone calls and music directly into your hearing aids. Android devices: With Rexton BiCore devices, you can now also stream directly to Android devices via the ASHA (Audio Streaming for Hearing Aids) standard. ASHA-supported devices: • Samsung Galaxy S21 • Samsung Galaxy S21 5G (SM-G991U)(US) • Samsung Galaxy S21 (US) • Samsung Galaxy S21+ 5G (SM-G996U)(US) • Samsung Galaxy S21 Ultra 5G (SM-G998U)(US) • Samsung Galaxy S21 5G (SM-G991B) • Samsung Galaxy S21+ 5G (SM-G996B) • Samsung Galaxy S21 Ultra 5G (SM-G998B) • Samsung Galaxy Note 20 Ultra (SM-G) • Samsung Galaxy Note 20 Ultra (SM-G)(US) • Samsung Galaxy S20+ (SM-G) • Samsung Galaxy S20+ (SM-G) (US) • Samsung Galaxy S20 5G (SM-G981B) • Samsung Galaxy S20 5G (SM-G981U1) (US) • Samsung Galaxy S20 Ultra 5G (SM-G988B) • Samsung Galaxy S20 Ultra 5G (SM-G988U)(US) • Samsung Galaxy S20 (SM-G980F) • Samsung Galaxy S20 (SM-G) (US) • Samsung Galaxy Note20 5G (SM-N981U1) (US) • Samsung Galaxy Note 10+ (SM-N975F) • Samsung Galaxy Note 10+ (SM-N975U1)(US) • Samsung Galaxy Note 10 (SM-N970F) • Samsung Galaxy Note 10 (SM-N970U)(US) • Samsung Galaxy Note 10 Lite (SM-N770F/DS) • Samsung Galaxy S10 Lite (SM-G770F/DS) • Samsung Galaxy S10 (SM-G973F) • Samsung Galaxy S10 (SM-G973U1) (US) • Samsung Galaxy S10+ (SM-G975F) • Samsung -

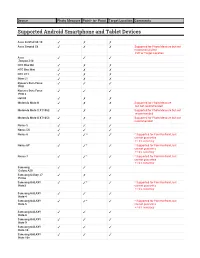

List of Supported Devices

Device Photo Measure Point- to- Point Target Location Comments Supported Android Smartphone and Tablet Devices Asus ZenPad 3S 10 ✓ ✗ ✗ Asus Zenpad Z8 ✓ ✗ ✗ Supported for Photo Measure but not recommended for P2P or Target Location Asus ✓ ✓ ✓ Zenpad Z10 HTC One M8 ✓ ✗ ✗ HTC One Mini ✓ ✗ ✗ HTC U11 ✓ ✗ ✗ iNew L1 ✓ ✗ ✗ Kyocera Dura Force ✓ ✓ ✓ PRO Kyocera Dura Force ✓ ✓ ✓ PRO 2 LGV20 ✓ ✗ ✗ Motorola Moto G ✓ ✗ ✗ Supported for Photo Measure but not recommended Motorola Moto X XT1052 ✓ ✗ ✗ Supported for Photo Measure but not recommended Motorola Moto X XT1053 ✓ ✗ ✗ Supported for Photo Measure but not recommended Nexus 5 ✓ ✓ ✓ Nexus 5X ✓ ✓ ✓ Nexus 6 ✓ ✓* ✓ * Supported for Point-to-Point, but cannot guarantee +/-3% accuracy Nexus 6P ✓ ✓* ✓ * Supported for Point-to-Point, but cannot guarantee +/-3% accuracy Nexus 7 ✓ ✓* ✓ * Supported for Point-to-Point, but cannot guarantee +/-3% accuracy Samsung ✓ ✓ ✓ Galaxy A20 Samsung Galaxy J7 ✓ ✗ ✓ Prime Samsung GALAXY ✓ ✓* ✓ * Supported for Point-to-Point, but Note3 cannot guarantee +/-3% accuracy Samsung GALAXY ✓ ✓ ✓ Note 4 Samsung GALAXY ✓ ✓* ✓ * Supported for Point-to-Point, but Note 5 cannot guarantee +/-3% accuracy Samsung GALAXY ✓ ✓ ✓ Note 8 Samsung GALAXY ✓ ✓ ✓ Note 9 Samsung GALAXY ✓ ✓ ✓ Note 10 Samsung GALAXY ✓ ✓ ✓ Note 10+ Device Photo Measure Point- to- Point Target Location Comments Samsung GALAXY ✓ ✓ ✓ Note 10+ 5G Samsung GALAXY ✓ ✓ ✓ Note 20 Samsung GALAXY ✓ ✓ ✓ Note 20 5G Samsung GALAXY ✓ ✗ ✗ Supported for Photo Measure but not Tab 4 (old) recommended Samsung GALAXY ✓ ✗ ✓ Supported for Photo -

Mobile Device & OS Compatibility

FreeStyle, Libre, and related brand marks are trademarks of Abbott Diabetes Care Inc. in various jurisdictions. Other trademarks are the property of their respective owners. ©2019 Abbott ART39109-001 Rev. H 02/20

Popular mobile devices and operating systems (OS) are regularly tested to evaluate NFC scan performance, Bluetooth connectivity, and app compatibility with Sensors. We recommend checking this guide before installing a new OS version on your phone or before using the app with a new phone.
Recommended app, device, and operating systems

| App / Version | Device | OS |
| --- | --- | --- |
| FreeStyle LibreLink (version 2.4) | iPhone 7, 7 Plus, 8, 8 Plus, X, XS, XS Max, XR; Samsung Galaxy S6, Galaxy S7 Edge, Galaxy S8, Galaxy S8+, Galaxy S9; Google Pixel, Pixel 2, Pixel 2 XL; LG Nexus 5X | iOS: 12, 13; Android: 8, 9, 10 |
| FreeStyle LibreLink (version 2.3) | iPhone 7, 7 Plus, 8, 8 Plus, X, XS, XS Max, XR; Samsung Galaxy S5, Galaxy S6, Galaxy S7, Galaxy S7 Edge, Galaxy S8, Galaxy S8+, Galaxy S9; Google Pixel, Pixel 2, Pixel 2 XL; LG Nexus 5X | iOS: 11, 12, 13; Android: 5, 6, 7, 8, 9, 10 |
| FreeStyle LibreLink (version 2.2) | iPhone 7, 7 Plus, 8, 8 Plus, X; Samsung Galaxy S5, Galaxy S6, Galaxy S7, Galaxy S7 Edge, Galaxy S8, Galaxy S8+, Galaxy S9; Google Pixel, Pixel 2, Pixel 2 XL; Nexus 5X, 6P; OnePlus 5T | iOS: 11, 12, 13; Android: 5, 6, 7, 8, 9, 10 |

This table will be updated as other devices and operating systems are evaluated.

Device Compatibility APPLE™ COMPATIBILITY

Device Compatibility Android devices running the required software must also have a microUSB port and support powered USB OTG. iOS devices running the required software must be equipped with a lightning connector port. Visit the Help Center for more detailed explanations of Android requirements and iOS requirements. APPLE™ COMPATIBILITY Works with and tested against: iPhone XS Max iPhone XS iPhone XR iPhone X iPhone 8 Plus iPhone 8 iPhone 7+ iPhone 7 iPhone SE iPhone 5 iPhone 5c iPhone 5s iPhone 6 iPhone 6+ iPhone 6s iPhone 6s+ iPhone SE iPod Touch 5th Generation Works with, but not optimized for: iPad Mini (all versions) iPad Air iPad 4th Generation Not compatible with: iPhone 4s or lower iPad 3 or lower iPod Touch 4 or lower iPad Pro ANDROID™ COMPATIBILITY Works with and tested against: Motorola Moto X Motorola Moto G Samsung Galaxy S3 Samsung Galaxy S4 Samsung Galaxy S5 (except some running 5.1.1*) Samsung Galaxy S6 Samsung Galaxy S6 Edge Samsung Galaxy S7 Samsung Galaxy S7 Edge Samsung Galaxy Note 2 Samsung Galaxy Note 3 Samsung Galaxy Note 4 Samsung Galaxy Note 8 Samsung Galaxy Note Edge HTC One Mini 2 (requires adapter) HTC One A9 (requires adapter) HTC One M8 (requires adapter) HTC One M9 (requires adapter) HTC Desire EYE (requires adapter) HTC Desire 820 (requires adapter) Google Nexus 5 (requires adapter) Google Nexus 6 (requires reversible adapter or cable) Google Nexus 5x (requires USB-C adapter) Google Nexus 6p (requires USB-C adapter) Google Pixel (Requires USB-C adapter) -

Online Banking and Mobile Banking System Requirements

Online Banking Supported Operating Systems and Browsers

| Supported OS | Microsoft Internet Explorer® | Microsoft Edge® | Safari® | Google Chrome™ | Mozilla Firefox® |
| --- | --- | --- | --- | --- | --- |
| Windows 8 | 11 | N/A | N/A | 74 | 68 |
| Windows 10 | 11 | 42 | N/A | 77 | 68 |
| Mac OS X® 10.3.6 High Sierra | N/A | N/A | 12.1 | 77 | 68 |
| iOS 12.4 (iPad Pro®) | N/A | N/A | 12.1 | N/A | N/A |
| iOS 12.4 (iPhone 7 and above) | N/A | N/A | 12.2 | 77 | N/A |
| Android 8.0 Oreo (Samsung Galaxy S9+, and Google Pixel 2) | N/A | N/A | N/A | 77 | N/A |

Mobile Banking Supported Operating Systems

Full Support

Full support means that all client modes are supported for the platform / OS version.

| Platform / OS Version | Devices Tested | Smartphone Apps | Tablet Apps | Mobile Web |
| --- | --- | --- | --- | --- |
| iOS 12 | iPhone X, iPhone XS, iPhone XS Max | YES | YES | YES |
| Android 8.0 – Oreo | Samsung Note 9, Samsung S9, Samsung S9+, Pixel 2XL | YES | YES | YES |

Partial Support

Partial support means not all client modes are supported for the platform / OS version.

| Platform / OS Version | Devices Tested | Smartphone Apps | Tablet Apps | Mobile Web |
| --- | --- | --- | --- | --- |
| iOS 11 | iPhone X, iPhone 7+, iPhone 7, iPhone 6+, iPhone 5s, iPad mini 4, iPad Air | YES | YES | YES |
| Android 7 (Nougat) | Google Pixel phone, S7/S7 Edge | YES | YES | YES |
| Android 6 (Marshmallow) | Nexus 6, Nexus 7 Tablet, Nexus 9 Tablet, Galaxy S7, Galaxy s7 Edge, Galaxy s6, Galaxy s6 Edge + | YES | YES | YES |

Mobile Banking Supported Operating Systems (Continued)

Minimal Support

Minimal support means that the platform / OS version receives minimal or no test coverage.

List of Supported Devices

List of Compatible Devices Spike By: ikeGPS For: Spike Customers V 1.0.0.0 Date: 2020 1 Table of Contents Table of Contents Supported Android Smartphone and Tablet Devices Supported Apple iOS Smartphone and Tablet Devices 2 Introduction The following is a list of devices that are compatible with Spike. To us, compatible means more than just, it works. Compatible means that it has been tested and adheres to the accuracy standards that we hold ourselves to at ikeGPS. If you have any questions at all, please feel free to reach out to [email protected]. Supported Android Smartphone and Tablet Devices Device Photo Point-to- Target Comment Measure Point Location Asus ZenPad 3S 10 ✓ ✗ ✗ Asus Zenpad Z8 ✓ ✗ ✗ Supported for Photo Measure but not recommended for P2P or Target Location Asus Zenpad Z10 ✓ ✓ ✓ HTC One M8 ✓ ✗ ✗ HTC One Mini ✓ ✗ ✗ HTC U11 ✓ ✗ ✗ iNew L1 ✓ ✗ ✗ Kyocera Dura Force PRO ✓ ✓ ✓ 2 LGV20 ✓ ✗ ✗ Motorola Moto G ✓ ✗ ✗ Supported for Photo Measure but not recommended Motorola Moto X XT1052 ✓ ✗ ✗ Supported for Photo Measure but not recommended Motorola Moto X XT1053 ✓ ✗ ✗ Supported for Photo Measure but not recommended Nexus 5 ✓ ✓ ✓ 3 Nexus 5X ✓ ✓ ✓ Nexus 6 ✓ ✓* ✓ * Supported for Point-to-Point, but cannot guarantee +/-3% accuracy Nexus 6P ✓ ✓* ✓ * Supported for Point-to-Point, but cannot guarantee +/-3% accuracy Nexus 7 ✓ ✓* ✓ * Supported for Point-to-Point, but cannot guarantee +/-3% accuracy Samsung Galaxy A20 ✓ ✓ ✓ Samsung Galaxy J7 ✓ ✗ ✓ Prime Samsung GALAXY Note3 ✓ ✓* ✓ * Supported for Point-to-Point, but cannot guarantee +/-3% -

List of Smartphones with “Compass Function”, as of May 2019, Source: Actionbound

Liste Smartphones mit „Kompass-Funktion“ Stand: Mai 2019 Quelle: Actionbound Acer Liquid E3 plus Honor 5X Huawei P10 Lite Acer Liquid Jade plus Honor 6C Pro Huawei P10 Plus Alcatel A3 Honor 7 Huawei P8 Alcatel A5 LED Honor 7X Huawei P8 Lite Alcatel A7 Honor 8 Huawei P8 Lite (2017) Alcatel A7 XL Honor 9 Lite Huawei Y6 (2017) Alcatel Idol 4 Honor View 10 Huawei Y7 Alcatel Idol 4 HTC 10 ID2 ME ID1 Alcatel Idol 4S HTC 10 Evo Kodak Ektra Alcatel Idol 5 HTC Desire 10 Lifestyle Leagoo S9 Alcatel Pop 4 HTC Desire 825 Lenovo K6 Alcatel Pop 4+ HTC One A9 Lenovo Moto Z Alcatel Pop 4S HTC One A9s Lenovo P2 Alcatel Shine Lite HTC One M7 LG G2 D802 Apple iPhone 4 HTC One M8 LG G2 Mini Apple iPhone 4s HTC One M9 LG G3 D855 Apple iPhone 5 HTC One Max LG G4 Apple iPhone 5c HTC One Mini LG G5 Apple iPhone 5s HTC One mini2 LG G5 SE Apple iPhone 6 HTC U Ultra LG G6 Apple iPhone 6 Plus HTC U11 LG Google Nexus 5 16GB Apple iPhone 7 HTC U11 Life LG K10 (2017) Apple iPhone 7 Plus HTC U11 Plus LG L40 D160 Apple iPhone 8 HTC UPlay LG L70 D320 Apple iPhone 8 Plus Huawei Ascend G510 LG L90 D405 Apple iPhone SE Huawei Ascend P7 LG Optimus L9 II D605 Apple iPhone X Huawei Honor 6 LG Optimus L9 II D605 Blackberry DTEK50 Huawei Honor 6A LG Q6 Blackberry DTEK60 Huawei Honor 6C Pro LG V20 Blackberry Key One Huawei Honor 6x (2016) LG V30 Blackberry Motion Huawei Honor 8 LG XMach BQ Aquaris V Huawei Honor 9 LG XPower BQ Aquaris X5 Plus Huawei Honor 9 Lite LG XPower 2 Cat Phones S60 Huawei Mate 10 Meizu M5 Caterpillar Cat S60 Huawei Mate 10 Lite Meizu M5 Note Elephone S9 Huawei Mate 10 Pro Meizu Pro 6S Gigaset GS370 Plus Huawei Mate 9 Meizu Pro 7 Google Nexus 6P Huawei Nova Motorola Moto E Google Pixel Huawei Nova 2 Motorola Moto G Google Pixel 2 Huawei Nova 2S Motorola Moto G (2.