Interactive Information Theory

Recommended publications

Secrecy Capacity and Secure Distance for Diffusion-Based Molecular Communication Systems

SPECIAL SECTION ON WIRELESS BODY AREA NETWORKS. Received May 21, 2019, accepted July 25, 2019, date of publication August 1, 2019, date of current version August 22, 2019. Digital Object Identifier 10.1109/ACCESS.2019.2932567

Secrecy Capacity and Secure Distance for Diffusion-Based Molecular Communication Systems. LORENZO MUCCHI1 (Senior Member, IEEE), ALESSIO MARTINELLI1, SARA JAYOUSI1, STEFANO CAPUTO1, AND MASSIMILIANO PIEROBON2 (Member, IEEE). 1Department of Information Engineering, University of Florence, 50139 Florence, Italy. 2Department of Computer Science and Engineering, University of Nebraska-Lincoln, Lincoln, NE 68508, USA. Corresponding author: Lorenzo Mucchi (lorenzo.mucchi@unifi.it). This work was supported by the U.S. National Science Foundation under Grant CISE CCF-1816969.

ABSTRACT The biocompatibility and nanoscale features of Molecular Communication (MC) make this paradigm, based on molecules and chemical reactions, an enabler for communication theory applications in healthcare at its biological level (e.g., bimolecular disease detection/monitoring and intelligent drug delivery). However, the adoption of MC-based innovative solutions in privacy- and security-sensitive areas is opening new challenges for this research field. Although the fundamentals of information theory applied to MC have been established in the last decade, research work on security in MC systems is still limited. In contrast to previous literature focused on challenges and potential roadmaps to secure MC, this paper presents the preliminary elements of a systematic approach to quantifying information security as it propagates through an MC link. In particular, a closed-form mathematical expression for the secrecy capacity of an MC system based on free molecule diffusion is provided. Numerical results highlight the dependence of the secrecy capacity on the average thermodynamic transmit power, the eavesdropper's distance, the transmitted signal bandwidth, and the receiver radius.

Anna Lysyanskaya Curriculum Vitae

Anna Lysyanskaya Curriculum Vitae
Computer Science Department, Box 1910, Brown University, Providence, RI 02912
(401) 863-7605
email: [email protected]
http://www.cs.brown.edu/~anna

Research Interests
Cryptography, privacy, computer security, theory of computation.

Education
Massachusetts Institute of Technology, Cambridge, MA. Ph.D. in Computer Science, September 2002. Advisor: Ronald L. Rivest, Viterbi Professor of EECS. Thesis title: "Signature Schemes and Applications to Cryptographic Protocol Design"
Massachusetts Institute of Technology, Cambridge, MA. S.M. in Computer Science, June 1999
Smith College, Northampton, MA. A.B. magna cum laude, Highest Honors, Phi Beta Kappa, May 1997

Appointments
Brown University, Providence, RI. Fall 2013 - Present. Professor of Computer Science
Brown University, Providence, RI. Fall 2008 - Spring 2013. Associate Professor of Computer Science
Brown University, Providence, RI. Fall 2002 - Spring 2008. Assistant Professor of Computer Science
UCLA, Los Angeles, CA. Fall 2006. Visiting Scientist at the Institute for Pure and Applied Mathematics (IPAM)
Weizmann Institute, Rehovot, Israel. Spring 2006. Visiting Scientist
Massachusetts Institute of Technology, Cambridge, MA. 1997 - 2002. Graduate student
IBM T. J. Watson Research Laboratory, Hawthorne, NY. Summer 2001. Summer Researcher
IBM Zürich Research Laboratory, Rüschlikon, Switzerland. Summers 1999, 2000. Summer Researcher

Teaching
Brown University, Providence, RI. Spring 2008, 2011, 2015, 2017, 2019; Fall 2012. Instructor for "CS 259: Advanced Topics in Cryptography," a seminar course for graduate students.
Brown University, Providence, RI. Spring 2012. Instructor for "CS 256: Advanced Complexity Theory," a graduate-level complexity theory course.
Brown University, Providence, RI. Fall 2003, 2004, 2005, 2010, 2011; Spring 2007, 2009, 2013, 2014, 2016, 2018. Instructor for "CS151: Introduction to Cryptography and Computer Security."
Brown University, Providence, RI. Fall 2016, 2018. Instructor for "CS 101: Theory of Computation," a core course for CS concentrators.

What Every Computer Scientist Should Know About Floating-Point Arithmetic

What Every Computer Scientist Should Know About Floating-Point Arithmetic. DAVID GOLDBERG, Xerox Palo Alto Research Center, 3333 Coyote Hill Road, Palo Alto, California 94304

Floating-point arithmetic is considered an esoteric subject by many people. This is rather surprising, because floating-point is ubiquitous in computer systems: almost every language has a floating-point datatype; computers from PCs to supercomputers have floating-point accelerators; most compilers will be called upon to compile floating-point algorithms from time to time; and virtually every operating system must respond to floating-point exceptions such as overflow. This paper presents a tutorial on the aspects of floating-point that have a direct impact on designers of computer systems. It begins with background on floating-point representation and rounding error, continues with a discussion of the IEEE floating-point standard, and concludes with examples of how computer system builders can better support floating point.

Categories and Subject Descriptors: (Primary) C.0 [Computer Systems Organization]: General – instruction set design; D.3.4 [Programming Languages]: Processors – compilers, optimization; G.1.0 [Numerical Analysis]: General – computer arithmetic, error analysis, numerical algorithms. (Secondary) D.2.1 [Software Engineering]: Requirements/Specifications – languages; D.3.1 [Programming Languages]: Formal Definitions and Theory – semantics; D.4.1 [Operating Systems]: Process Management – synchronization

General Terms: Algorithms, Design, Languages

Additional Key Words and Phrases: denormalized number, exception, floating-point, floating-point standard, gradual underflow, guard digit, NaN, overflow, relative error, rounding error, rounding mode, ulp, underflow

INTRODUCTION Builders of computer systems often need information about floating-point arithmetic. … …tions of addition, subtraction, multiplication, and division. It also contains background information on the two methods of measuring rounding error,

Cryptography

Security Engineering: A Guide to Building Dependable Distributed Systems. CHAPTER 5 Cryptography

ZHQM ZMGM ZMFM – G. JULIUS CAESAR

XYAWO GAOOA GPEMO HPQCW IPNLG RPIXL TXLOA NNYCS YXBOY MNBIN YOBTY QYNAI – JOHN F. KENNEDY

5.1 Introduction

Cryptography is where security engineering meets mathematics. It provides us with the tools that underlie most modern security protocols. It is probably the key enabling technology for protecting distributed systems, yet it is surprisingly hard to do right. As we’ve already seen in Chapter 2, “Protocols,” cryptography has often been used to protect the wrong things, or used to protect them in the wrong way. We’ll see plenty more examples when we start looking in detail at real applications.

Unfortunately, the computer security and cryptology communities have drifted apart over the last 20 years. Security people don’t always understand the available crypto tools, and crypto people don’t always understand the real-world problems. There are a number of reasons for this, such as different professional backgrounds (computer science versus mathematics) and different research funding (governments have tried to promote computer security research while suppressing cryptography). It reminds me of a story told by a medical friend. While she was young, she worked for a few years in a country where, for economic reasons, they’d shortened their medical degrees and concentrated on producing specialists as quickly as possible. One day, a patient who’d had both kidneys removed and was awaiting a transplant needed her dialysis shunt redone. The surgeon sent the patient back from the theater on the grounds that there was no urinalysis on file.

Equilibrium Signaling: Molecular Communication Robust to Geometry Uncertainties Bayram Cevdet Akdeniz, Malcolm Egan, Bao Tang

Equilibrium Signaling: Molecular Communication Robust to Geometry Uncertainties. Bayram Cevdet Akdeniz, Malcolm Egan, Bao Tang

To cite this version: Bayram Cevdet Akdeniz, Malcolm Egan, Bao Tang. Equilibrium Signaling: Molecular Communication Robust to Geometry Uncertainties. 2020. hal-02536318v2

HAL Id: hal-02536318 https://hal.archives-ouvertes.fr/hal-02536318v2 Preprint submitted on 5 Aug 2020

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Equilibrium Signaling: Molecular Communication Robust to Geometry Uncertainties. Bayram Cevdet Akdeniz, Malcolm Egan and Bao Quoc Tang

Abstract: A basic property of any diffusion-based molecular communication system is the geometry of the enclosing container. In particular, the geometry influences the system’s behavior near the boundary and in all existing modulation schemes governs receiver design. However, it is not always straightforward to characterize the geometry of the system. This is particularly the case when the molecular communication system operates in scenarios where the geometry may be complex or dynamic. In this paper, we propose a new scheme, called equilibrium signaling, which is robust to uncertainties in the geometry of the fluid boundary. In particular, receiver design only depends on the relative volumes of the transmitter or receiver, and the entire container.

Theoretical Computer Science Knowledge a Selection of Latest Research Visit Springer.Com for an Extensive Range of Books and Journals in the field

springer.com Theoretical Computer Science Knowledge. A Selection of Latest Research. Visit springer.com for an extensive range of books and journals in the field.

Theory of Computation: Classical and Contemporary Approaches. D. C. Kozen. Theory of Computation is a unique textbook that serves the dual purposes of covering core material in the foundations of computing, as well as providing an introduction to some more advanced contemporary topics. This innovative text focuses primarily, although by no means exclusively, on computational complexity theory. Topics and features include: organization into self-contained lectures of 3-7 pages; 41 primary lectures and a handful of supplementary lectures covering more specialized or advanced topics; 12 homework sets and several miscellaneous homework exercises of varying levels of difficulty, many with hints and complete solutions. 2006. 335 p. 75 illus. (Texts in Computer Science) Hardcover ISBN 1-84628-297-7 $79.95

Theoretical Introduction to Programming. B. Mills. Including well-digested information about fundamental techniques and concepts in software construction, this book is distinct in unifying pure theory with pragmatic details. Driven by generic problems and concepts, with brief and complete illustrations from languages including C, Prolog, Java, Scheme, Haskell and HTML. This book is intended to be both a how-to handbook and easy reference guide. Discussions of principle, worked examples and exercises are presented. Densely packed with explicit techniques on each page, this book takes you from a rudimentary understanding of programming into the world of deep technical software development. Combines theory with practice. 2006. XI, 358 p. 29 illus. Softcover ISBN 1-84628-021-4 $69.95

Complexity Theory: Exploring the Limits of Efficient Algorithms … computer science.

Innovation Insights 12

INNOVATION insights: THE LATEST INNOVATIONS, COLLABORATIONS AND TECHNOLOGY TRANSFER FROM THE UNIVERSITY OF OXFORD. ISSUE 12, JUNE 2019

MENTORING OXFORD ENTREPRENEURS: Oxford University and Vodafone, helping the bright sparks of Oxford.
Enhanced nanoscale communication
Zika viral vector vaccine
Protection against blast-induced traumatic brain injury
Electrodynamic Micro-Manipulator

CONTENTS: INVENTION

Sickle cell screening during pregnancy: A high-throughput non-invasive prenatal testing technique for the diagnosis of sickle-cell disease in the foetus
Biosensors for bacterial detection: Methods for performing remote contamination detection using fluorescence
New treatment in chronic lymphocytic leukaemia: A specific use of the drug forodesine to the
Body motion monitoring in MRI and CT imaging: A graphene-based piezoelectric sensor that is compatible with both MRI and CT imaging
Early gestational diabetes diagnostic: A test using biomarkers from the placenta to give an early indication of mothers who are at risk of gestational diabetes
Accurate camera relocalisation: An algorithm for estimating the pose of a 6-degree camera using a single RGB-D frame
Electrodynamic micro-manipulator: A technique to manipulate, align and displace non-spherical objects and 2D nanomaterials while preserving their structural and chemical integrity
Logical control of CRISPR gene editing system: A control system that allows spatio-temporal activation of CRISPR, based on an engineered RNA guide strand
A novel

Publications Contents Digest May/2018

IEEE Communications Society Publications Contents Digest May/2018. Direct links to magazine and journal abstracts and full paper pdfs via IEEE Xplore

ComSoc Vice President – Publications – Nelson Fonseca
Director – Journals – Khaled B. Letaief
Director – Magazines – Raouf Boutaba

Magazine Editors
EIC, IEEE Communications Magazine – Tarek El-Bawab
AEIC, IEEE Communications Magazine – Antonio Sanchez-Esquavillas | Ravi Subrahmanyan
EIC, IEEE Network Magazine – Mohsen Guizani
AEIC, IEEE Network Magazine – David Soldani
EIC, IEEE Wireless Communications Magazine – Hamid Gharavi
AEIC, IEEE Wireless Communications Magazine – Yi Qian
EIC, IEEE Communications Standards Magazine – Glenn Parsons
AEIC, IEEE Communications Standards Magazine – Zander Lei
EIC, China Communications – Chen Junliang

Journal Editors
EIC, IEEE Transactions on Communications – Naofal Al-Dhahir
EIC, IEEE Journal on Selected Areas In Communications (J-SAC) – Raouf Boutaba
EIC, IEEE Communications Letters – O. A. Dobre
Editor, IEEE Communications Surveys & Tutorials – Ying-Dar Lin
EIC, IEEE Transactions on Network & Service Management (TNSM) – Filip De Turck
EIC, IEEE Wireless Communications Letters – Wei Zhang
EIC, IEEE Transactions on Wireless Communications – Martin Haenggi
EIC, IEEE Transactions on Mobile Communications – Marwan Krunz
EIC, IEEE/ACM Transactions on Networking – Eytan Modiano
EIC, IEEE/OSA Journal of Optical Communications & Networking (JOCN) – Jane M. Simmons
EIC, IEEE/OSA Journal of Lightwave Technology – Peter J. Winzer
Co-EICs, IEEE/KICS Journal of Communications

The Computer Scientist As Toolsmith—Studies in Interactive Computer Graphics

Frederick P. Brooks, Jr.

Fred Brooks is the first recipient of the ACM Allen Newell Award, an honor to be presented annually to an individual whose career contributions have bridged computer science and other disciplines. Brooks was honored for a breadth of career contributions within computer science and engineering and his interdisciplinary contributions to visualization methods for biochemistry. Here, we present his acceptance lecture delivered at SIGGRAPH 94.

The Computer Scientist as Toolsmith II

It is a special honor to receive an award named for Allen Newell. Allen was one of the fathers of computer science. He was especially important as a visionary and a leader in developing artificial intelligence (AI) as a subdiscipline, and in enunciating a vision for it. What a man is is more important than what he does professionally, however, and it is Allen’s humble, honorable, and self-giving character that makes it a double honor to be a Newell awardee. I am profoundly grateful to the awards committee. Rather than talking about one particular research … computer science. Another view of computer science sees it as a discipline focused on problem-solving systems, and in this view computer graphics is very near the center of the discipline.

A Discipline Misnamed

When our discipline was newborn, there was the usual perplexity as to its proper name. We at Chapel Hill, following, I believe, Allen Newell and Herb Simon, settled on “computer science” as our department’s name. Now, with the benefit of three decades’ hindsight, I believe that to have been a mistake. If we understand why, we will better understand our craft.

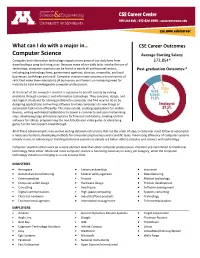

Computer Science Average Starting Salary

What can I do with a major in… Computer Science

CSE Career Outcomes. Average Starting Salary: $73,854*. Post-graduation Outcomes:*

Computer and information technology impacts many areas of our daily lives, from downloading a song to driving a car. Because many of our daily tasks involve the use of technology, computer scientists can be found in nearly all professional sectors, including big technology firms, government agencies, startups, nonprofits, and local businesses, both large and small. Computer science majors possess a broad variety of skills that make them valuable to all businesses, and there is an increasing need for industry to have knowledgeable computer professionals. At the heart of the computer scientist is a passion to benefit society by solving problems through computer and information technology. They conceive, design, and test logical structures for solving problems by computer and find ways to do so by designing applications and writing software to make computers do new things or accomplish tasks more efficiently. This may include creating applications for mobile devices, writing web-based applications to power e-commerce and social networking sites, developing large enterprise systems for financial institutions, creating control software for robots, programming the next blockbuster video game, or identifying genes for the next biotech breakthrough. All of these advancements may involve writing detailed instructions that list the order of steps a computer must follow to accomplish a necessary function, developing methods for computerizing business and scientific tasks, maximizing efficiency of computer systems already in use, or enhancing or building immersive systems so people are better able to socialize and interact with technology. Computer scientists often work on a more abstract level than other computer professionals.

Mathematics and Computer Science

Mathematics and Computer Science

The Mathematics and Computer Science Department at Benedictine College is committed to maintaining a curriculum that provides students with the necessary tools to enter a career in their field with a broad, solid knowledge of mathematics or computer science. Our students are provided with the knowledge, analytical, and problem solving skills necessary to function as mathematicians or computer scientists in our world today.

The mathematics curriculum prepares students for graduate study, for responsible positions in business, industry, and government, and for teaching positions in secondary and elementary schools. Basic skills and techniques provide for entering a career as an actuary, banker, bio-mathematician, computer programmer, computer scientist, economist, engineer, industrial researcher, lawyer, management consultant, market research analyst, mathematician, mathematics teacher, operations researcher, quality control specialist, statistician, or systems analyst.

Computer science is an area of study that is important in the technological age in which we live.

…
Ma 315, Probability and Statistics
Ma 356, Modern Algebra I
Ma 360, Modern Algebra II or Ma 480, Introduction to Real Analysis
Ma 488, Senior Comprehensive
Ma 493, Directed Research
six hours of upper-division math electives
and Cs 114, Introduction to Computer Science I or Cs 230, Programming for Scientists and Engineers

Requirements for a major in Computer Science:
Cs 114, Introduction to Computer Science I
Cs 115, Introduction to Computer Science II
Ma 255, Discrete Mathematical Structures I
Cs 256, Discrete Mathematical Structures II
Cs 300, Information & Knowledge Management
Cs 351, Algorithm Design and Data Analysis
Cs 421, Computer Architecture
Cs 440, Operating Systems and Networking
Cs 488, Senior Comprehensive
Cs 492, Software Development and Professional Practice
Cs 493, Senior Capstone

What Is Computer Science All About?

WHAT IS COMPUTER SCIENCE ALL ABOUT?

H. Conrad Cunningham and Pallavi Tadepalli, Department of Computer and Information Science, University of Mississippi

COMPUTERS EVERYWHERE

As scientific and engineering disciplines go, computer science is still quite young. Although the mathematical roots of computer science go back more than a thousand years, it is only with the invention of the programmable electronic digital computer during the World War II era of the 1930s and 1940s that the modern discipline of computer science began to take shape. As it has developed, computer science includes theoretical studies, experimental methods, and engineering design all in one discipline.

One of the first computers was the ENIAC (Electronic Numerical Integrator and Computer), developed in the mid-1940s at the University of Pennsylvania. When construction was completed in 1946, the ENIAC cost about $500,000. In today’s terms, that is about $5,000,000. It weighed 30 tons, occupied as much space as a small house, and consumed 160 kilowatts of electric power. Figure 1 is a classic U.S. Army photograph of the ENIAC. The ENIAC and most other computers of that era were designed for military purposes, such as calculating firing tables for artillery. As a result, many observers viewed the market for such devices to be quite small. The observers were wrong!

Figure 1. ENIAC in Classic U.S. Army Photograph

Electronics technology has improved greatly in 60 years. Today, a computer with the capacity of the ENIAC would be smaller than a coin from our pockets, would consume little power, and cost just a few dollars on the mass market.